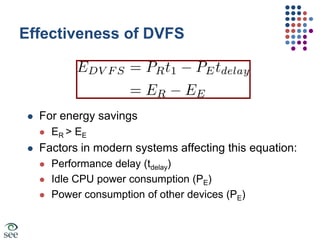

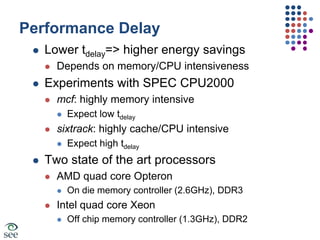

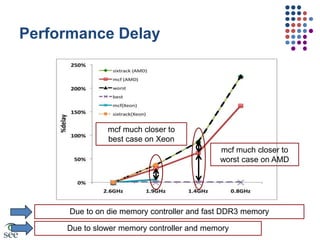

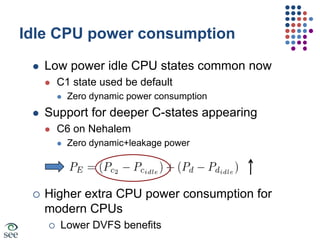

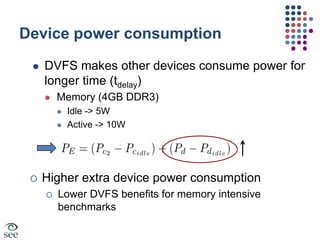

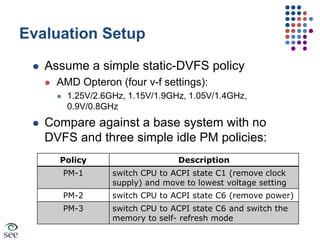

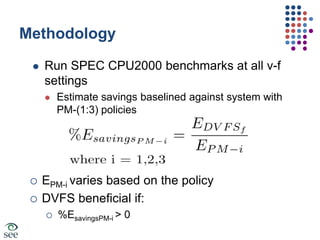

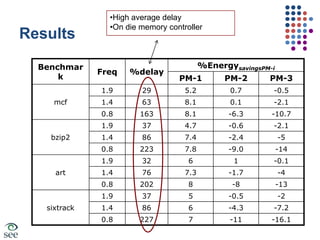

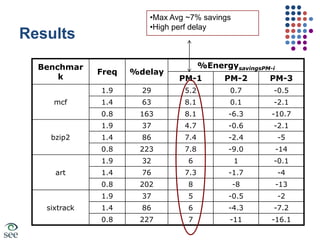

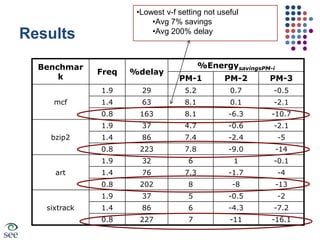

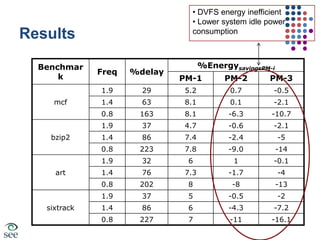

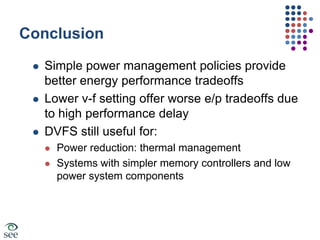

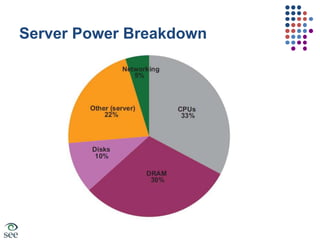

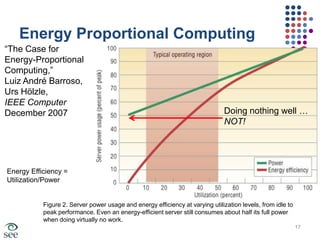

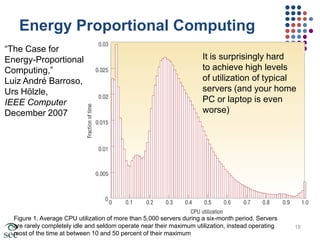

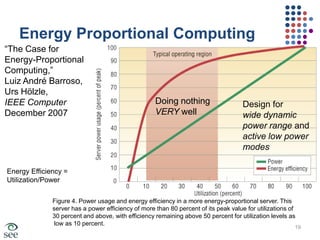

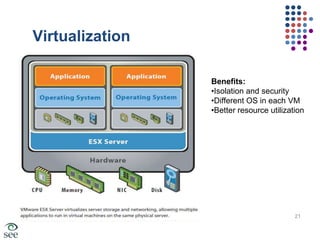

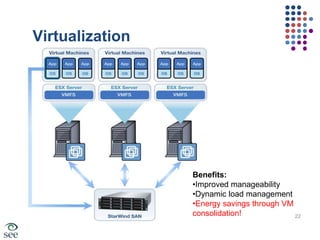

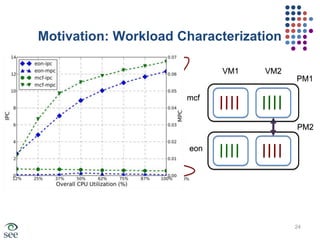

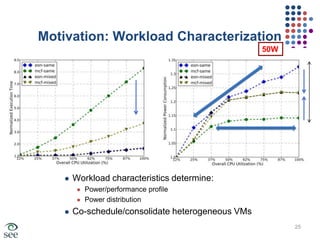

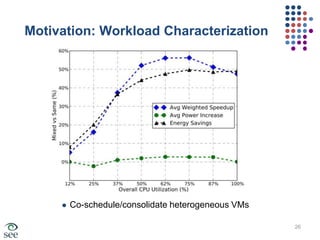

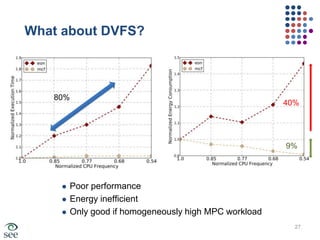

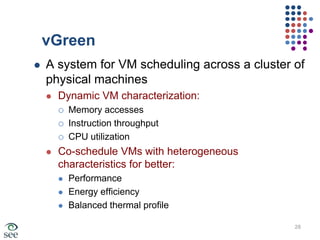

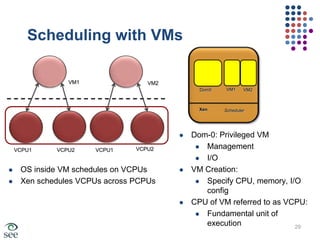

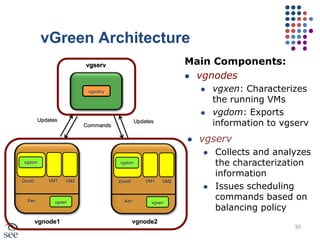

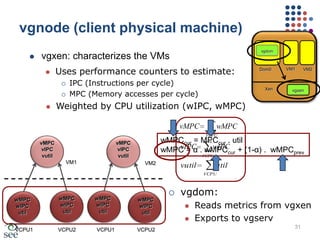

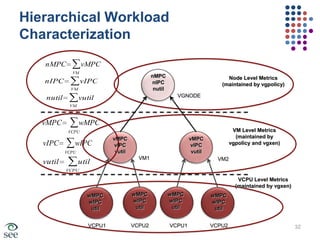

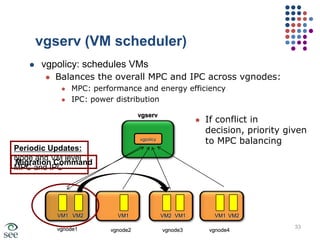

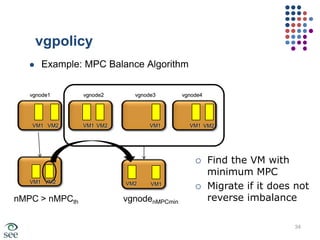

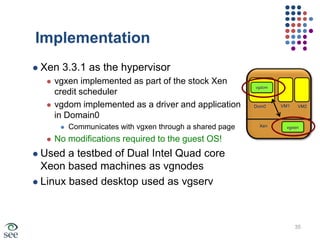

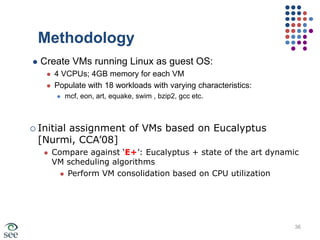

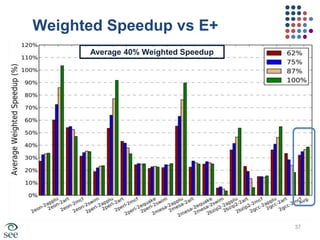

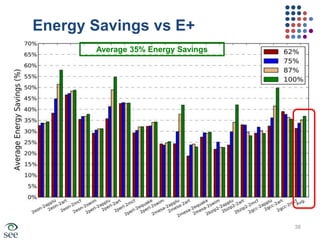

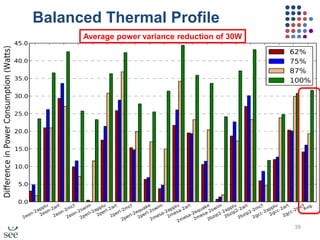

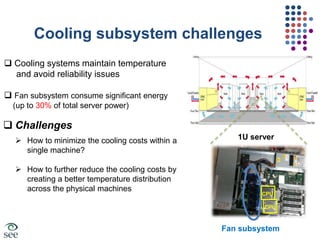

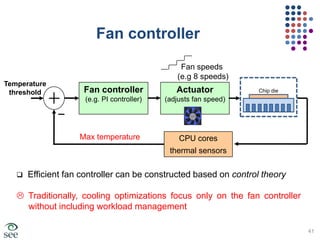

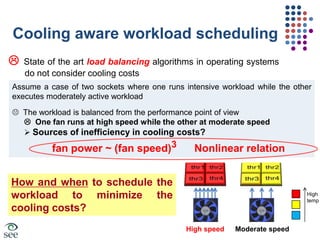

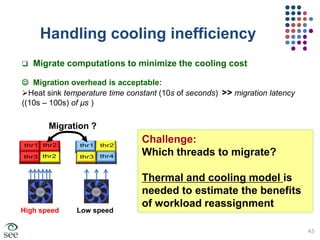

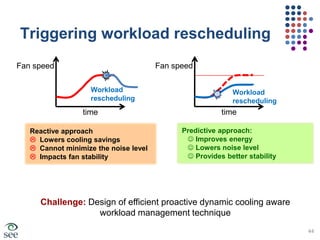

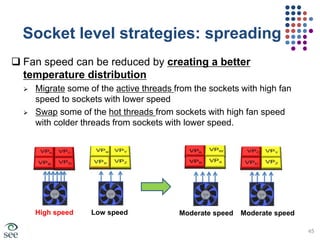

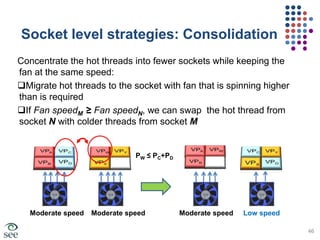

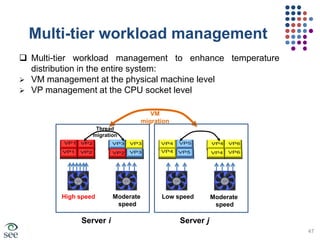

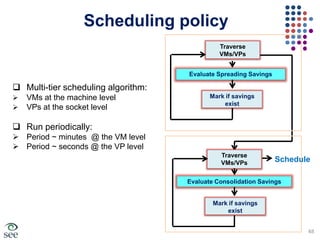

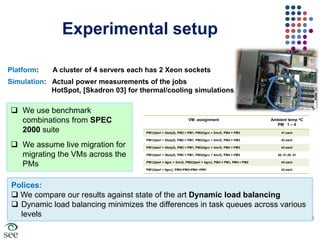

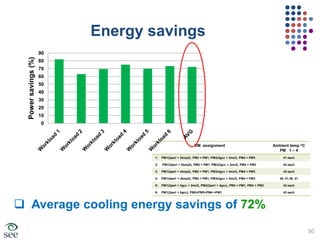

The document discusses energy efficiency in large-scale systems, emphasizing the significance of power consumption and the operational costs associated with data centers. It evaluates how dynamic voltage and frequency scaling (DVFS) affects energy savings and highlights the challenges of achieving energy-proportional computing. The text also explores virtualization benefits for resource utilization and strategies for optimizing cooling systems in server architectures.

![Cooling. By 2010, US electricity bill for powering and cooling data centers ~$7B [1]. Electricity input to data centers in the US exceeds electricity consumption of Italy! [1]: Meisner et al., ASPLOS 2008](https://image.slidesharecdn.com/nsfgreenlightmsi-ciecworkshopjune2010-100915102518-phpapp01/85/Energy-Efficiency-in-Large-Scale-Systems-3-320.jpg)