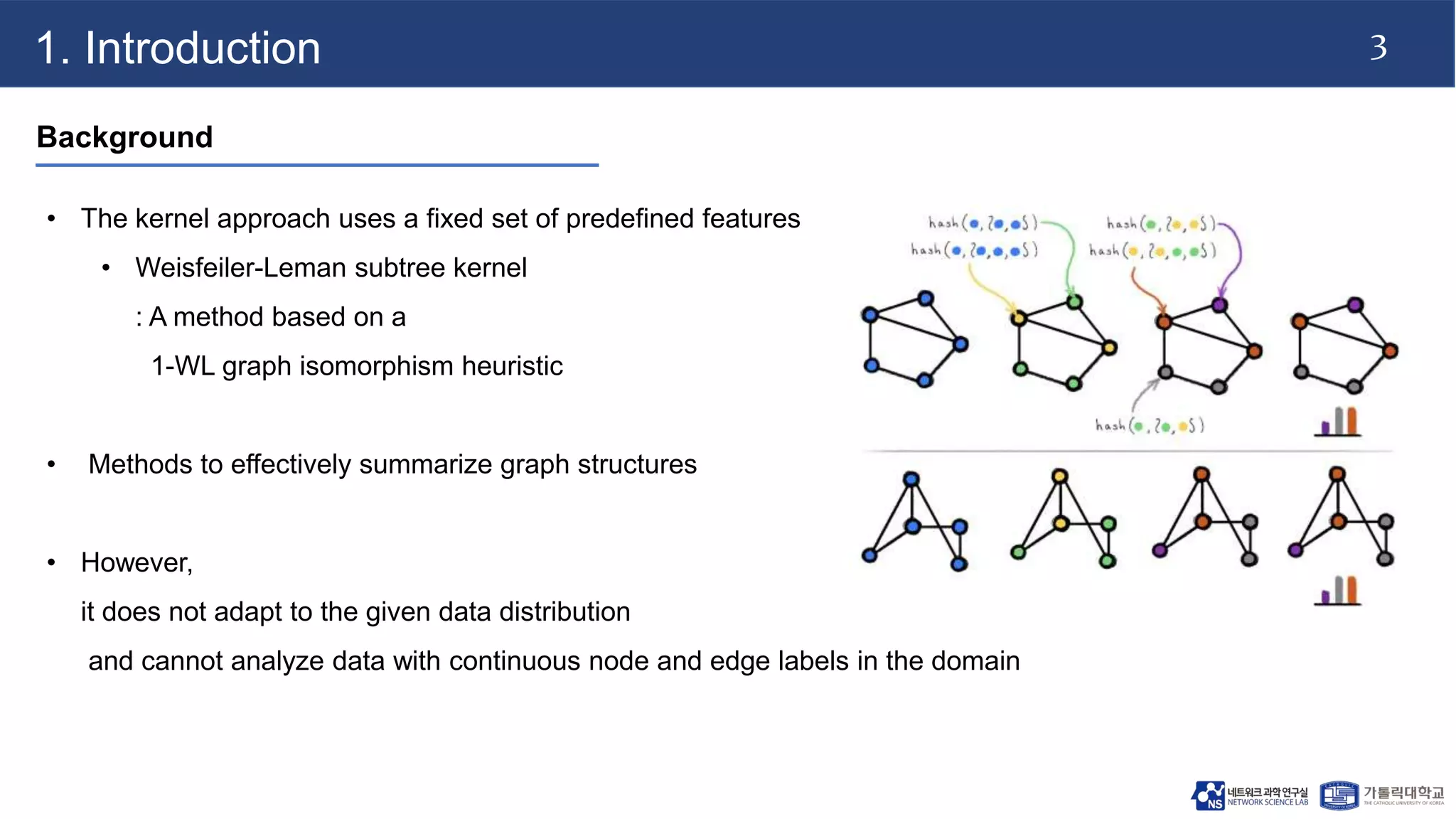

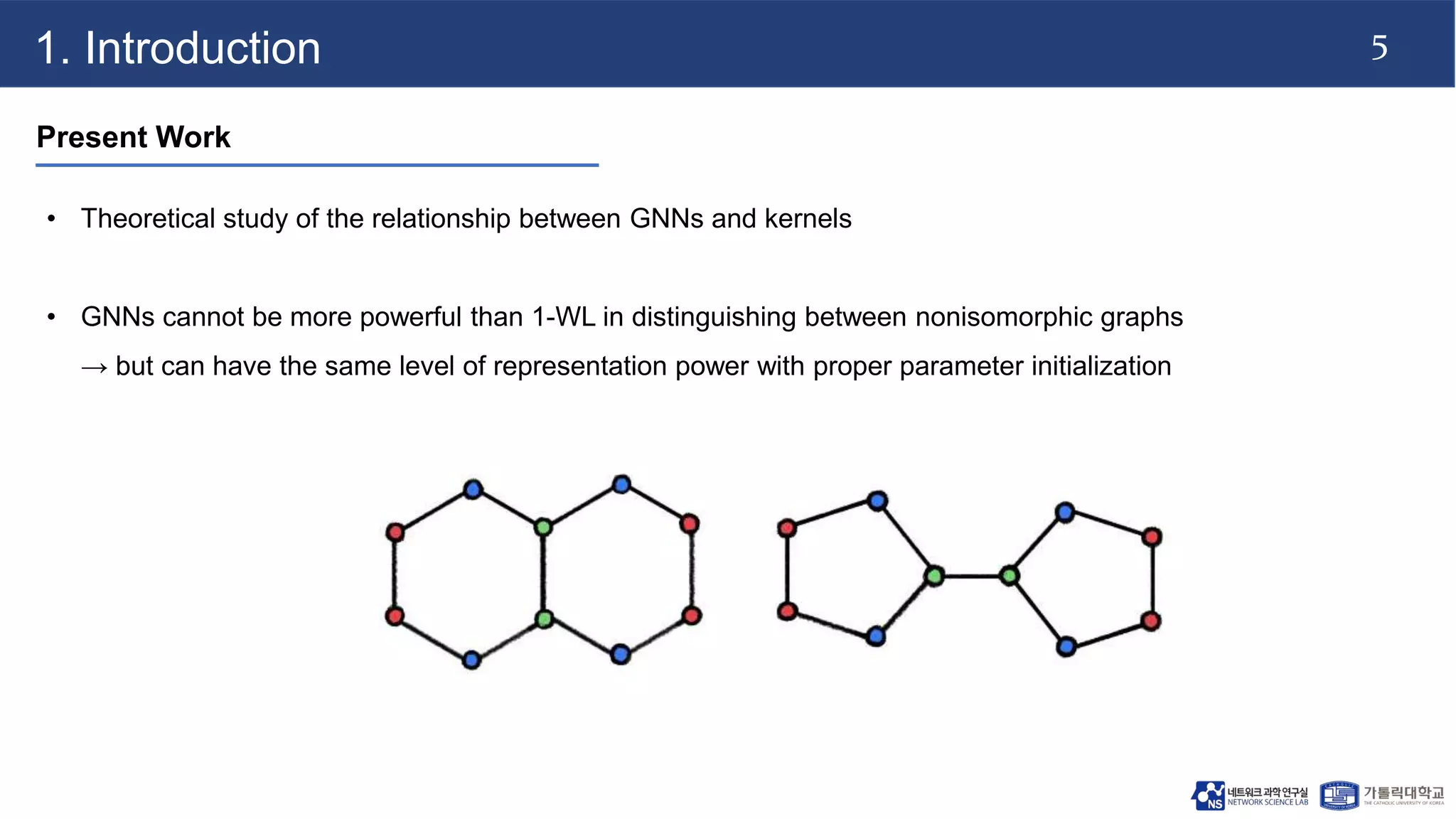

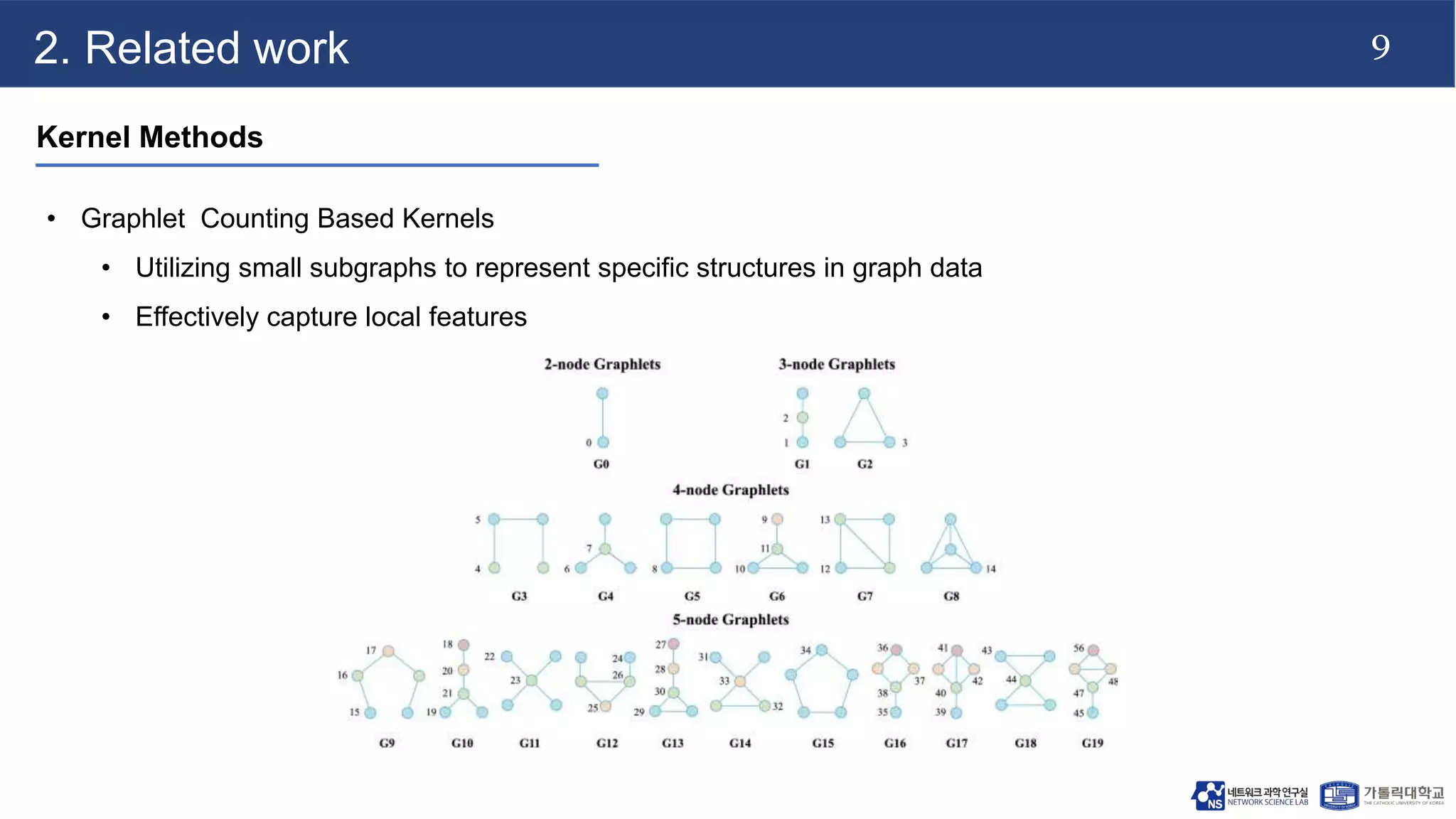

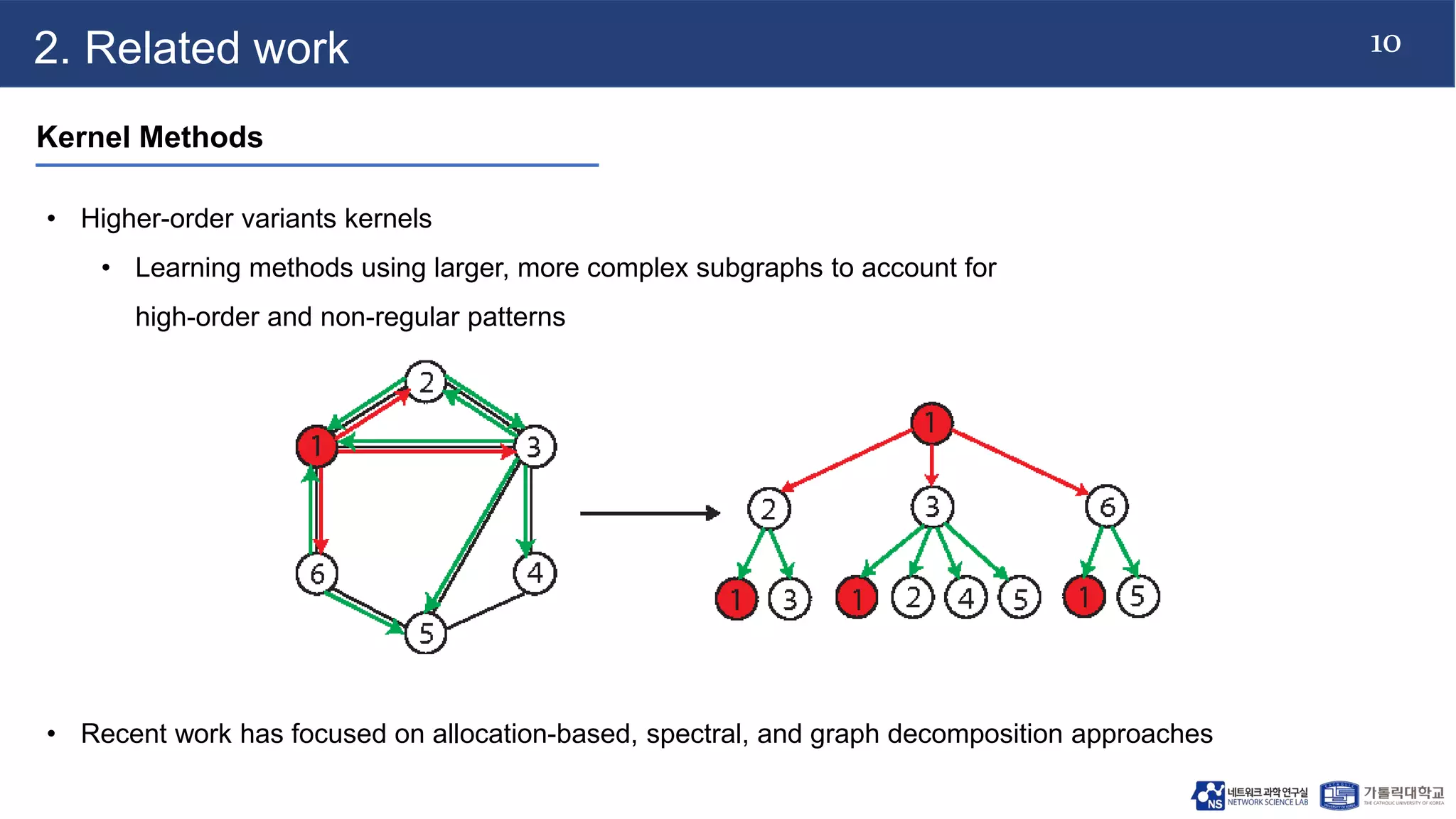

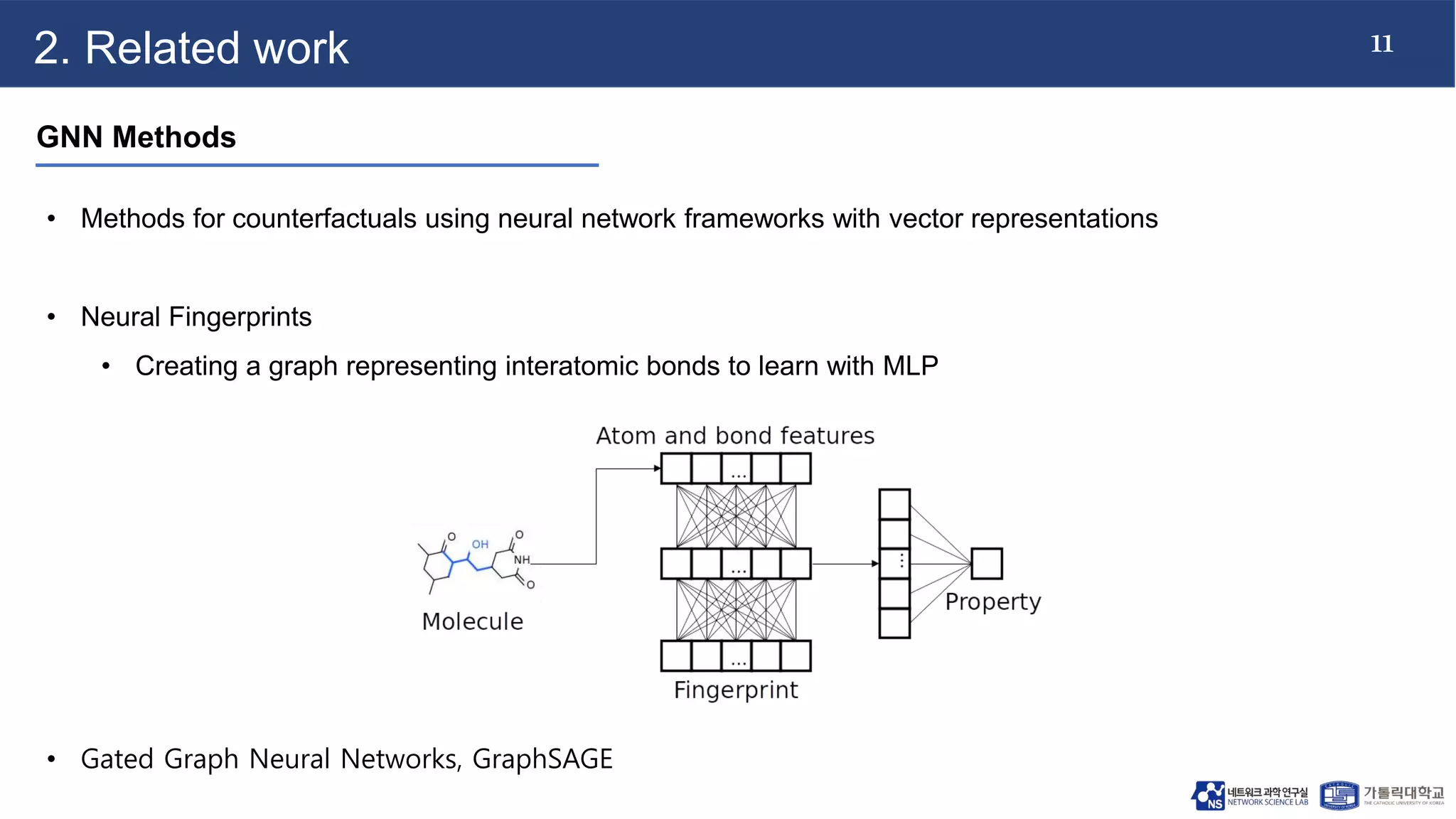

The document discusses machine learning techniques for analyzing graph-structured data, contrasting the limitations of kernel methods with the advantages of graph neural networks (GNNs). It proposes a model called k-GNN, which draws on theoretical insights to pass messages directly between subgraph structures rather than only between individual nodes, while keeping representational power comparable to that of the Weisfeiler-Lehman algorithm. The work emphasizes that higher-order characterization of graphs matters for both classification and regression tasks.
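To make the comparison with the Weisfeiler-Lehman algorithm concrete, the following is a minimal sketch of the standard one-dimensional color refinement heuristic, not the k-GNN model itself. The function name `wl_histogram`, the adjacency-dictionary input format, and the fixed number of rounds are assumptions chosen for illustration. It shows a well-known limitation that motivates higher-order approaches: node-level refinement cannot tell two disjoint triangles apart from a single six-node cycle.

```python
from collections import Counter


def wl_histogram(adjacency, rounds=3):
    """Run 1-dimensional Weisfeiler-Lehman color refinement.

    `adjacency` maps each node to a list of its neighbors (hypothetical
    input format). Returns a histogram of the final node colors.
    """
    # Every node starts with the same color (no node labels assumed).
    colors = {node: () for node in adjacency}
    for _ in range(rounds):
        # New color = old color plus the sorted multiset of neighbor colors.
        # Nested tuples serve as canonical colors, so histograms from
        # different graphs can be compared directly.
        colors = {
            node: (colors[node],
                   tuple(sorted(colors[nbr] for nbr in adjacency[node])))
            for node in adjacency
        }
    return Counter(colors.values())


# Two disjoint triangles and a six-node cycle: both are 2-regular.
two_triangles = {0: [1, 2], 1: [0, 2], 2: [0, 1],
                 3: [4, 5], 4: [3, 5], 5: [3, 4]}
hexagon = {i: [(i - 1) % 6, (i + 1) % 6] for i in range(6)}
# A six-node path has endpoints of degree 1, so refinement separates it.
path = {0: [1], 1: [0, 2], 2: [1, 3], 3: [2, 4], 4: [3, 5], 5: [4]}

print(wl_histogram(two_triangles) == wl_histogram(hexagon))
print(wl_histogram(path) == wl_histogram(hexagon))
```

The two triangles and the hexagon receive identical color histograms even though they are not isomorphic, whereas the path is separated from both. Operating on subgraph structures instead of single nodes is one way to go beyond this kind of node-level refinement.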