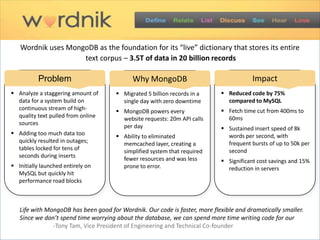

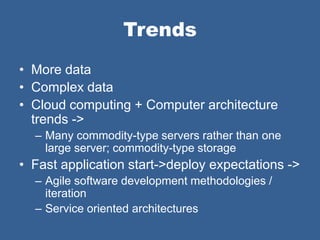

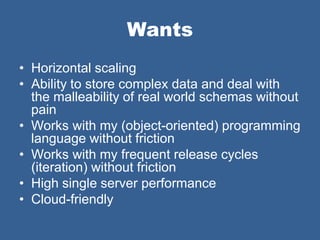

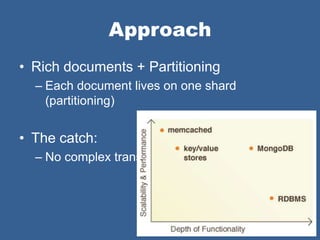

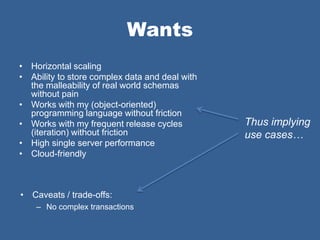

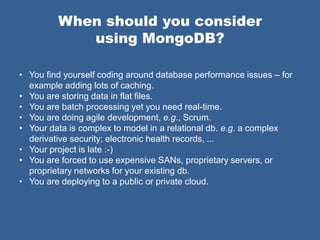

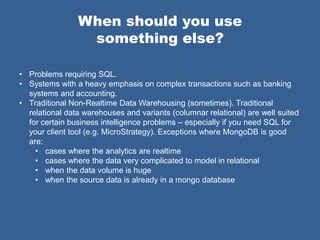

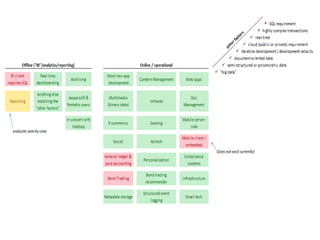

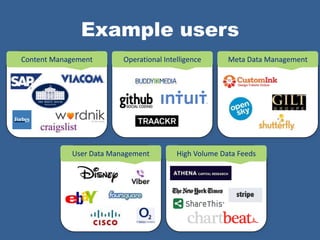

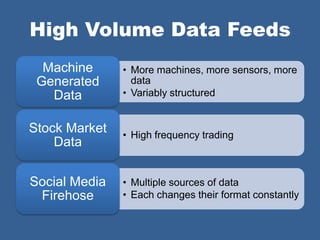

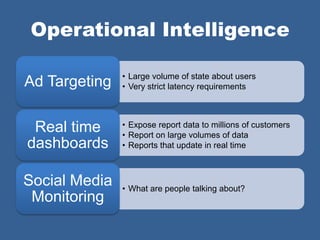

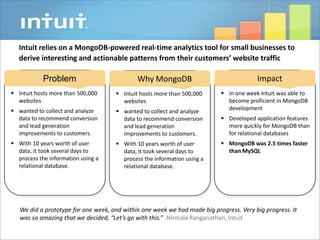

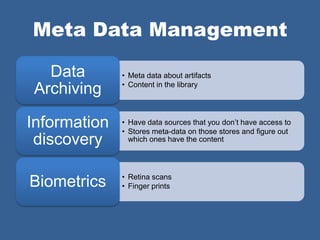

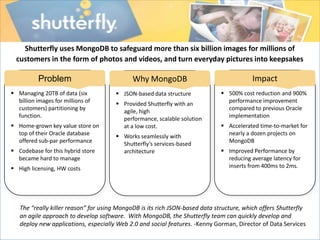

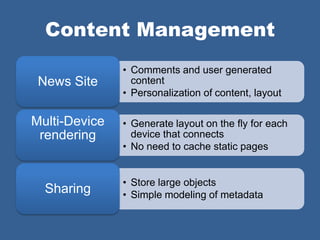

MongoDB and NoSQL use cases respond to three trends: more data and more complex data, cloud computing, and faster application development. MongoDB offers horizontal scaling, easy storage of complex data, a data model that maps naturally to object-oriented languages and supports frequent releases, high single-server performance, and cloud friendliness. At the time of this 2012 talk, however, it offered no complex transactions. Suitable use cases include high data volumes, complex data models, real-time analytics, agile development, and cloud deployment. The talk gives example users for content management, operational intelligence, metadata management, high-volume data feeds, marketing personalization, and dictionary services.
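Horizontal scaling means spreading documents across several servers (shards) according to a shard key. The sketch below illustrates the idea with plain hash partitioning in Python. It is an assumption-laden illustration, not MongoDB's implementation: MongoDB assigns ranges of shard-key values (chunks) to shards and rebalances them. The helpers `shard_for` and `distribute` and the sample `cookie_id` values are hypothetical.

```python
import hashlib
from collections import defaultdict


def shard_for(key: str, num_shards: int) -> int:
    """Map a shard-key value to a shard index with a stable hash.

    SHA-256 is used so the result is identical across processes
    (Python's built-in hash() is randomized per process for strings).
    Hypothetical helper for illustration only.
    """
    digest = hashlib.sha256(key.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % num_shards


def distribute(docs, shard_key: str, num_shards: int):
    """Group documents by the shard their shard-key value hashes to."""
    shards = defaultdict(list)
    for doc in docs:
        shards[shard_for(str(doc[shard_key]), num_shards)].append(doc)
    return shards


if __name__ == "__main__":
    # Hypothetical profiles keyed by cookie_id, spread over 3 shards.
    profiles = [{"cookie_id": str(1000 + i)} for i in range(12)]
    for shard, members in sorted(distribute(profiles, "cookie_id", 3).items()):
        print(shard, [p["cookie_id"] for p in members])
```

With plain modulo hashing, changing `num_shards` remaps most keys; chunk-based schemes avoid this by moving only selected key ranges between shards.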

![Marketing Personalization](https://image.slidesharecdn.com/nosqlnow2012mongodbusecases-120823161603-phpapp02/85/Nosql-Now-2012-MongoDB-Use-Cases-17-320.jpg)

Marketing Personalization: the slide follows one user through four numbered steps (1 See Ad, 2 See Ad, 3 Click, 4 Convert) and makes four points:

- Rich profiles collecting multiple complex actions
- Scale out to support high throughput of tracked activities
- Dynamic schemas make it easy to track vendor-specific attributes
- Indexing and querying to support matching and frequency capping

The sample profile document is keyed by `cookie_id: "1234512413243"` and stores the user's actions for the advertiser `apple` under `advertiser.apple.actions`:

- `{ impression: "ad1", time: 123 }`
- `{ impression: "ad2", time: 232 }`
- `{ click: "ad2", time: 235 }`
- `{ add_to_cart: "laptop", sku: "asdf23f", time: 254 }`
- `{ purchase: "laptop", time: 354 }`
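To make the frequency-capping point concrete, here is a minimal Python sketch over the slide's sample profile. Plain dicts stand in for BSON documents; in a real deployment the profile would live in a MongoDB collection and the counting would be done with a query or aggregation. The helpers `impression_counts`, `can_show`, and `record`, and the `cap` and `since` parameters, are hypothetical.

```python
from collections import Counter

# The sample profile from the slide, written as a Python dict.
profile = {
    "cookie_id": "1234512413243",
    "advertiser": {
        "apple": {
            "actions": [
                {"impression": "ad1", "time": 123},
                {"impression": "ad2", "time": 232},
                {"click": "ad2", "time": 235},
                {"add_to_cart": "laptop", "sku": "asdf23f", "time": 254},
                {"purchase": "laptop", "time": 354},
            ]
        }
    },
}


def impression_counts(profile, advertiser, since=0):
    """Count impressions per ad for one advertiser at or after `since`."""
    actions = profile.get("advertiser", {}).get(advertiser, {}).get("actions", [])
    return Counter(
        a["impression"] for a in actions if "impression" in a and a["time"] >= since
    )


def can_show(profile, advertiser, ad, cap, since=0):
    """Frequency capping (hypothetical rule): allow the ad only if it was
    shown fewer than `cap` times since `since`."""
    return impression_counts(profile, advertiser, since)[ad] < cap


def record(profile, advertiser, action):
    """Append an action; each action dict may carry its own fields
    (e.g. `sku`), mirroring a dynamic schema."""
    bucket = profile.setdefault("advertiser", {}).setdefault(advertiser, {})
    bucket.setdefault("actions", []).append(action)
```

For example, `can_show(profile, "apple", "ad2", cap=1)` returns `False` because `ad2` has already been shown once, while an ad with no recorded impressions is allowed, since a `Counter` returns 0 for missing keys.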

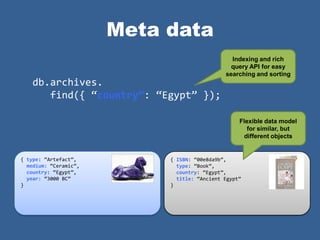

![Content Management](https://image.slidesharecdn.com/nosqlnow2012mongodbusecases-120823161603-phpapp02/85/Nosql-Now-2012-MongoDB-Use-Cases-22-320.jpg)

Content Management: the slide highlights:

- Flexible data model for similar, but different objects
- Geospatial indexing for location-based searches
- GridFS for large object storage
- Horizontal scalability for large data sets

Its three sample documents each have different fields:

- `{ camera: "Nikon d4", location: [ -122.418333, 37.775 ] }`
- `{ camera: "Canon 5d mkII", people: [ "Jim", "Carol" ], taken_on: ISODate("2012-03-07T18:32:35.002Z") }`
- `{ origin: "facebook.com/photos/xwdf23fsdf", license: "Creative Commons CC0", size: { dimensions: [ 124, 52 ], units: "pixels" } }`
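The content-management slide pairs a flexible data model with location-based search. The plain-Python sketch below shows both ideas: the slide's three differently shaped documents sit in one list, and a proximity search simply skips documents without a `location`. In MongoDB this would use a geospatial index (`2d` or `2dsphere`) queried with operators such as `$near`; the linear scan and haversine distance here only illustrate the semantics. `haversine_km`, `near`, the query point, and the radius are hypothetical.

```python
import math
from datetime import datetime, timezone

# The three sample documents from the slide: no shared schema.
photos = [
    {"camera": "Nikon d4", "location": [-122.418333, 37.775]},
    {"camera": "Canon 5d mkII", "people": ["Jim", "Carol"],
     # ISODate("2012-03-07T18:32:35.002Z") as a timezone-aware datetime
     "taken_on": datetime(2012, 3, 7, 18, 32, 35, 2000, tzinfo=timezone.utc)},
    {"origin": "facebook.com/photos/xwdf23fsdf",
     "license": "Creative Commons CC0",
     "size": {"dimensions": [124, 52], "units": "pixels"}},
]

EARTH_RADIUS_KM = 6371.0  # mean Earth radius


def haversine_km(a, b):
    """Great-circle distance in km between two [longitude, latitude] pairs."""
    lon1, lat1, lon2, lat2 = map(math.radians, (*a, *b))
    dlat, dlon = lat2 - lat1, lon2 - lon1
    h = (math.sin(dlat / 2) ** 2
         + math.cos(lat1) * math.cos(lat2) * math.sin(dlon / 2) ** 2)
    return 2 * EARTH_RADIUS_KM * math.asin(math.sqrt(h))


def near(docs, point, max_km):
    """Return docs with a location within max_km of point, nearest first.

    Documents lacking a `location` field are skipped rather than
    rejected, as a flexible schema allows. Hypothetical helper.
    """
    hits = [(haversine_km(d["location"], point), d) for d in docs if "location" in d]
    return [d for dist, d in sorted(hits, key=lambda t: t[0]) if dist <= max_km]
```

For example, `near(photos, [-122.4194, 37.7749], max_km=5)` returns only the Nikon document, since it is the only one with a `location`.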