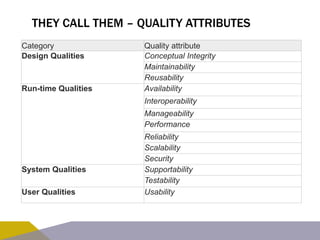

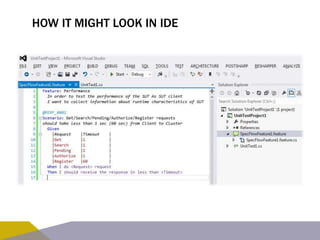

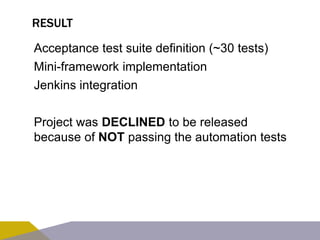

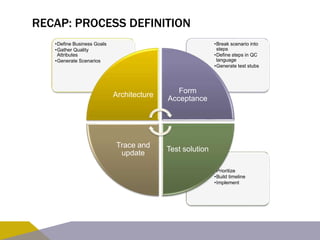

This document summarizes an expert's experience and qualifications in software architecture and automation testing, including 8 years of IT experience and a PhD in IT automation testing. It then discusses what software architecture is, how it is shaped by the business, users, and systems, and which quality attributes and acceptance criteria can be tested. Finally, it gives an example of defining acceptance criteria for a software error scenario using a specific methodology and tools.