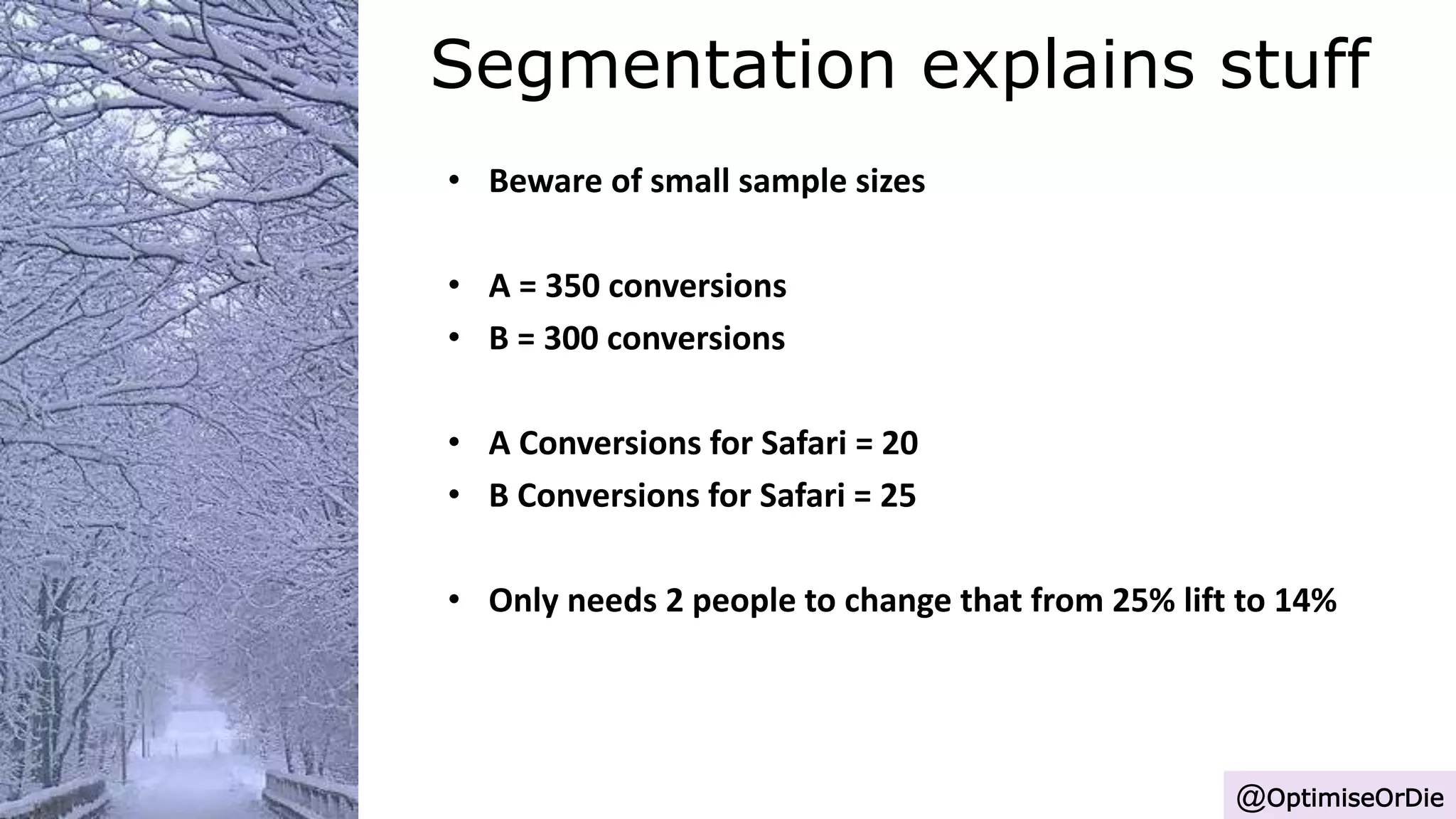

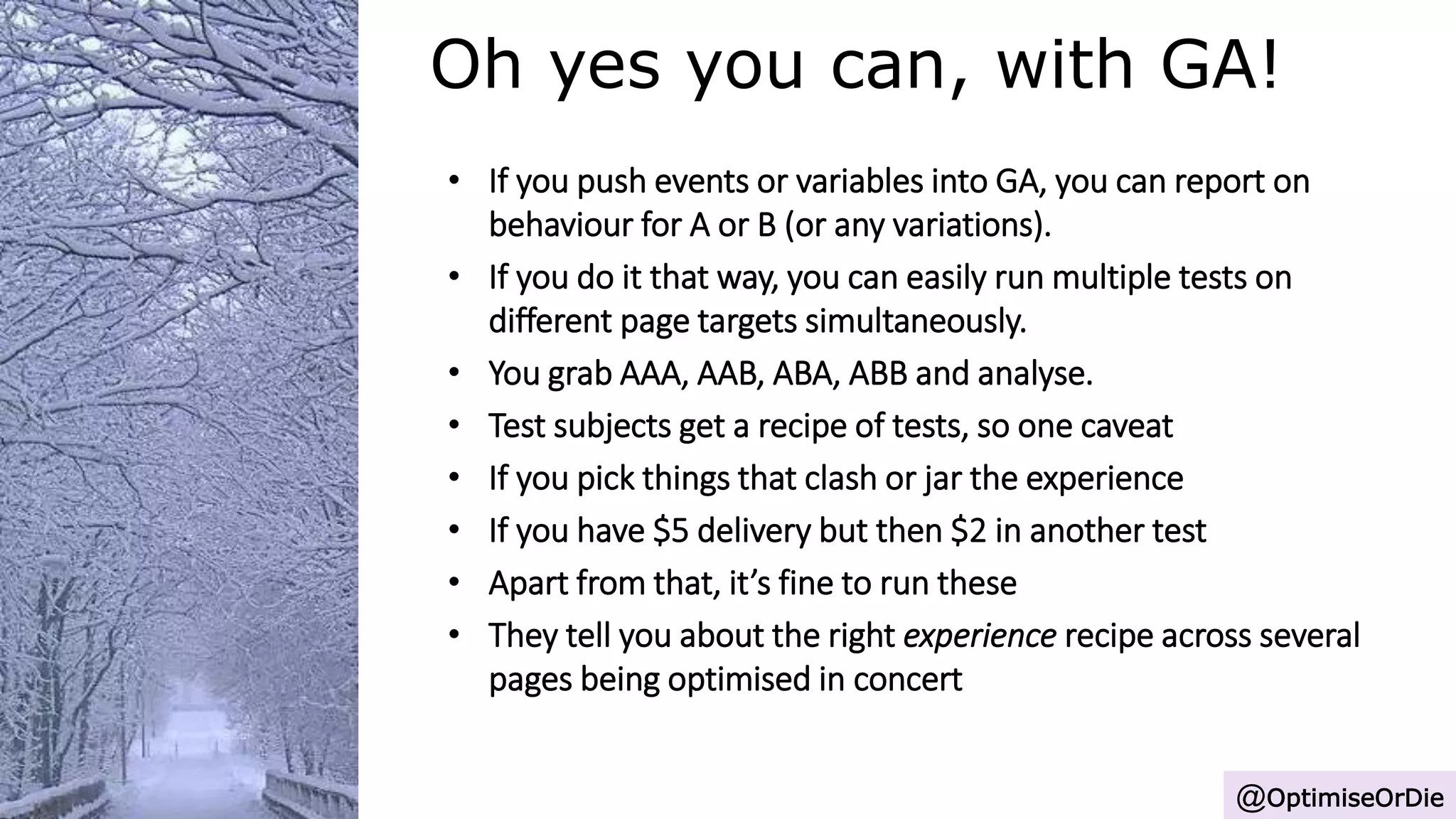

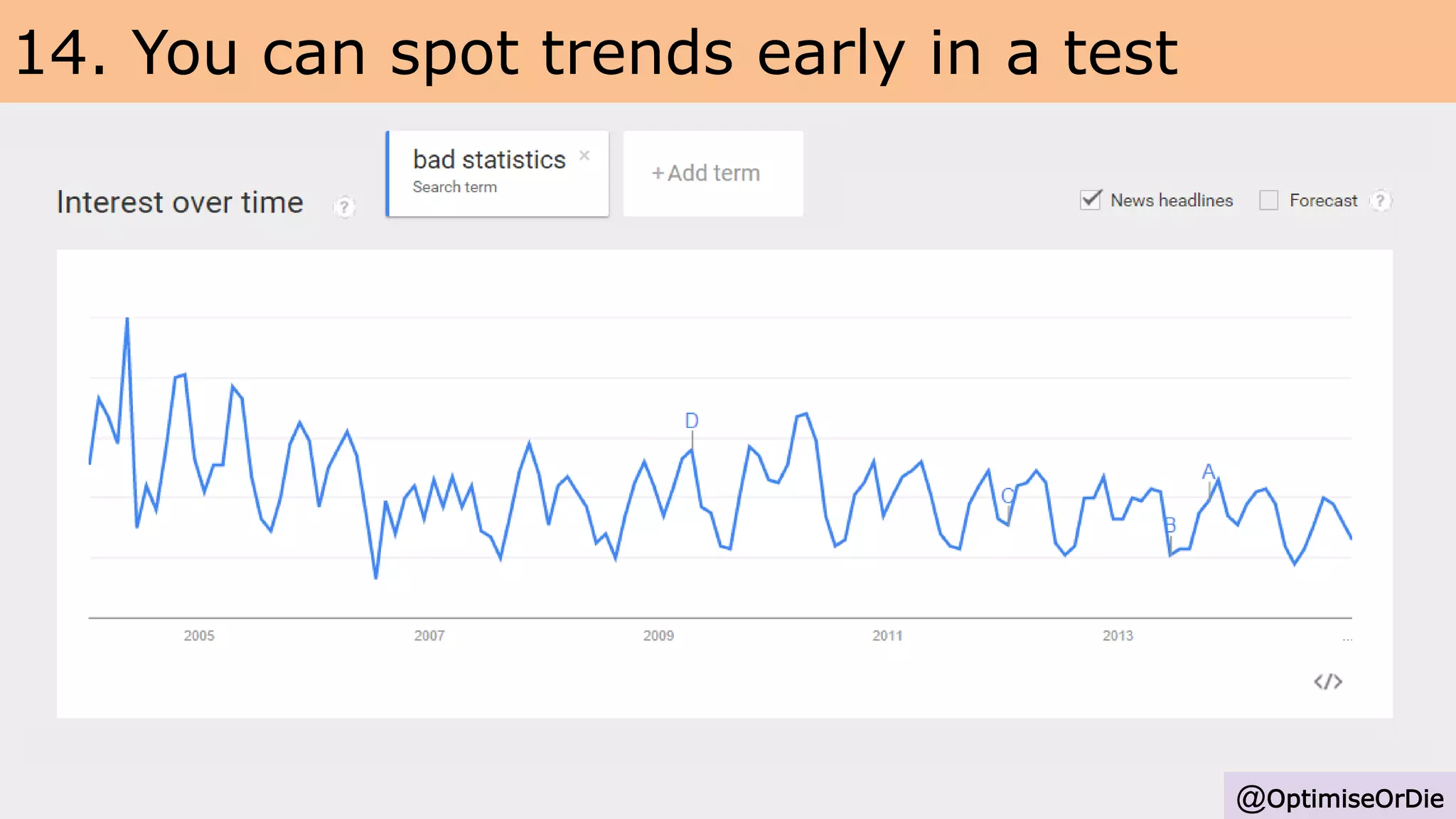

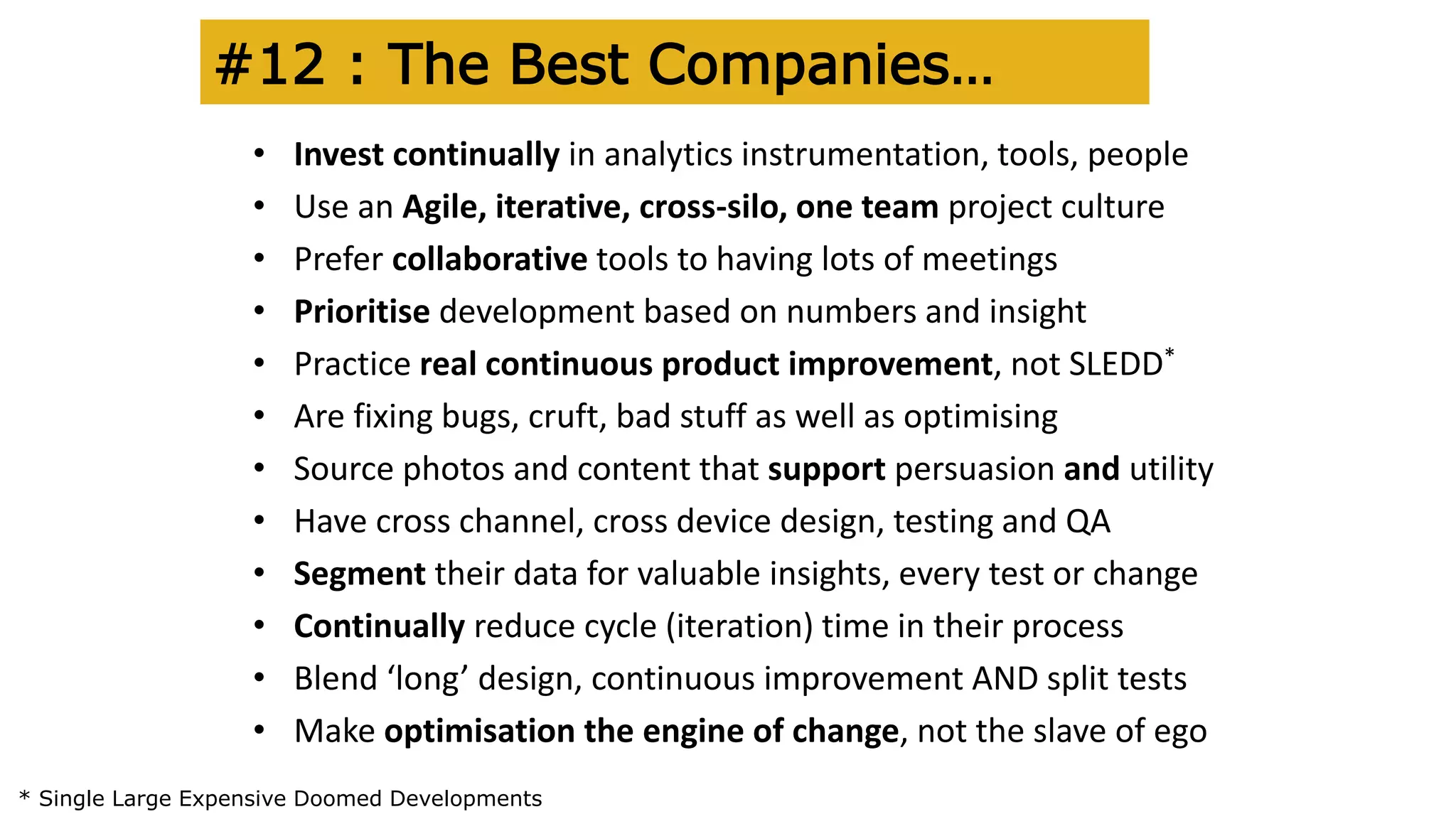

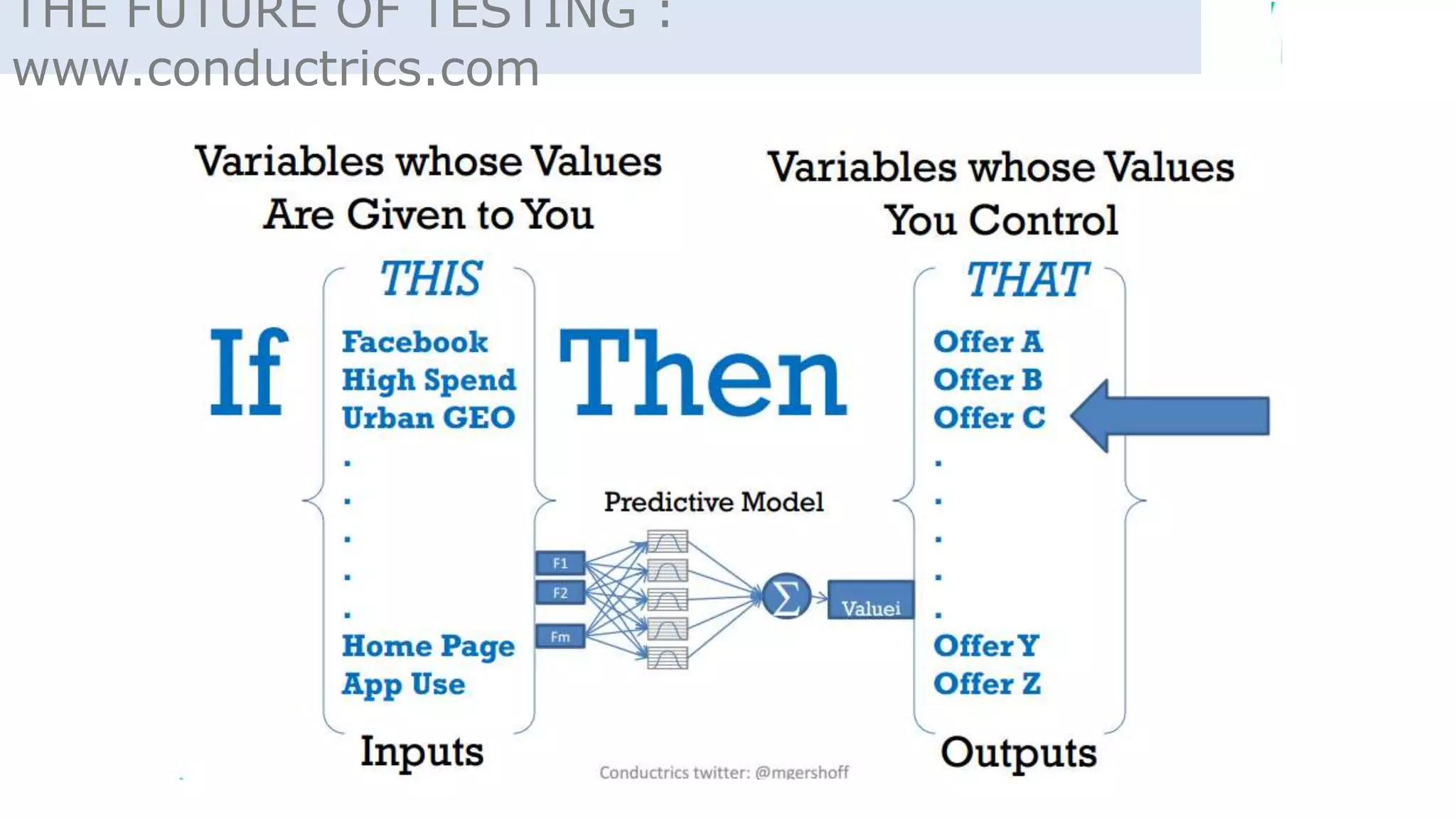

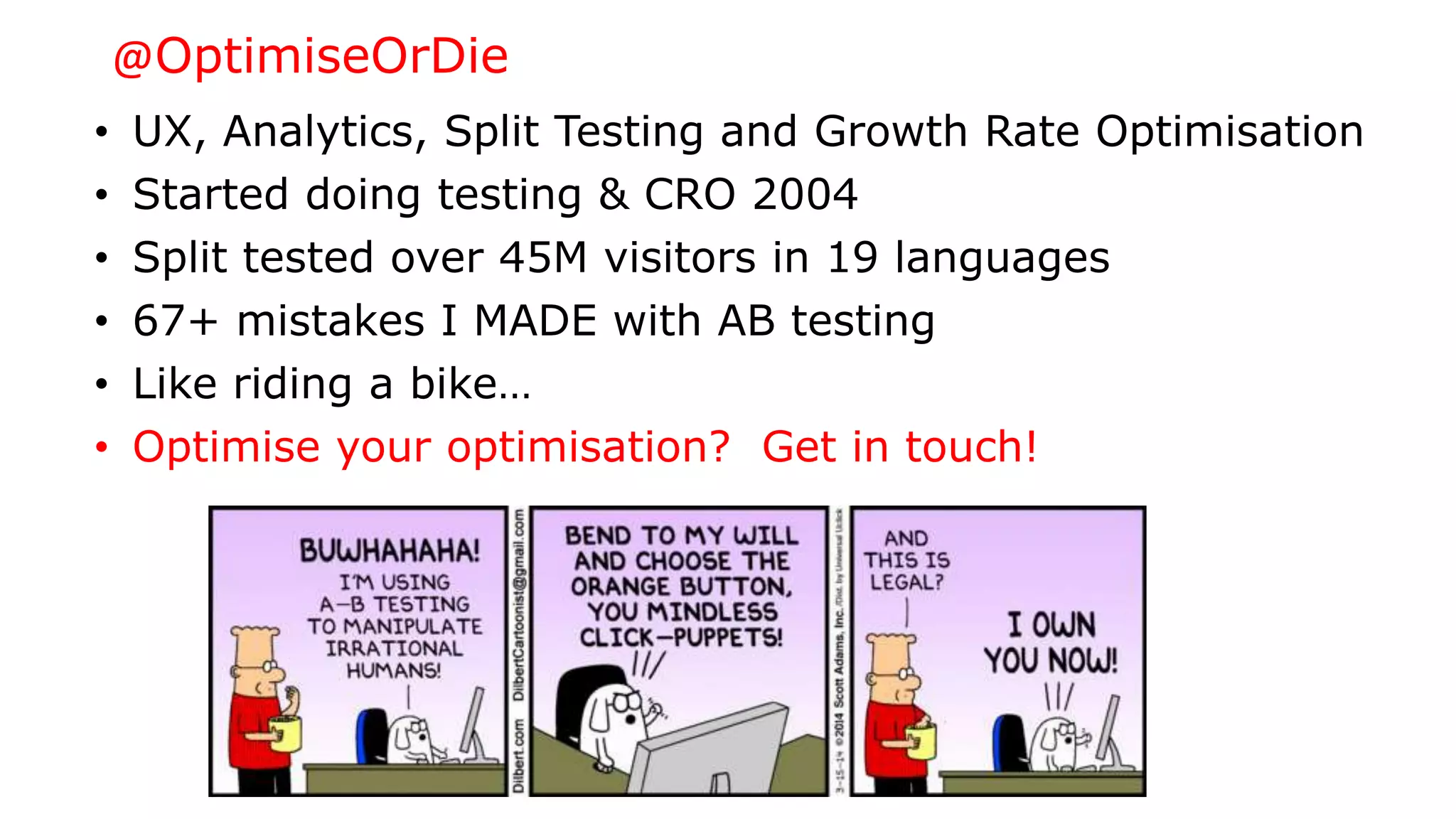

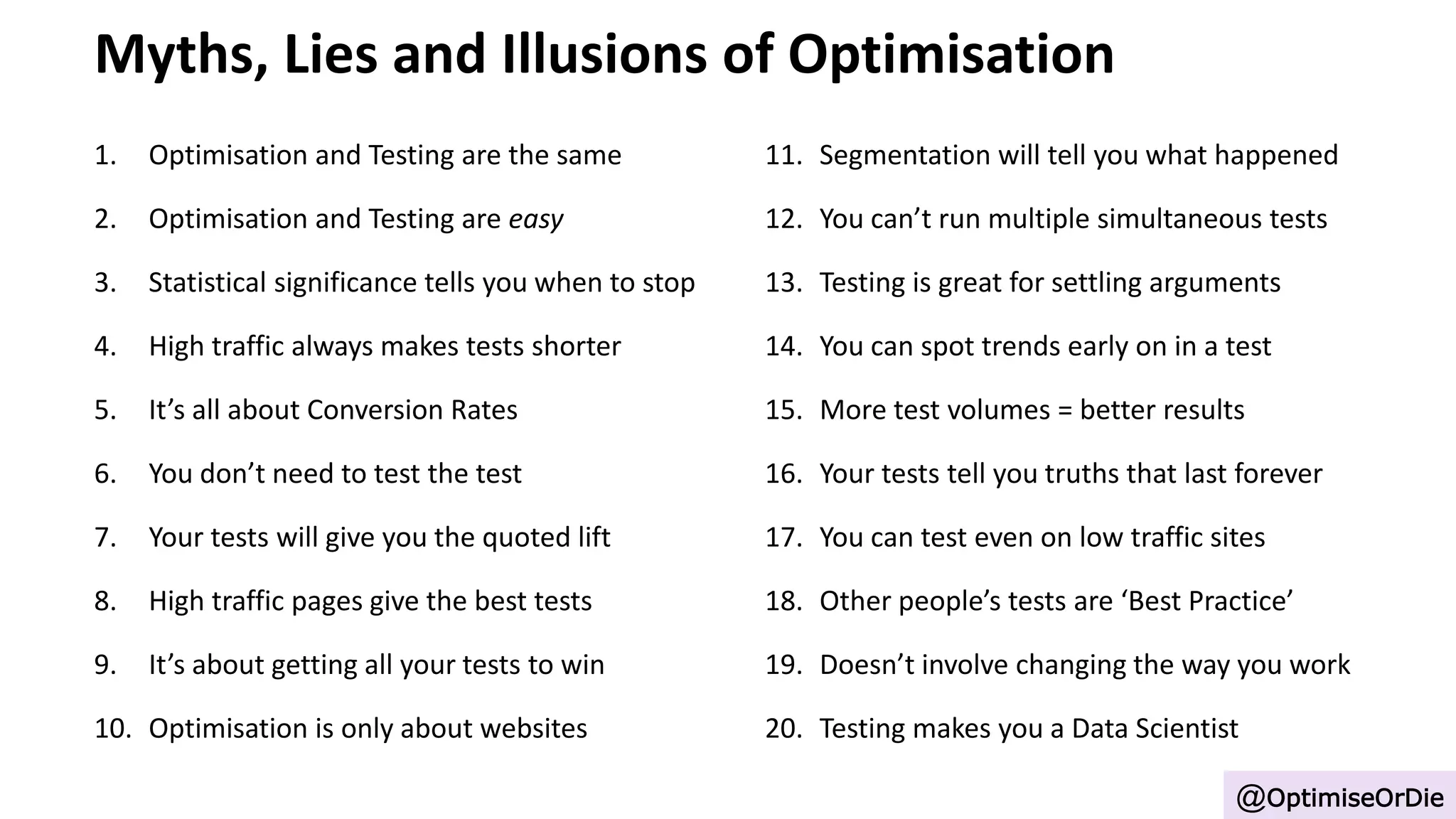

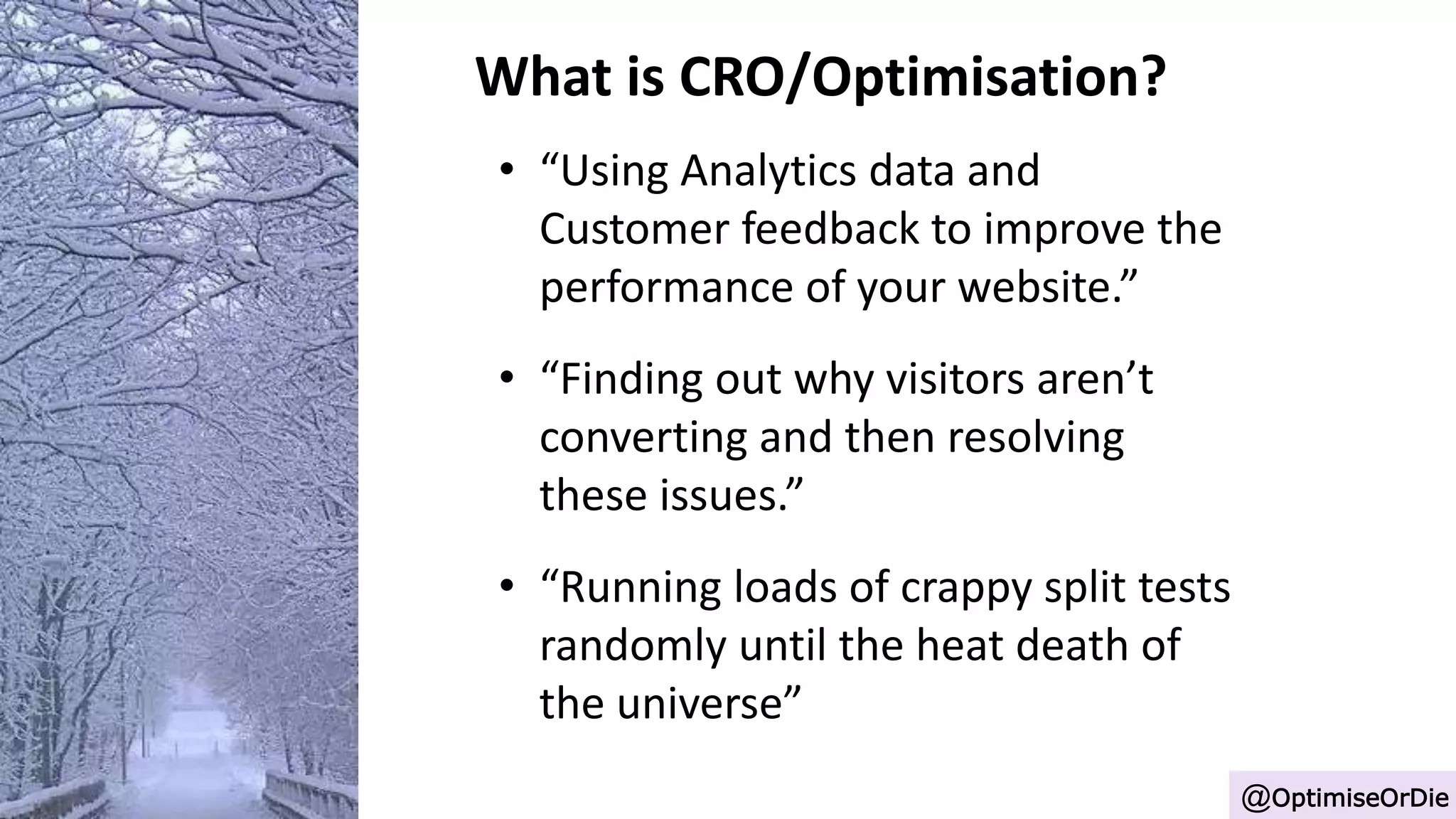

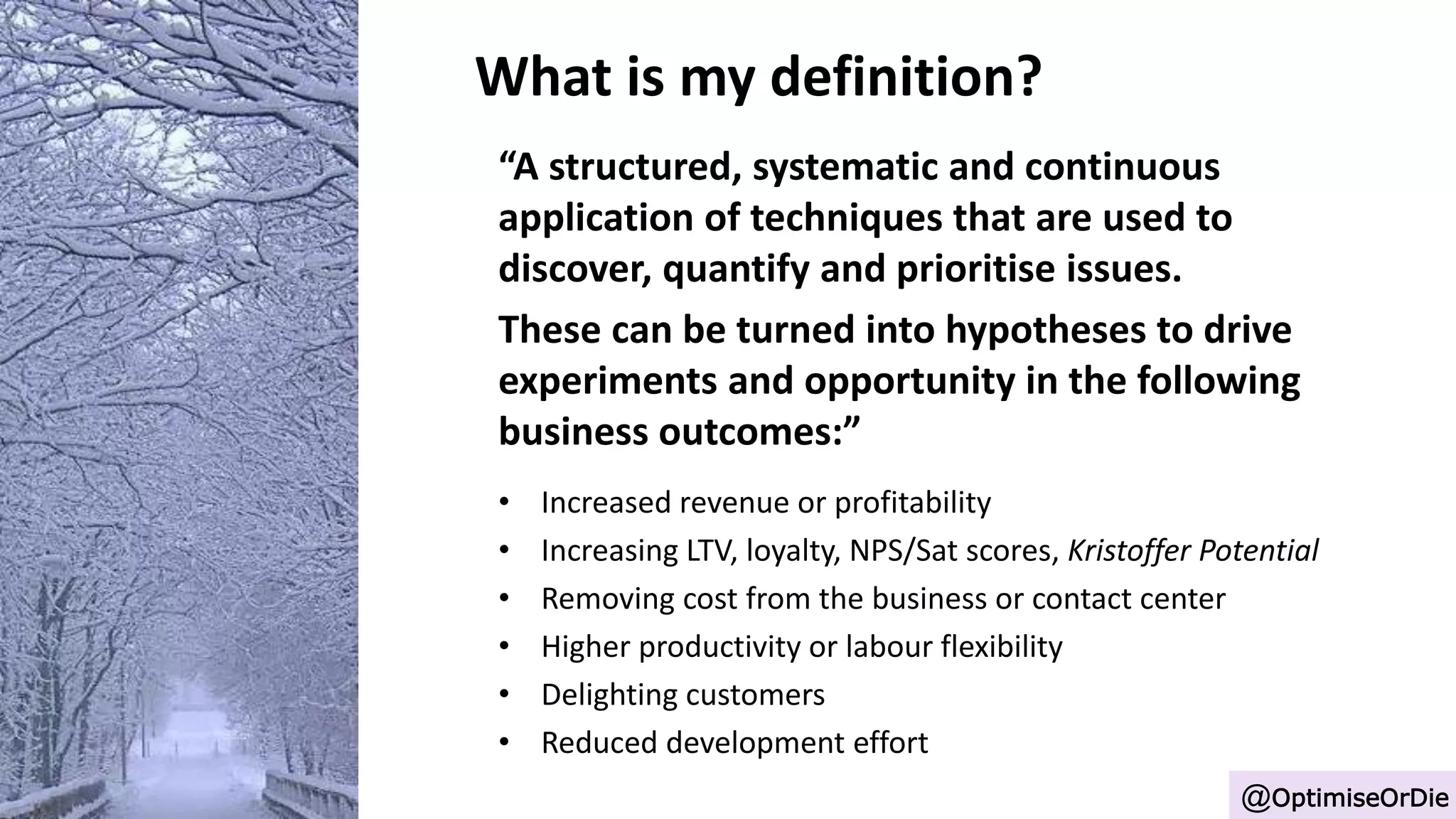

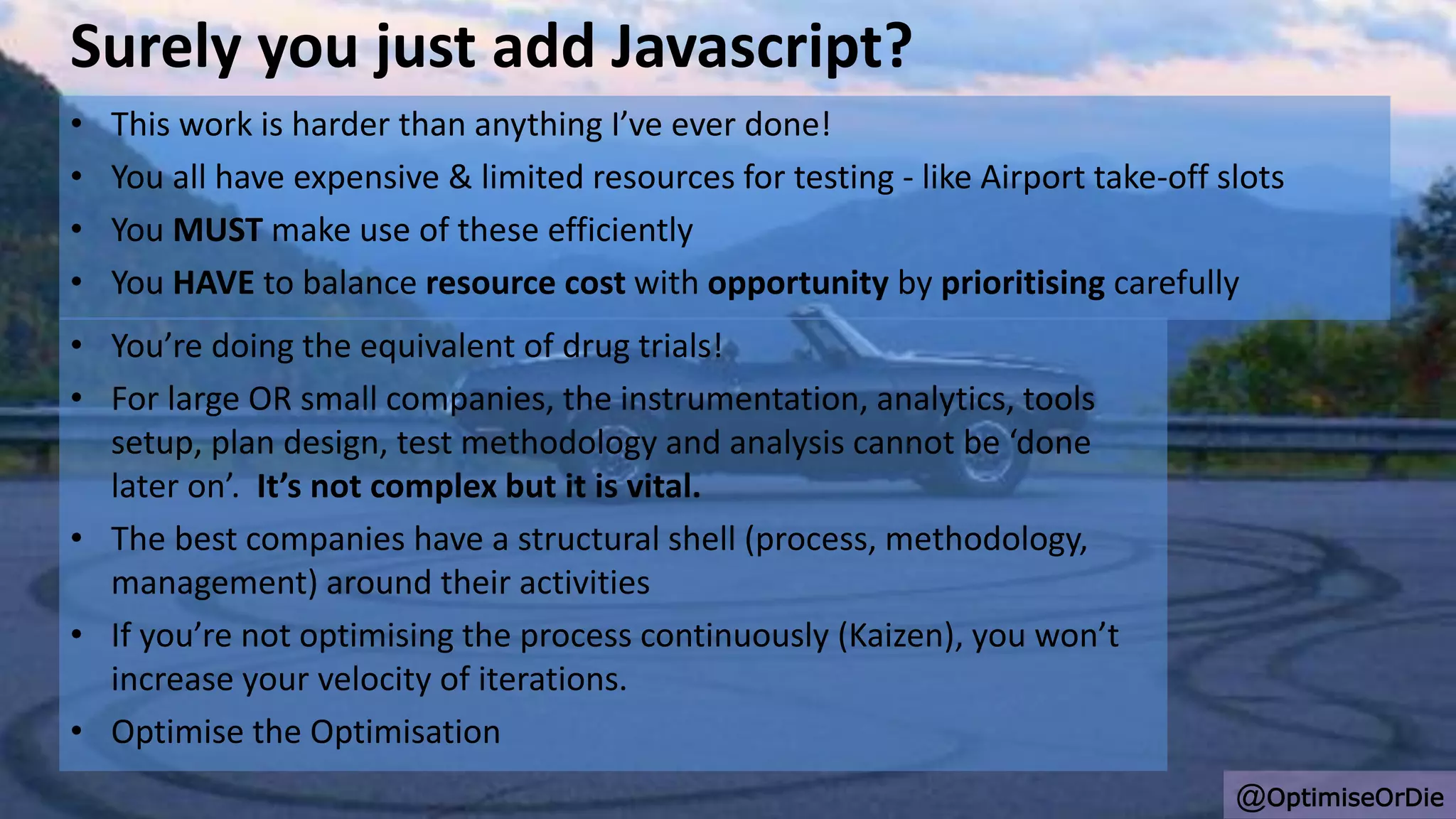

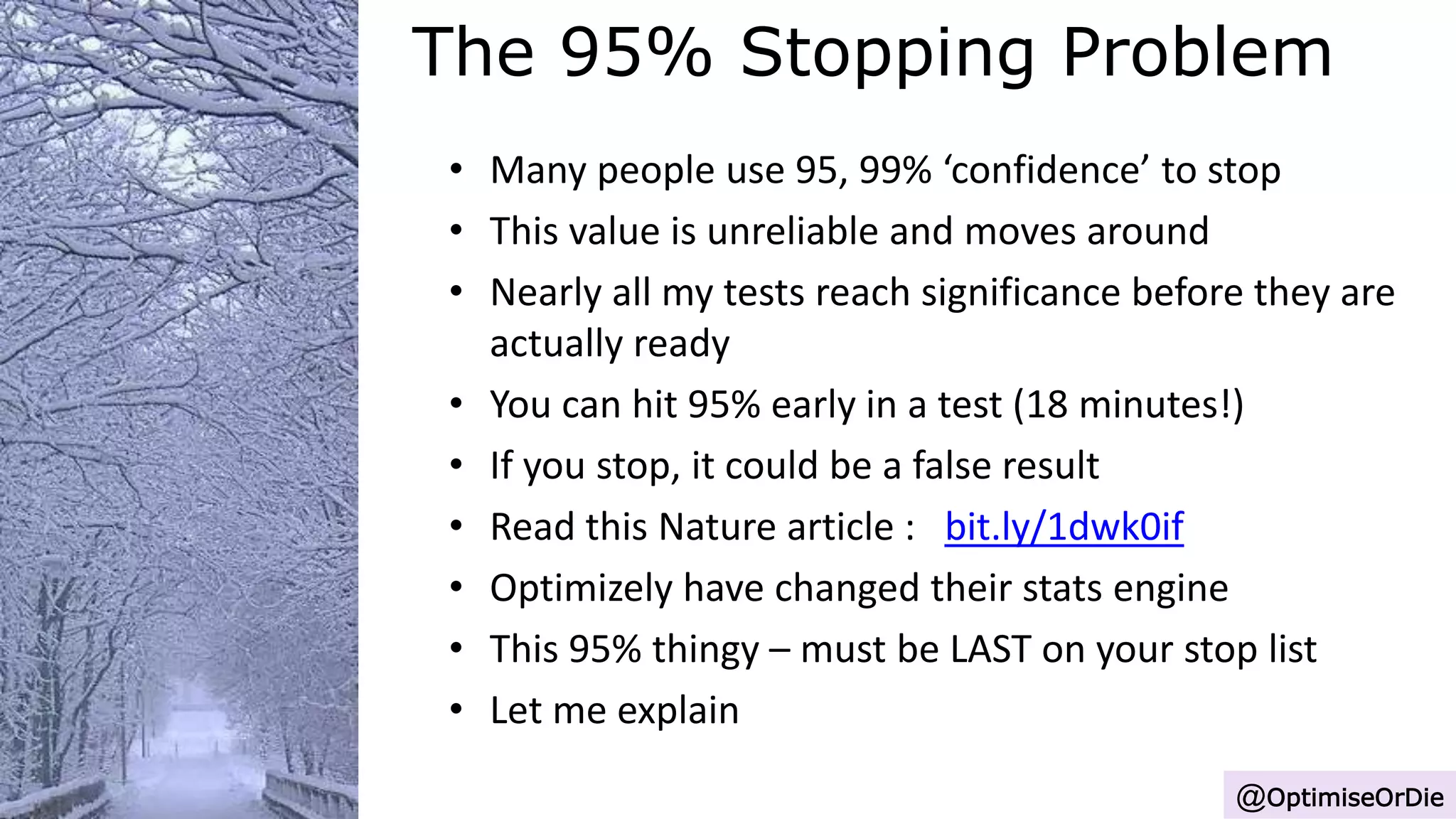

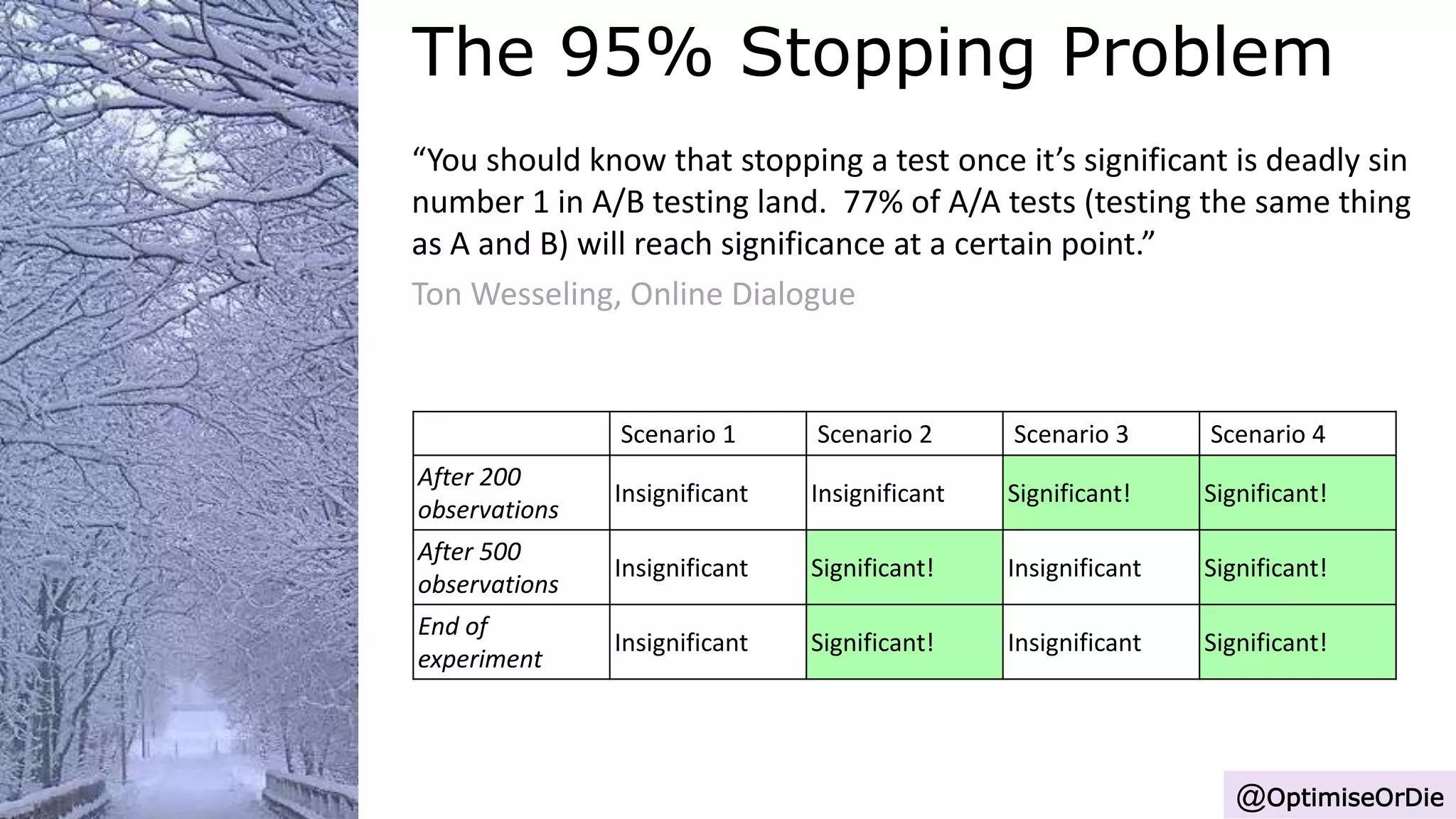

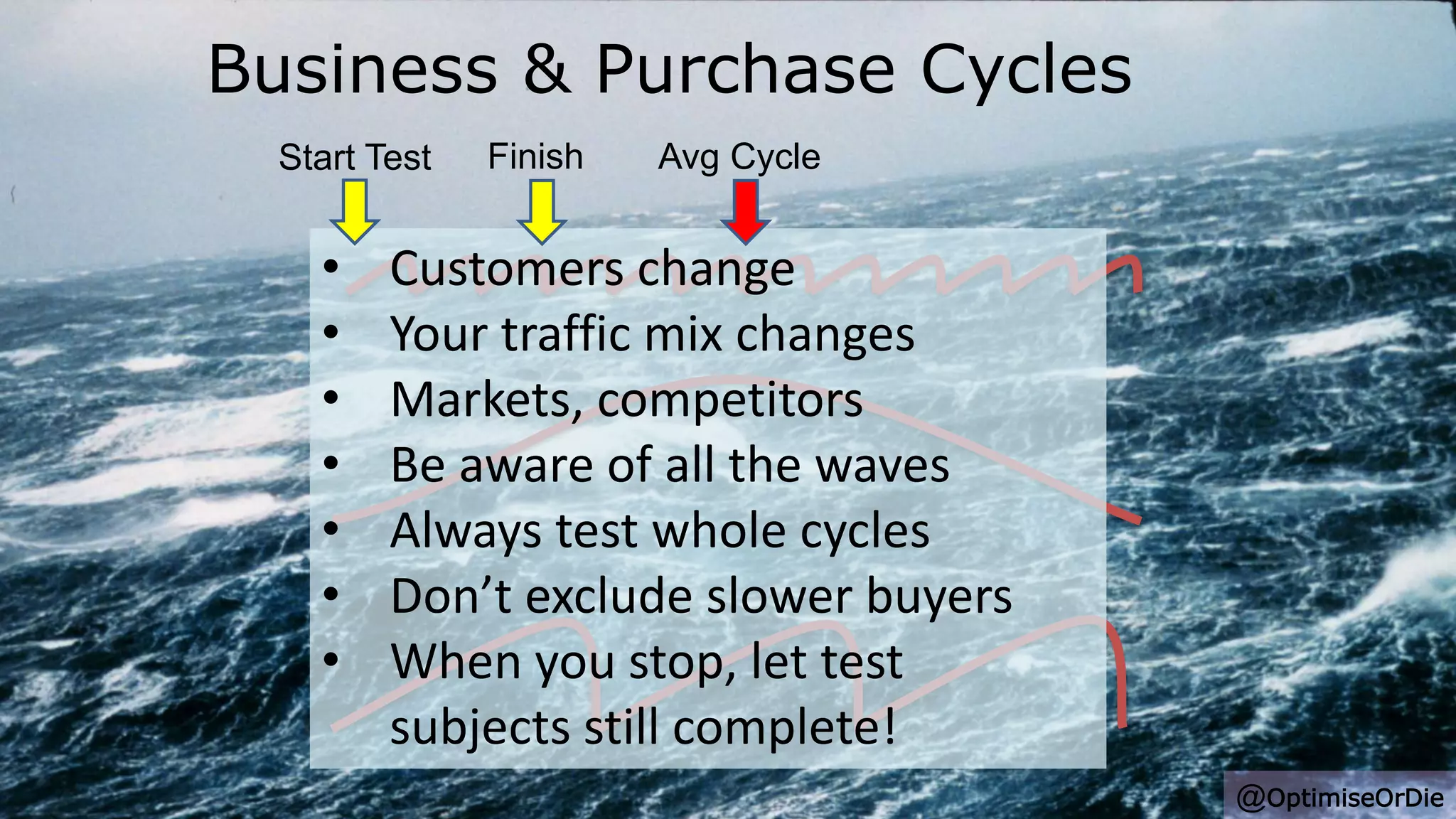

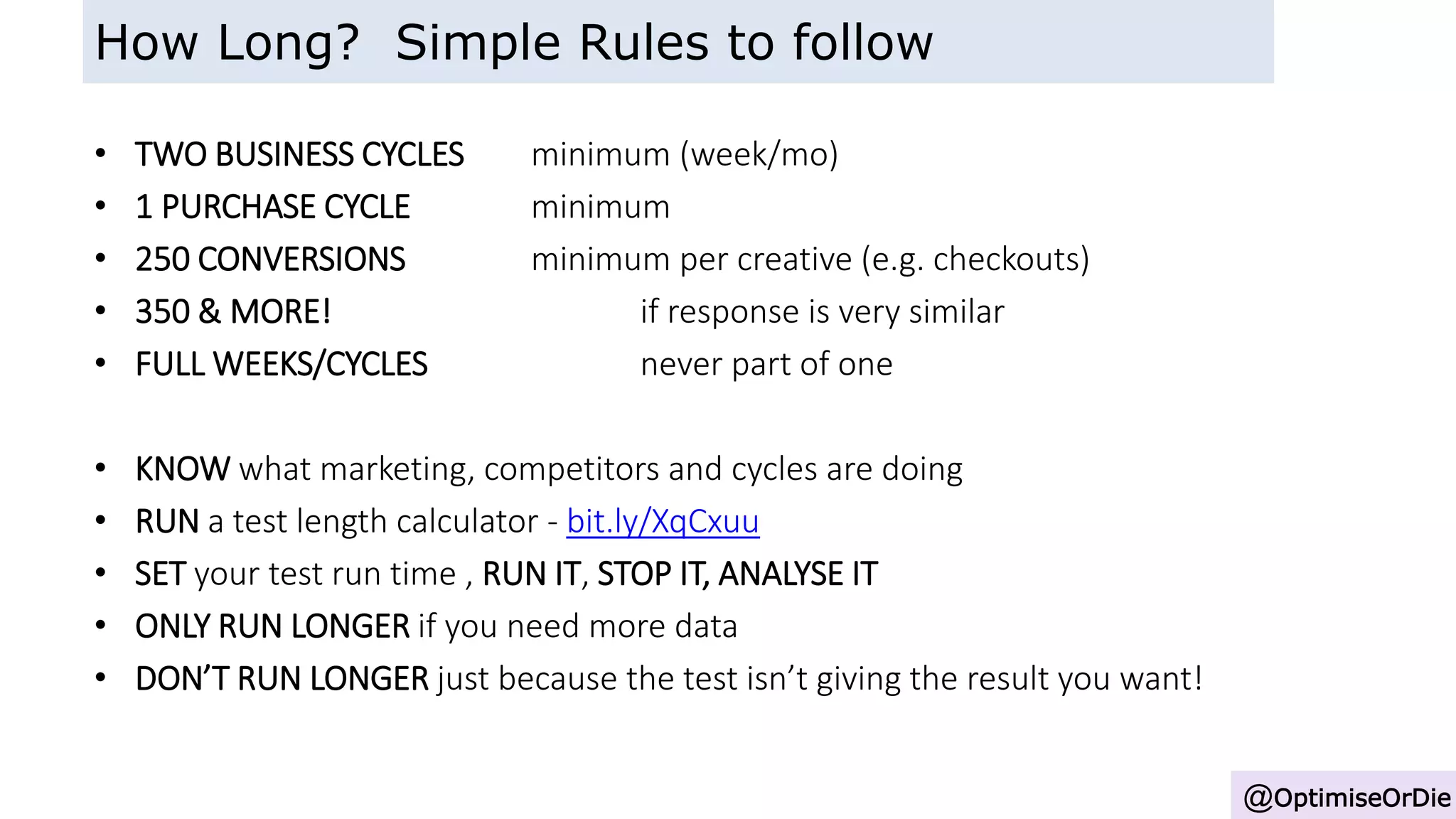

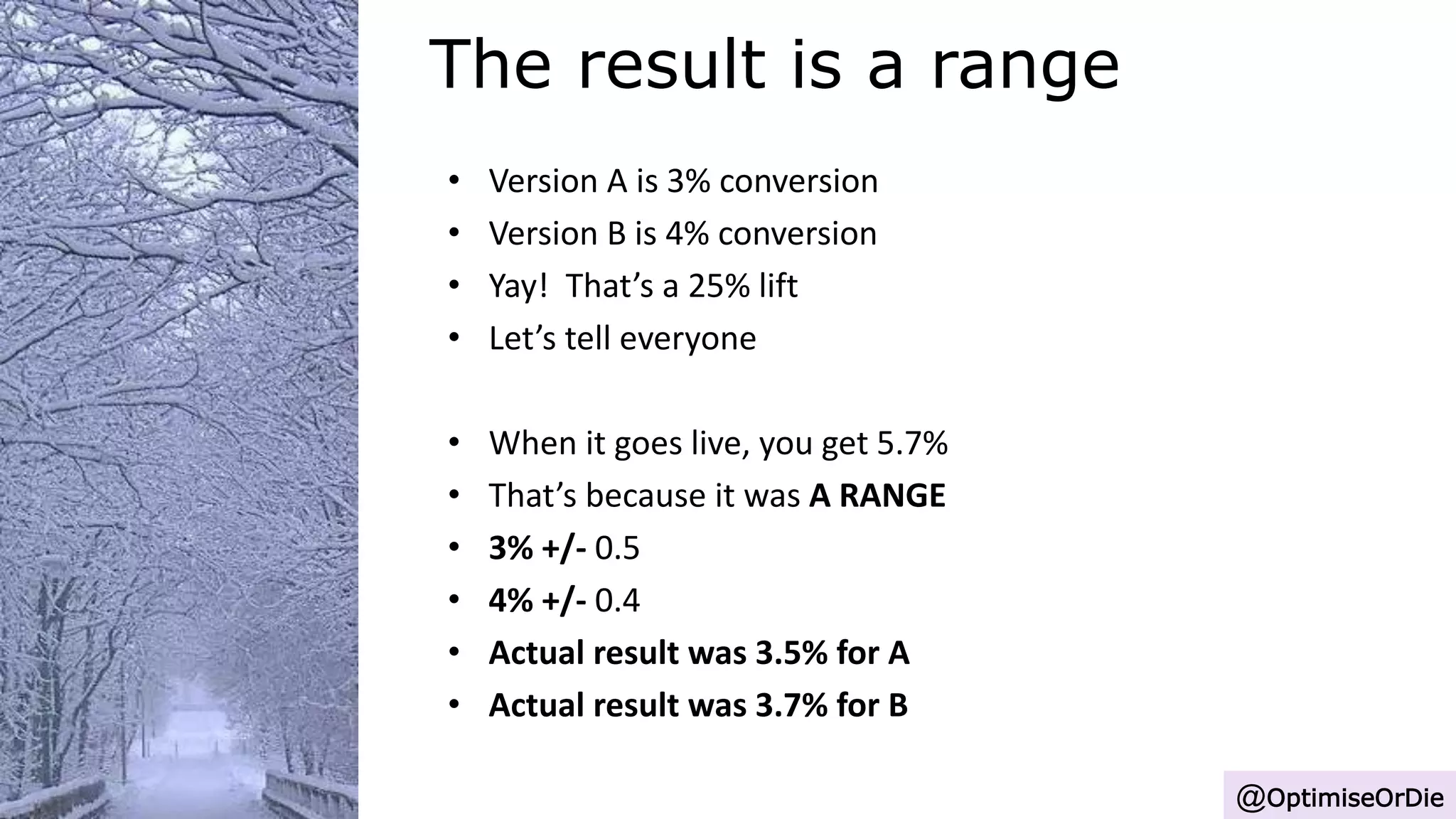

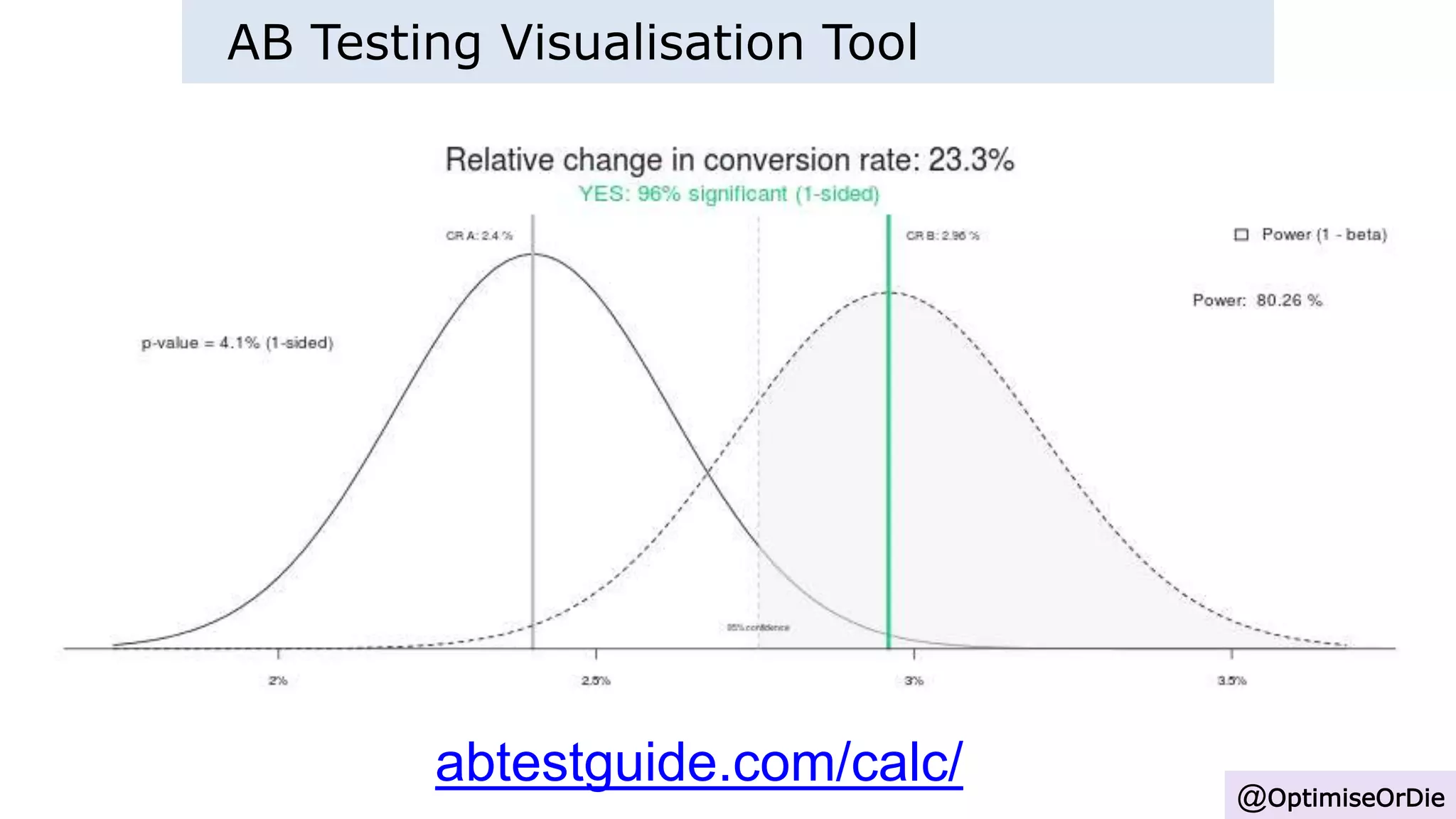

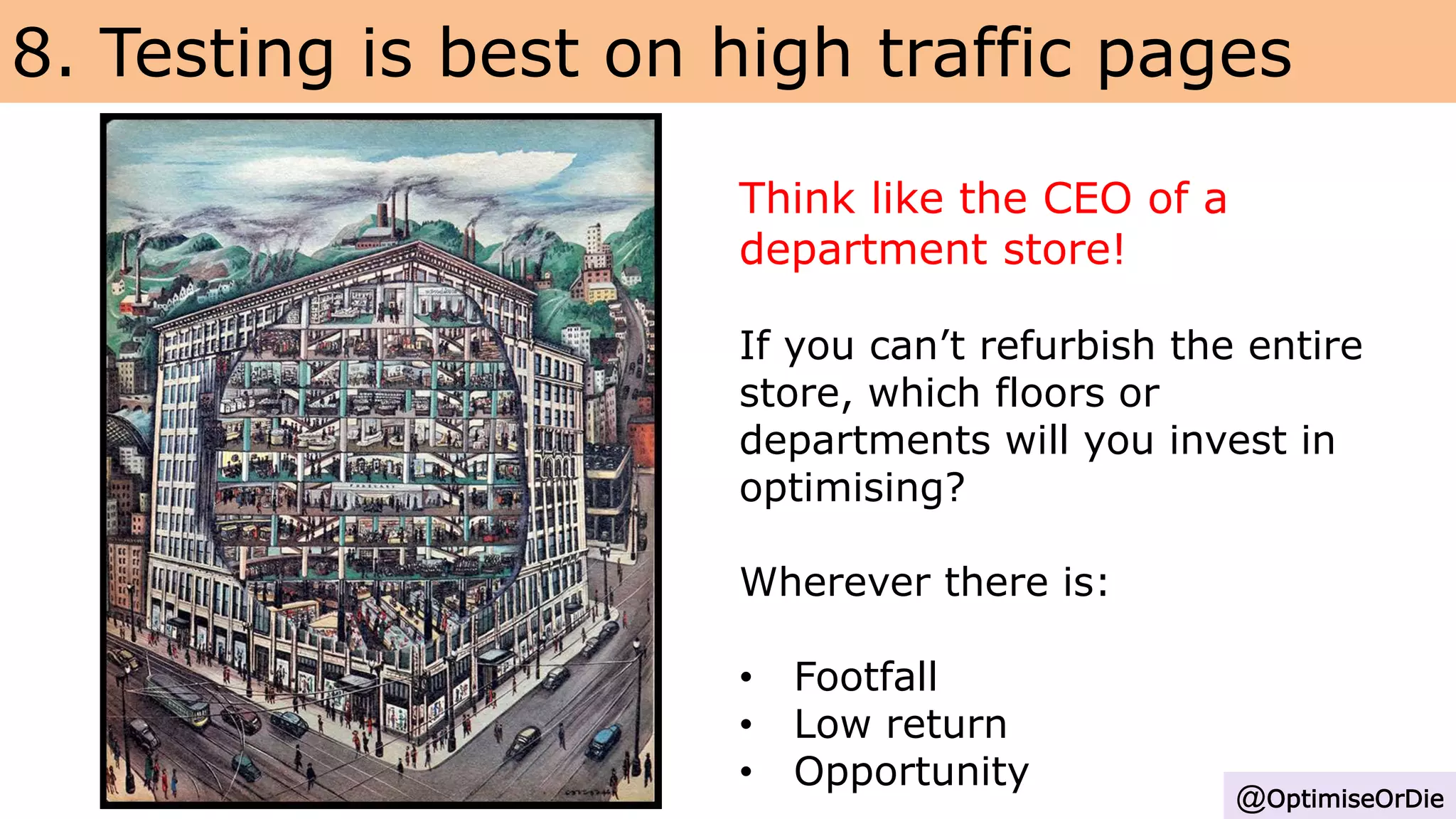

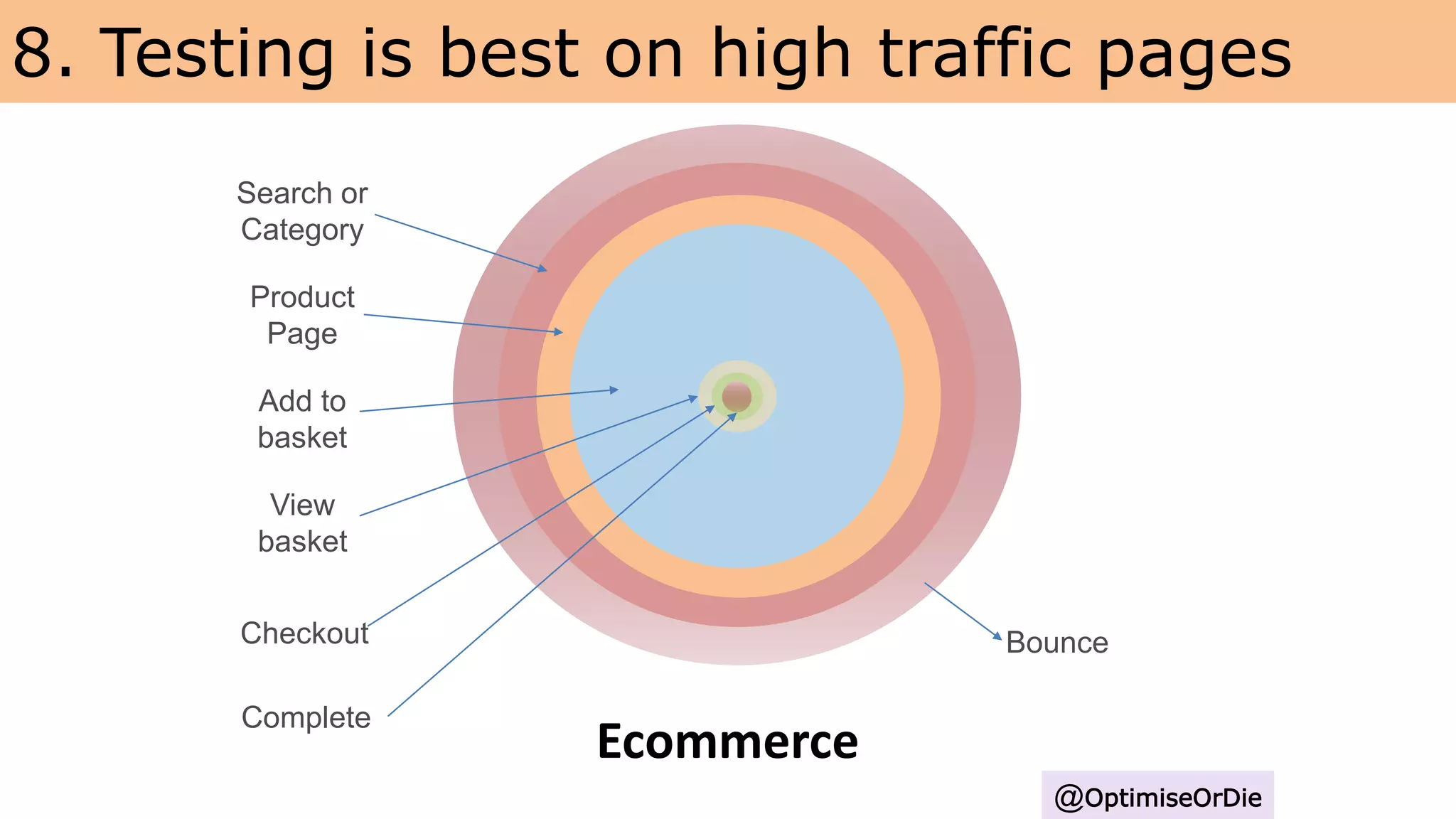

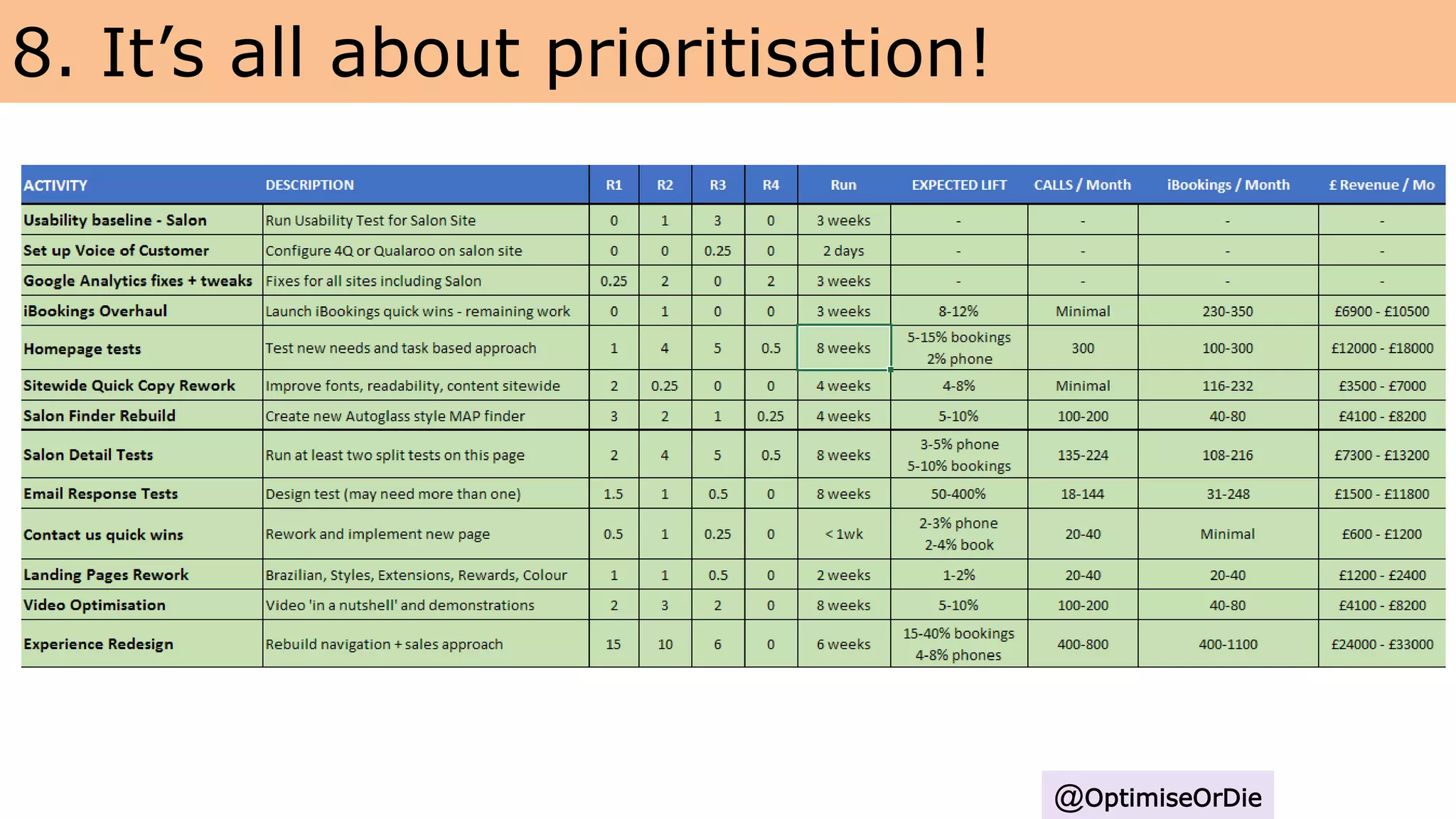

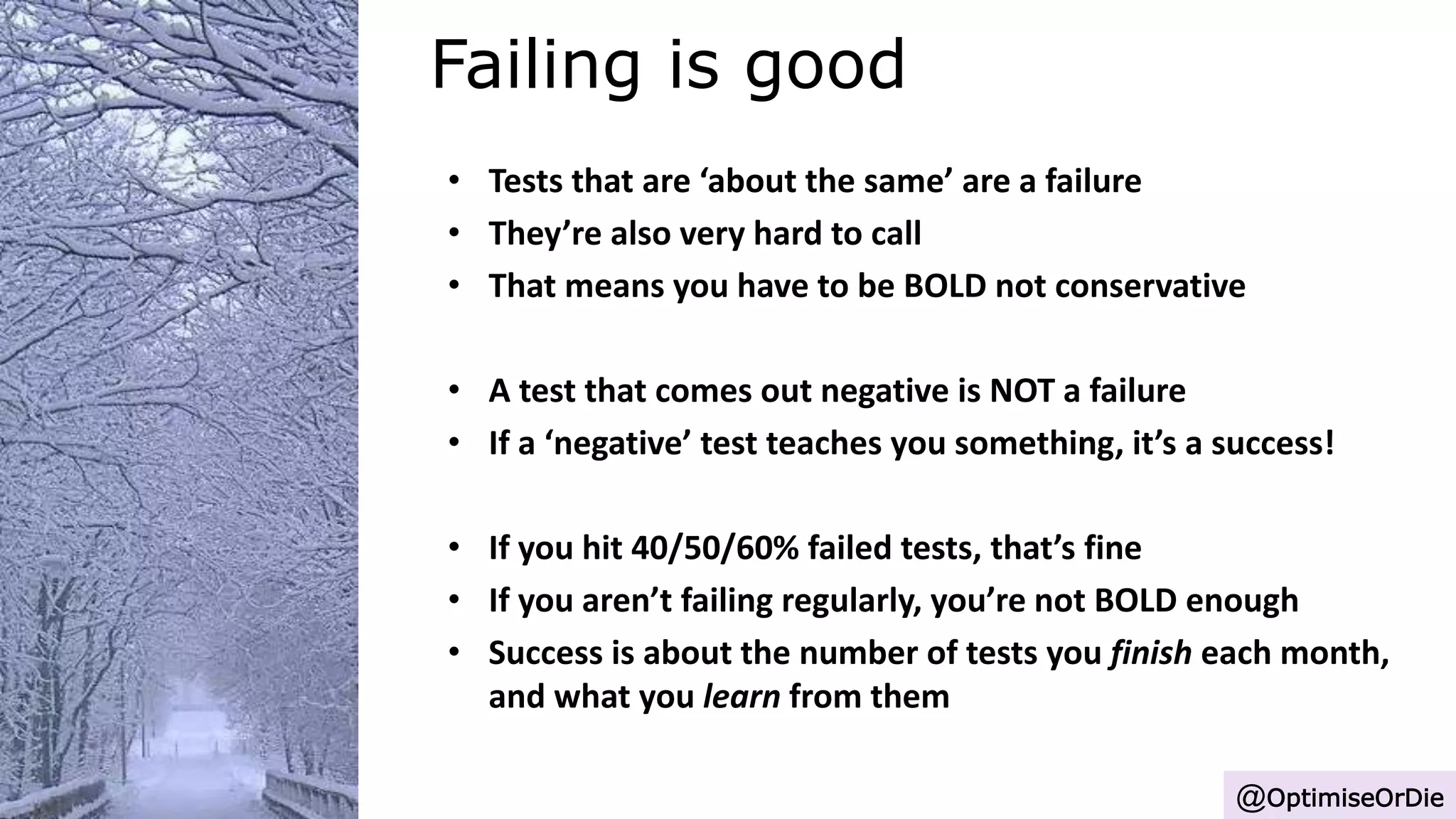

The document contrasts the misconceptions about split testing and optimization with the realities, highlighting 67 common mistakes made in A/B testing. It argues that optimization is not an easy way to raise conversion rates but a systematic process built on customer insight, analytics, and iterative testing. It also critiques common misconceptions about testing, including over-reliance on statistical significance and the idea that testing is only relevant for high-traffic websites, and stresses continuous improvement and learning from test outcomes.

![We believe that doing [A] for People [B] will make outcome [C] happen. We’ll know this when we observe data [D] and obtain feedback [E]. (reverse) @OptimiseOrDie](https://image.slidesharecdn.com/superweek-mythsliesandillusionsofabtesting-v1-150122040724-conversion-gate01/75/Myths-Lies-and-Illusions-of-AB-and-Split-Testing-36-2048.jpg)
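The hypothesis template on this slide can be treated as a fill-in-the-blanks record, which makes it harder to start a test with one of the five slots left empty. The following is a minimal sketch, not something taken from the deck: the `Hypothesis` class, its field names, and the example values are all hypothetical and exist only to illustrate the [A] to [E] structure.

```python
from dataclasses import dataclass, fields


@dataclass
class Hypothesis:
    """Hypothetical container for the slide's five slots, [A] through [E]."""
    change: str    # [A] what we will do
    audience: str  # [B] the people we do it for
    outcome: str   # [C] the outcome we expect
    data: str      # [D] the data we will observe
    feedback: str  # [E] the feedback we will obtain

    def __post_init__(self) -> None:
        # Refuse a hypothesis with an empty or whitespace-only slot.
        for f in fields(self):
            if not getattr(self, f.name).strip():
                raise ValueError(f"slot '{f.name}' must not be empty")

    def render(self) -> str:
        # Fill the slide's sentence template with the five slots.
        return (
            f"We believe that doing {self.change} for {self.audience} "
            f"will make {self.outcome} happen. "
            f"We'll know this when we observe {self.data} "
            f"and obtain {self.feedback}."
        )


# Illustrative values only; none of these come from the deck.
example = Hypothesis(
    change="a shorter checkout form",
    audience="first-time mobile buyers",
    outcome="more completed orders",
    data="a higher checkout completion rate",
    feedback="fewer complaints about form length",
)
print(example.render())
```

Writing the hypothesis down in this form before a test starts forces the expected outcome and the evidence for it to be named up front, which is the point the template makes.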