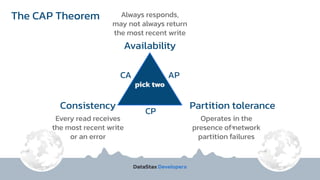

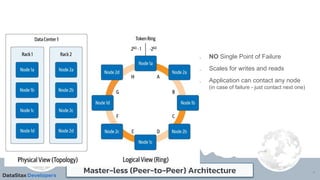

The document discusses integrating NoSQL databases, specifically Apache Cassandra, with Kubernetes through the K8ssandra operator, which supports multi-cluster deployments. It highlights the advantages of NoSQL over relational databases, such as horizontal scalability and schema flexibility, and explains the architectural principles behind Cassandra's design, including the trade-offs described by the CAP theorem. It also outlines installation procedures and resources for using K8ssandra effectively in cloud-native environments.

![Why partitioning? Because scaling doesn't have to be [s]hard! Big Data doesn't fit on a single server. By splitting it into chunks, we can easily spread them across dozens, hundreds, or even thousands of servers, adding more as needed.](https://image.slidesharecdn.com/kcddcrags-220920164329-66ce42f3/85/Multi-cluster-k8ssandra-12-320.jpg)
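The partitioning idea on this slide can be illustrated with consistent hashing on a token ring, the general scheme Cassandra uses to place partitions on nodes. This is a minimal sketch under stated assumptions, not Cassandra's implementation: MD5 truncated to 64 bits stands in for Cassandra's default Murmur3Partitioner, the node names are hypothetical, 8 virtual nodes per server is an arbitrary choice (in Cassandra the `num_tokens` setting controls this), and replication is omitted. Each node owns the token range ending at its token, so a key belongs to the first node token at or after the key's token, wrapping around the ring.

```python
import bisect
import hashlib


def token_for(key: str) -> int:
    """Map a key to a 64-bit ring position (assumption: MD5 stands in for Murmur3)."""
    digest = hashlib.md5(key.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big")


class TokenRing:
    """Toy consistent-hashing ring: each token owns the range (previous token, token]."""

    def __init__(self, nodes, vnodes_per_node=8):
        self._tokens = []  # sorted ring positions
        self._owners = {}  # ring position -> node name
        for node in nodes:
            self.add_node(node, vnodes_per_node)

    def add_node(self, node, vnodes_per_node=8):
        # Each physical node claims several positions (virtual nodes) for better balance.
        for i in range(vnodes_per_node):
            token = token_for(f"{node}#vnode{i}")
            bisect.insort(self._tokens, token)
            self._owners[token] = node

    def owner(self, key):
        """Return the node owning the first token >= the key's token, wrapping to the start."""
        idx = bisect.bisect_left(self._tokens, token_for(key))
        if idx == len(self._tokens):
            idx = 0  # past the highest token: wrap around the ring
        return self._owners[self._tokens[idx]]


# Usage: spread keys over three hypothetical nodes, then scale out with a fourth.
ring = TokenRing(["node-a", "node-b", "node-c"])
keys = [f"user:{i}" for i in range(10_000)]
before = {k: ring.owner(k) for k in keys}

ring.add_node("node-d")
after = {k: ring.owner(k) for k in keys}
moved = sum(before[k] != after[k] for k in keys)
print(f"{moved} of {len(keys)} keys moved to the new node")
```

The design choice this shows is why "adding more if needed" is cheap: inserting a node only takes over slices of existing ranges, so the keys that move all go to the new node while every other key stays where it was.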