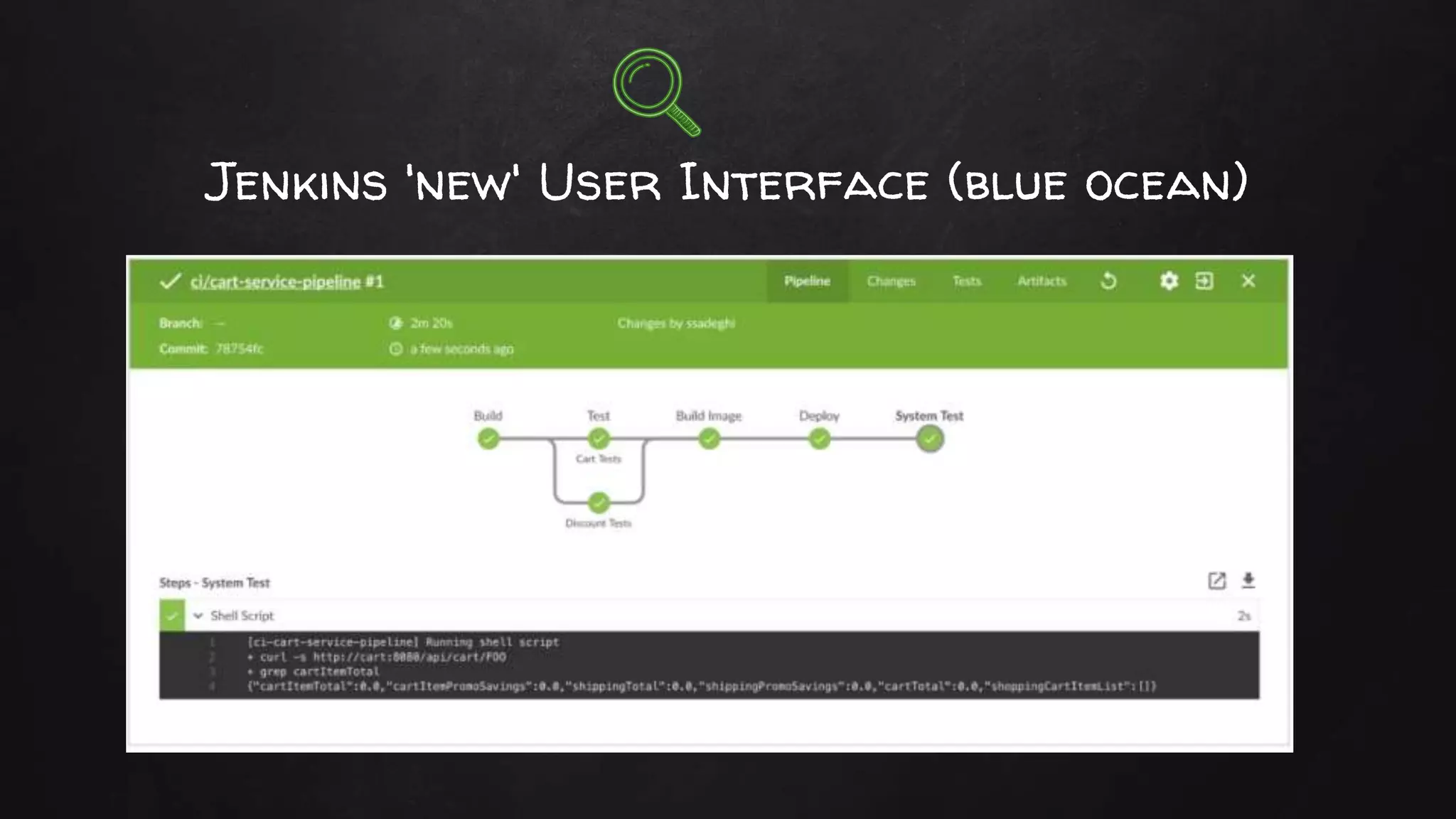

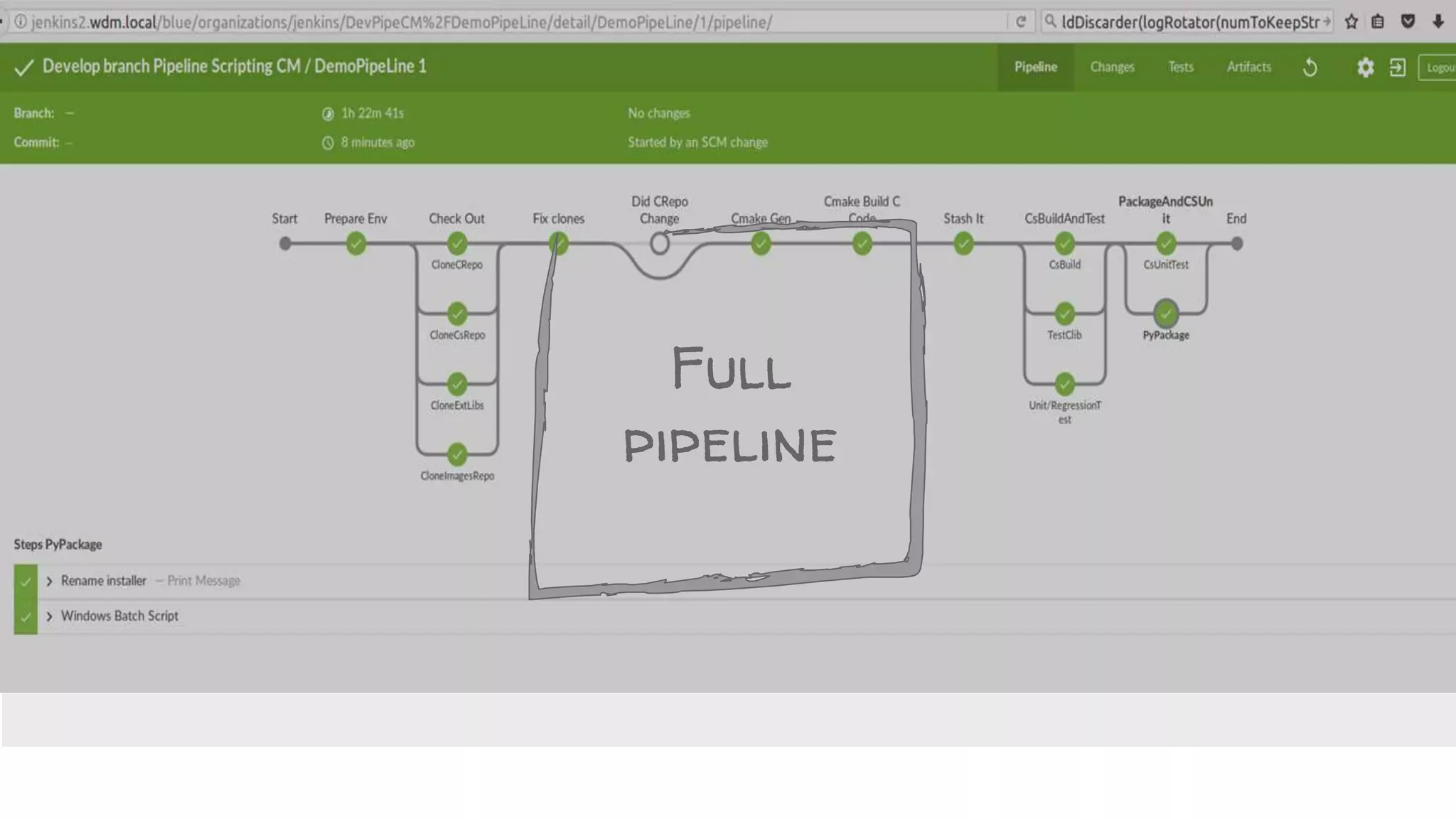

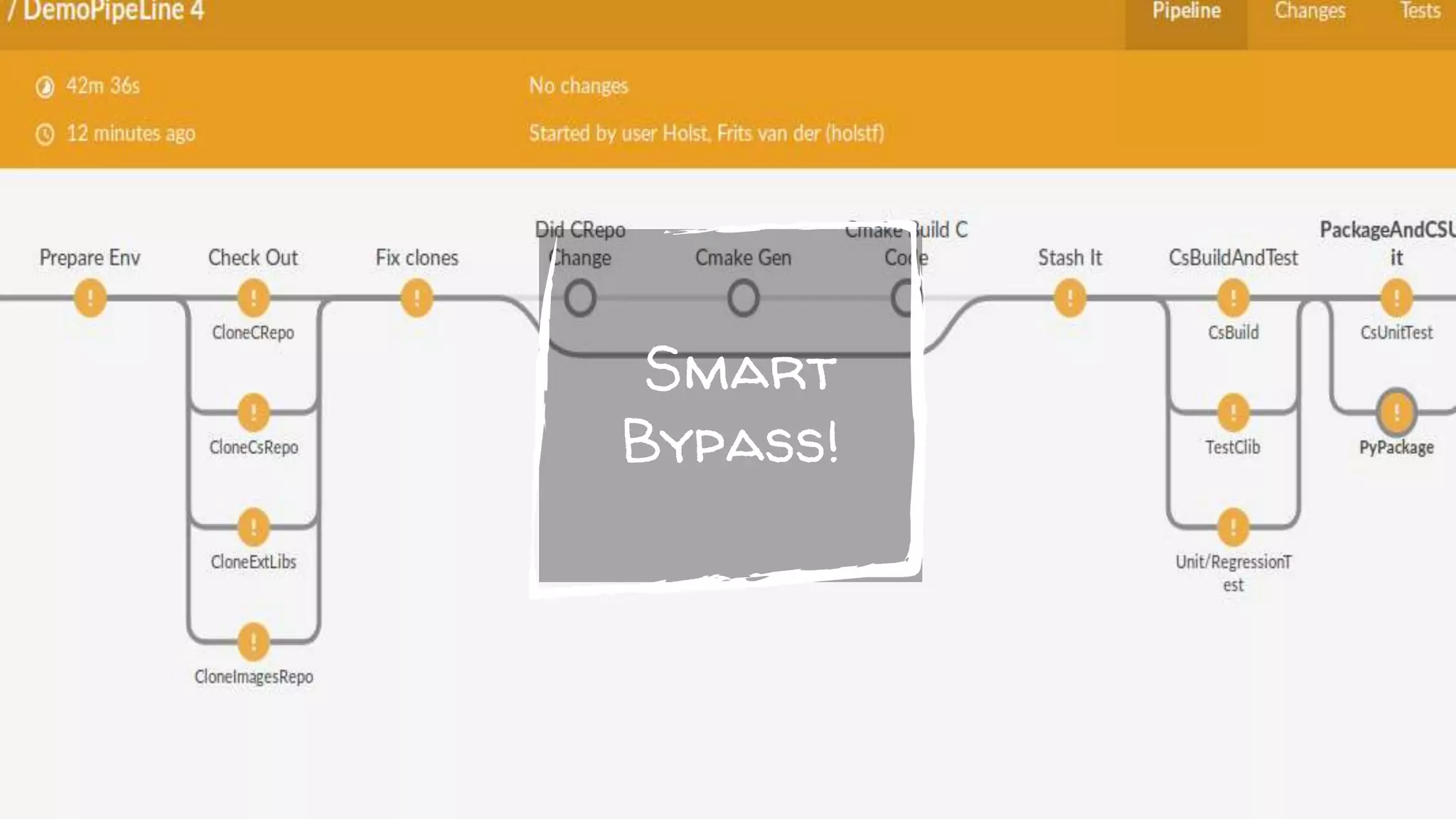

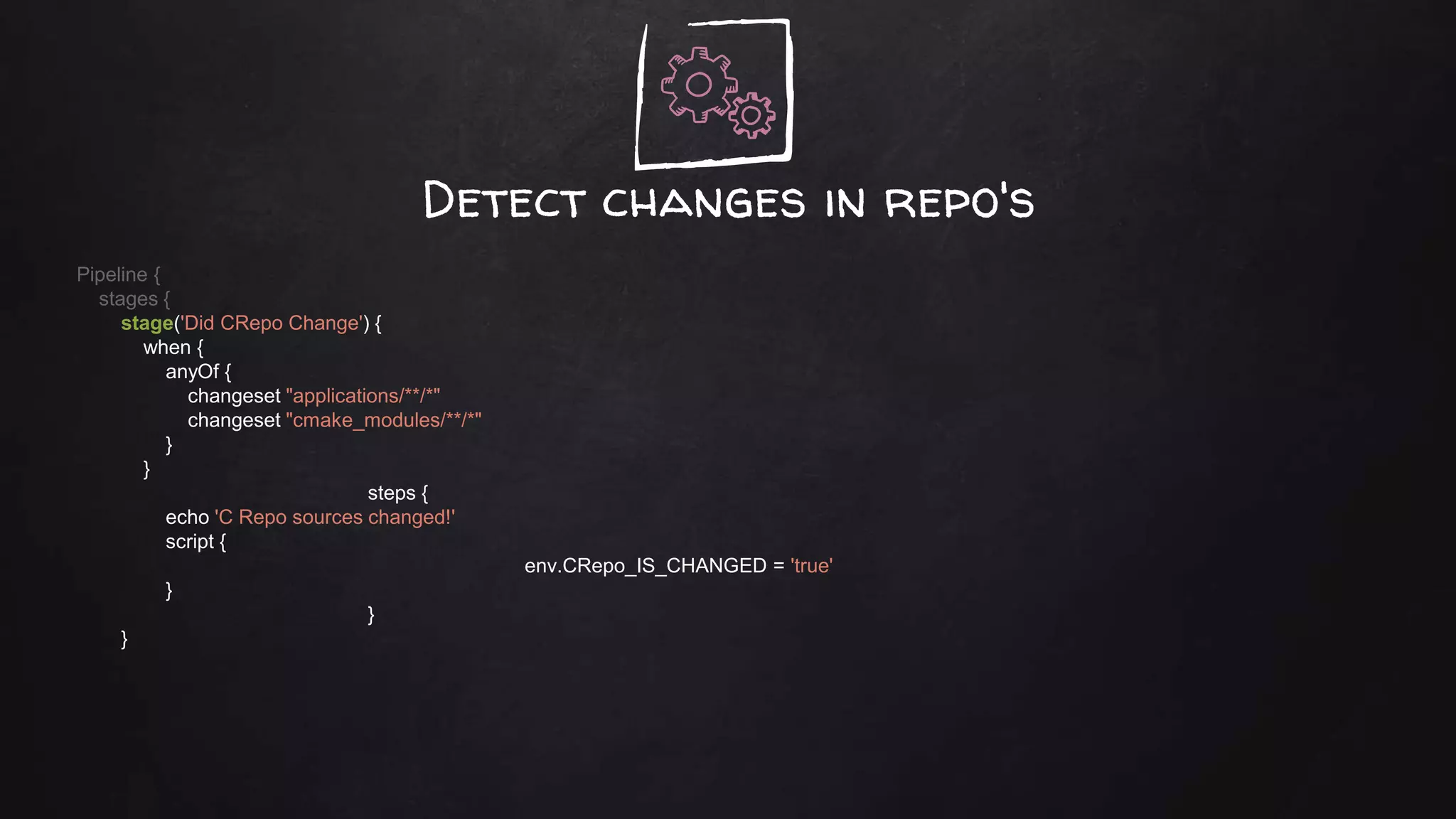

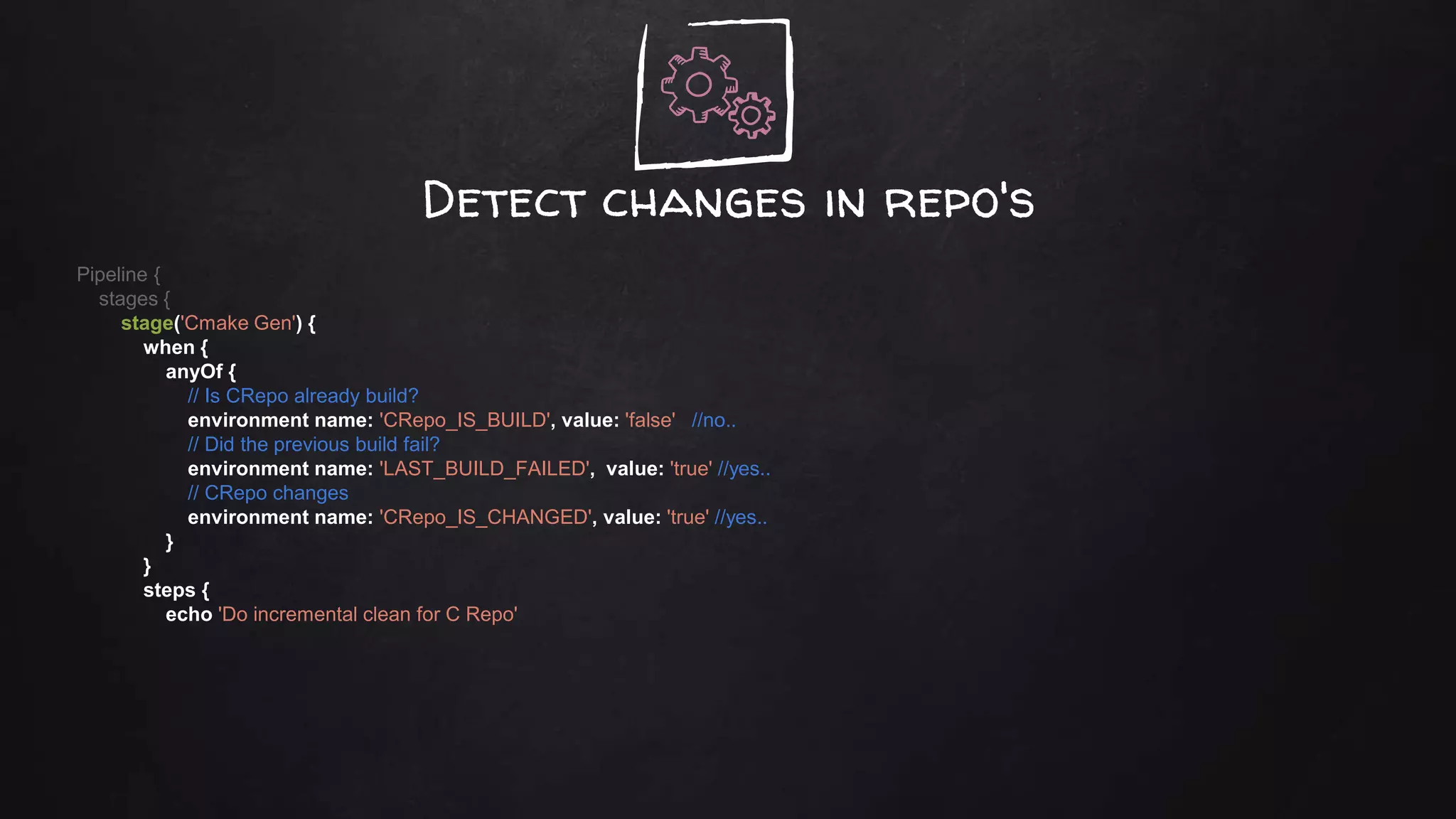

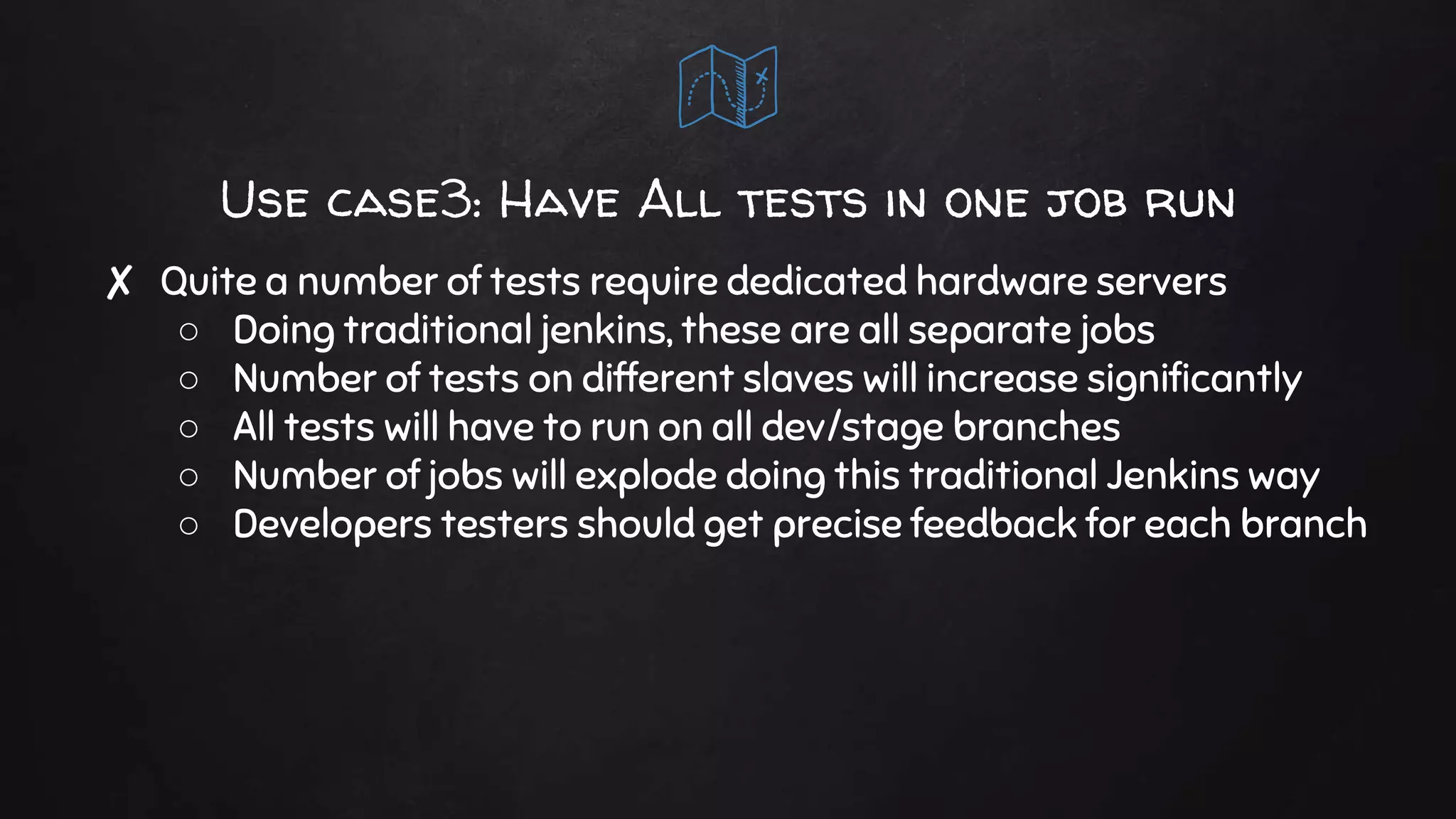

This presentation covers the move from Jenkins 1 to Jenkins 2, focusing on the use of Declarative Pipeline for complex build and test scenarios. It describes the difficulties of traditional job configuration and walks through strategies and examples for managing pipelines efficiently, including integrating code repositories and automating tests. It also highlights the improvements brought by the Blue Ocean interface and CloudBees' continued work on Jenkins.

![Starting The Pipeline

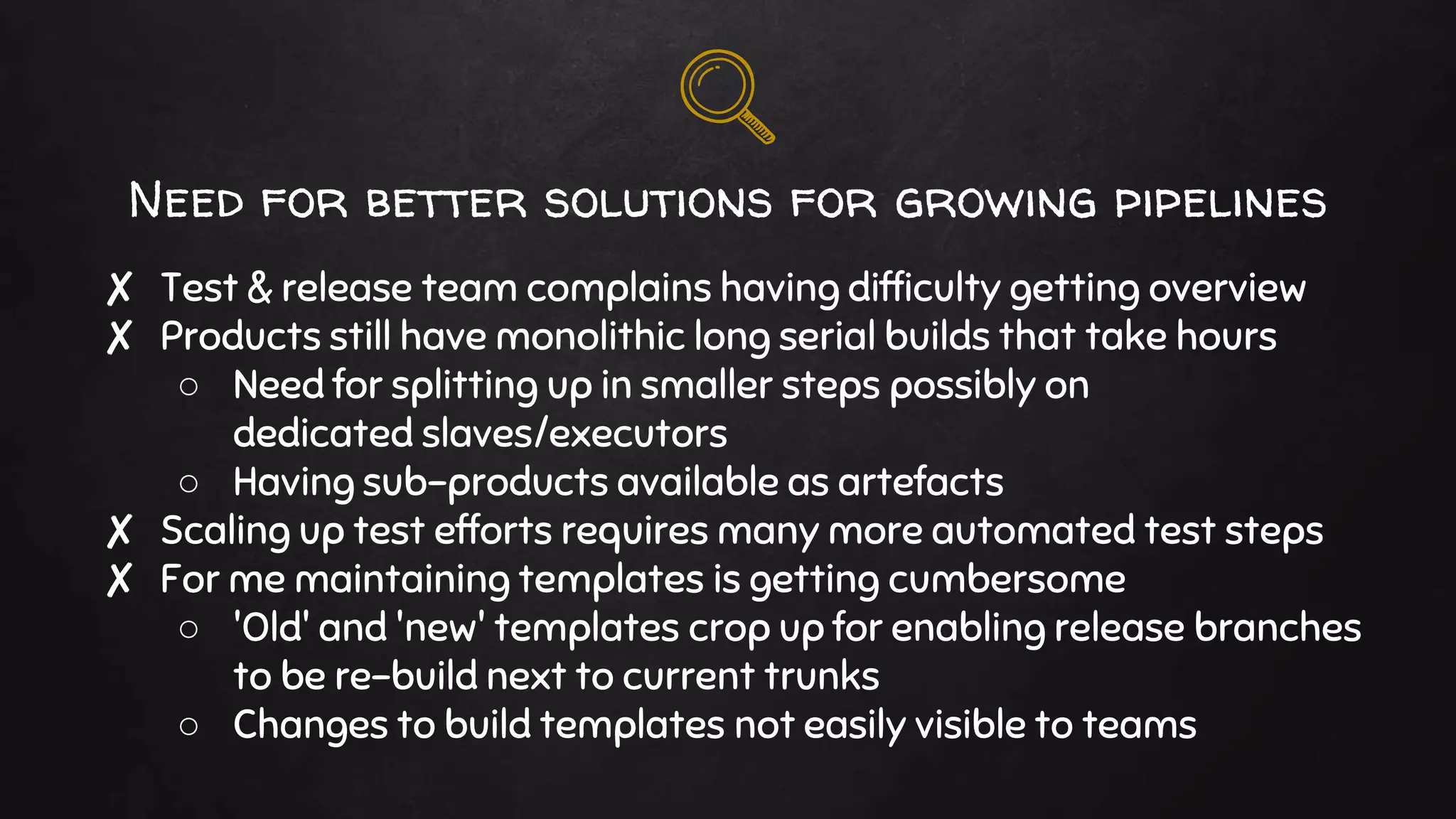

#!/usr/bin/env groovy

pipeline {

// Agent definition for whole pipe. General vs14 Windows build slave.

agent {

label "Windows && x64 && ${GENERATOR}"

}

// General config for build, timestamps and console coloring.

options {

timestamps()

buildDiscarder(logRotator(daysToKeepStr:'90', artifactDaysToKeepStr:'20'))

}

// Poll SCM every 5 minutes every work day.

triggers {

pollSCM('H/5 * * * 1-5')

}

properties([ buildDiscarder(logRotator(artifactDaysToKeepStr: '5', artifactNumToKeepStr: '', daysToKeepStr: '15', numToKeepStr: '')) ])](https://image.slidesharecdn.com/c6w8y7ueqbiwstcvibhl-signature-b44bc92d6851ec2cbb91b0bd2feaef9cd53f6a5e6f090e27d0c8a6e4665fcf72-poli-171205140235/75/Moving-from-Jenkins-1-to-2-declarative-pipeline-adventures-27-2048.jpg)
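The slide cuts the pipeline off after `triggers`, so here is a minimal sketch of how those blocks might sit in a complete Jenkinsfile. The `Build` stage and its `echo` step are placeholders that are not in the original, and the agent label leaves out `${GENERATOR}`, which is assumed to be defined elsewhere in the author's job. `timestamps()` assumes the Timestamper plugin is installed.

```groovy
#!/usr/bin/env groovy

pipeline {
    // One agent for the whole pipeline (label simplified for this sketch).
    agent { label 'Windows && x64' }

    options {
        // Prefix console output with timestamps.
        timestamps()
        // Keep builds for 90 days and their artifacts for 20 days.
        buildDiscarder(logRotator(daysToKeepStr: '90', artifactDaysToKeepStr: '20'))
    }

    triggers {
        // Poll the SCM roughly every 5 minutes, Monday to Friday.
        pollSCM('H/5 * * * 1-5')
    }

    stages {
        // Placeholder stage: a declarative pipeline needs at least one.
        stage('Build') {
            steps {
                echo 'Build steps go here'
            }
        }
    }
}
```

The trailing `properties([...])` line on the slide is the Scripted Pipeline way of setting the same kind of build-discarder policy; in a declarative Jenkinsfile the `options` block above plays that role.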

![Parallel checkout

pipeline {

stages {

// Check-out stage: parallel checkout of all repos.

stage('Check Out') {

parallel {

stage ("CloneCsRepo") {

steps {

echo 'Now let us check out C#Repo'

// Sleep for a different time in each branch so that parallel HG checkouts do not grab the same changelog file name.

sleep 9

checkout changelog: true, poll: true, scm: [$class: 'MercurialSCM',

clean: true, credentialsId: '', installation: 'HG Multibranch',

source: "http://hg.wdm.local/hg/CsRepo/", subdir: 'CsRepo']

}

}

stage ("CloneCRepo") {

steps {

echo 'Now let us check out C Repo'

sleep 15

checkout changelog: true, poll: true,

Failed to parse ...Testing_Pipeline2_TMFC/builds/174/changelog2.xml: '<?xml version="1.0" encoding="UTF-8"?>

<changesets>

JENKINS-43176](https://image.slidesharecdn.com/c6w8y7ueqbiwstcvibhl-signature-b44bc92d6851ec2cbb91b0bd2feaef9cd53f6a5e6f090e27d0c8a6e4665fcf72-poli-171205140235/75/Moving-from-Jenkins-1-to-2-declarative-pipeline-adventures-30-2048.jpg)
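The second checkout is truncated on the slide, so the following is only a sketch of what the full parallel stage might look like. The `CloneCRepo` arguments mirror the first branch, and its `source` URL and `subdir` are hypothetical stand-ins, not values from the original. The staggered `sleep` calls follow the slide's workaround for the changelog parse failure it cites as JENKINS-43176. `agent any` is also an assumption made to keep the example self-contained.

```groovy
pipeline {
    agent any

    stages {
        stage('Check Out') {
            // Both child stages run concurrently.
            parallel {
                stage('CloneCsRepo') {
                    steps {
                        echo 'Checking out the C# repo'
                        // Stagger the branches so the checkouts do not collide.
                        sleep 9
                        checkout(changelog: true, poll: true, scm: [
                            $class: 'MercurialSCM',
                            clean: true, credentialsId: '', installation: 'HG Multibranch',
                            source: 'http://hg.wdm.local/hg/CsRepo/', subdir: 'CsRepo'
                        ])
                    }
                }
                stage('CloneCRepo') {
                    steps {
                        echo 'Checking out the C repo'
                        sleep 15
                        // Hypothetical URL and subdir: the slide truncates this call.
                        checkout(changelog: true, poll: true, scm: [
                            $class: 'MercurialSCM',
                            clean: true, credentialsId: '', installation: 'HG Multibranch',
                            source: 'http://hg.example.com/hg/CRepo/', subdir: 'CRepo'
                        ])
                    }
                }
            }
        }
    }
}
```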

![Un-stash test files and test

pipeline {

stages {

stage ("Unit/RegressionTest") {

agent {

node {

label 'Test && Nvidia'

customWorkspace 'c:/j'

}

}

environment {

NUNIT_DIR = "${WORKSPACE}/libsrepo/NUnit-2.6.3/bin"

}

steps {

// Remove result files left over from the previous unit test run.

cleanWs(patterns: [[pattern: '*results.xml', type: 'INCLUDE']] )

unstash 'unittests'

echo 'running unit tests with graphics card'

// Run the actual unit tests.

bat '''

call set TESTROOTPATH=%%WORKSPACE:\\=/%%](https://image.slidesharecdn.com/c6w8y7ueqbiwstcvibhl-signature-b44bc92d6851ec2cbb91b0bd2feaef9cd53f6a5e6f090e27d0c8a6e4665fcf72-poli-171205140235/75/Moving-from-Jenkins-1-to-2-declarative-pipeline-adventures-41-2048.jpg)
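The slide shows only the `unstash` side. A minimal sketch of the matching `stash` on the build agent could look like the following. The `Build` stage, its agent label, the `echo` placeholders and the `includes` pattern are assumptions for illustration; only the stash name `unittests` and the test agent definition come from the slide.

```groovy
pipeline {
    // No global agent: each stage picks its own machine.
    agent none

    stages {
        stage('Build') {
            agent { label 'Windows && x64' }
            steps {
                // Placeholder for the real build commands.
                bat 'echo building'
                // Save the test binaries so another agent can pick them up.
                // The include pattern is hypothetical.
                stash name: 'unittests', includes: '**/bin/**'
            }
        }
        stage('Unit/RegressionTest') {
            agent {
                node {
                    label 'Test && Nvidia'
                    customWorkspace 'c:/j'
                }
            }
            steps {
                // Restore the stashed files into this agent's workspace.
                unstash 'unittests'
                // Placeholder for the real test runner invocation.
                bat 'echo running unit tests'
            }
        }
    }
}
```

Stashing lets the build run on a general Windows agent while the tests run on a machine with a graphics card, without sharing a workspace between them.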

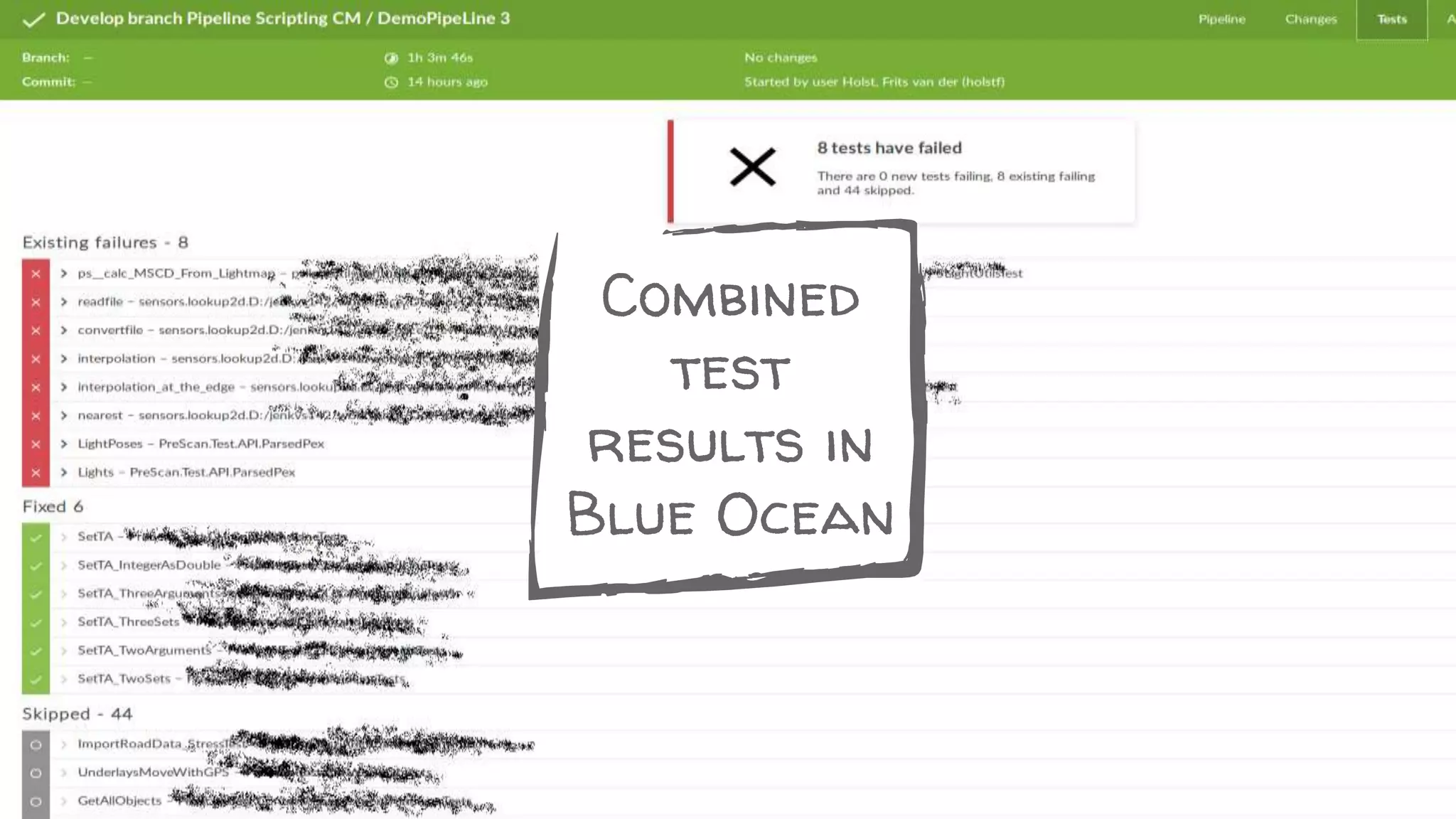

![Process combined test results

pipeline {

post {

always {

unstash 'boosttests'

unstash 'regressiontestresult'

step([$class: 'JUnitResultArchiver', testResults: '**/reports/unittest_results.xml'])

step([$class: 'XUnitBuilder', testTimeMargin: '3000', thresholdMode: 1,

thresholds: [[$class: 'FailedThreshold', failureThreshold: '50', unstableThreshold: '30'],

[$class: 'SkippedThreshold', failureThreshold: '100', unstableThreshold: '50']],

tools: [[$class: 'BoostTestJunitHudsonTestType', deleteOutputFiles: true, failIfNotNew: true,

pattern: "*results.xml", skipNoTestFiles: false, stopProcessingIfError: false] ]])

step([$class: 'AnalysisPublisher', canRunOnFailed: true, healthy: '', unHealthy: ''])

step([$class: 'CoberturaPublisher', coberturaReportFile: '**/reports/coverageResult.xml',

failUnhealthy: false, failUnstable: false, onlyStable: false, zoomCoverageChart: false])

}

success {

emailext attachLog: ,

body: "${JOB_NAME} - Build # ${BUILD_NUMBER} - SUCCESS!!: \n\n Check console output at ….](https://image.slidesharecdn.com/c6w8y7ueqbiwstcvibhl-signature-b44bc92d6851ec2cbb91b0bd2feaef9cd53f6a5e6f090e27d0c8a6e4665fcf72-poli-171205140235/75/Moving-from-Jenkins-1-to-2-declarative-pipeline-adventures-42-2048.jpg)
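As a compact illustration of the same `post` structure, the sketch below publishes JUnit results with the `junit` step (a shorthand for the `JUnitResultArchiver` step on the slide) and sends a success mail with `emailext`. The `Test` stage, the recipient address, the subject line, `attachLog: true` and `allowEmptyResults: true` are assumptions, since the slide leaves the `attachLog` value blank and truncates the mail body. It assumes the JUnit and Email Extension plugins are installed.

```groovy
pipeline {
    agent any

    stages {
        // Placeholder stage standing in for the real test stages.
        stage('Test') {
            steps {
                echo 'tests run here'
            }
        }
    }

    post {
        // Runs whether the build passed or failed.
        always {
            // Publish test results; do not fail if the placeholder stage produced none.
            junit testResults: '**/reports/unittest_results.xml', allowEmptyResults: true
        }
        // Runs only when the build succeeded.
        success {
            emailext(
                attachLog: true,                 // assumed value
                to: 'team@example.com',          // hypothetical recipient
                subject: "${env.JOB_NAME} - Build # ${env.BUILD_NUMBER} - SUCCESS",
                body: "Check console output at ${env.BUILD_URL}"
            )
        }
    }
}
```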