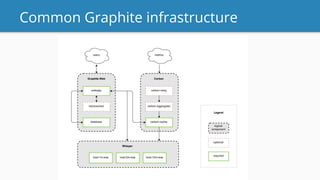

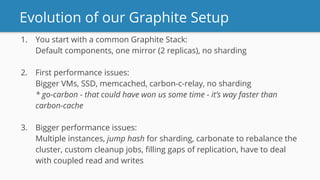

- GoEuro is replacing its Graphite monitoring backend with ClickHouse. The previous Graphite setup needed too much ongoing maintenance and tuning to keep up with the company's scale: 20 million visitors per month, 150 engineers, and 600+ releases per week.

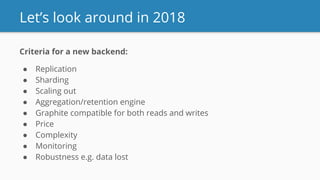

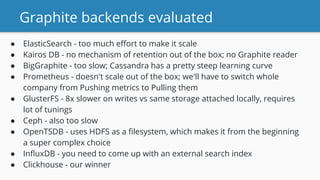

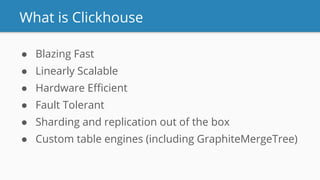

- Key reasons for choosing ClickHouse: built-in replication and sharding, linear scalability, and the GraphiteMergeTree table engine, which provides 100% compatibility with the Graphite query language.
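
The GraphiteMergeTree engine is designed to thin and aggregate (roll up) Graphite data as it ages. The sketch below illustrates that idea only, in plain Python: points older than `age` seconds are collapsed into `precision`-second buckets with an aggregation function. The function name `rollup` and its parameters are hypothetical, chosen for illustration; this is not ClickHouse code or GoEuro's configuration.

```python
from collections import defaultdict
from statistics import mean


def rollup(points, now, age, precision, func=mean):
    """Thin a series of (timestamp, value) points, Graphite-style.

    Points at least `age` seconds old are grouped into `precision`-second
    buckets and reduced with `func`; newer points are kept unchanged.
    All names here are illustrative assumptions.
    """
    recent = []
    buckets = defaultdict(list)
    for ts, value in points:
        if now - ts >= age:
            # Align the timestamp to the start of its bucket.
            buckets[ts - ts % precision].append(value)
        else:
            recent.append((ts, value))
    rolled = [(bucket, func(values)) for bucket, values in buckets.items()]
    return sorted(rolled + recent)


if __name__ == "__main__":
    series = [(0, 1.0), (10, 3.0), (60, 5.0), (3590, 7.0)]
    # Keep the last 10 minutes at full resolution, older data per minute.
    print(rollup(series, now=3600, age=600, precision=60))
```

In ClickHouse itself, this thinning is driven by rollup rules in the server configuration and applied when data parts are merged, so no application code like the above is needed.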

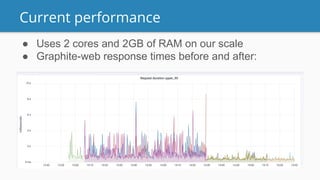

- Downsides of ClickHouse include an initial dependency on ZooKeeper for sharding/replication and slower read queries against sharded data. Even so, the deployment currently uses only 2 CPU cores and 2 GB of RAM to handle GoEuro's monitoring needs.
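
One way to picture why reads against sharded data can be slower: a write goes to a single shard, but a wildcard read has to ask every shard and merge the answers. The toy model below assumes hash-based sharding on the metric name with three in-memory shards; `NUM_SHARDS`, `shard_for`, `write`, and `read` are hypothetical names, and this is not how ClickHouse is implemented internally.

```python
import zlib
from fnmatch import fnmatch

NUM_SHARDS = 3  # assumed shard count, for illustration only
shards = [dict() for _ in range(NUM_SHARDS)]


def shard_for(metric):
    """Pick a shard from a stable hash of the metric name."""
    return zlib.crc32(metric.encode()) % NUM_SHARDS


def write(metric, ts, value):
    """A write touches exactly one shard."""
    shards[shard_for(metric)].setdefault(metric, []).append((ts, value))


def read(pattern):
    """A wildcard read must fan out to every shard and merge the results."""
    result = {}
    for shard in shards:
        for metric, series in shard.items():
            if fnmatch(metric, pattern):
                result[metric] = sorted(series)
    return result


if __name__ == "__main__":
    write("web.a.cpu", 1, 0.5)
    write("web.b.cpu", 1, 0.7)
    write("db.a.cpu", 1, 0.9)
    print(read("web.*.cpu"))
```

The read cost grows with the number of shards, because the slowest shard and the merge step both sit on the query path.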