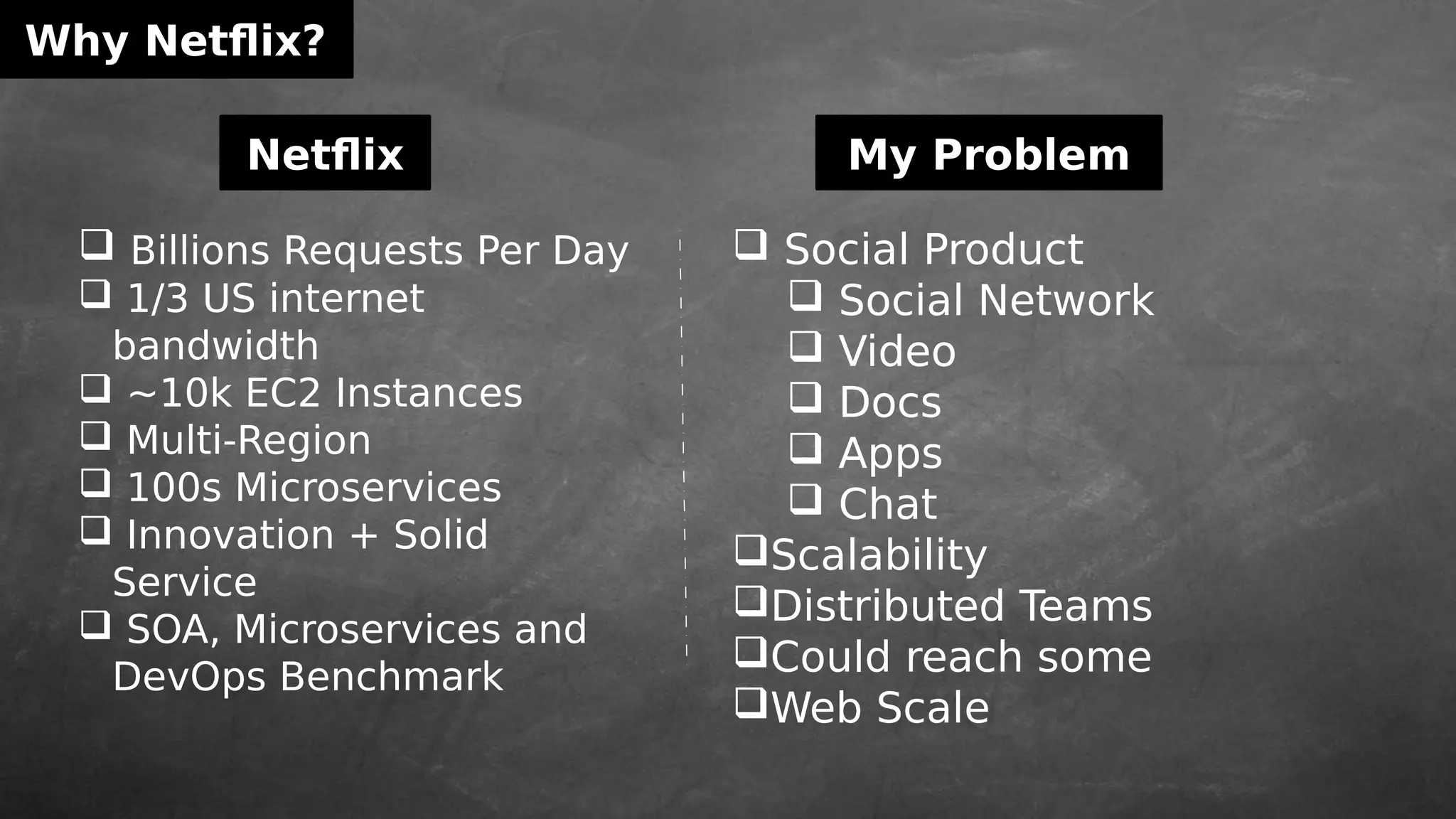

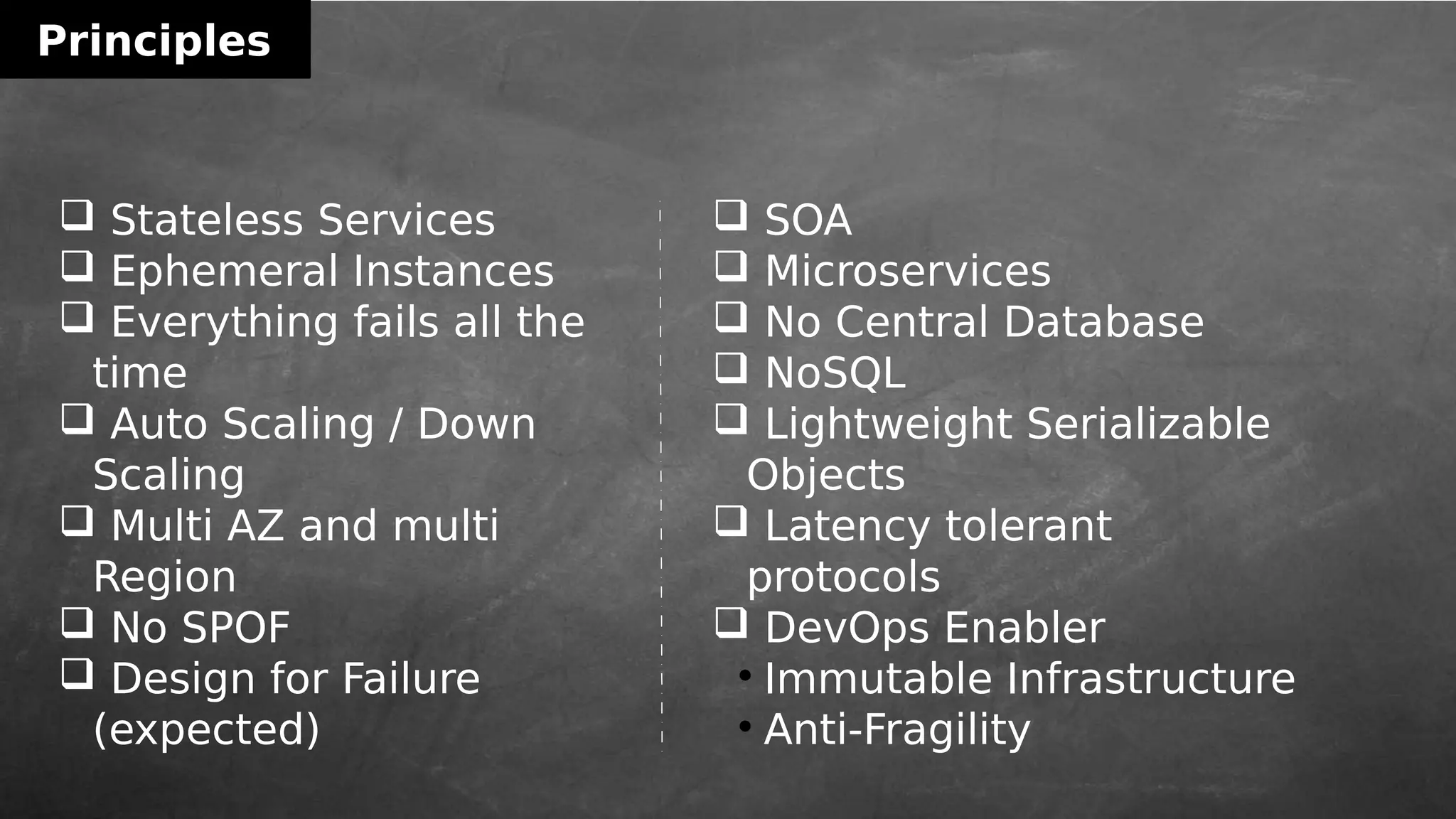

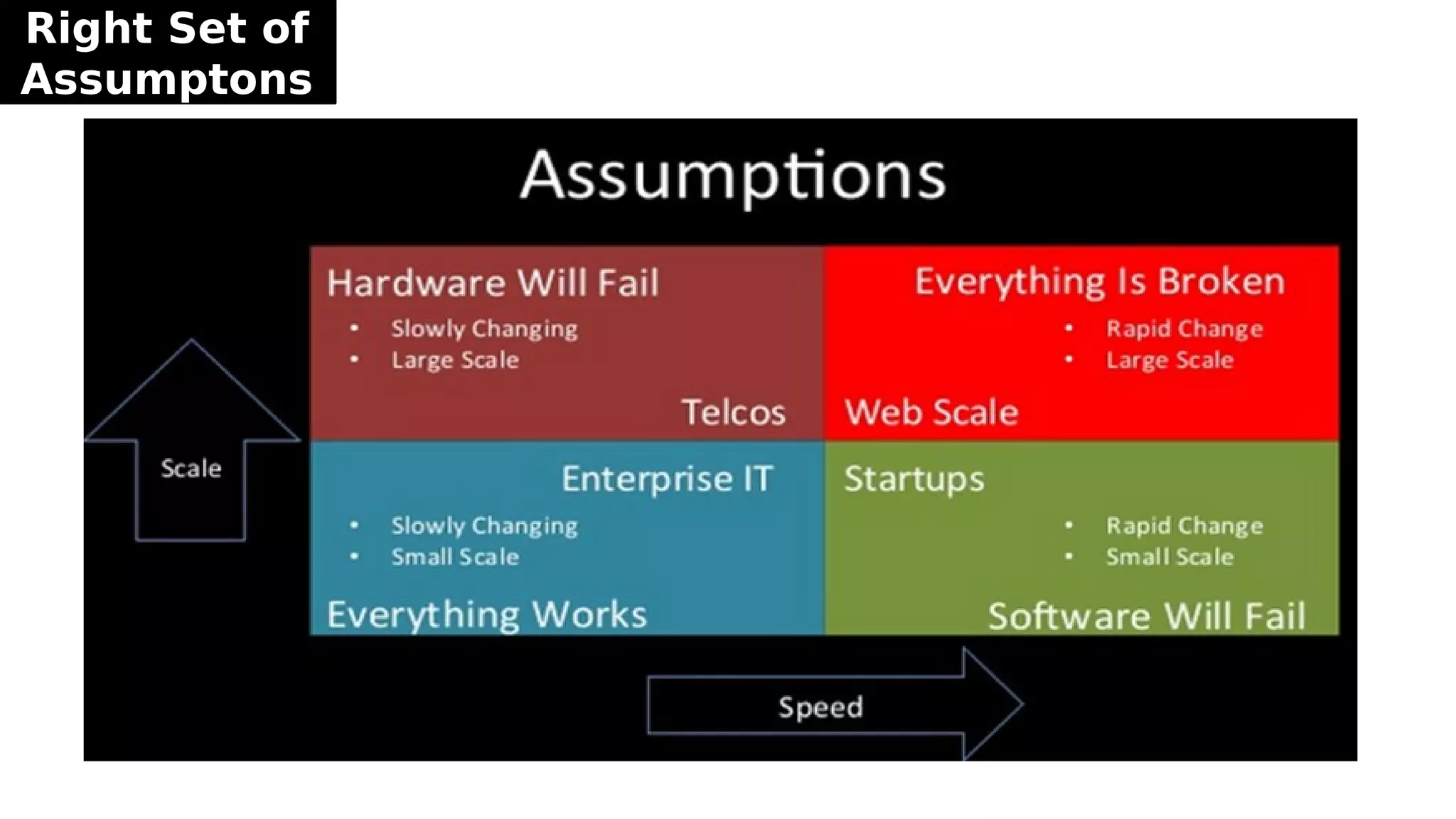

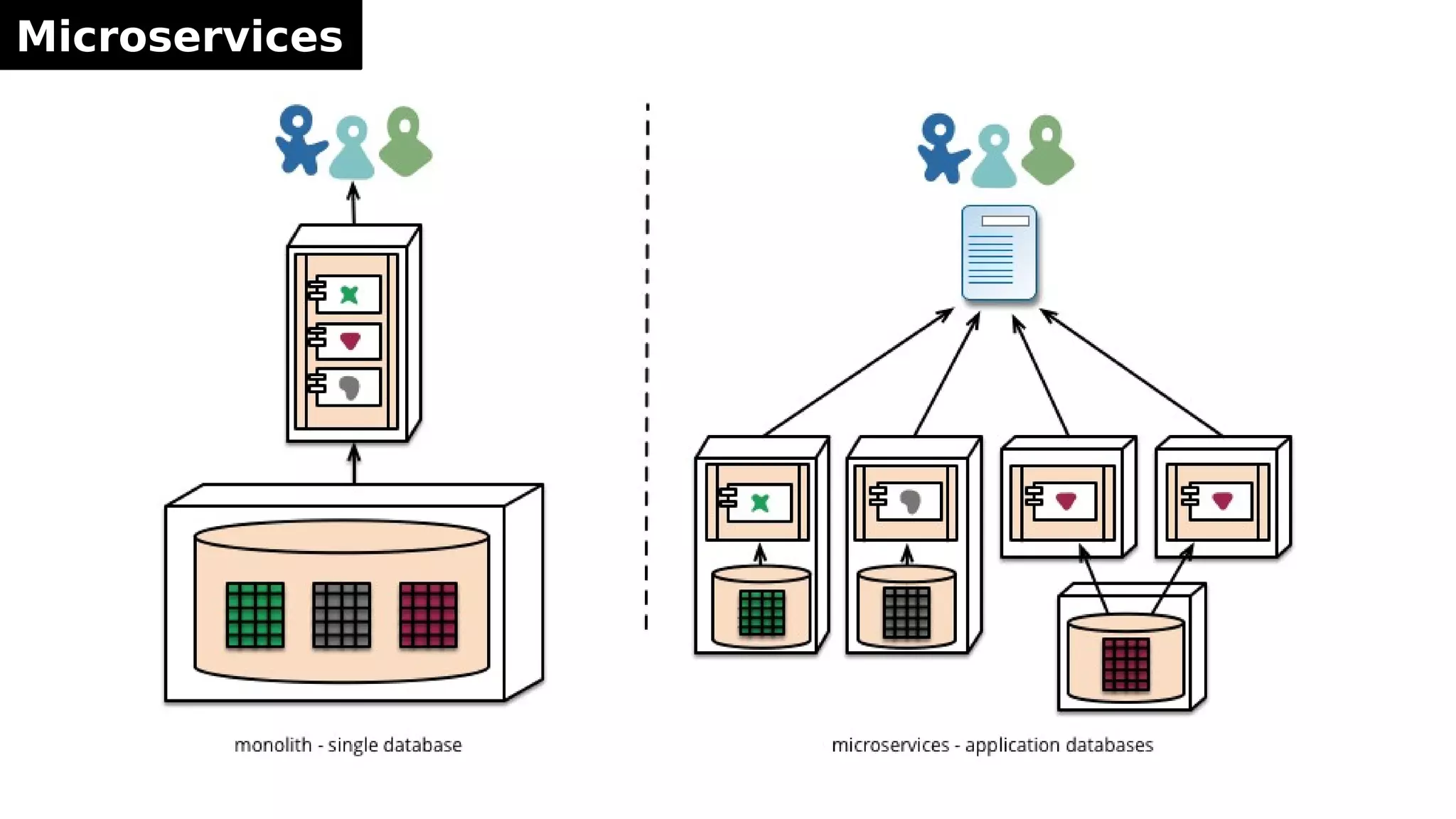

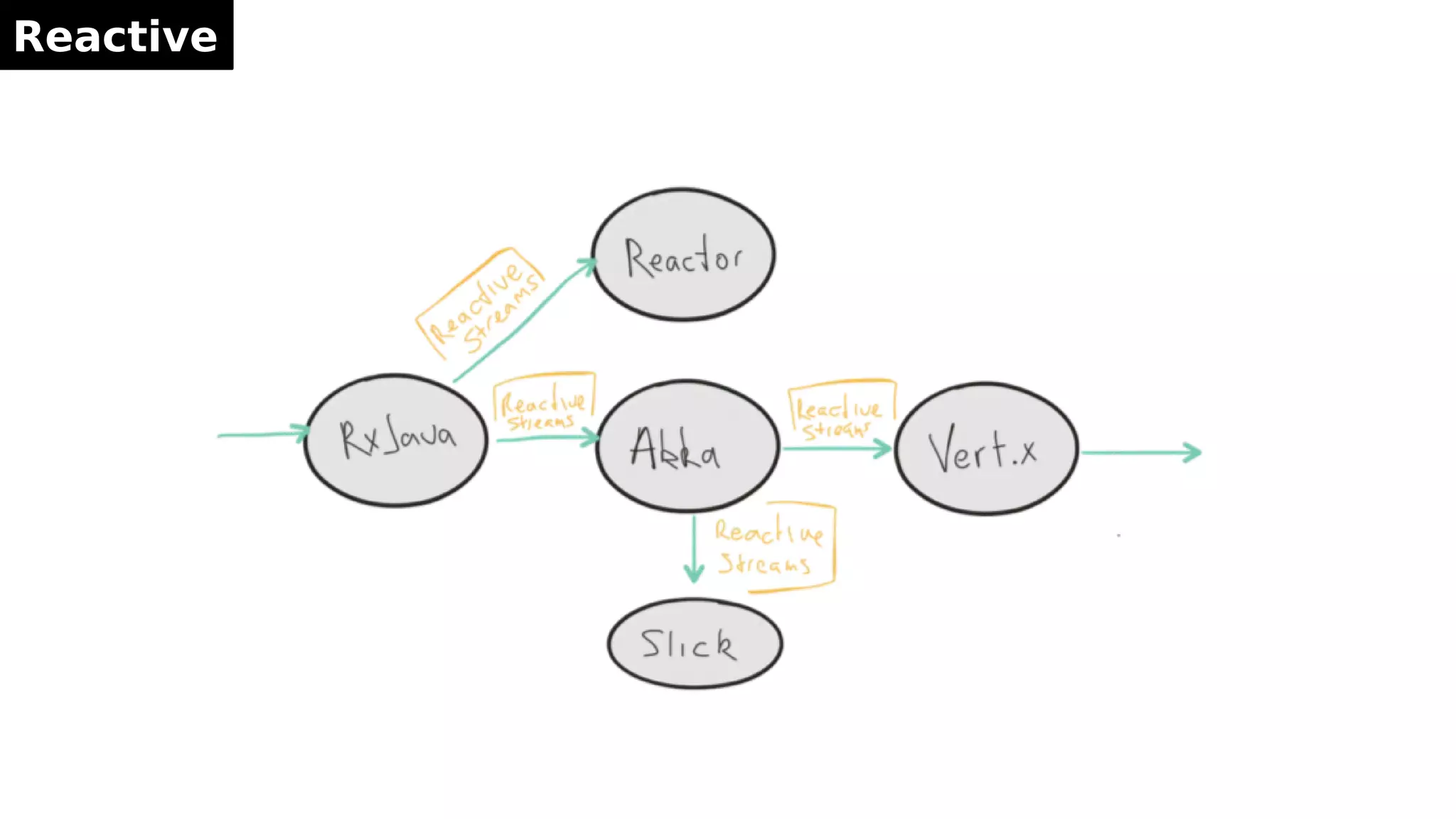

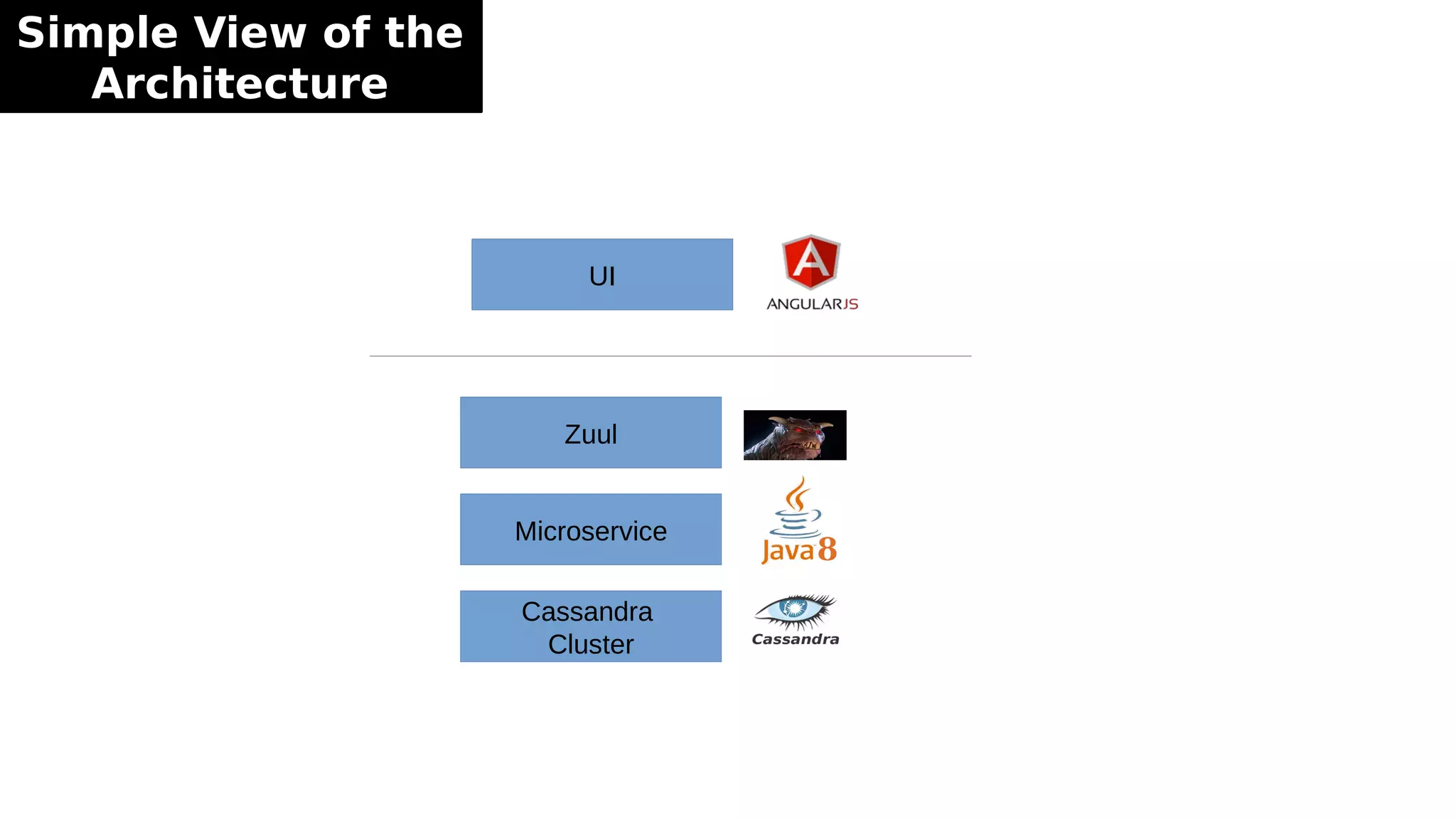

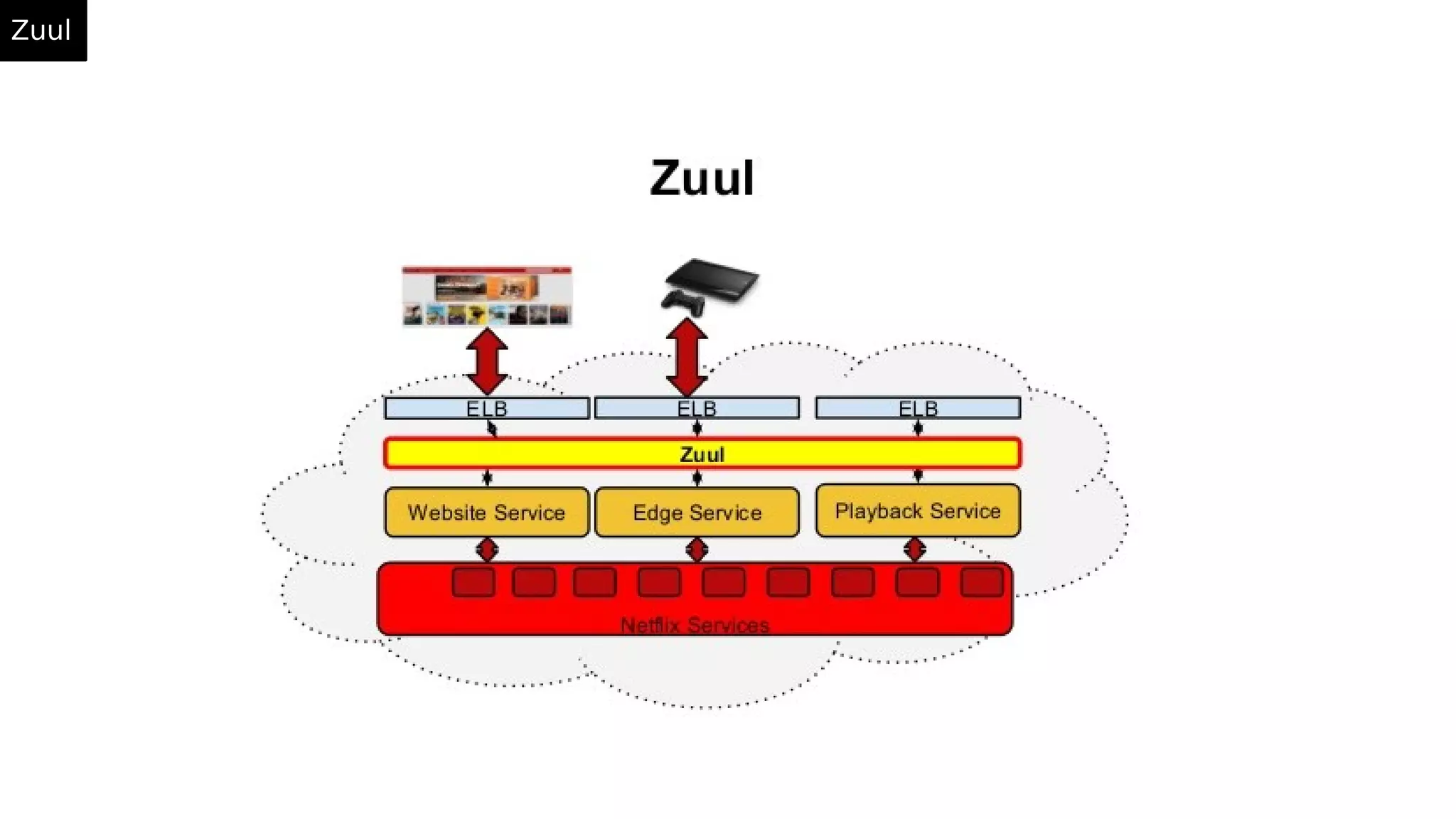

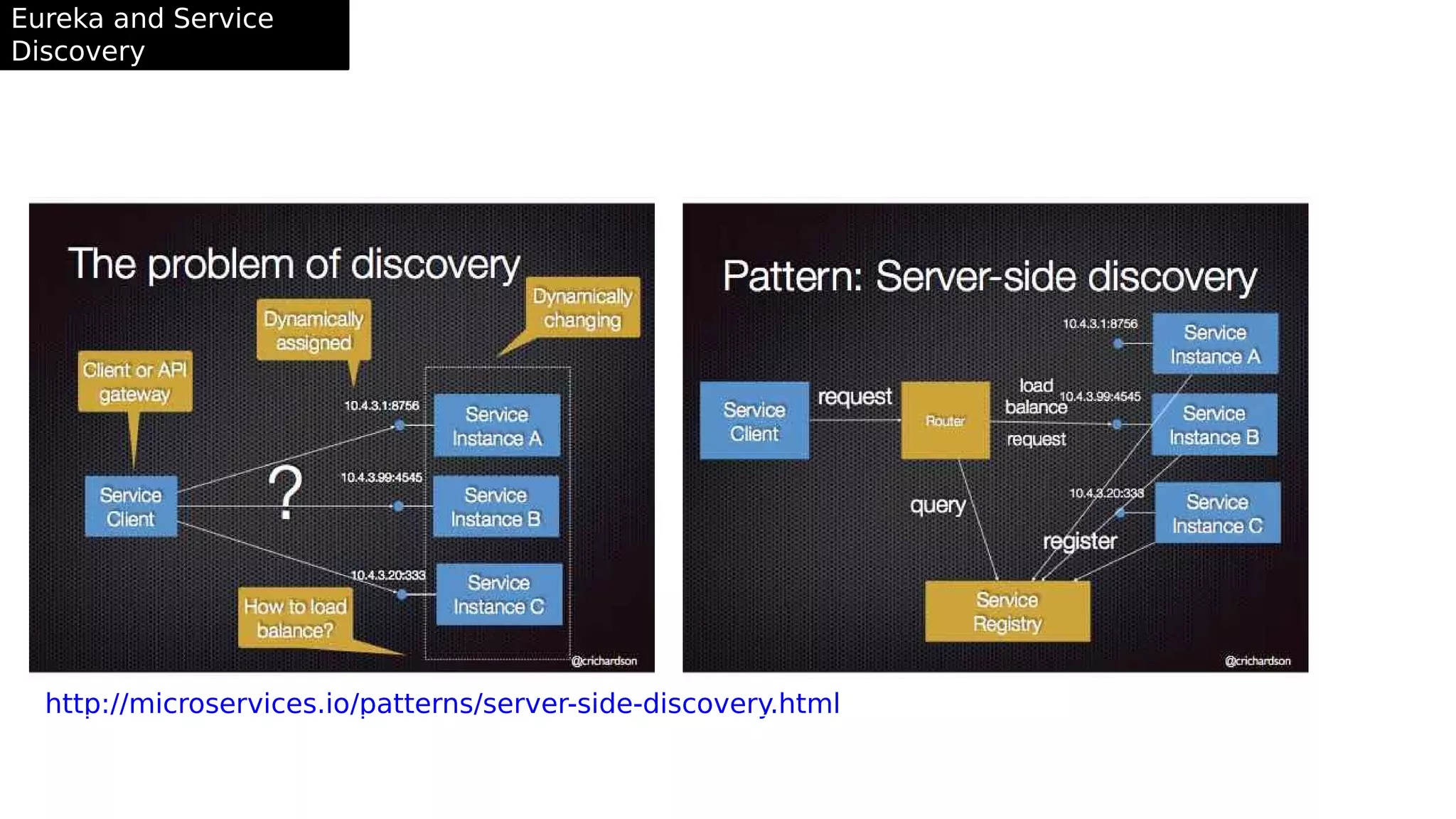

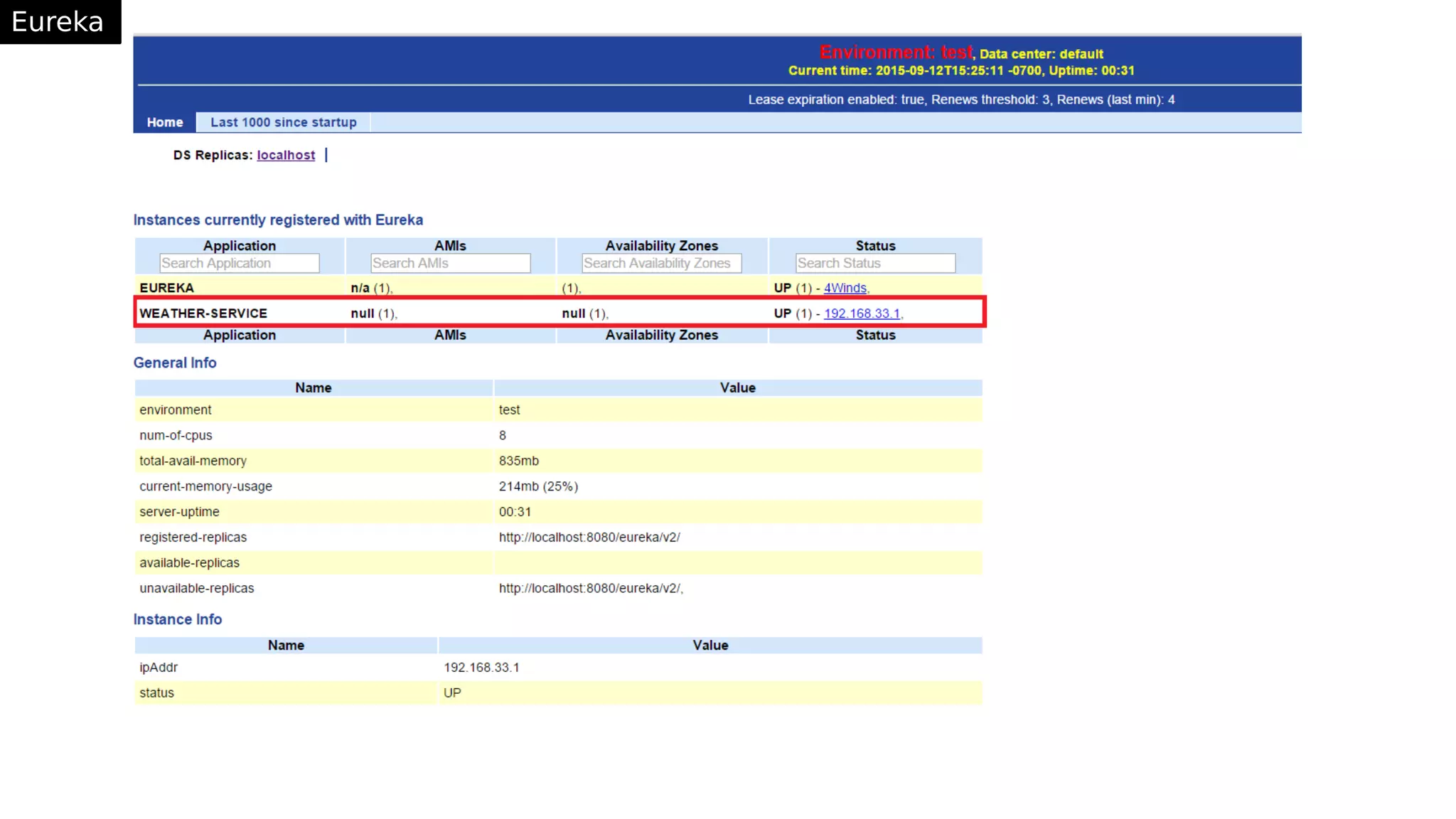

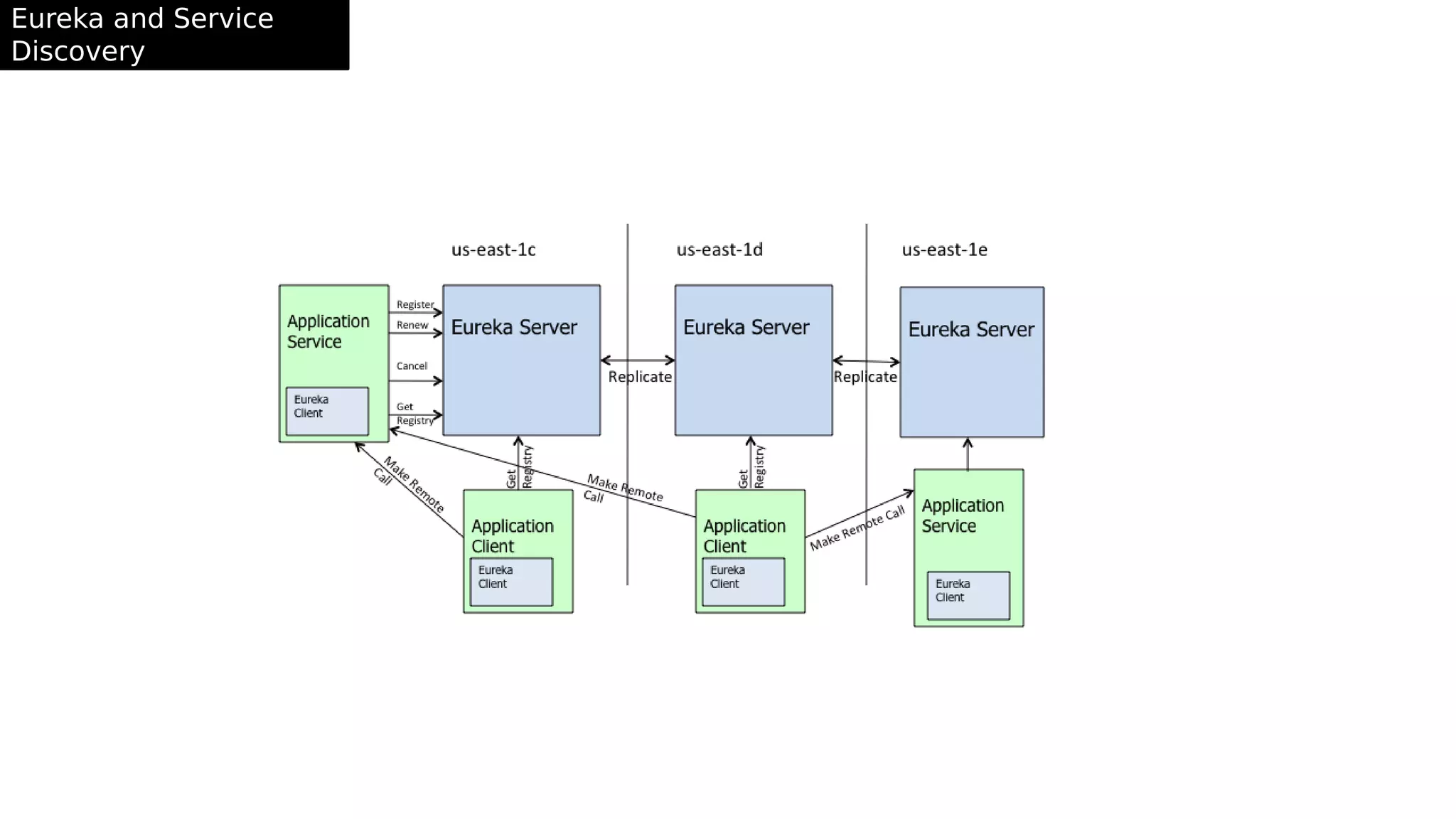

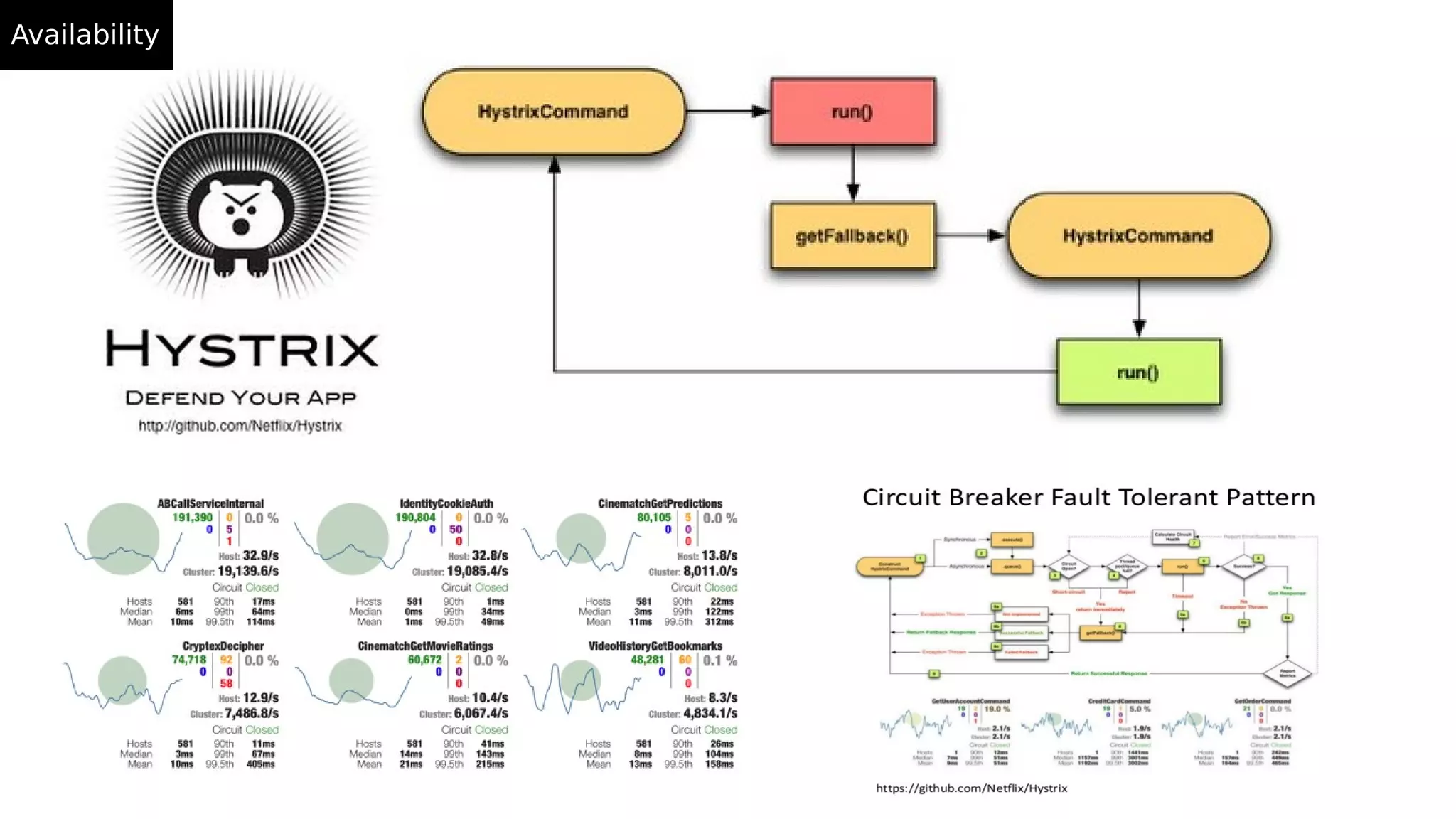

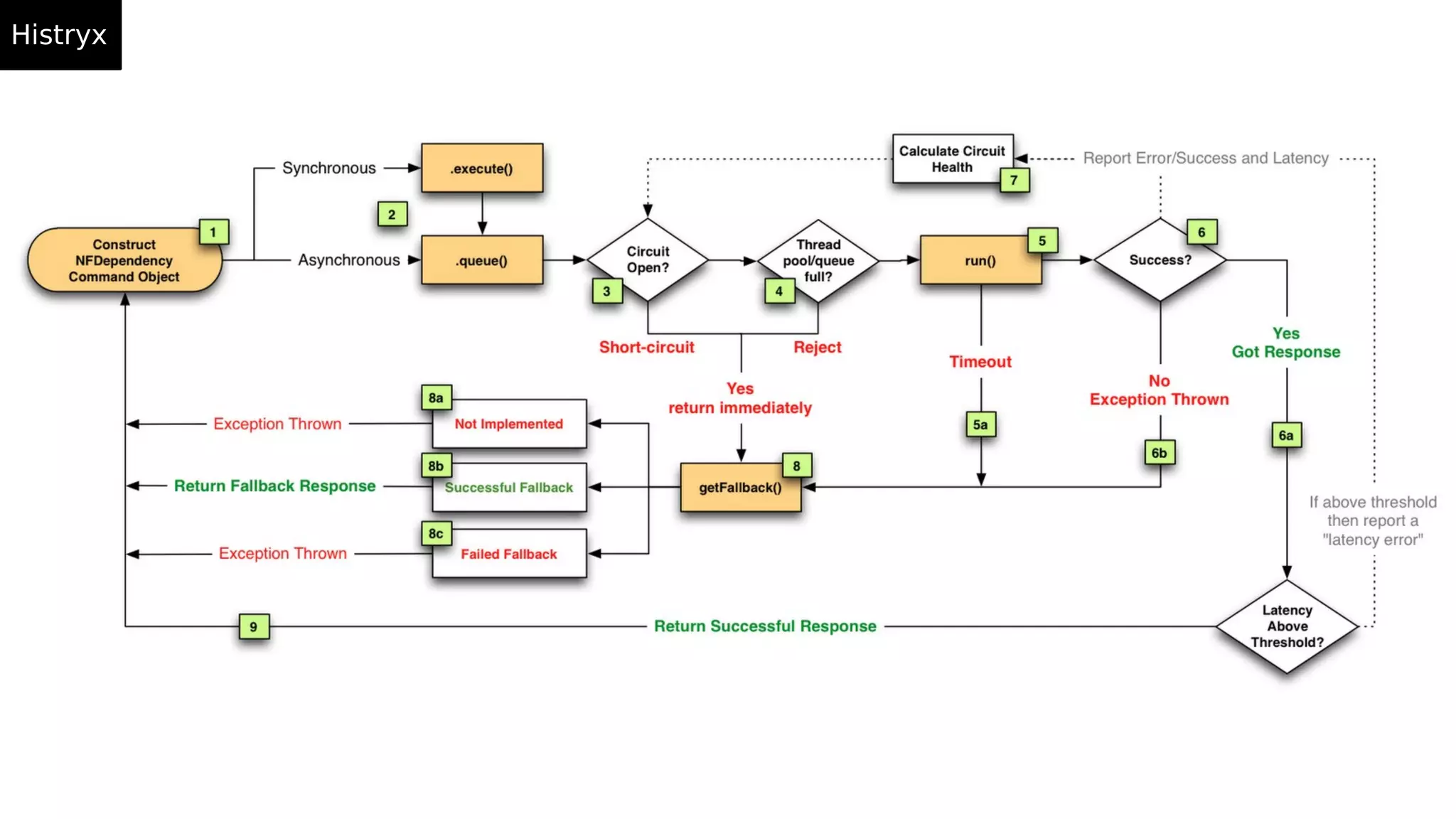

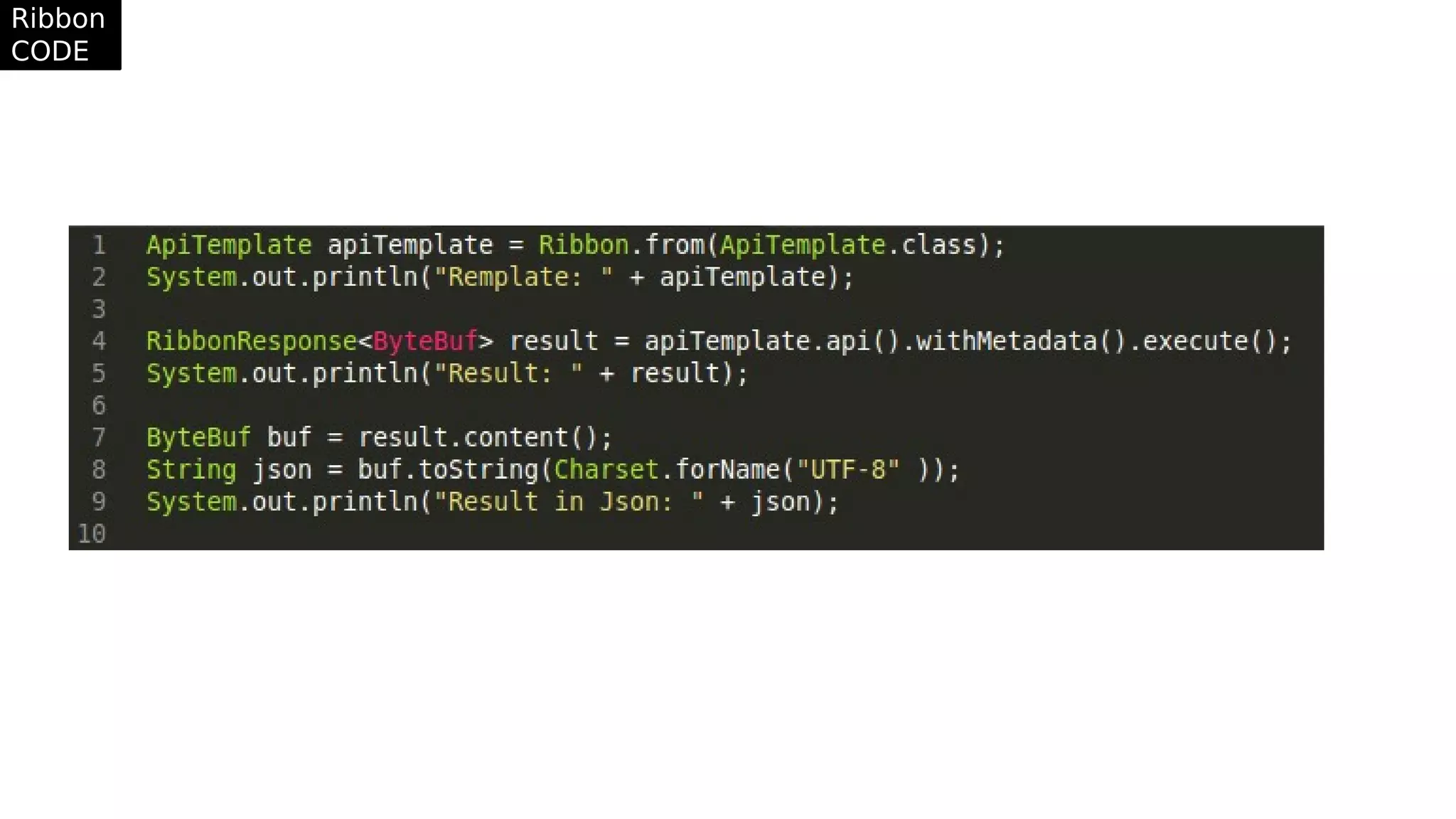

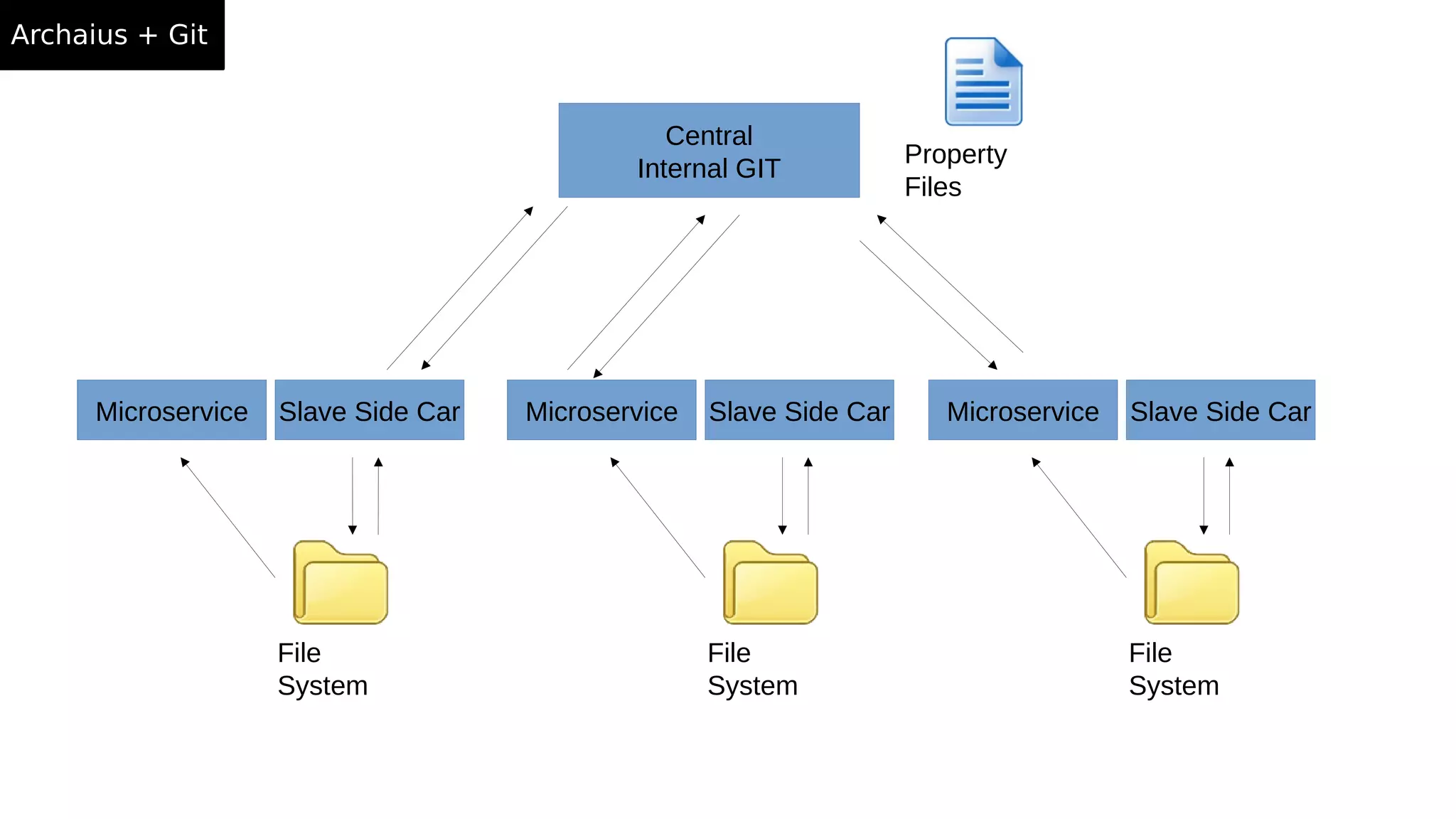

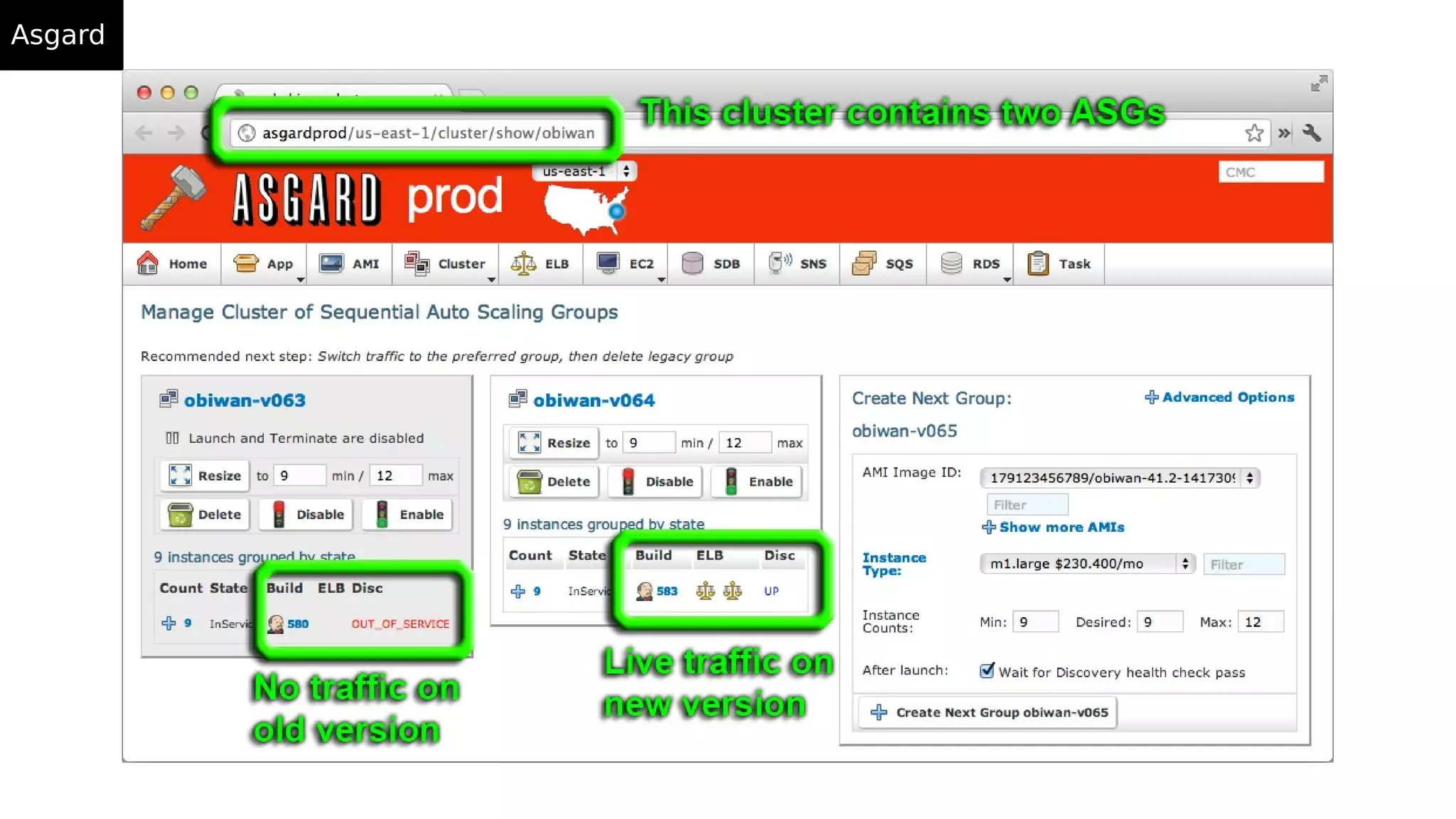

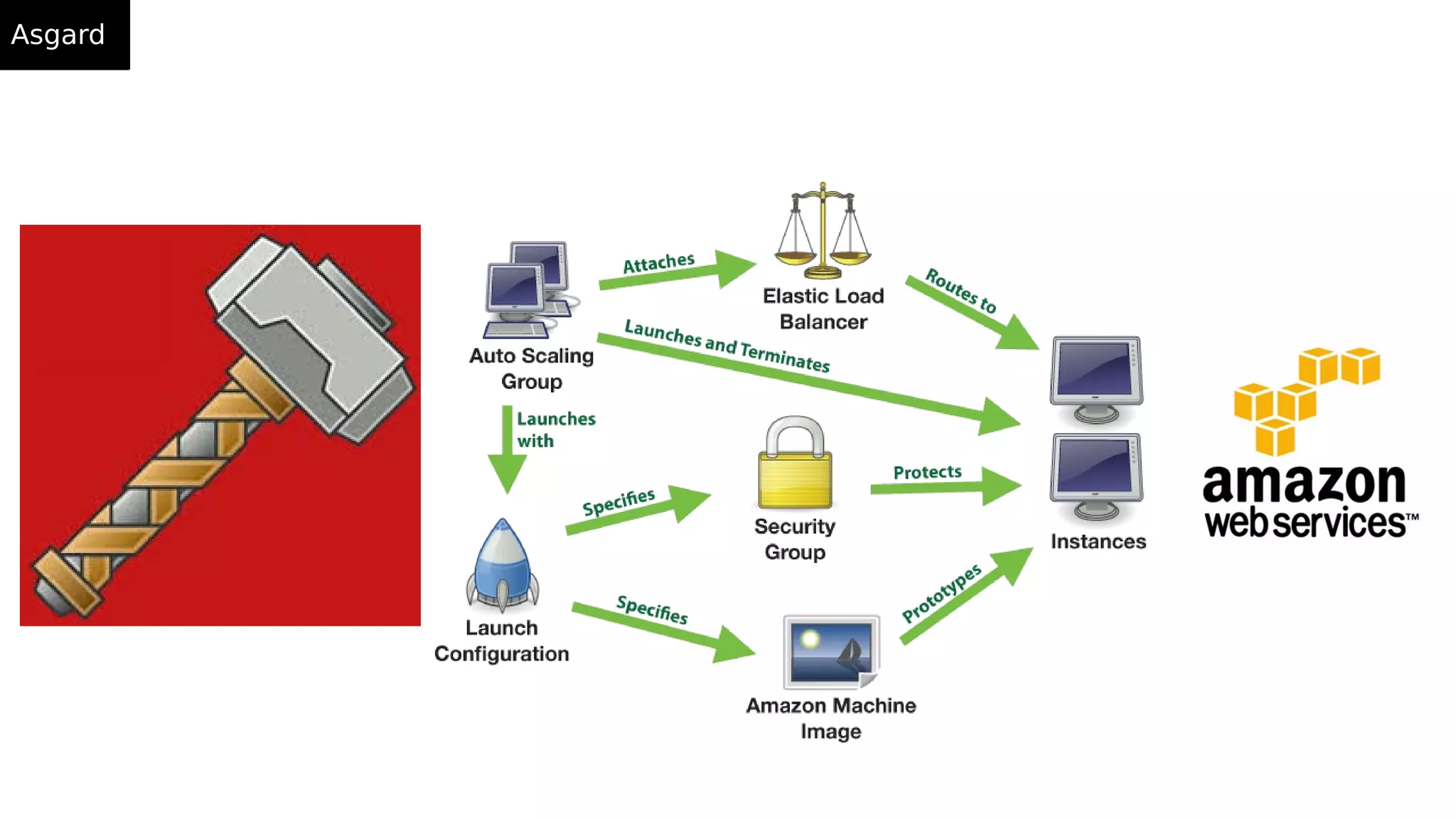

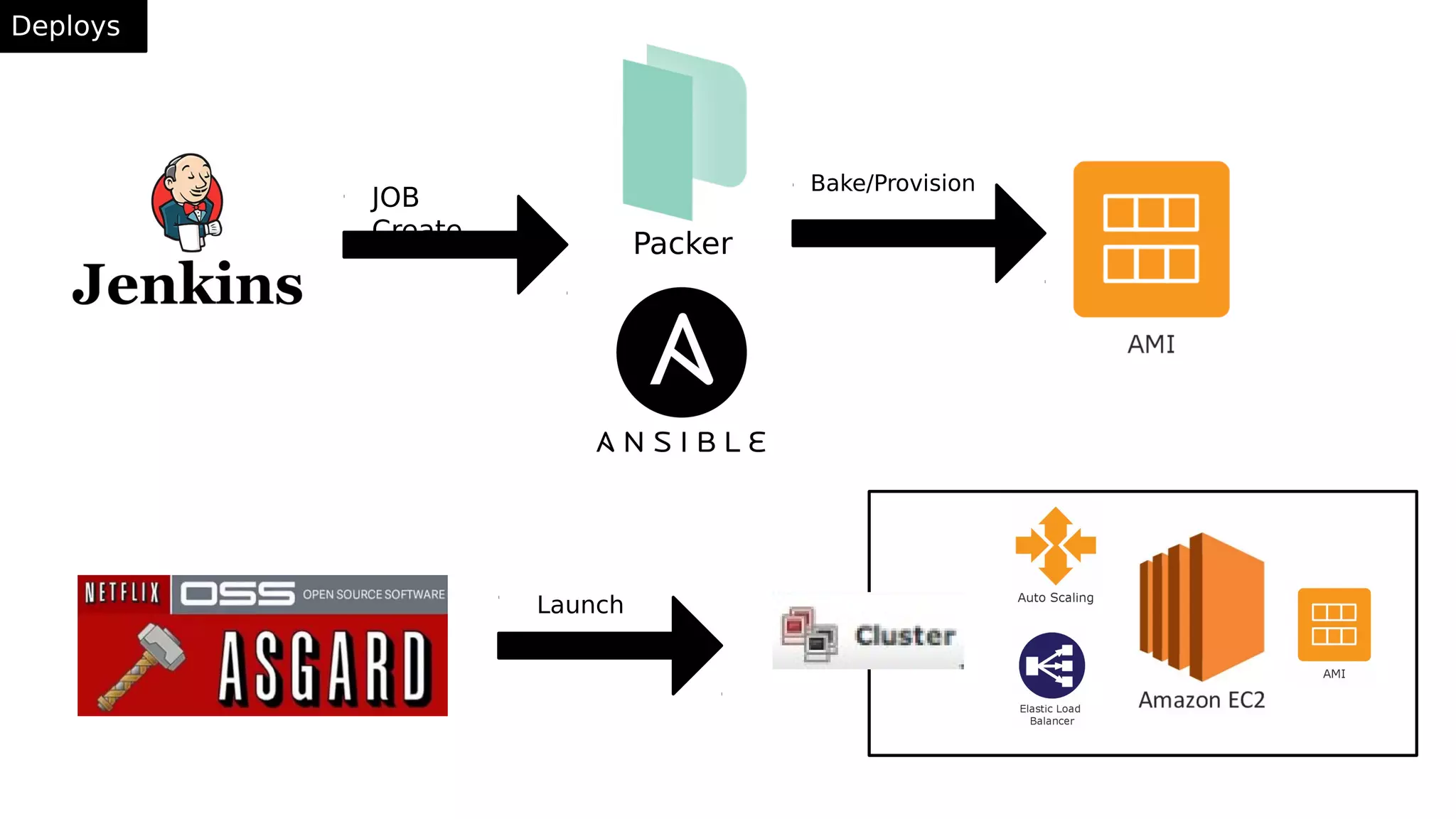

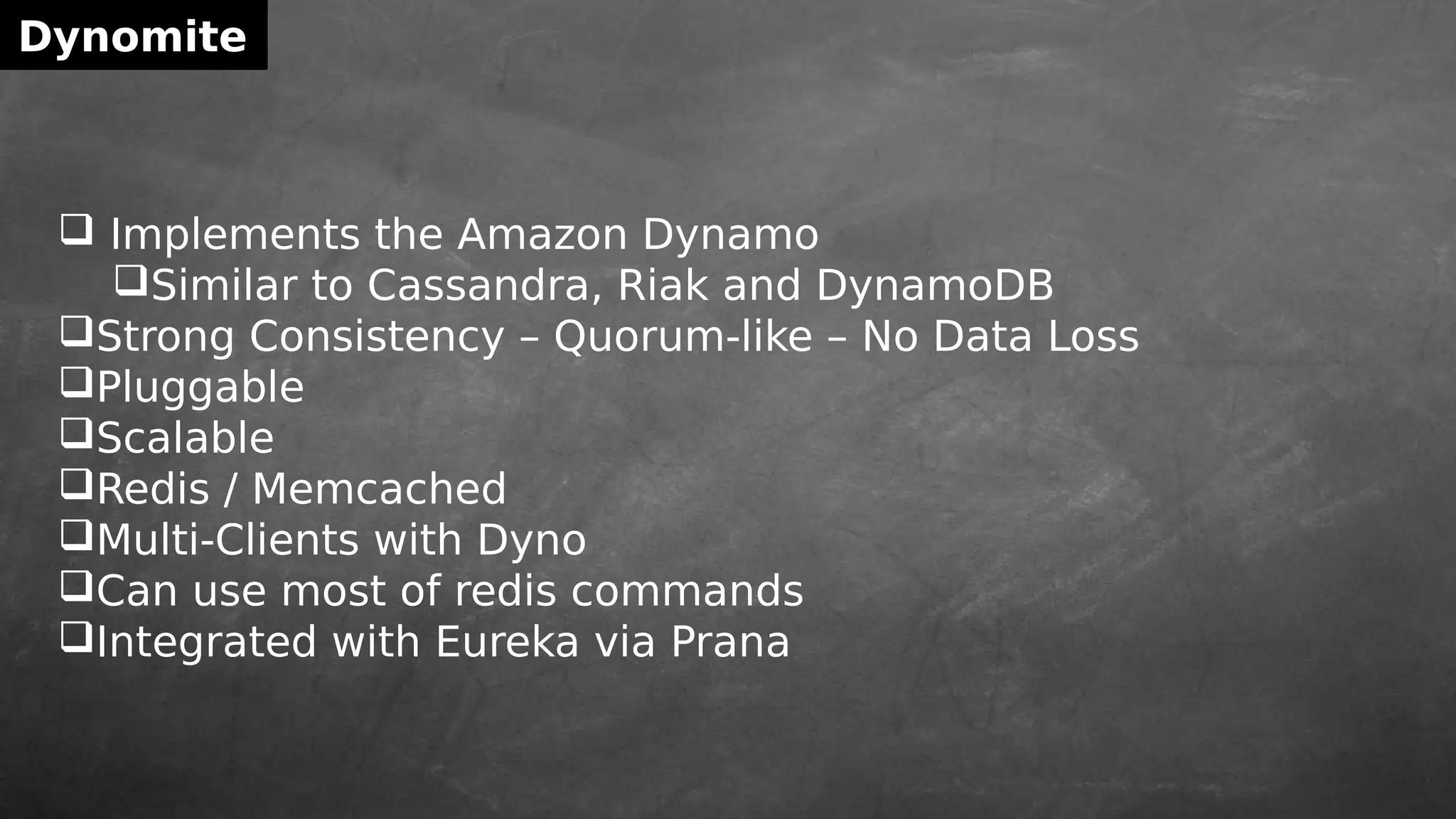

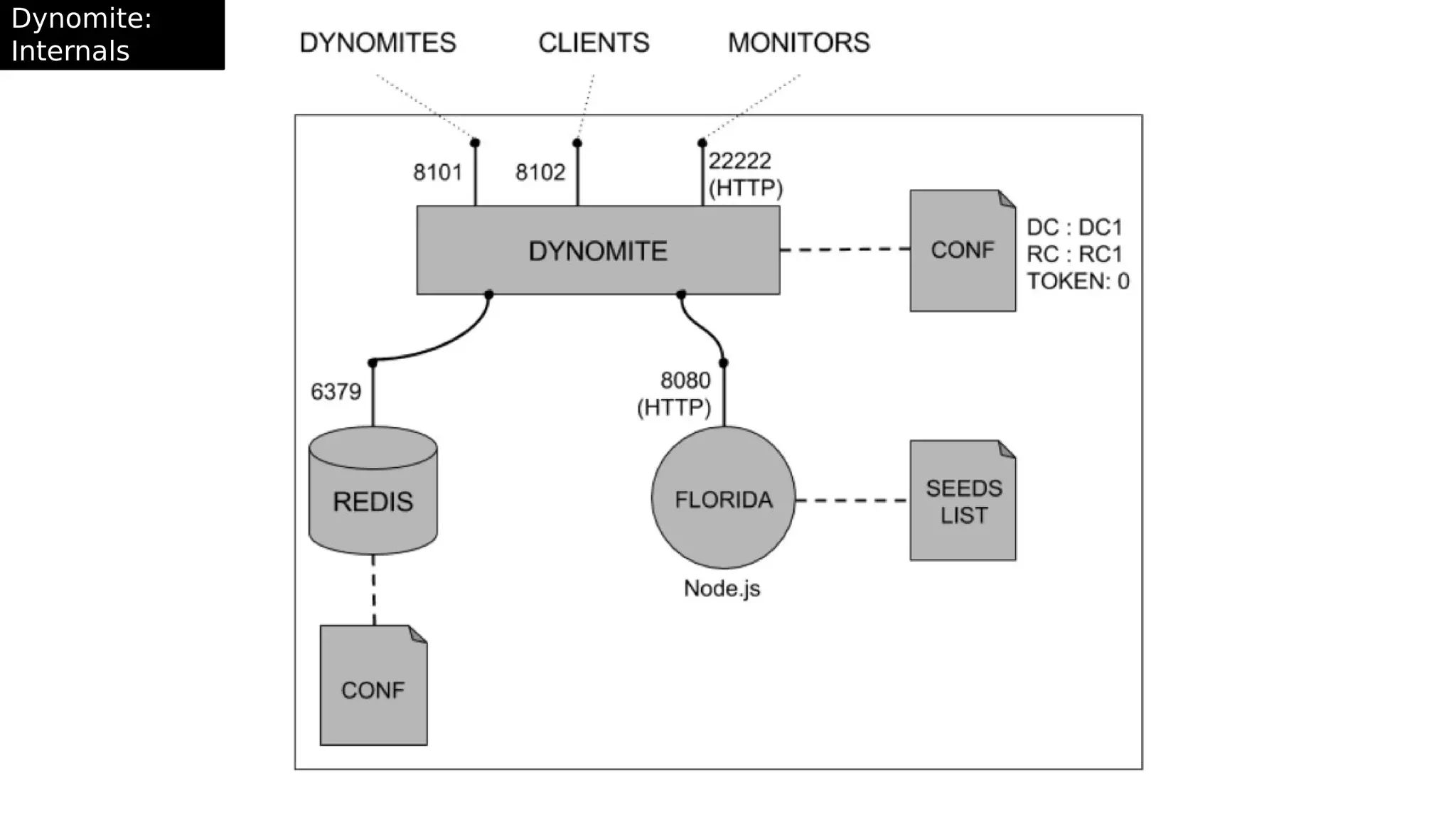

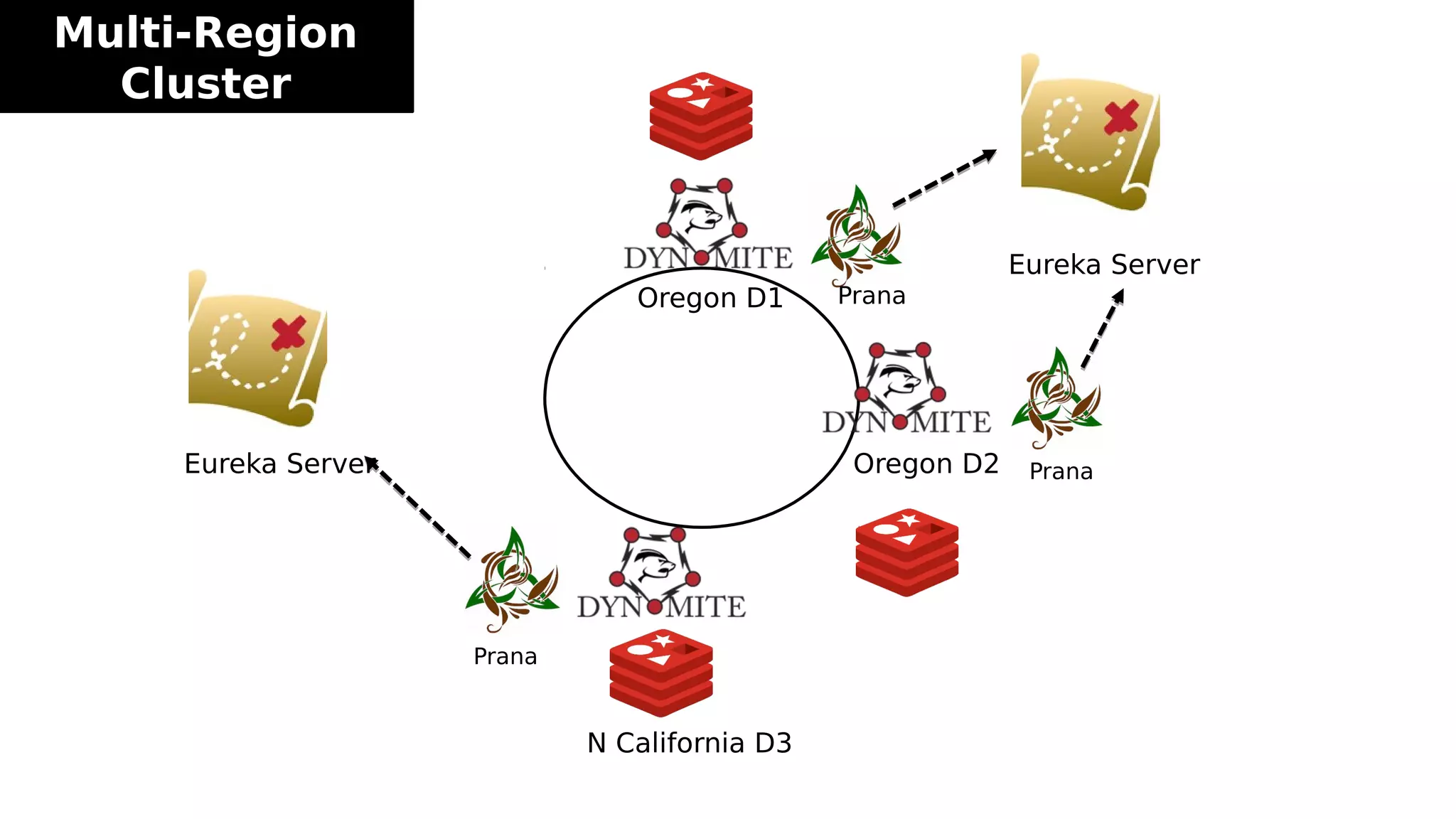

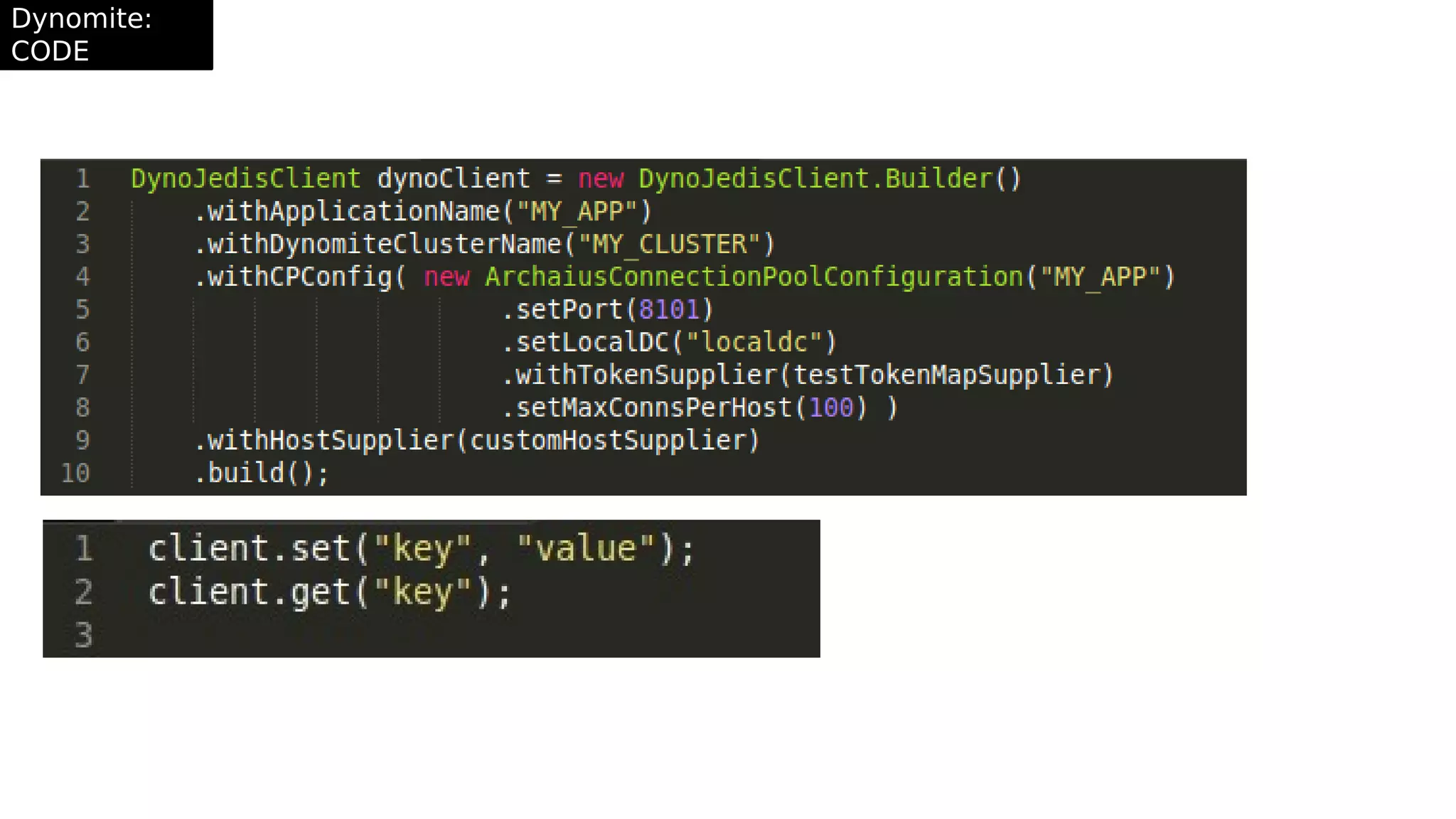

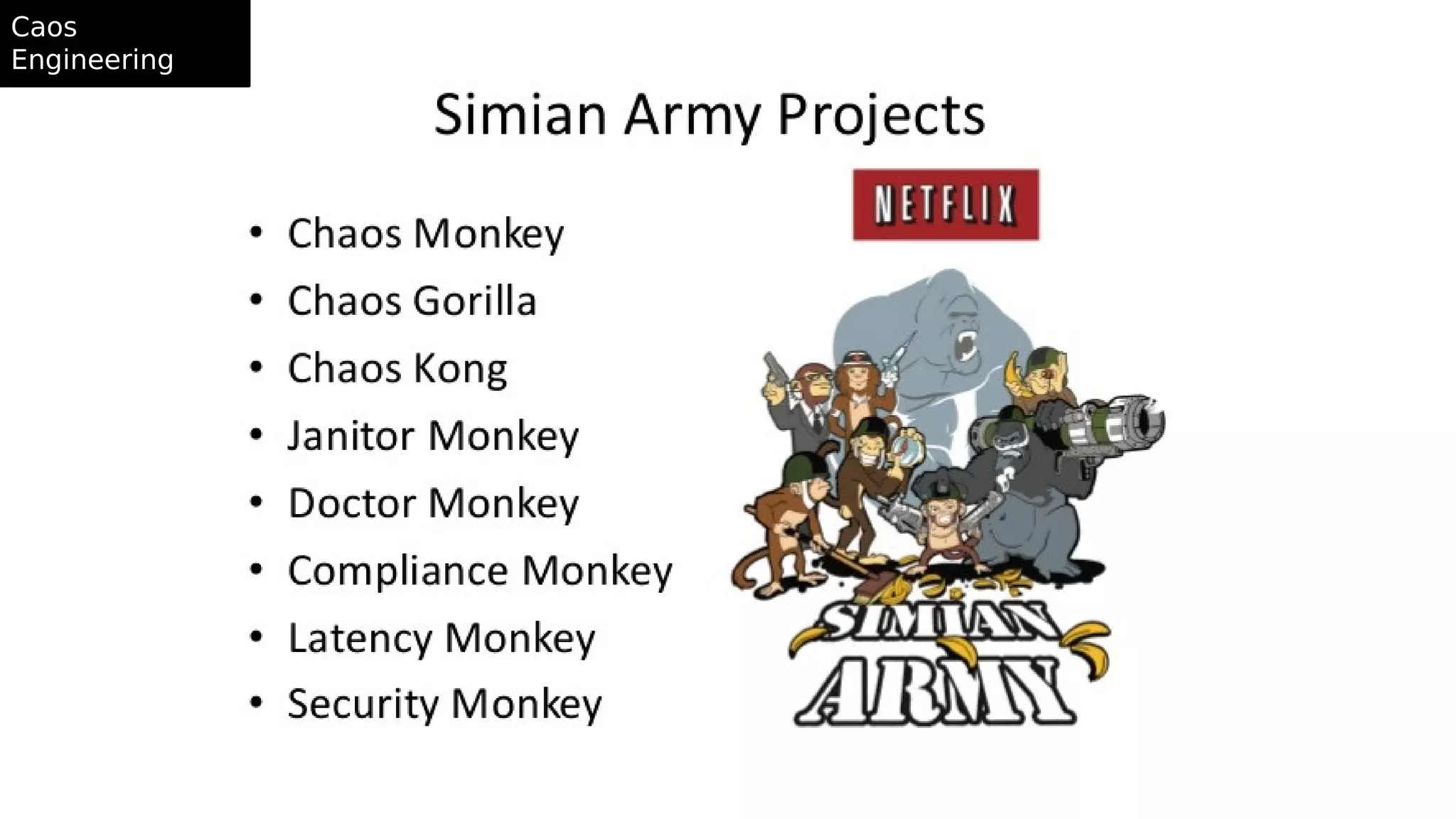

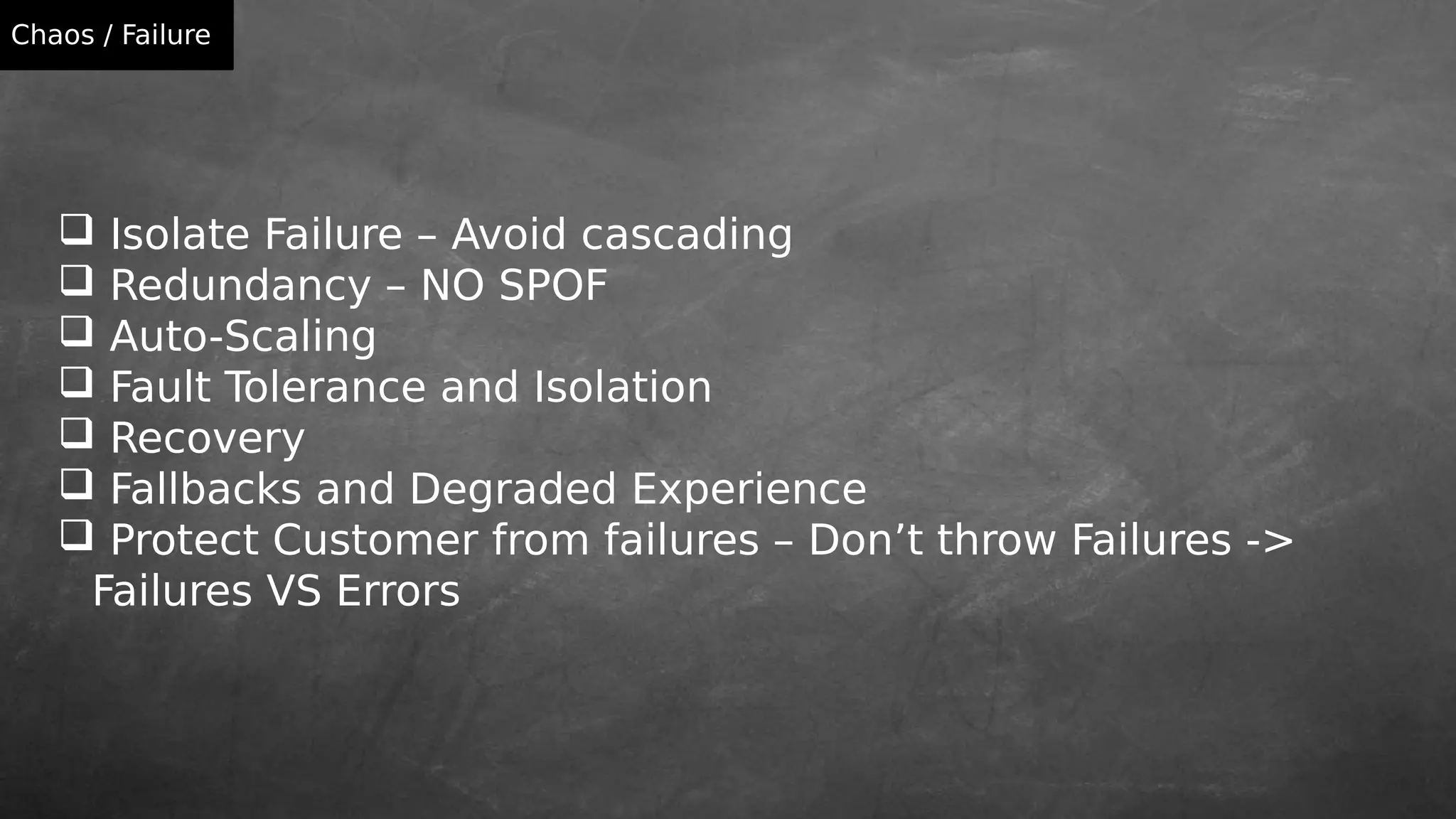

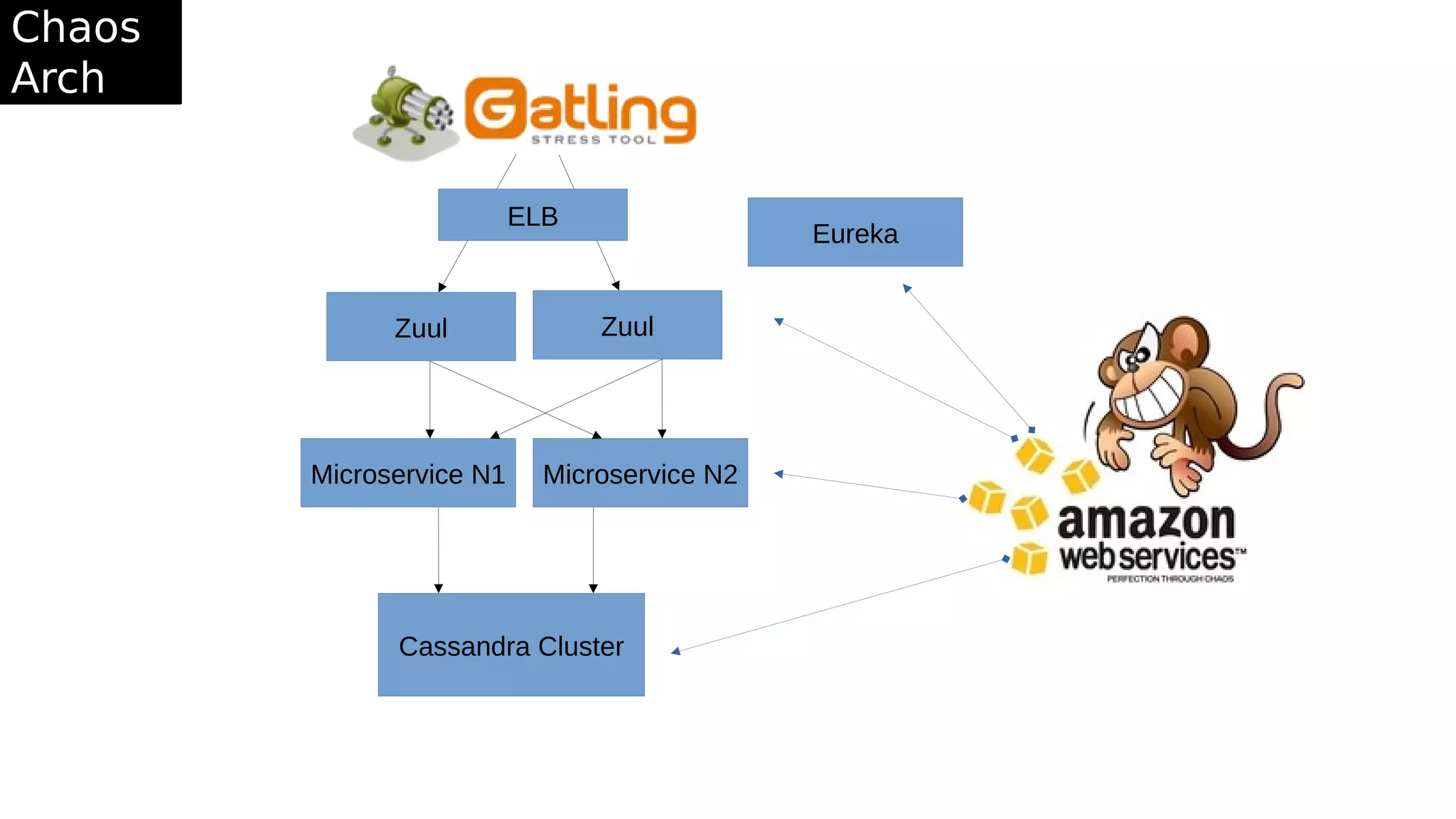

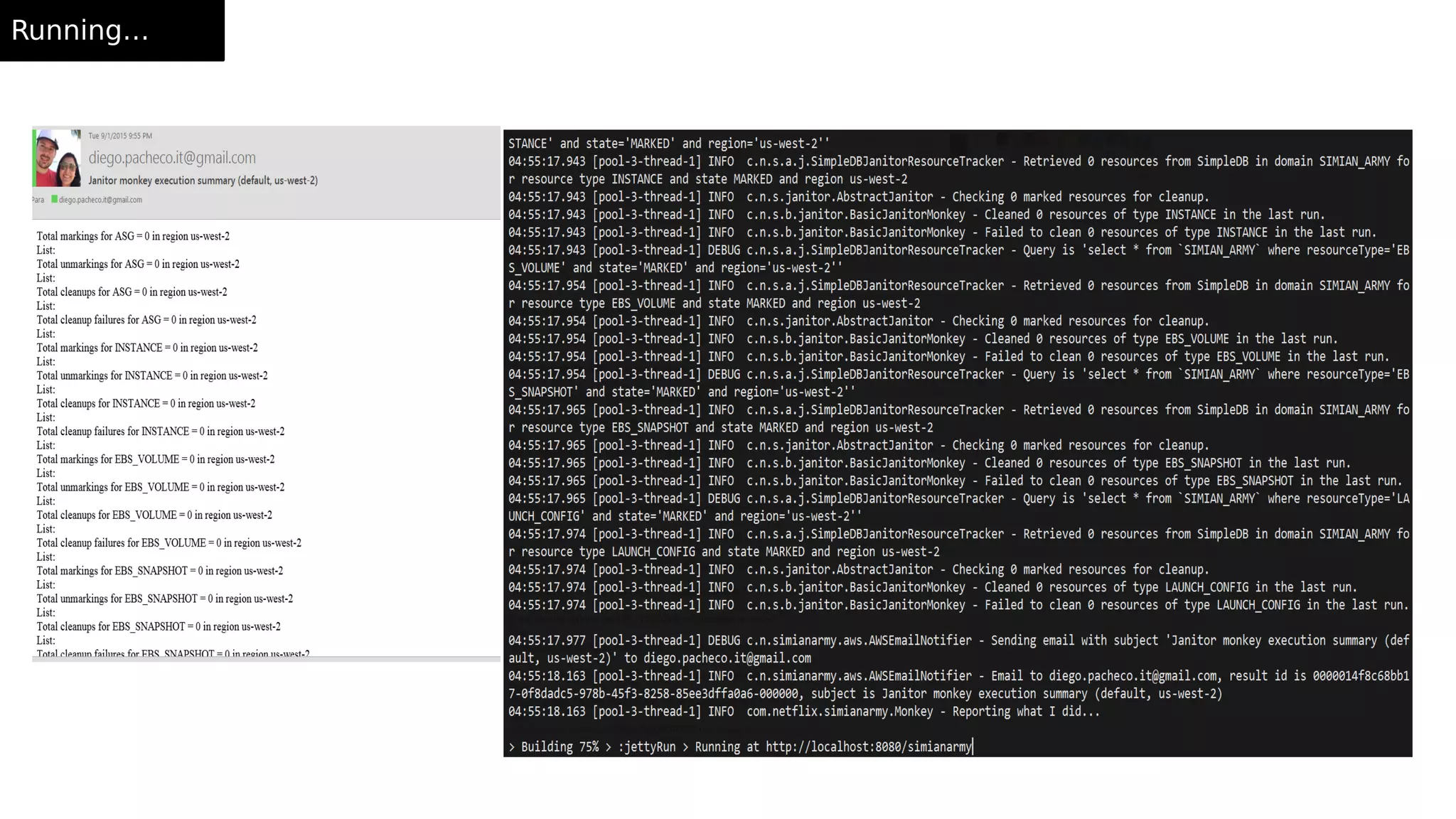

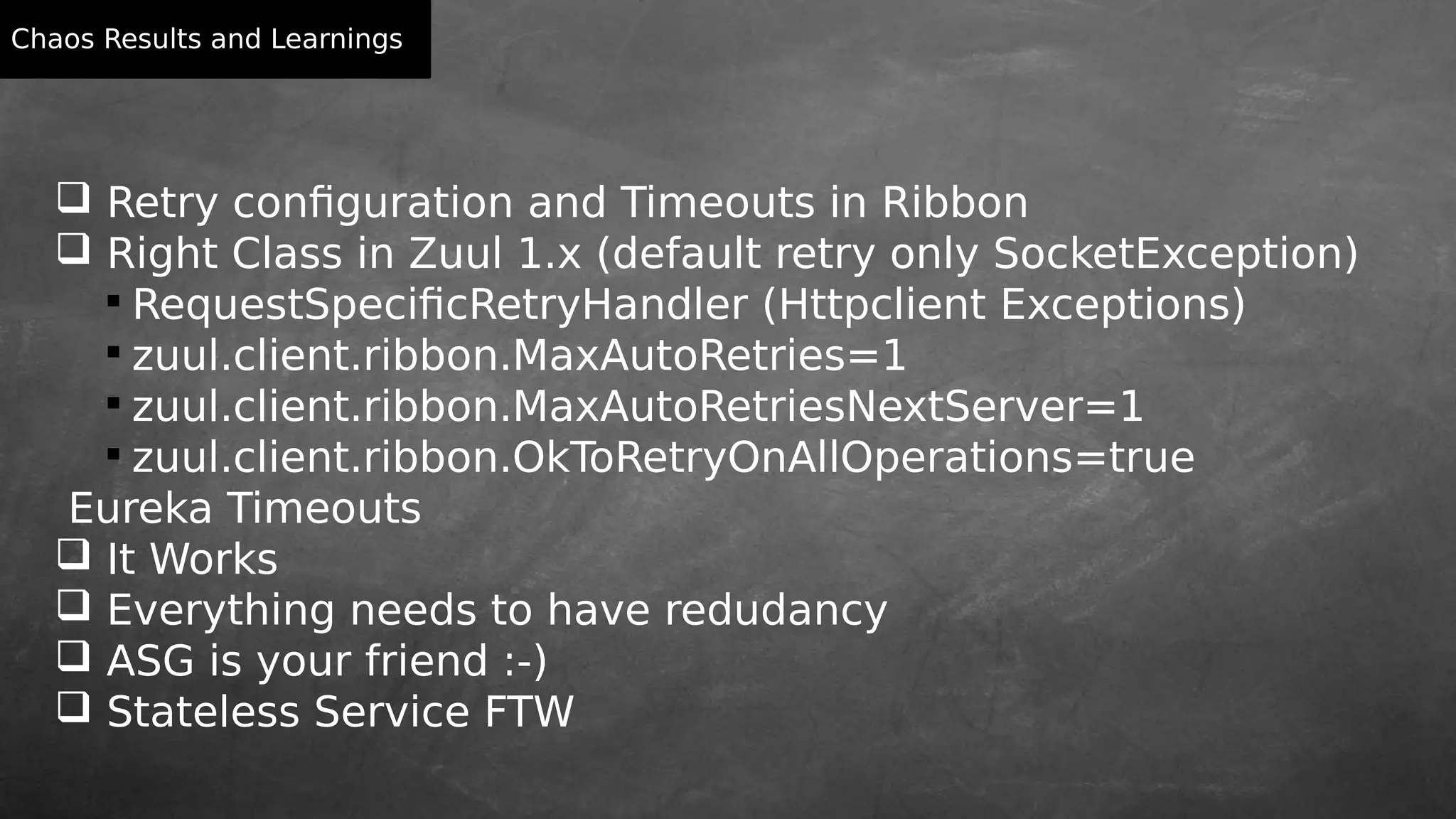

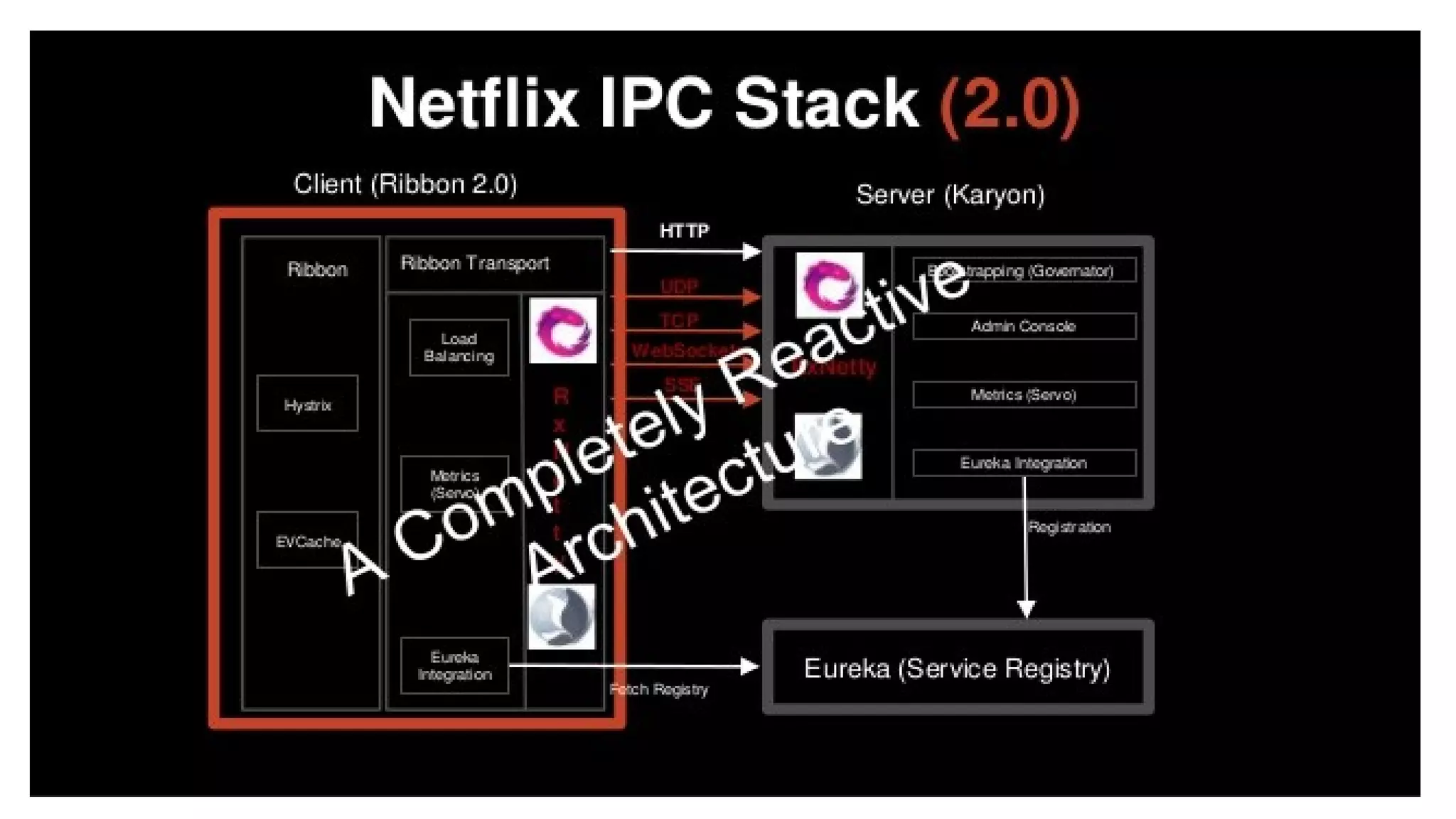

This document discusses running Netflix's microservices stack on AWS. It describes Netflix's architecture, in which hundreds of microservices deployed across multiple regions handle billions of requests per day. It outlines the principles behind the stack: stateless services, auto-scaling, no single points of failure, and designing for failure. It then explains key technologies in Netflix's open source stack, including Eureka for service discovery, Ribbon for client-side load balancing, Hystrix for latency and fault tolerance, RxJava for reactive programming, and Dynomite for distributed caching. It also covers chaos engineering practices such as fault-injection testing.
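The sketch below shows how three of the roles named above fit together in a single call path. It is a minimal, self-contained Python illustration, not Netflix's code and not the Eureka, Ribbon, or Hystrix APIs. `ServiceRegistry`, `RoundRobinBalancer`, and `CircuitBreaker` are hypothetical classes that imitate those roles: registering and looking up instances, choosing an instance on the client side, and failing fast to a fallback once a dependency keeps failing. The service name, addresses, thresholds, and timeout are illustrative assumptions. The usage section injects a fault (a call that always raises) and drives the breaker with a fake clock.

```python
import itertools
import time
from typing import Callable, Dict, List, Optional, TypeVar

T = TypeVar("T")


class ServiceRegistry:
    """Hypothetical in-memory registry playing Eureka's role: instances
    register under an application name and clients look them up."""

    def __init__(self) -> None:
        self._instances: Dict[str, List[str]] = {}

    def register(self, app: str, address: str) -> None:
        self._instances.setdefault(app, []).append(address)

    def lookup(self, app: str) -> List[str]:
        return list(self._instances.get(app, []))


class RoundRobinBalancer:
    """Client-side round-robin choice among registered instances,
    the kind of decision Ribbon makes inside the calling service."""

    def __init__(self, registry: ServiceRegistry, app: str) -> None:
        self._registry = registry
        self._app = app
        self._counter = itertools.count()

    def choose(self) -> str:
        instances = self._registry.lookup(self._app)
        if not instances:
            raise LookupError(f"no instances registered for {self._app!r}")
        return instances[next(self._counter) % len(instances)]


class CircuitBreaker:
    """Hystrix-style breaker (hypothetical): after `failure_threshold`
    consecutive failures the circuit opens and calls go straight to the
    fallback; once `reset_timeout` seconds pass, one trial call goes through."""

    def __init__(self, failure_threshold: int = 3, reset_timeout: float = 5.0,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.failure_threshold = failure_threshold
        self.reset_timeout = reset_timeout
        self._clock = clock
        self._failures = 0
        self._opened_at: Optional[float] = None

    def is_open(self) -> bool:
        if self._opened_at is None:
            return False
        # Still inside the cool-down window: keep failing fast.
        return self._clock() - self._opened_at < self.reset_timeout

    def call(self, fn: Callable[[], T], fallback: Callable[[], T]) -> T:
        if self.is_open():
            return fallback()
        try:
            result = fn()
        except Exception:
            self._failures += 1
            if self._failures >= self.failure_threshold:
                self._opened_at = self._clock()  # open (or re-open) the circuit
            return fallback()
        # A success closes the circuit and clears the failure count.
        self._failures = 0
        self._opened_at = None
        return result


# Usage: two registered instances, one failing dependency, a fake clock.
registry = ServiceRegistry()
registry.register("recommendations", "10.0.0.1:8080")
registry.register("recommendations", "10.0.0.2:8080")
balancer = RoundRobinBalancer(registry, "recommendations")
picks = [balancer.choose() for _ in range(3)]

now = [0.0]
breaker = CircuitBreaker(failure_threshold=2, reset_timeout=10.0,
                         clock=lambda: now[0])

def failing_call() -> str:
    raise ConnectionError("injected fault: instance down")

def fallback() -> str:
    return "default recommendations"

first = [breaker.call(failing_call, fallback) for _ in range(2)]
while_open = breaker.call(lambda: "live recommendations", fallback)
now[0] = 10.0  # cool-down elapsed, so the next call is a trial
after_reset = breaker.call(lambda: "live recommendations", fallback)
print(picks, first, while_open, after_reset)
```

Because the balancer runs inside the caller, there is no central load balancer to become a single point of failure. The fallback lets a request degrade (here, to a hypothetical default response) instead of failing outright while a dependency is down. These two properties correspond to the "no single points of failure" and "design for failure" principles listed above.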