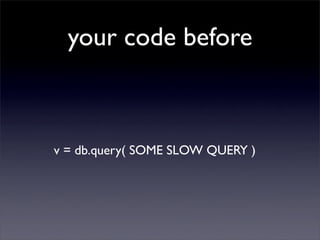

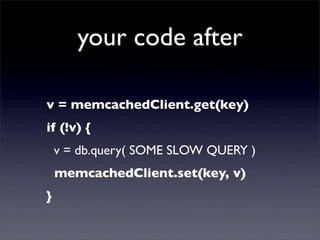

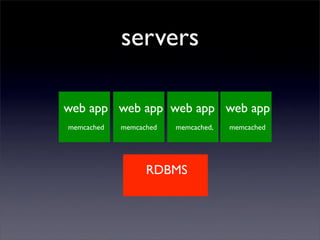

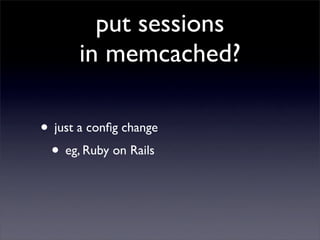

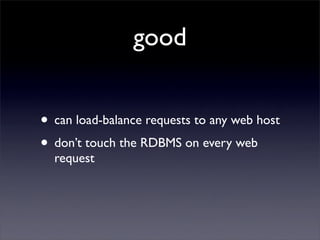

Memcached is an open-source, distributed, in-memory caching system. It improves website performance by keeping frequently accessed data and objects in RAM, which reduces how often an application must query a backend data store and lowers the load on databases. It is simple to implement and scalable. The presentation provides an overview of memcached, how to implement it, best practices, and common use cases.
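The read path described above (check the cache first, fall back to the data store on a miss, then store the result) can be sketched as follows. This is a minimal illustration, not code from the presentation: `InMemoryCache`, `get_with_cache`, `slow_db_lookup`, and the `user:42` key are hypothetical names, and the dictionary-backed class only stands in for a memcached client so the example runs without a server.

```python
import time
from typing import Callable, Dict, Optional, Tuple


class InMemoryCache:
    """Hypothetical stand-in for a memcached client (get/set with expiry)."""

    def __init__(self) -> None:
        # Maps key -> (value, expiry timestamp); 0.0 means "never expires".
        self._store: Dict[str, Tuple[str, float]] = {}

    def get(self, key: str) -> Optional[str]:
        entry = self._store.get(key)
        if entry is None:
            return None
        value, expires_at = entry
        if expires_at and time.monotonic() >= expires_at:
            # Entry has expired: drop it and report a miss.
            del self._store[key]
            return None
        return value

    def set(self, key: str, value: str, expire: int = 0) -> None:
        expires_at = time.monotonic() + expire if expire else 0.0
        self._store[key] = (value, expires_at)


def get_with_cache(
    cache: InMemoryCache,
    key: str,
    load_from_db: Callable[[str], str],
    ttl_seconds: int = 60,
) -> str:
    """Return the cached value, loading from the data store only on a miss."""
    value = cache.get(key)
    if value is not None:
        return value  # cache hit: no backend access
    value = load_from_db(key)  # cache miss: go to the data store
    cache.set(key, value, expire=ttl_seconds)
    return value


# Hypothetical backend lookup that records how often it is called.
db_calls = []


def slow_db_lookup(key: str) -> str:
    db_calls.append(key)
    return f"row-for-{key}"


cache = InMemoryCache()
first = get_with_cache(cache, "user:42", slow_db_lookup)   # miss, hits the "database"
second = get_with_cache(cache, "user:42", slow_db_lookup)  # hit, served from memory
print(first, second, len(db_calls))
```

In a real deployment the stand-in class would be replaced by an actual memcached client library. Such clients typically expose similar `get` and `set` calls, but their exact signatures and return types (for example, bytes rather than strings) should be checked against the library's documentation.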