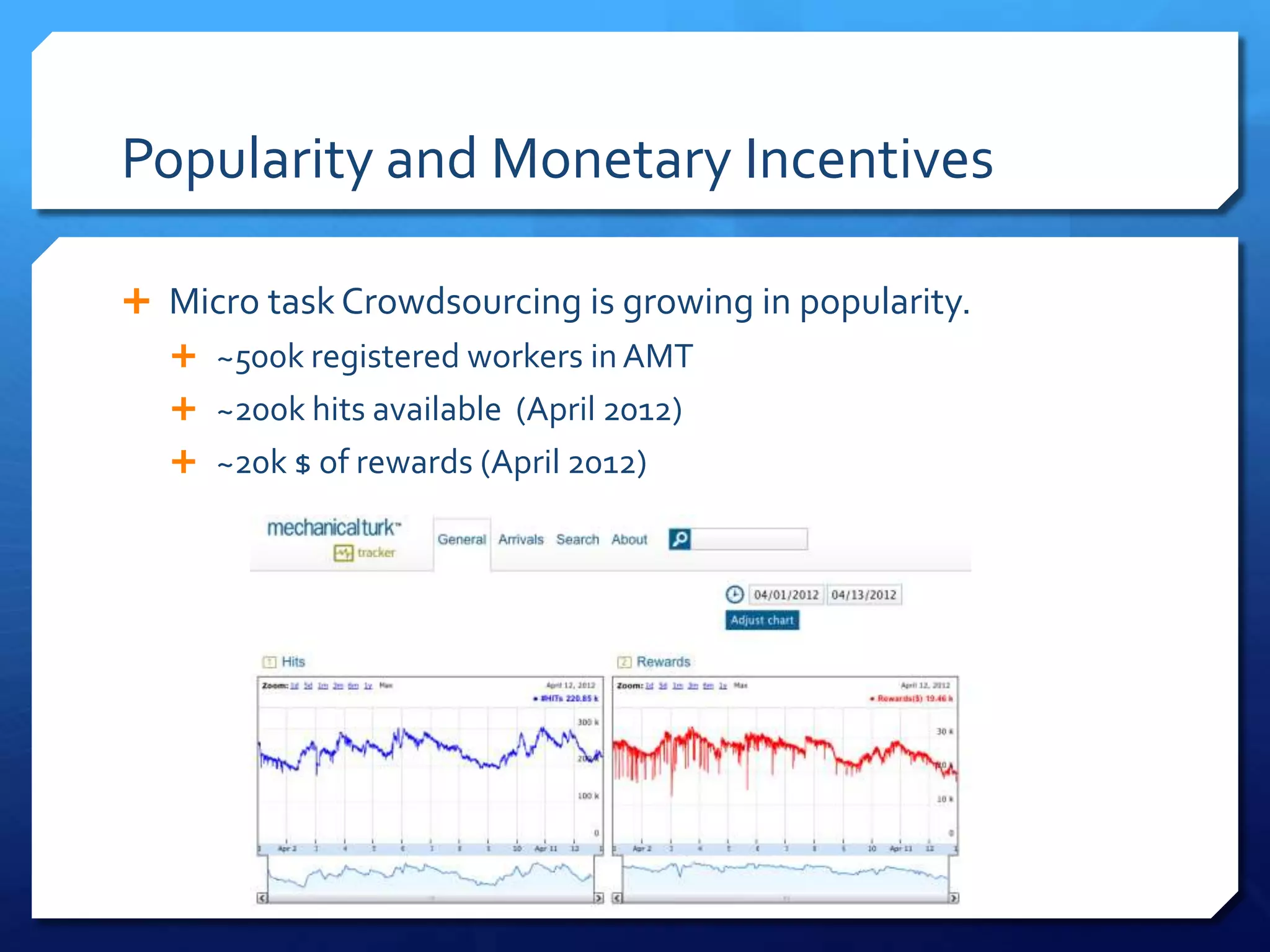

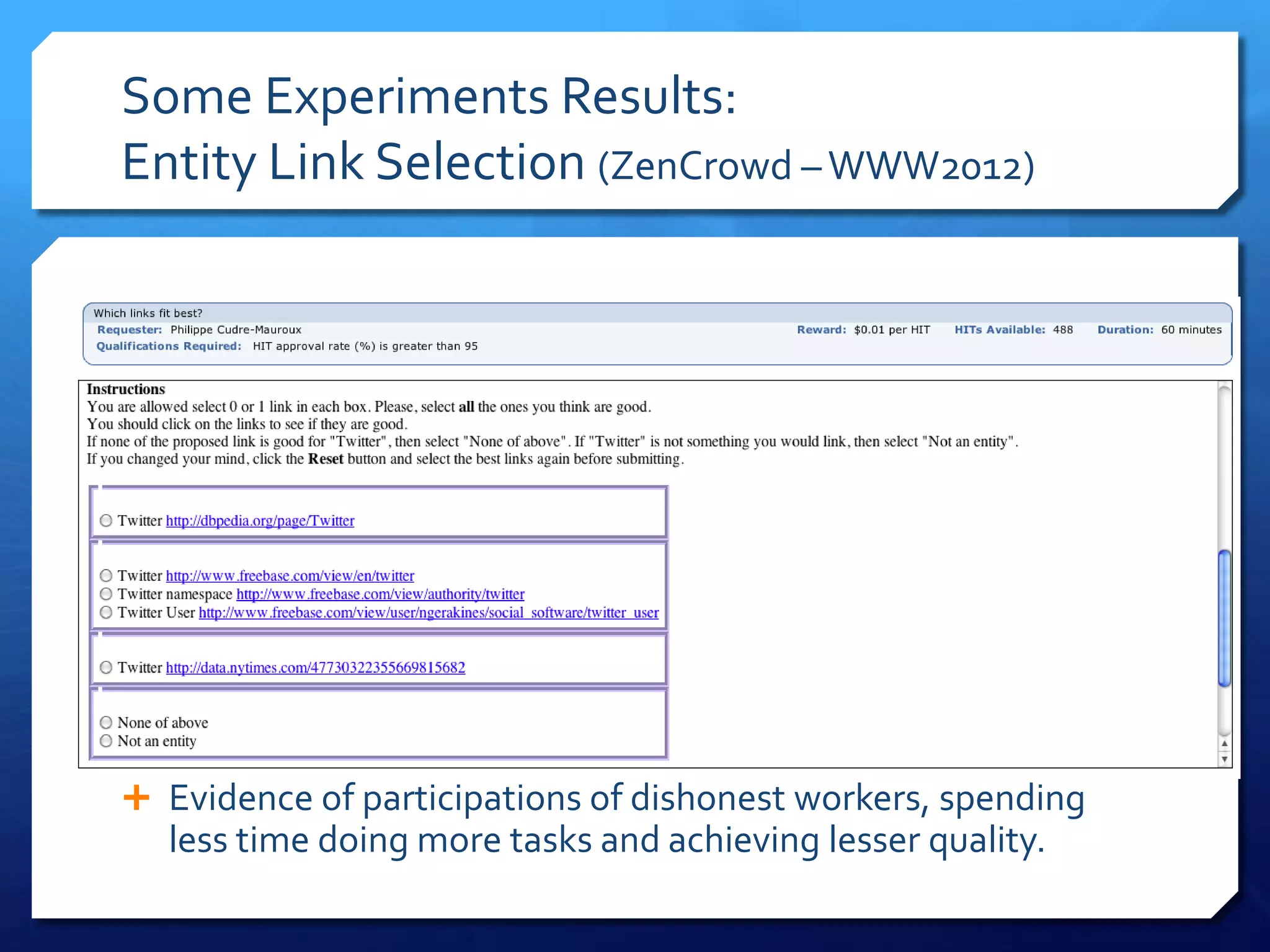

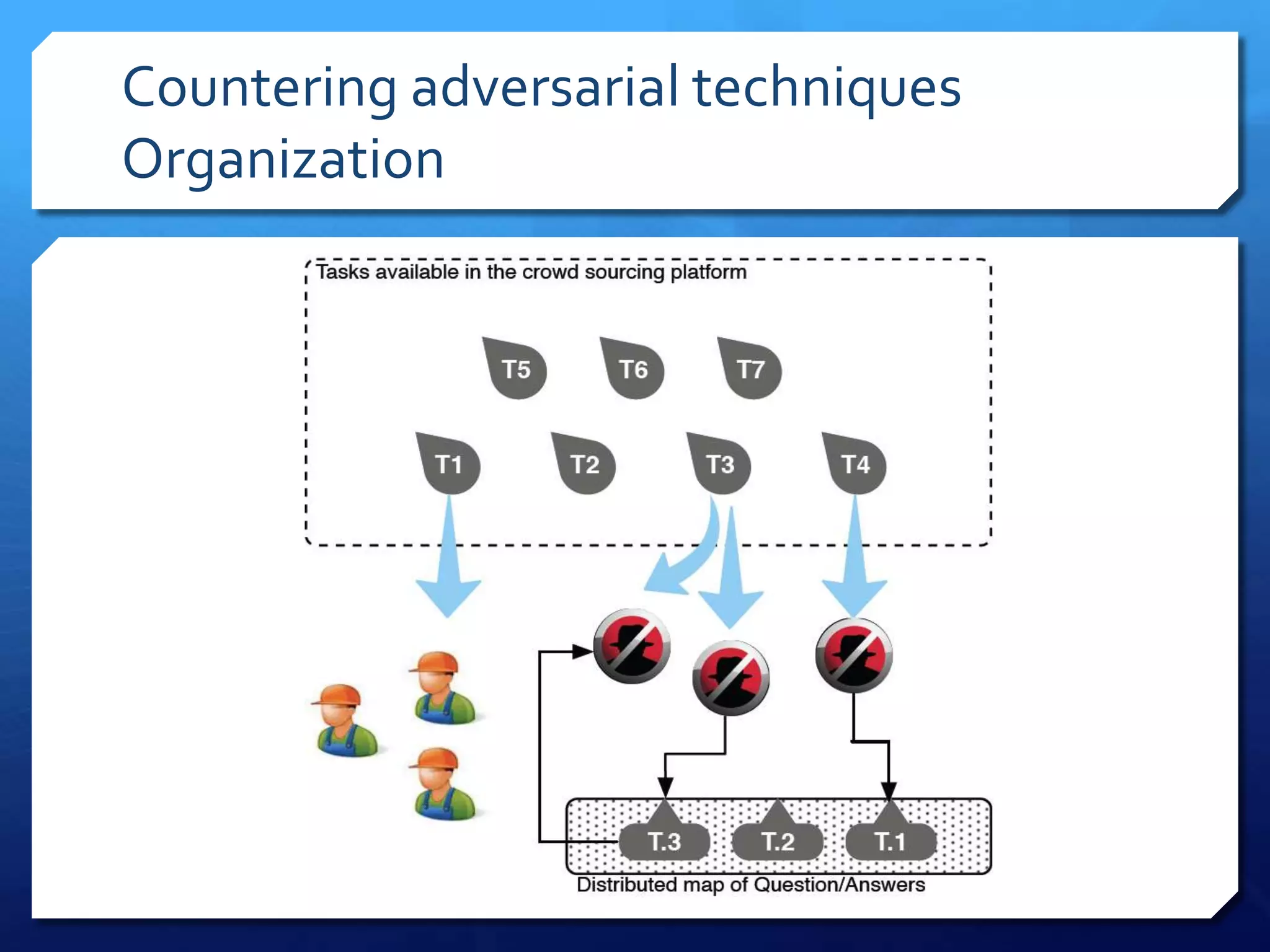

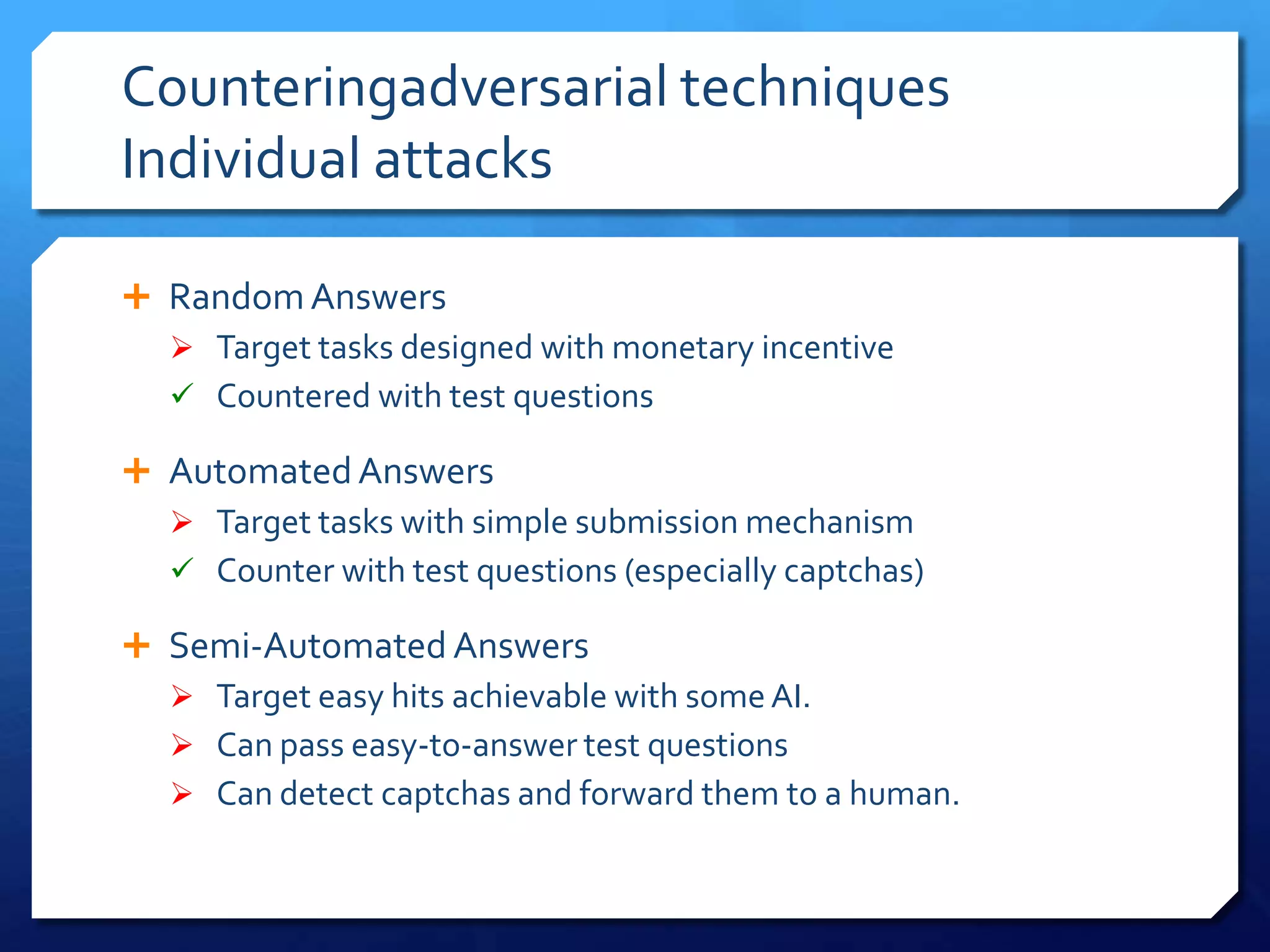

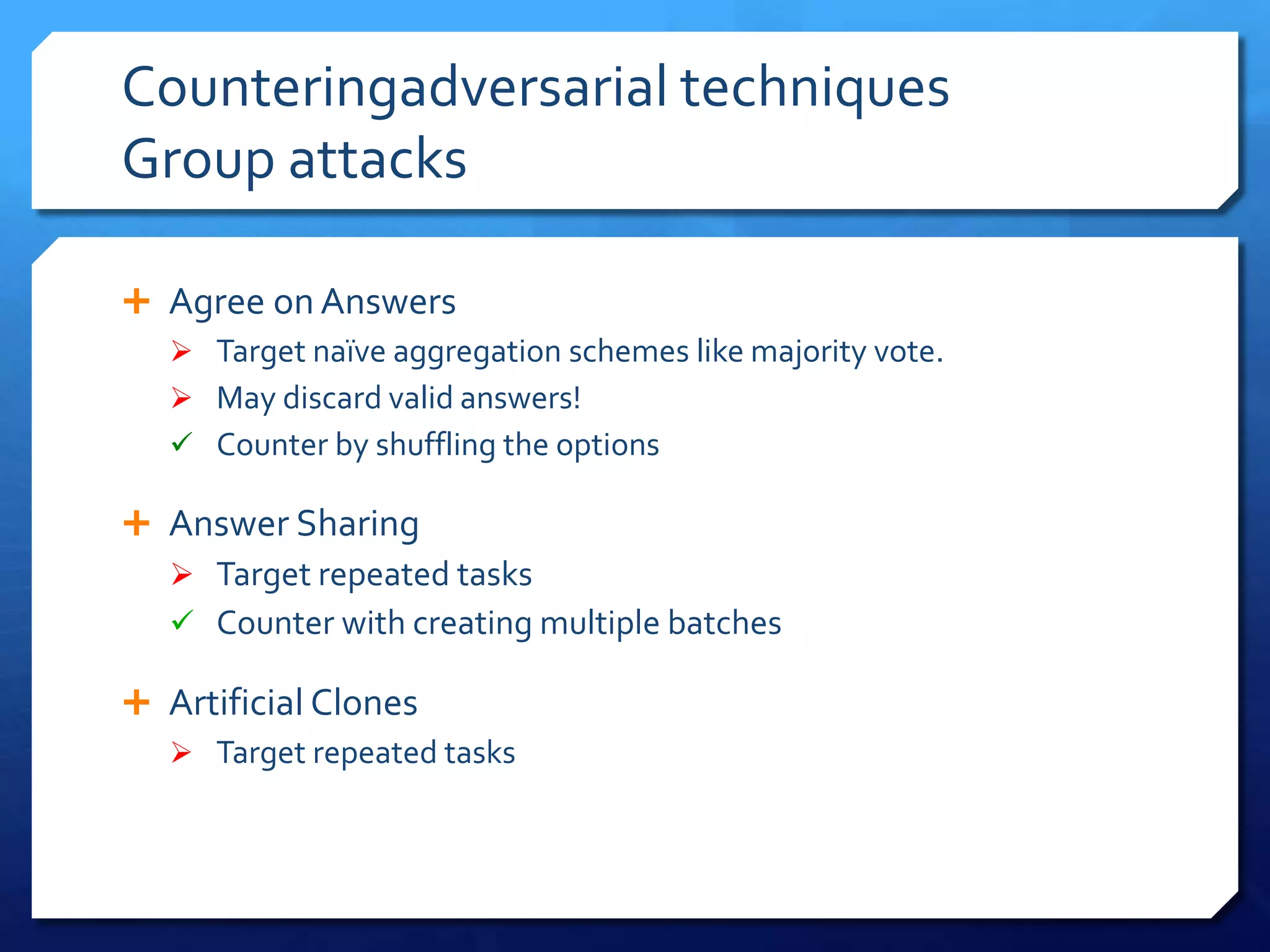

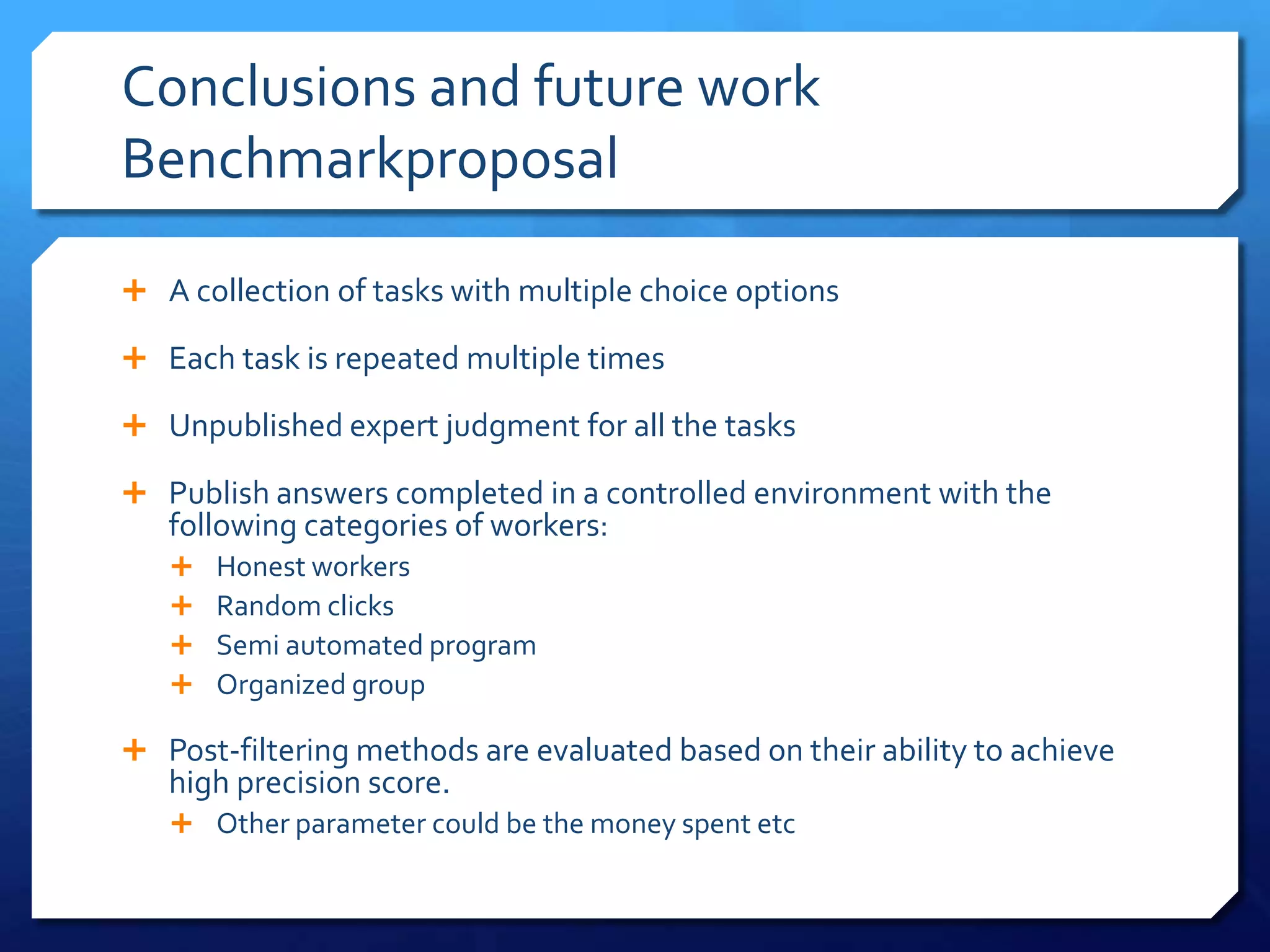

This document discusses the adversarial techniques spammers use on crowdsourcing platforms and the methods available to counter them. It finds that some quality-control tools are ineffective against resourceful spammers, and therefore proposes combining several techniques, such as test questions, task repetition, and machine learning for post-filtering. Crowdsourcing platforms should provide more of these tools, and the evaluation of filtering algorithms needs to be repeatable and generic. To that end, a benchmark is proposed to evaluate how well post-filtering methods achieve high precision against different categories of workers.
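A minimal sketch of how these pieces could fit together follows, under stated assumptions. Test questions become a per-worker gold accuracy, task repetition becomes a majority vote per task and a per-worker agreement score, and a post-filter flags workers that fail either score. The filter is then scored the way a benchmark might score it: precision against known spammers and the flag rate for each worker category. Everything here is hypothetical and for illustration only: the toy data, the worker categories ("honest", "uniform", "random"), the function names, and the thresholds. The plain threshold rule stands in for the machine-learning post-filter the source mentions. It is not the authors' method or benchmark.

```python
from collections import Counter, defaultdict

# Hypothetical toy data: ANSWERS[worker][task] -> label.
# Tasks "g1" and "g2" are test (gold) questions with known answers.
GOLD = {"g1": "A", "g2": "B"}
ANSWERS = {
    "h1": {"g1": "A", "g2": "B", "t1": "A", "t2": "B", "t3": "A", "t4": "B"},
    "h2": {"g1": "A", "g2": "B", "t1": "A", "t2": "B", "t3": "B", "t4": "B"},
    "h3": {"g1": "A", "g2": "B", "t1": "A", "t2": "A", "t3": "A", "t4": "B"},
    "s1": {"g1": "A", "g2": "A", "t1": "A", "t2": "A", "t3": "A", "t4": "A"},  # always "A"
    "s2": {"g1": "B", "g2": "A", "t1": "B", "t2": "B", "t3": "B", "t4": "A"},  # random
}
# Hypothetical benchmark ground truth: worker categories and true spammers.
CATEGORY = {"h1": "honest", "h2": "honest", "h3": "honest", "s1": "uniform", "s2": "random"}
SPAMMERS = {"s1", "s2"}


def majority_labels(answers):
    """Task repetition: aggregate each task's redundant answers by majority vote."""
    votes = defaultdict(Counter)
    for responses in answers.values():
        for task, label in responses.items():
            votes[task][label] += 1
    # Ties go to whichever label was counted first.
    return {task: counts.most_common(1)[0][0] for task, counts in votes.items()}


def worker_features(answers, gold):
    """Per worker: (accuracy on gold questions, agreement with majority on other tasks)."""
    majority = majority_labels(answers)
    features = {}
    for worker, responses in answers.items():
        gold_tasks = [t for t in responses if t in gold]
        other_tasks = [t for t in responses if t not in gold]
        gold_acc = (sum(responses[t] == gold[t] for t in gold_tasks) / len(gold_tasks)
                    if gold_tasks else None)
        agreement = (sum(responses[t] == majority[t] for t in other_tasks) / len(other_tasks)
                     if other_tasks else None)
        features[worker] = (gold_acc, agreement)
    return features


def flag_spammers(features, min_gold=0.7, min_agreement=0.6):
    """Threshold post-filter (stand-in for an ML model); thresholds are arbitrary."""
    flagged = set()
    for worker, (gold_acc, agreement) in features.items():
        fails_gold = gold_acc is not None and gold_acc < min_gold
        fails_agreement = agreement is not None and agreement < min_agreement
        if fails_gold or fails_agreement:
            flagged.add(worker)
    return flagged


def precision(flagged, true_spammers):
    """Fraction of flagged workers that really are spammers (None if nothing is flagged)."""
    if not flagged:
        return None
    return len(flagged & true_spammers) / len(flagged)


def flag_rate_by_category(flagged, category):
    """Share of each worker category that the filter flagged."""
    totals, hits = Counter(category.values()), Counter()
    for worker, cat in category.items():
        if worker in flagged:
            hits[cat] += 1
    return {cat: hits[cat] / totals[cat] for cat in totals}


if __name__ == "__main__":
    flagged = flag_spammers(worker_features(ANSWERS, GOLD))
    print(sorted(flagged))                           # prints ['s1', 's2']
    print(precision(flagged, SPAMMERS))              # prints 1.0
    print(flag_rate_by_category(flagged, CATEGORY))  # prints {'honest': 0.0, 'uniform': 1.0, 'random': 1.0}
```

Reporting the flag rate per category, rather than a single score, reflects the idea of evaluating filters against different kinds of workers. A resourceful spammer who answers the test questions correctly but spams the remaining tasks would only be caught by the agreement score. This is one reason to combine signals instead of relying on any single tool.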