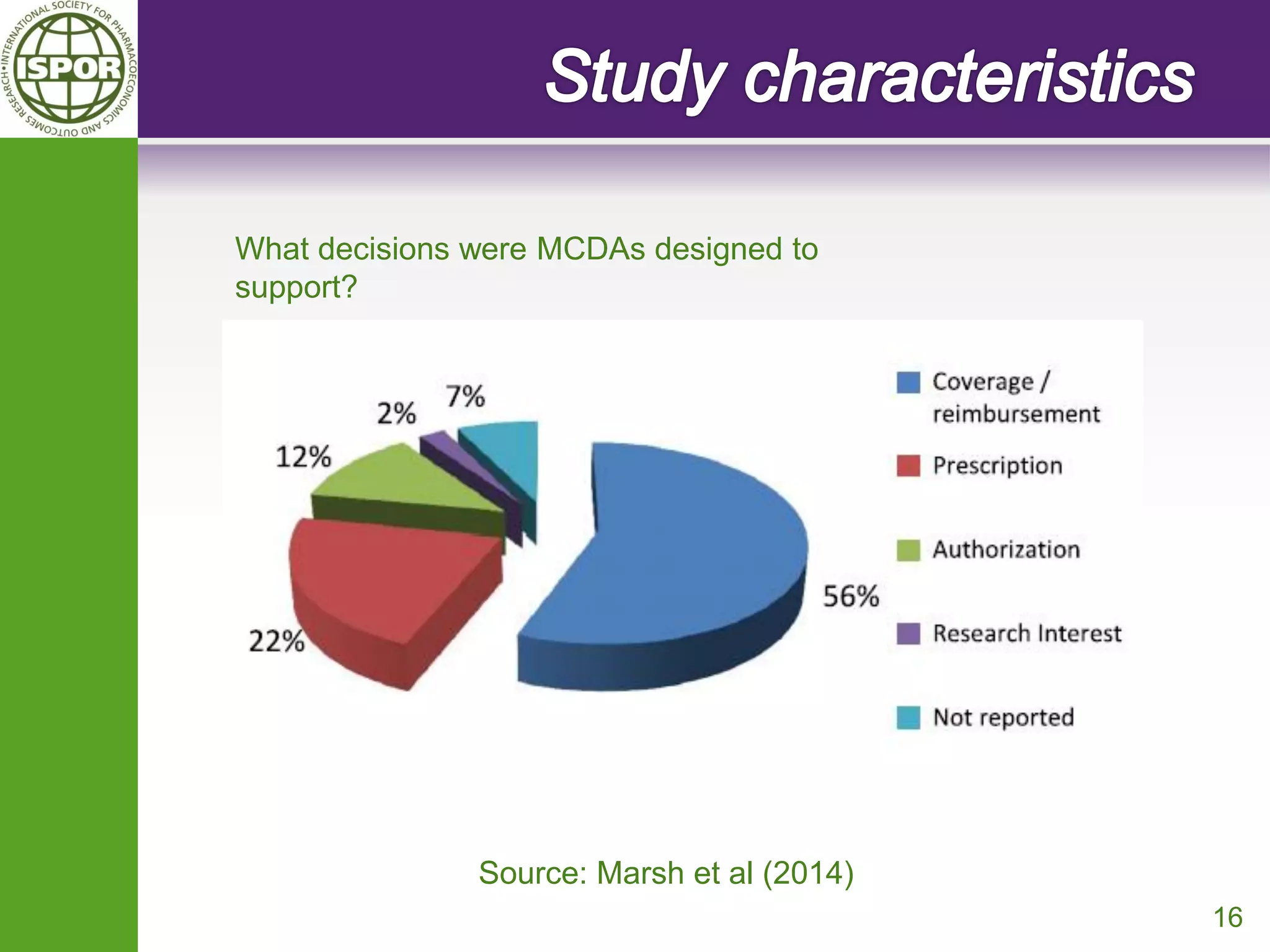

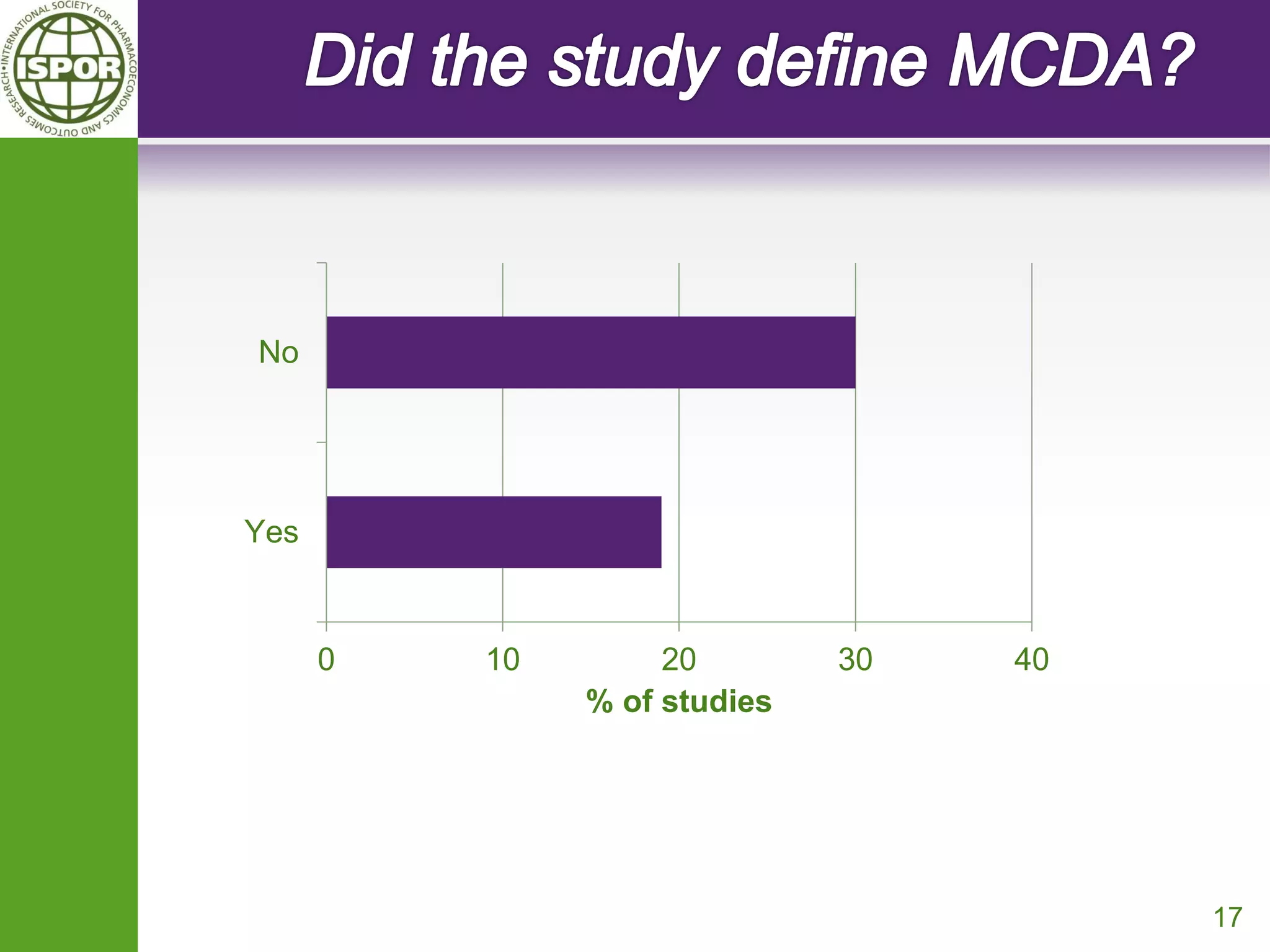

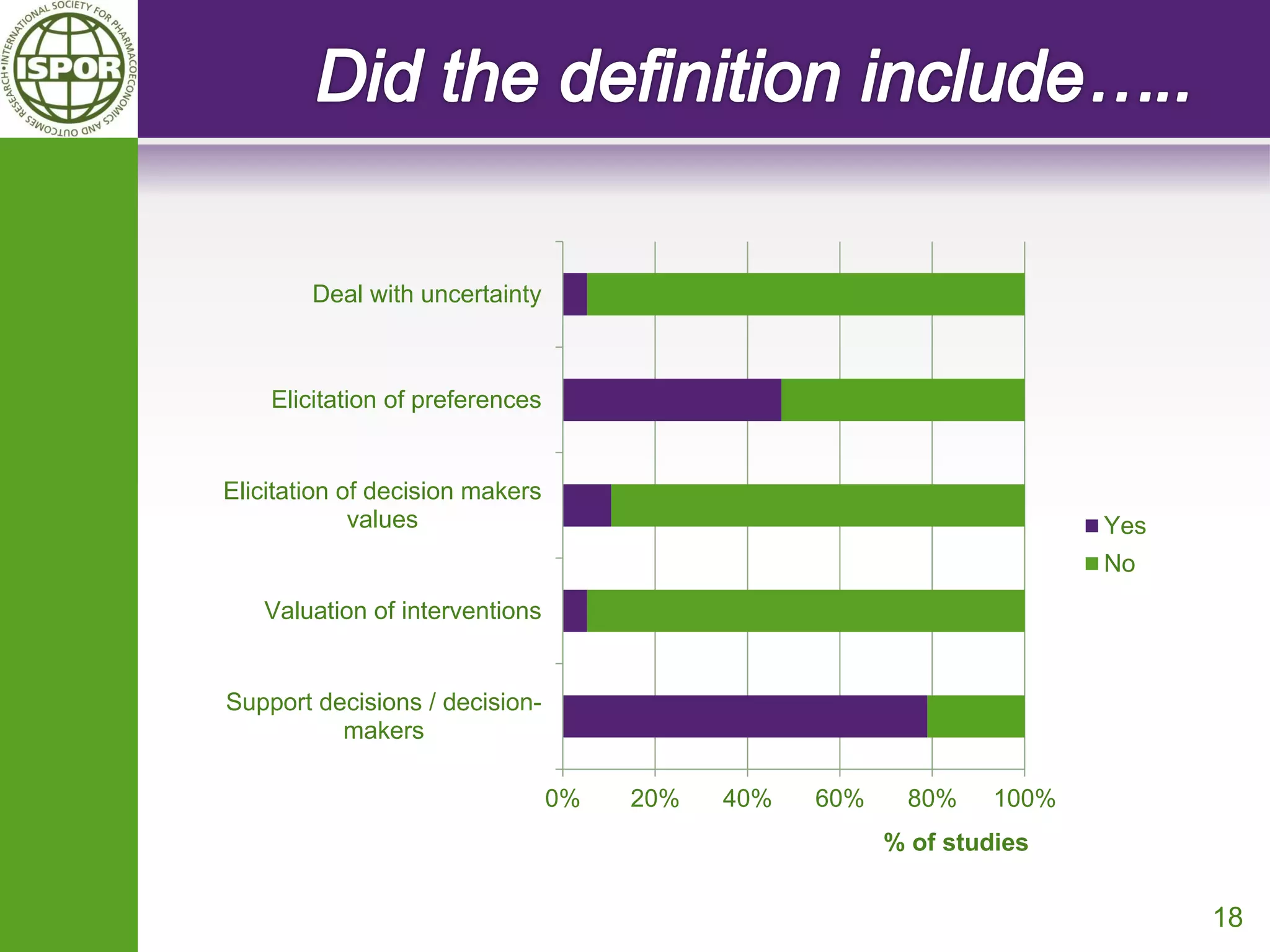

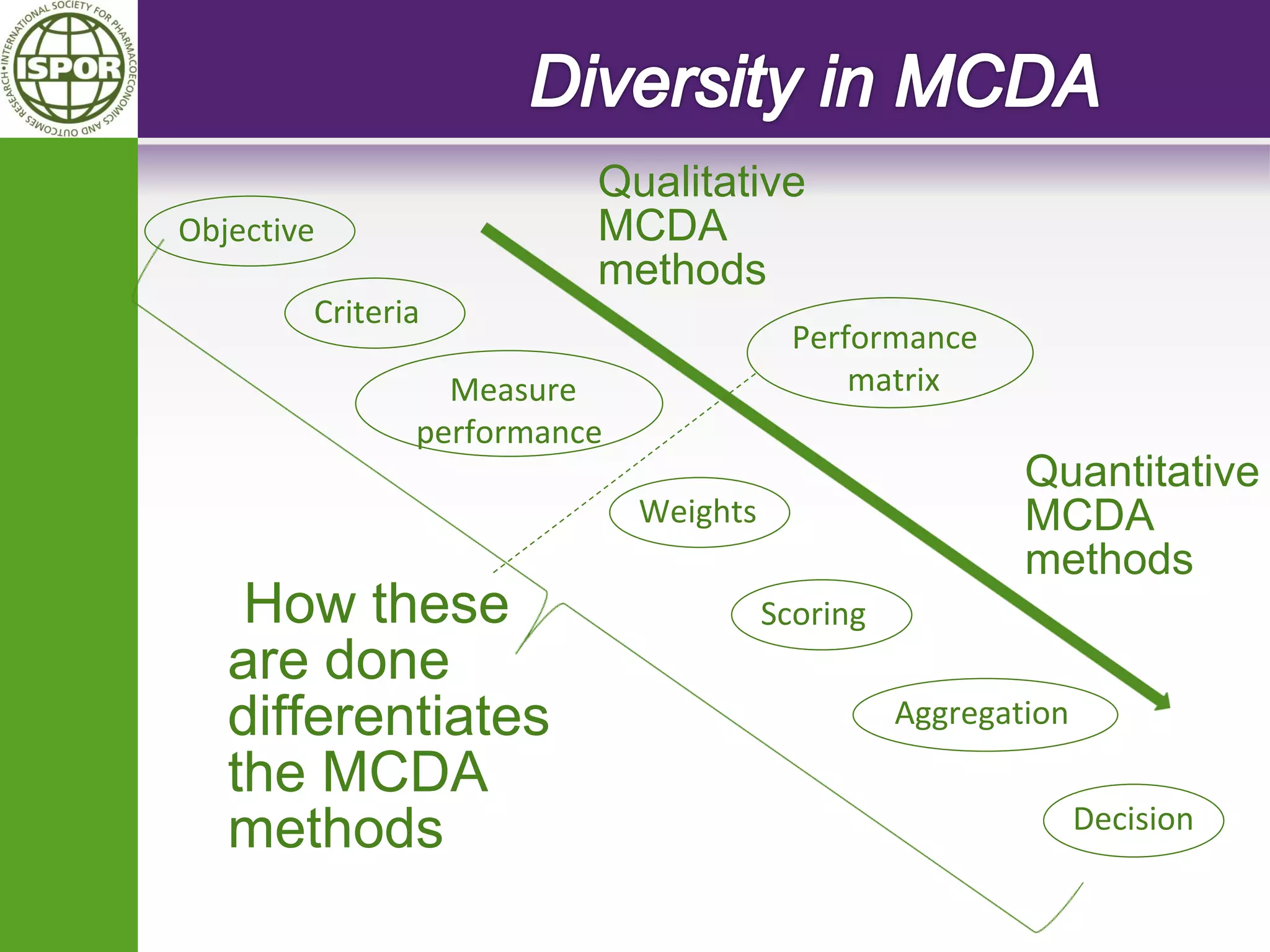

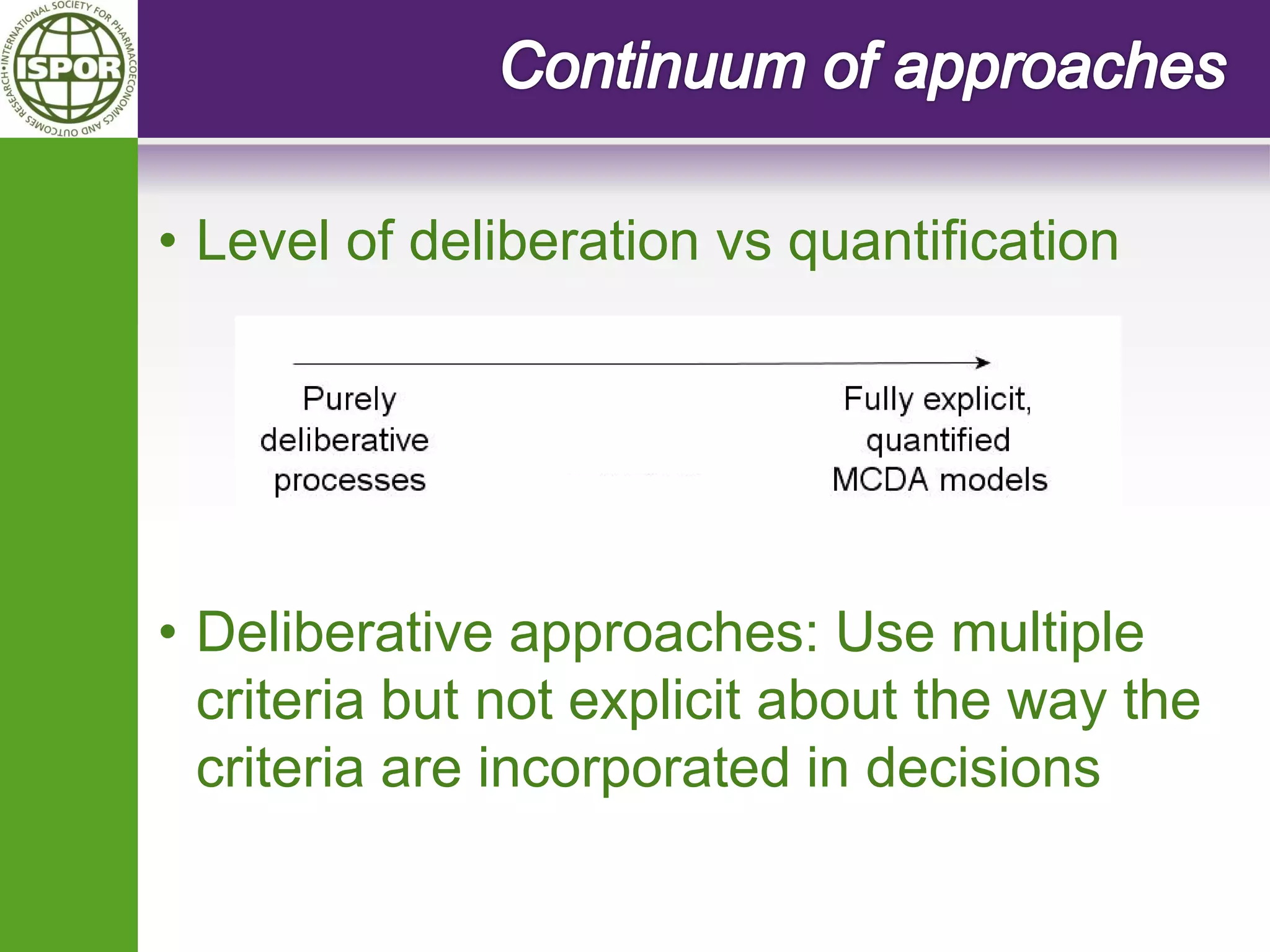

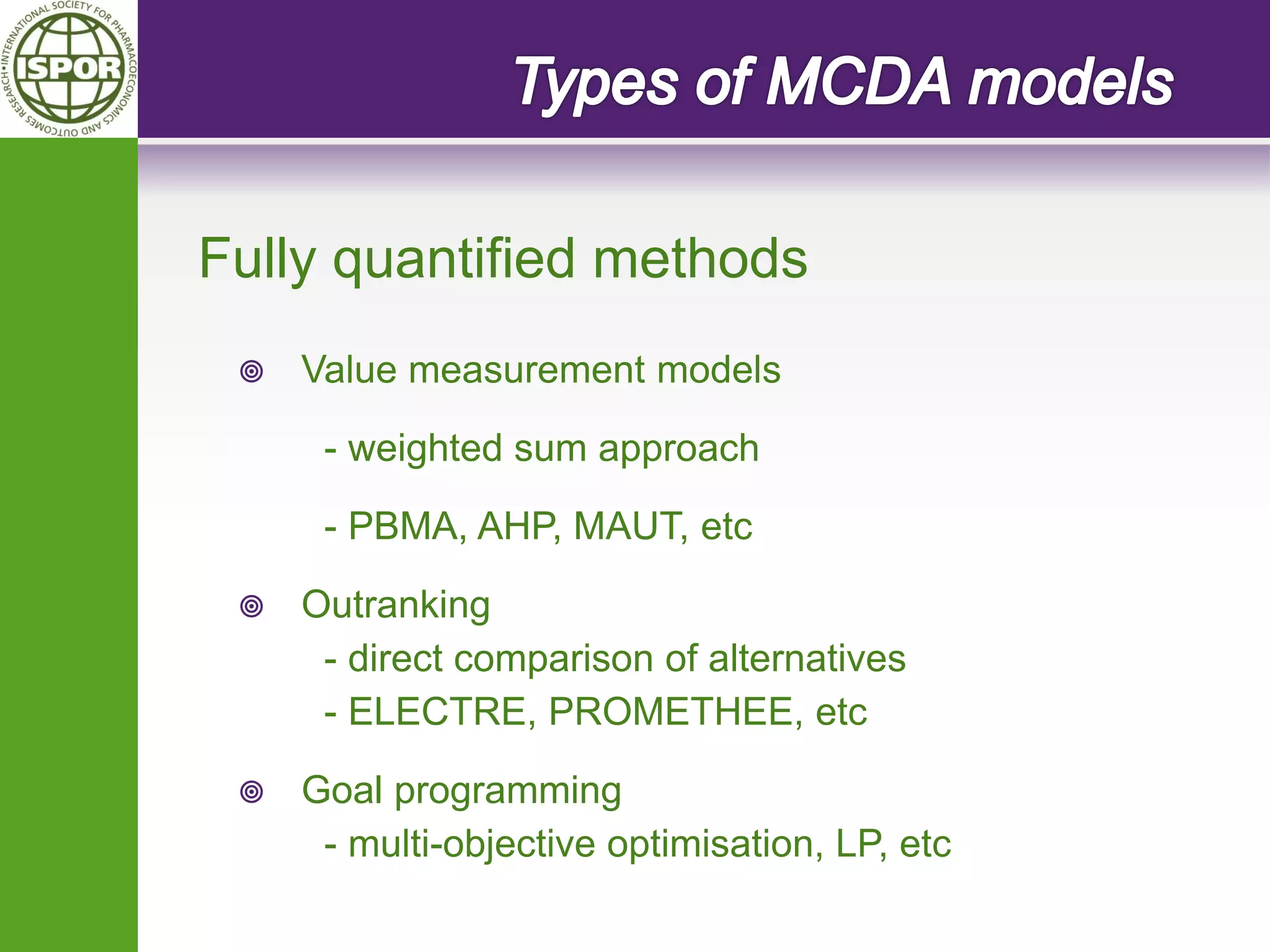

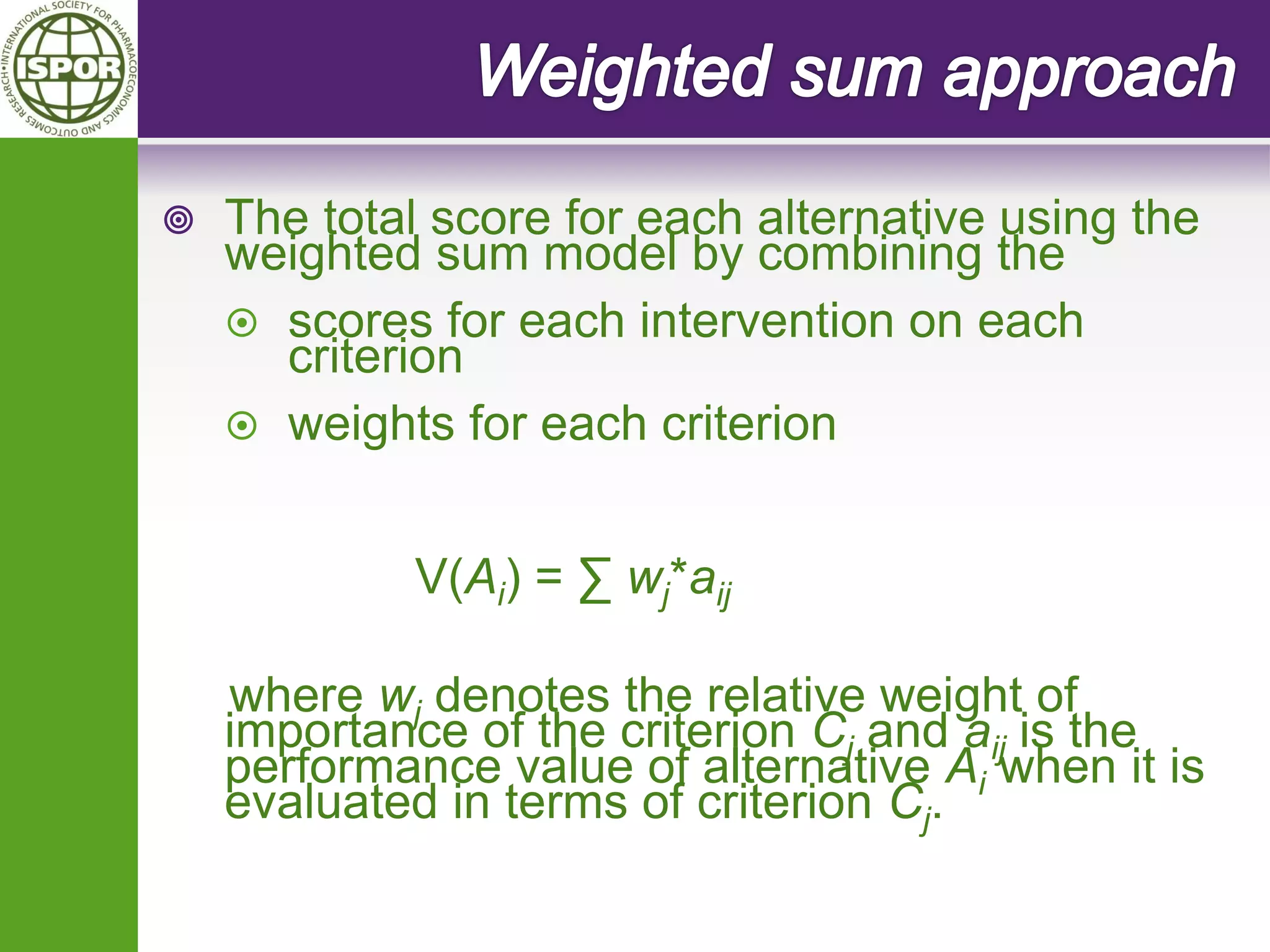

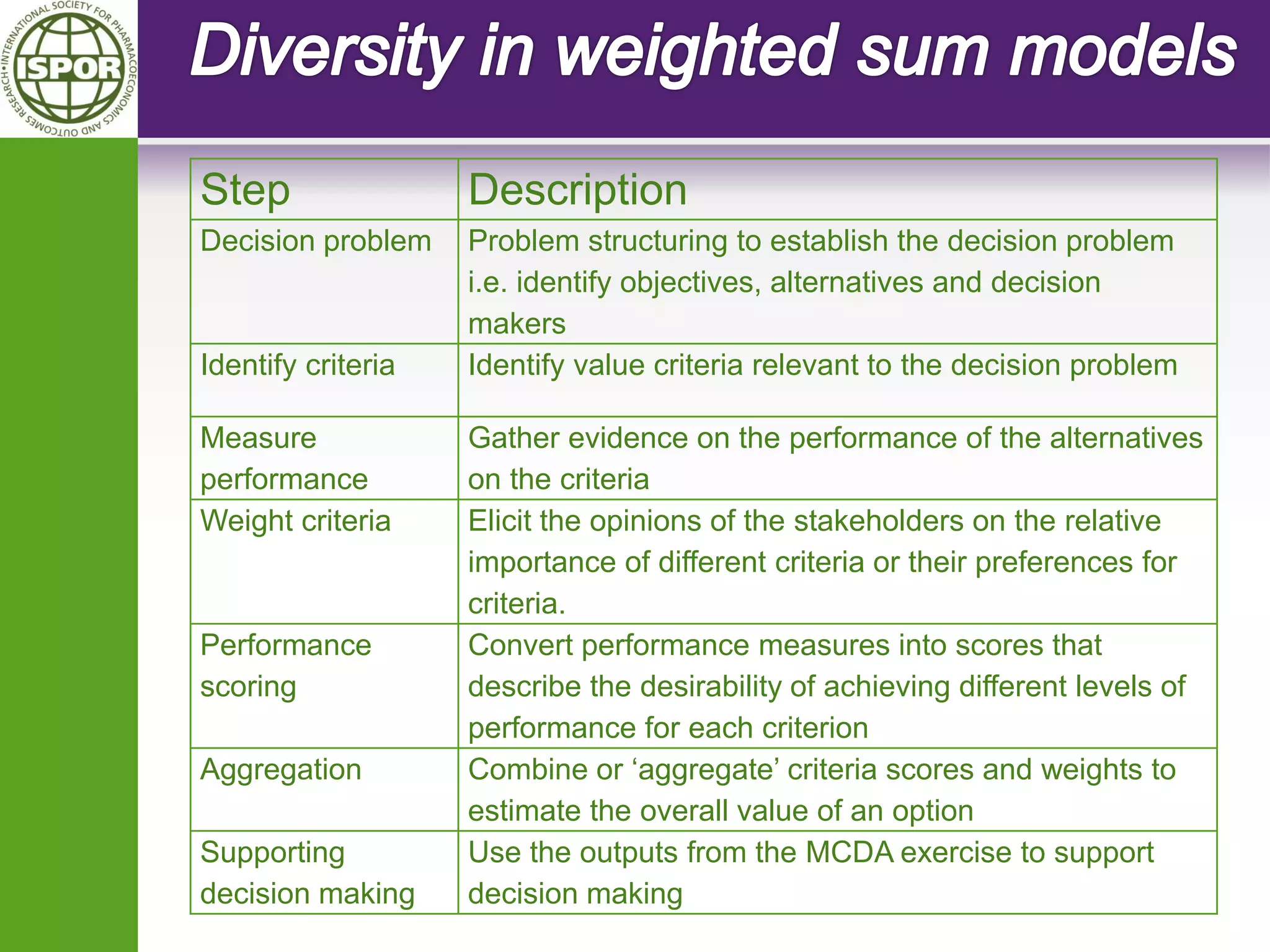

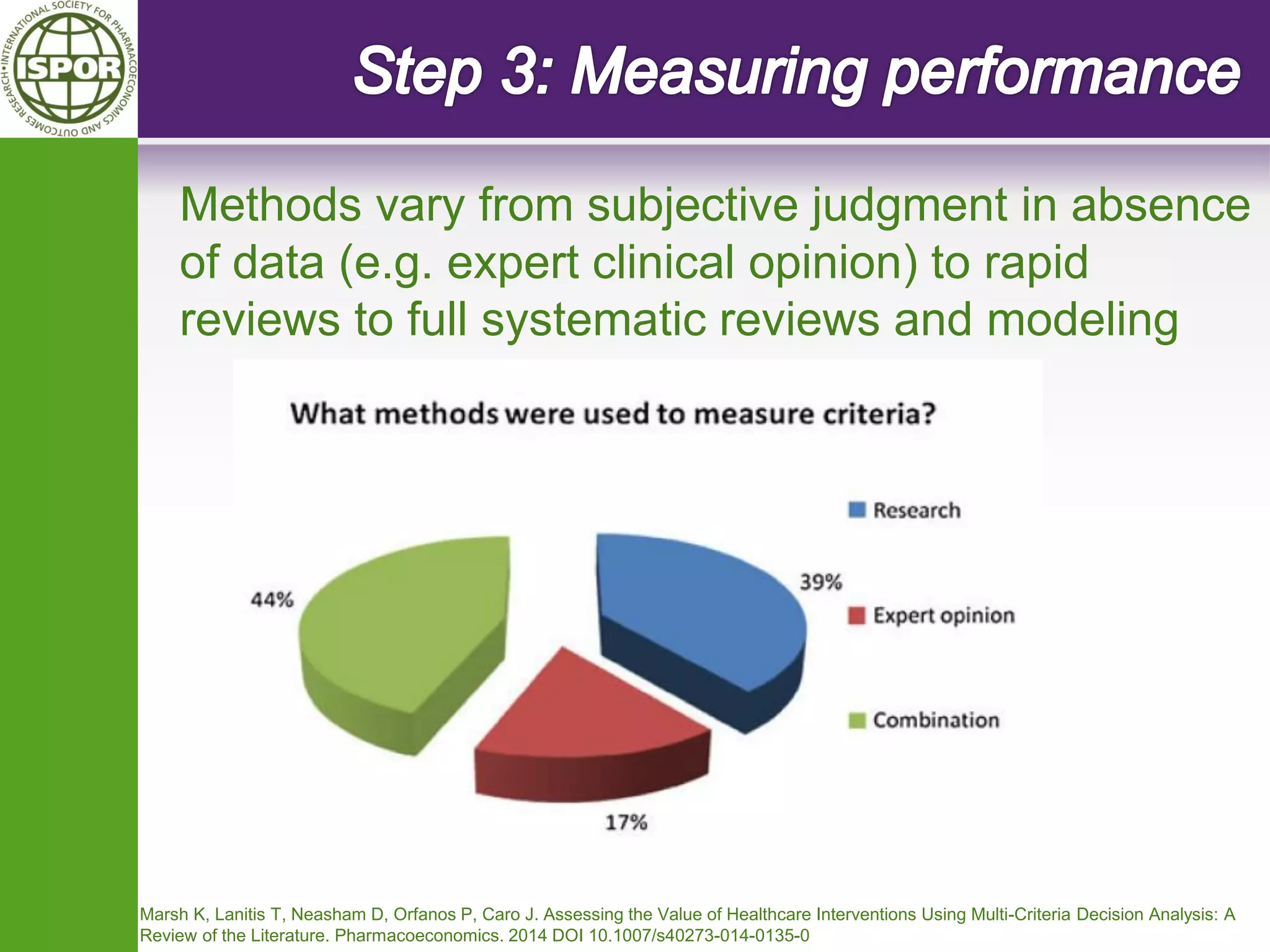

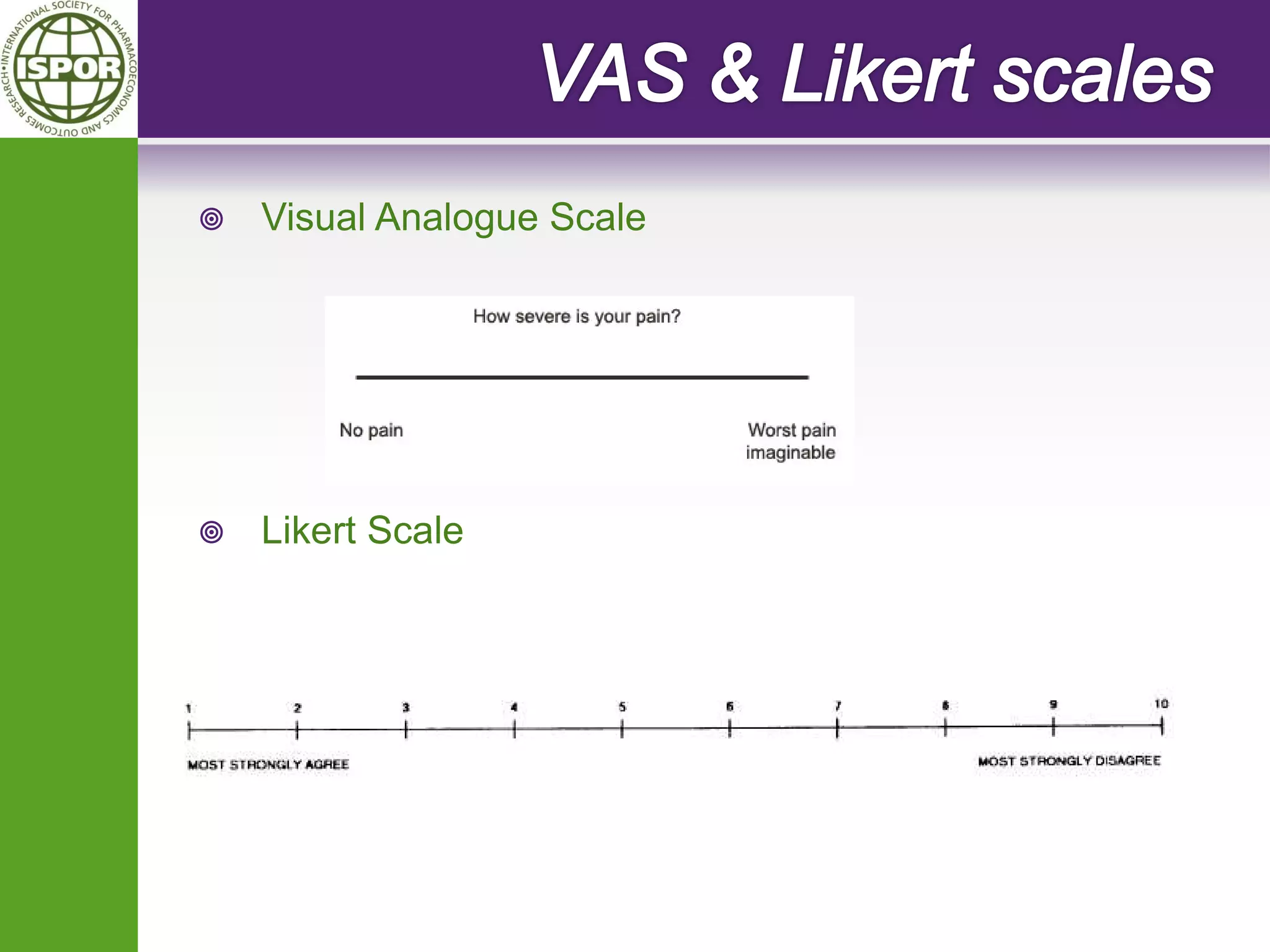

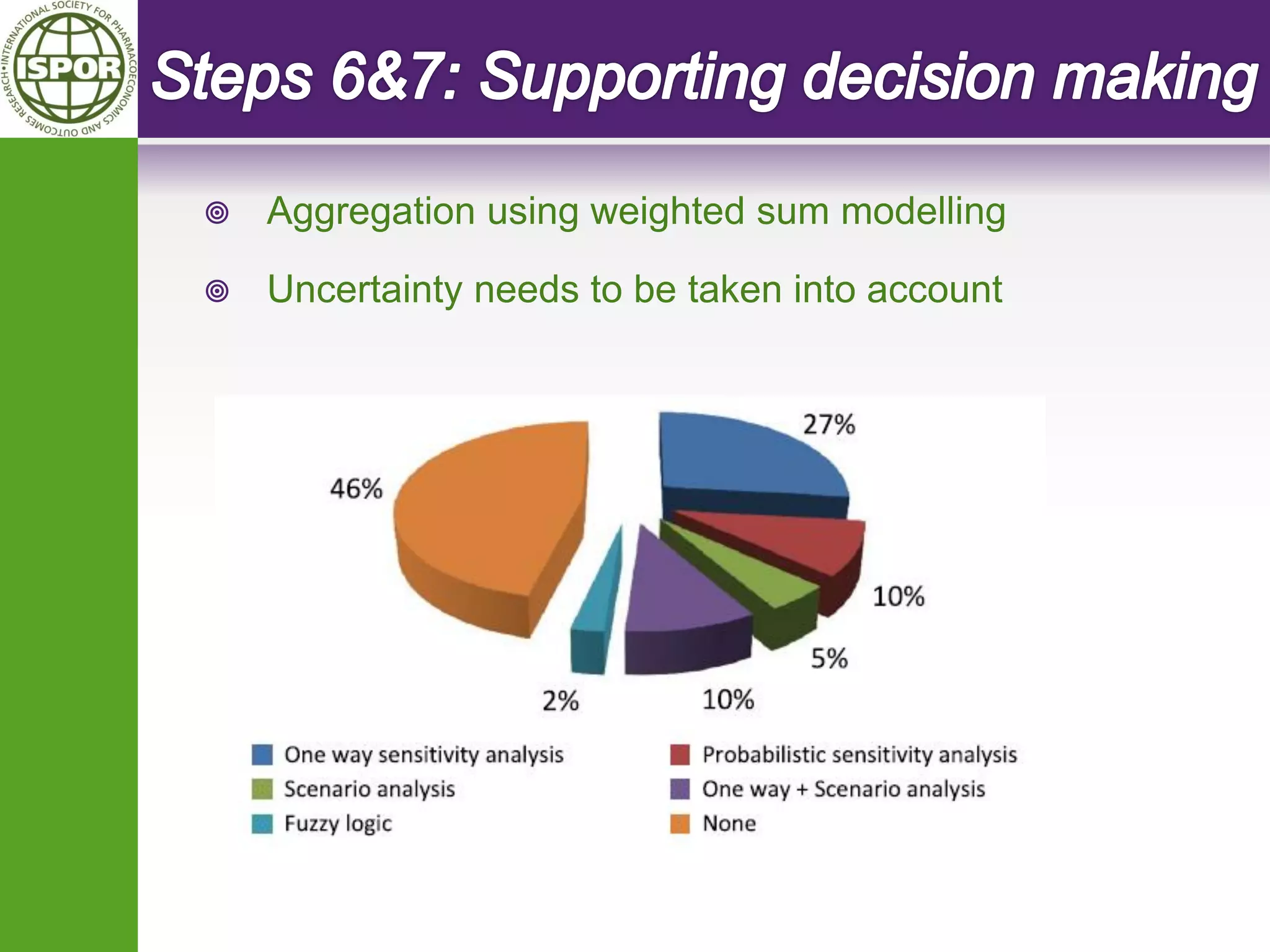

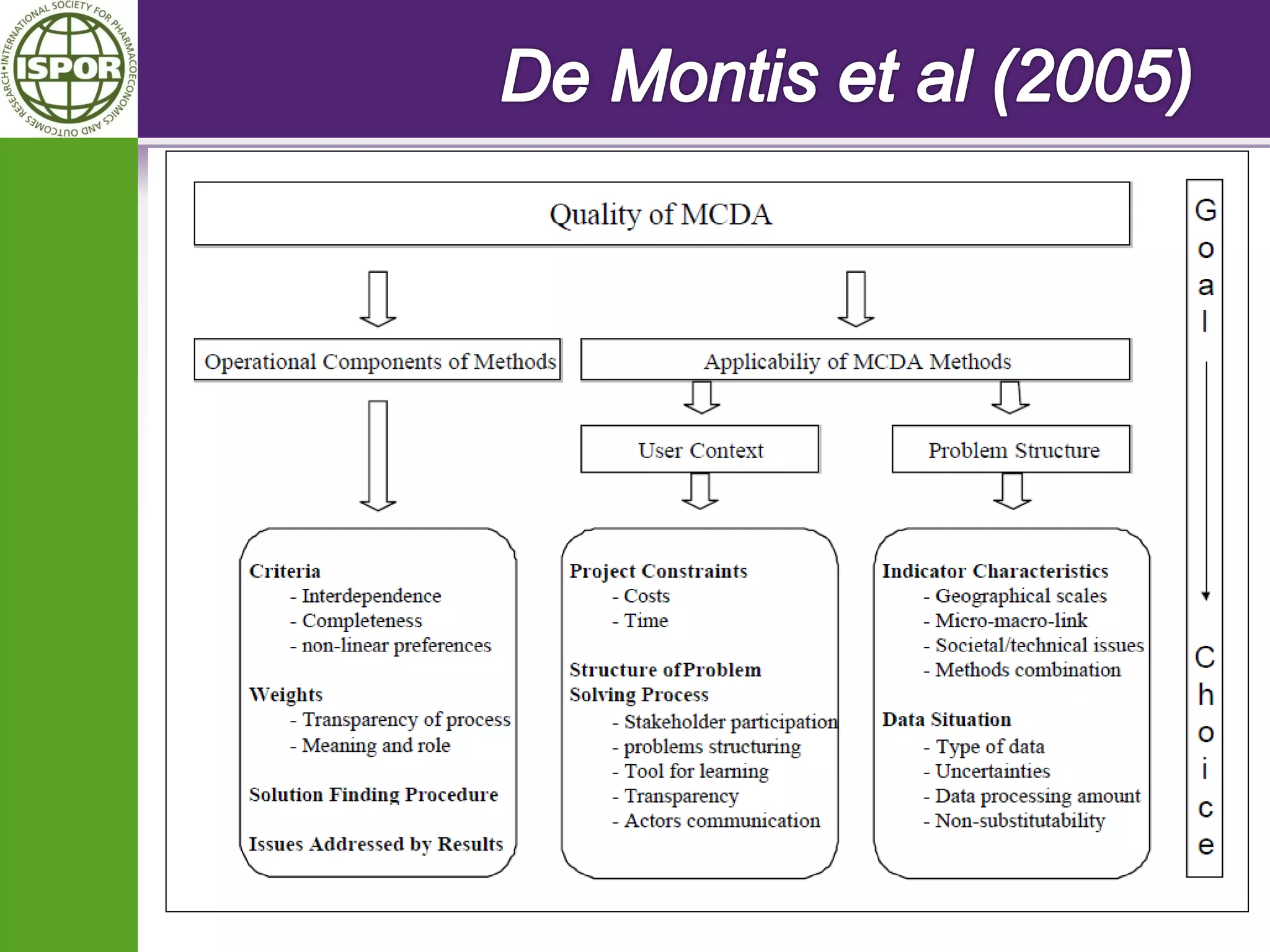

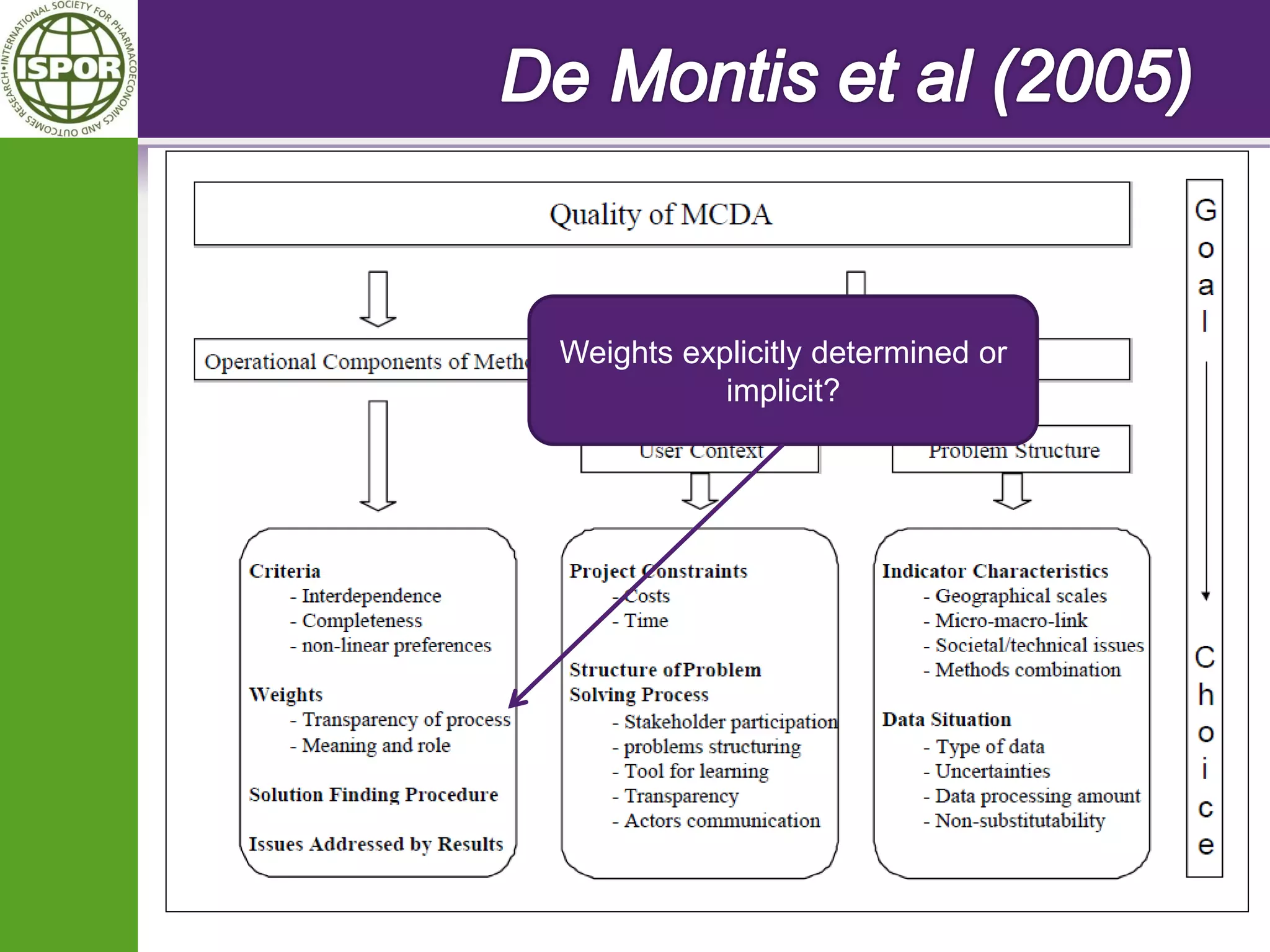

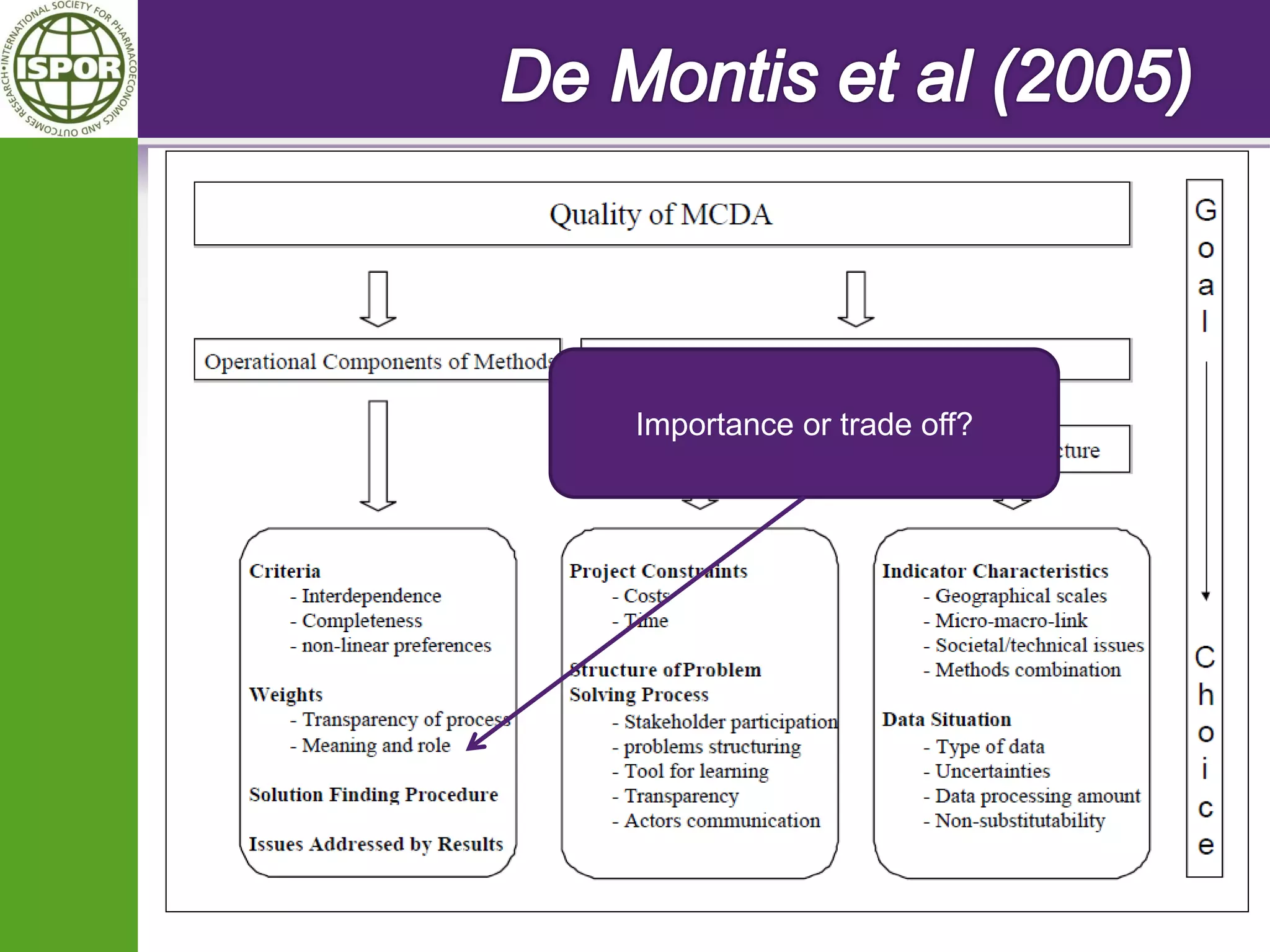

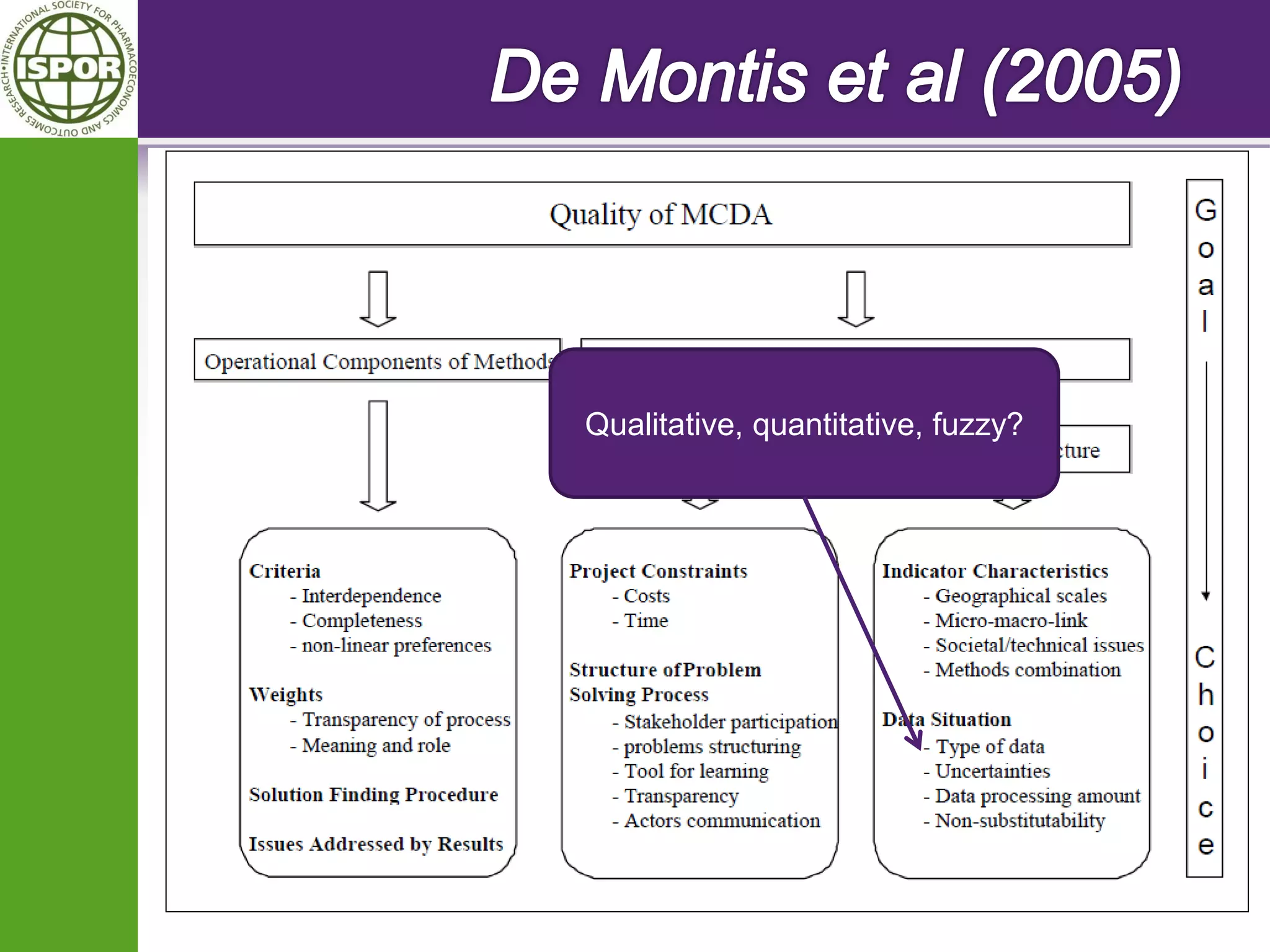

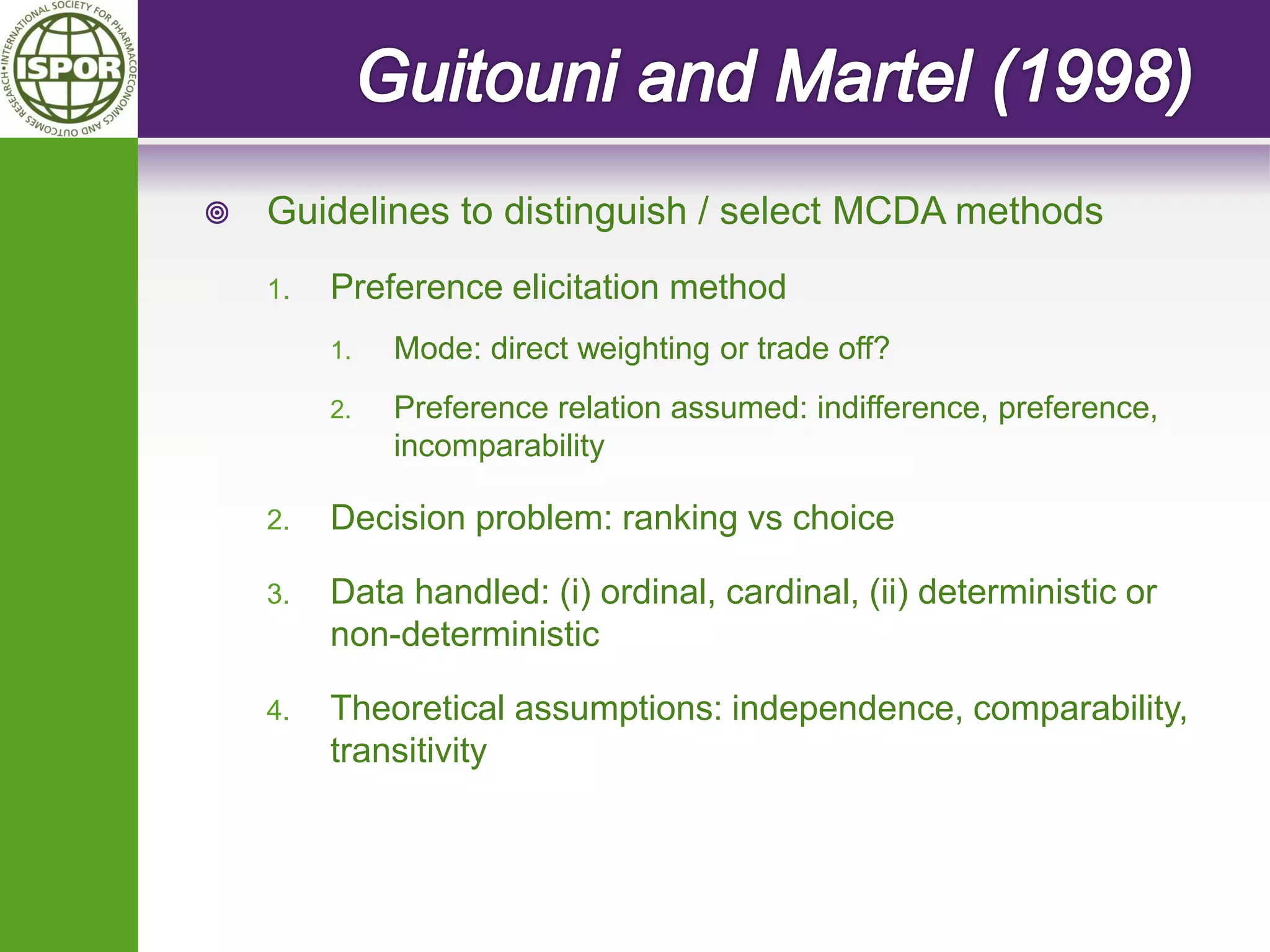

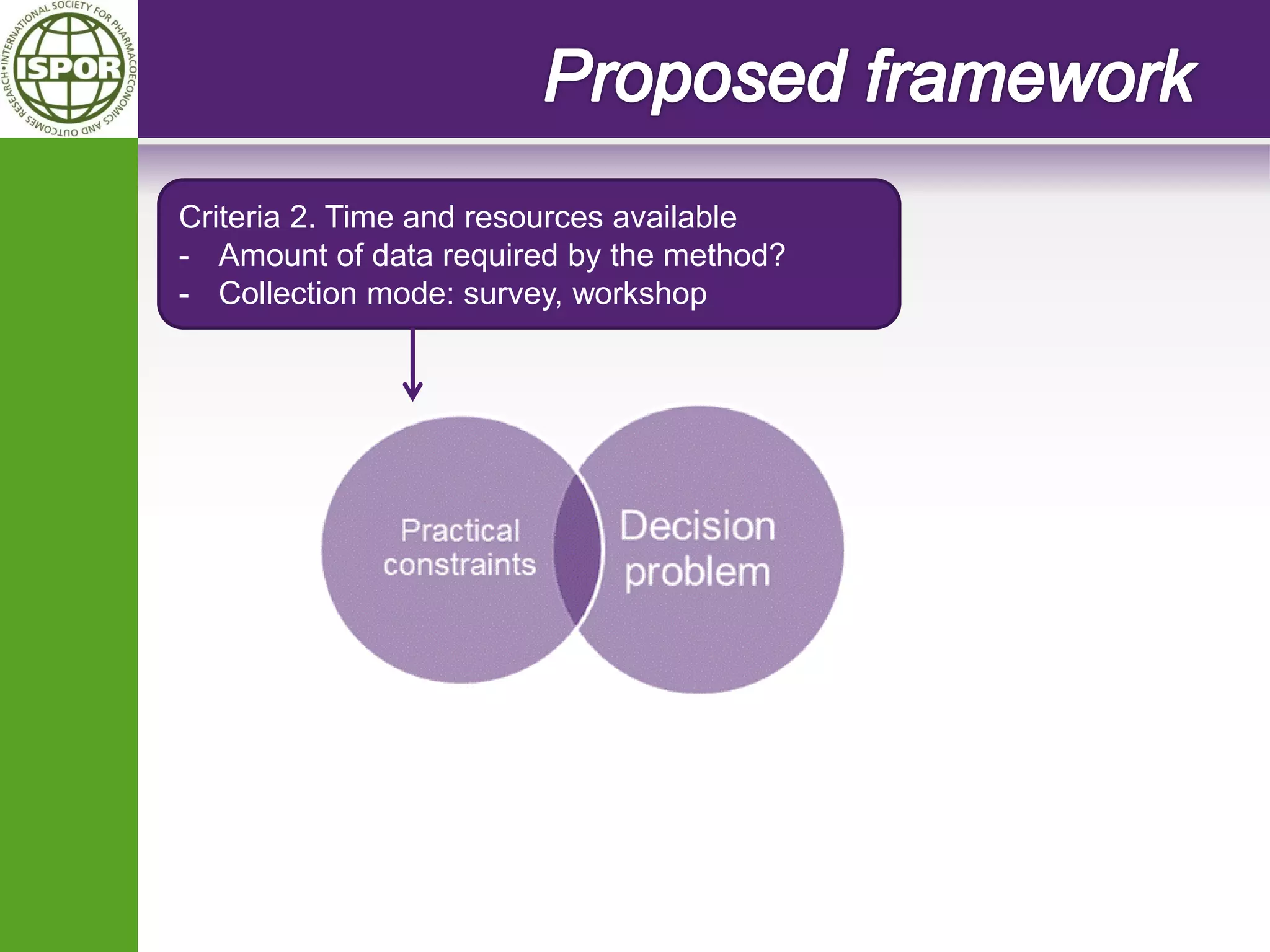

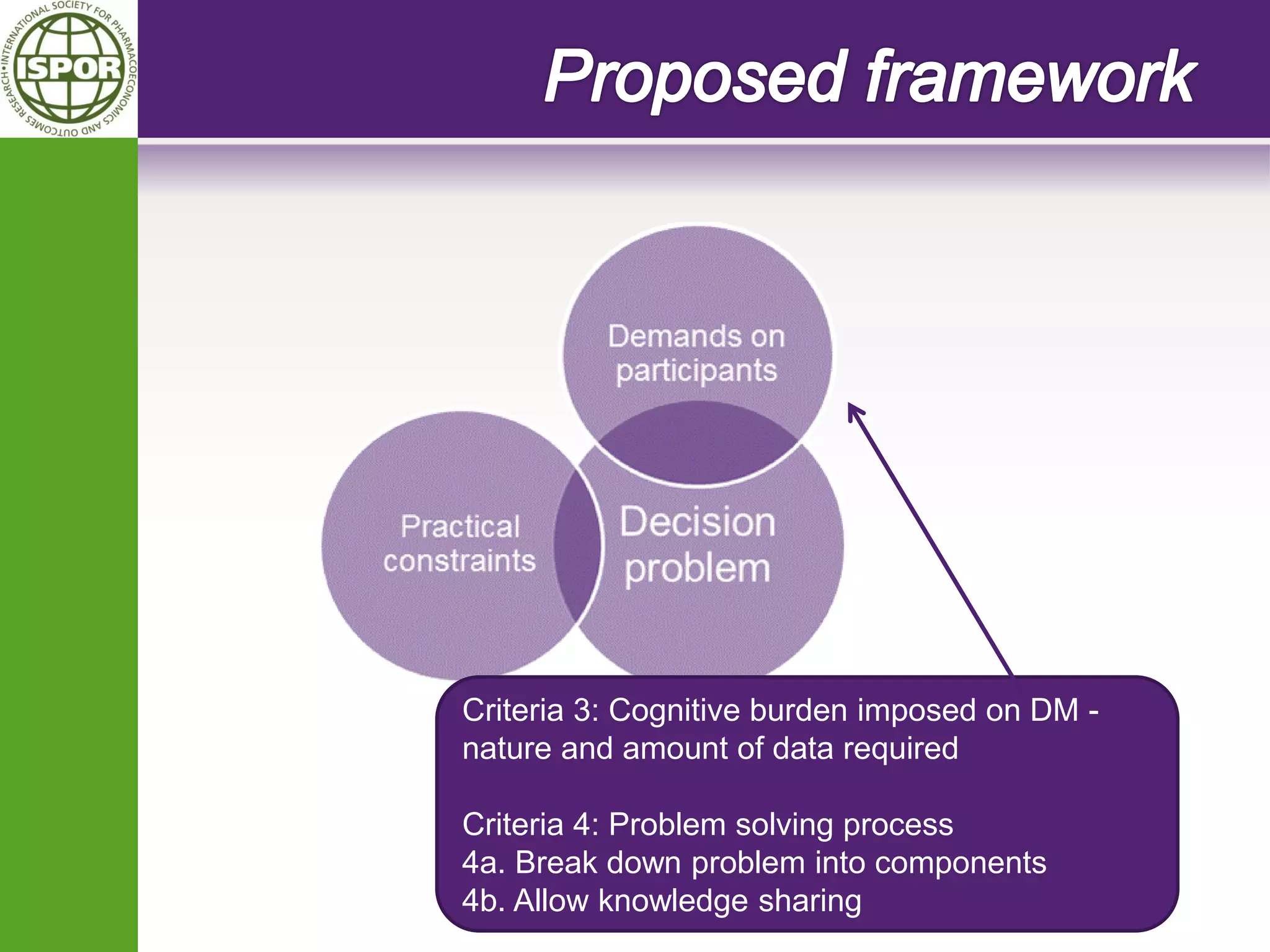

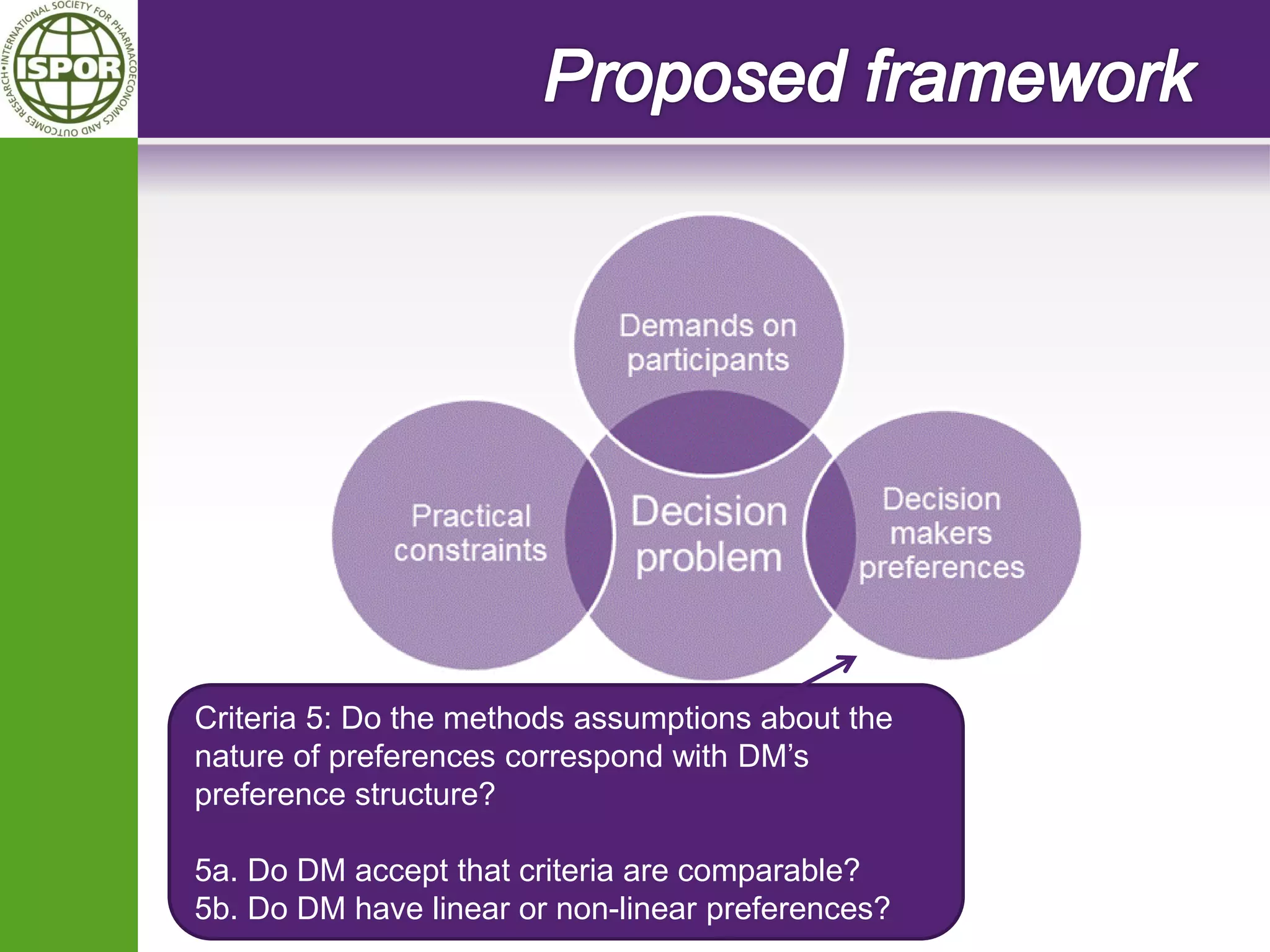

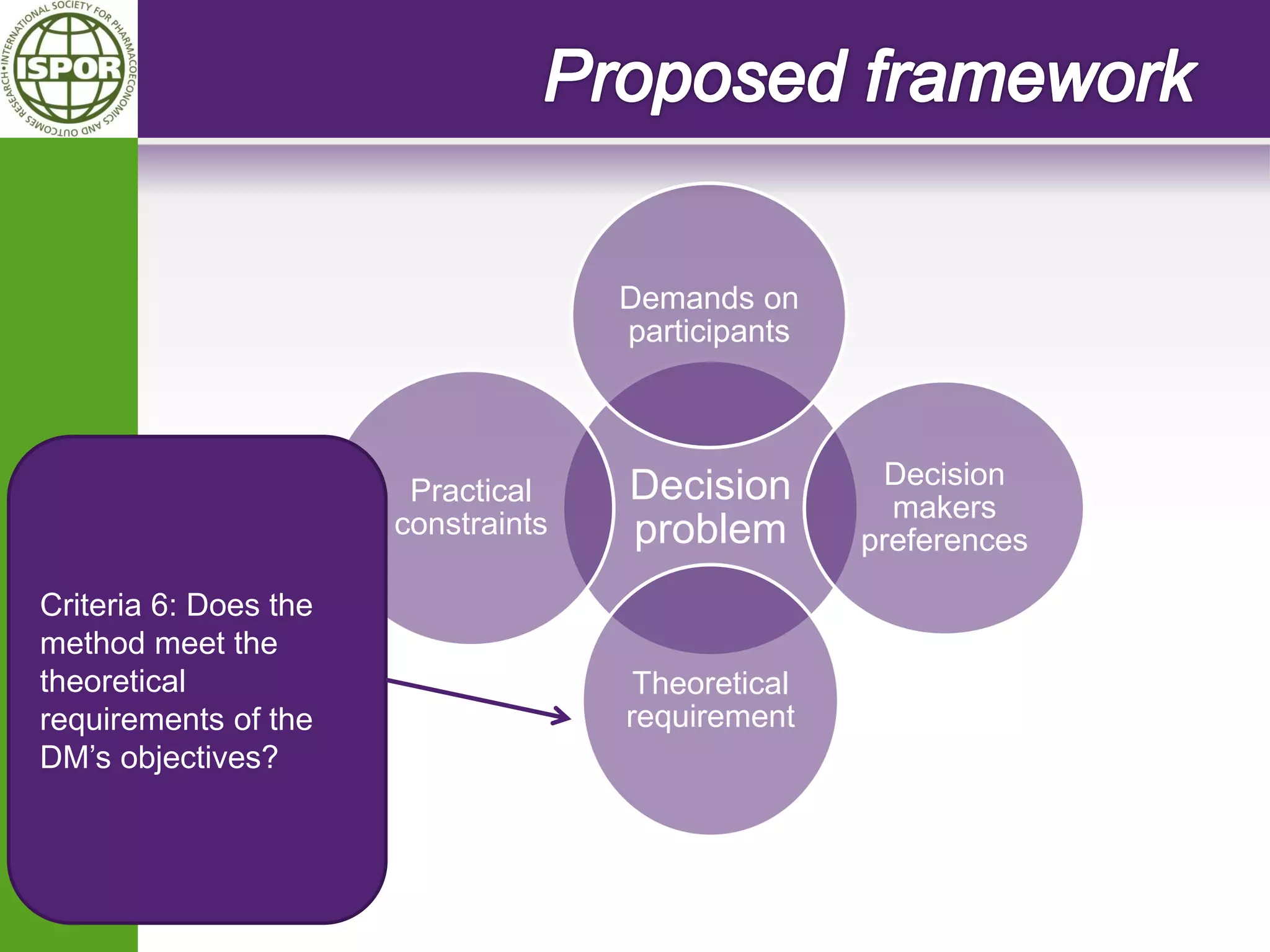

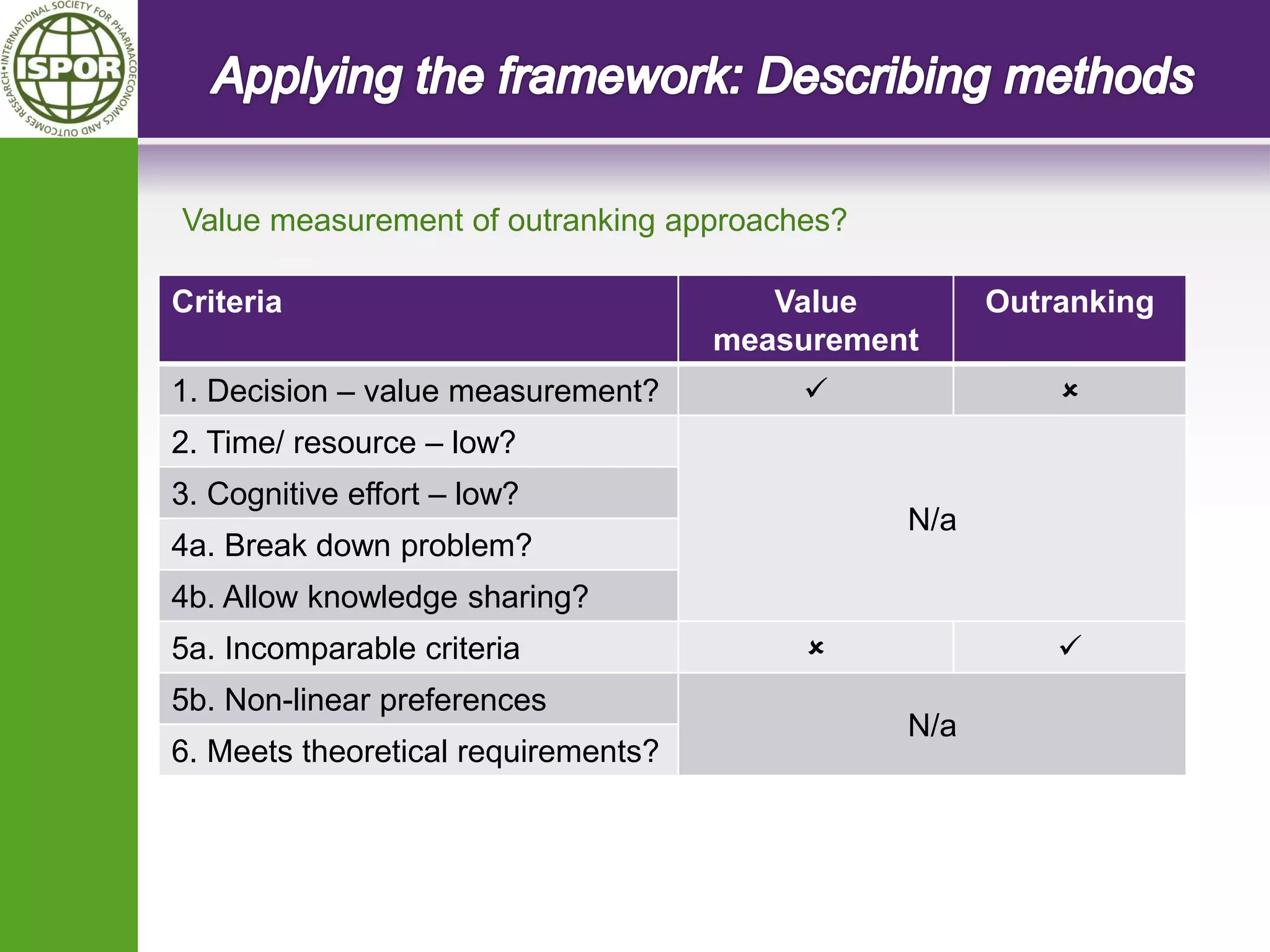

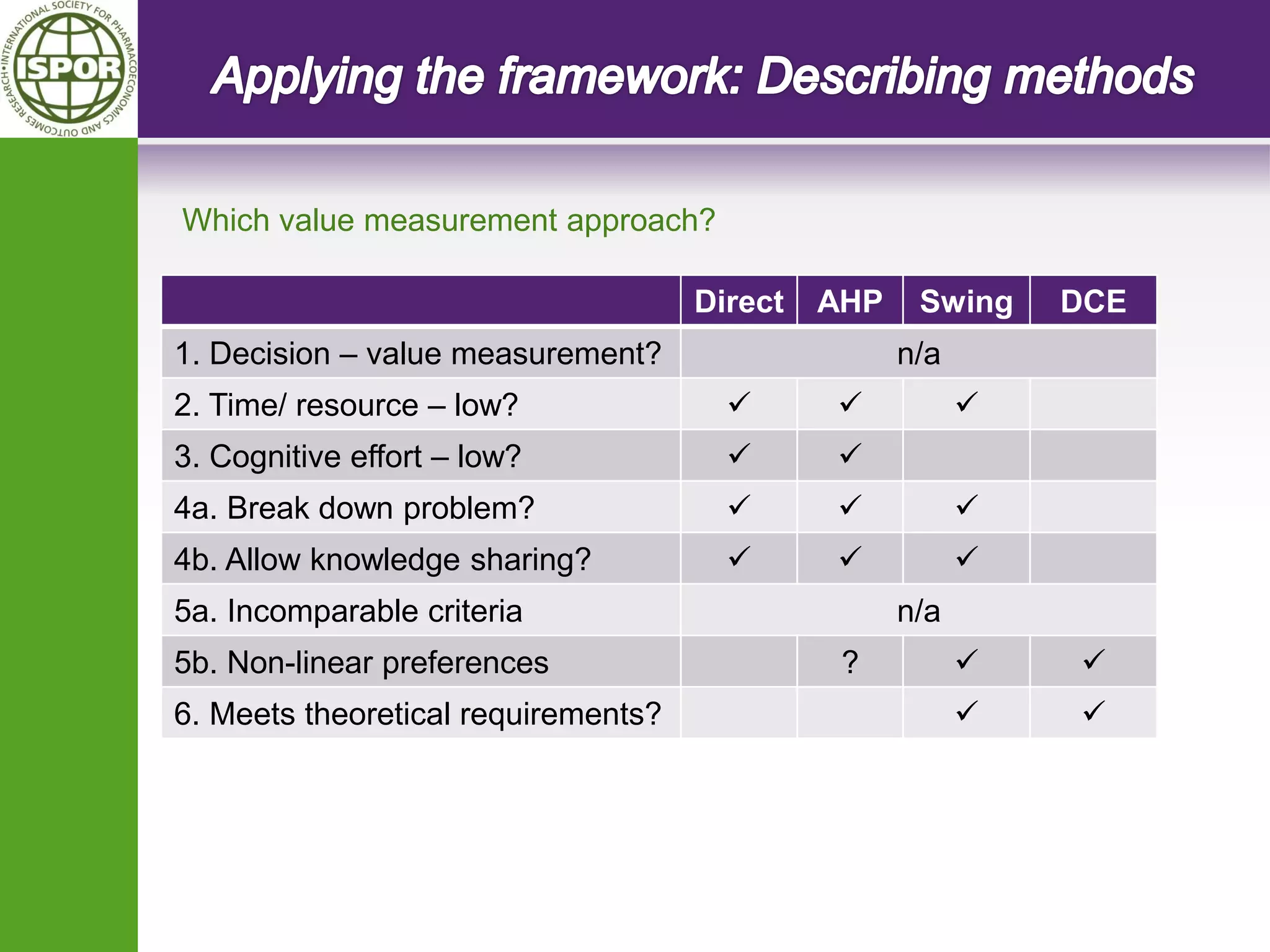

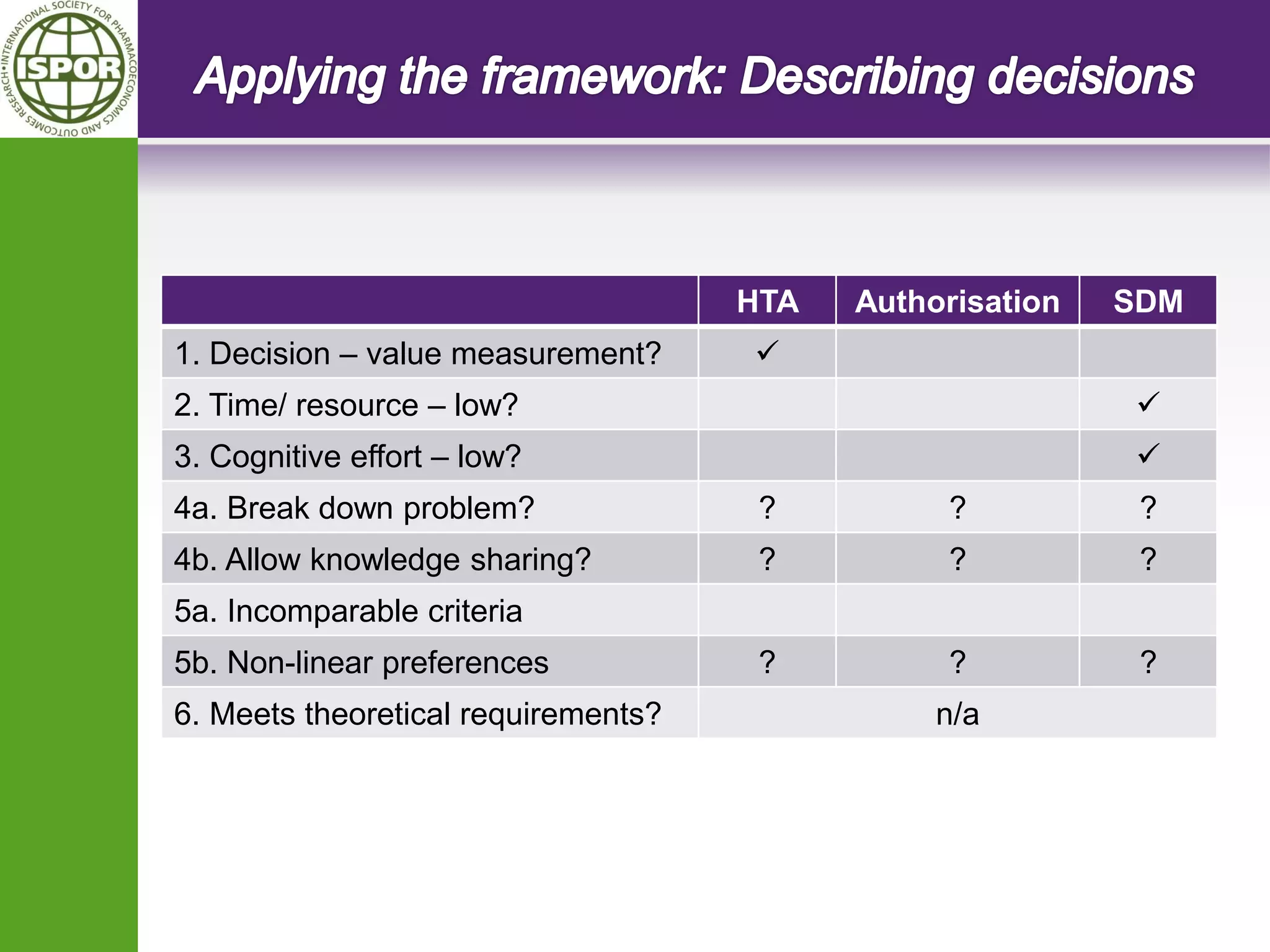

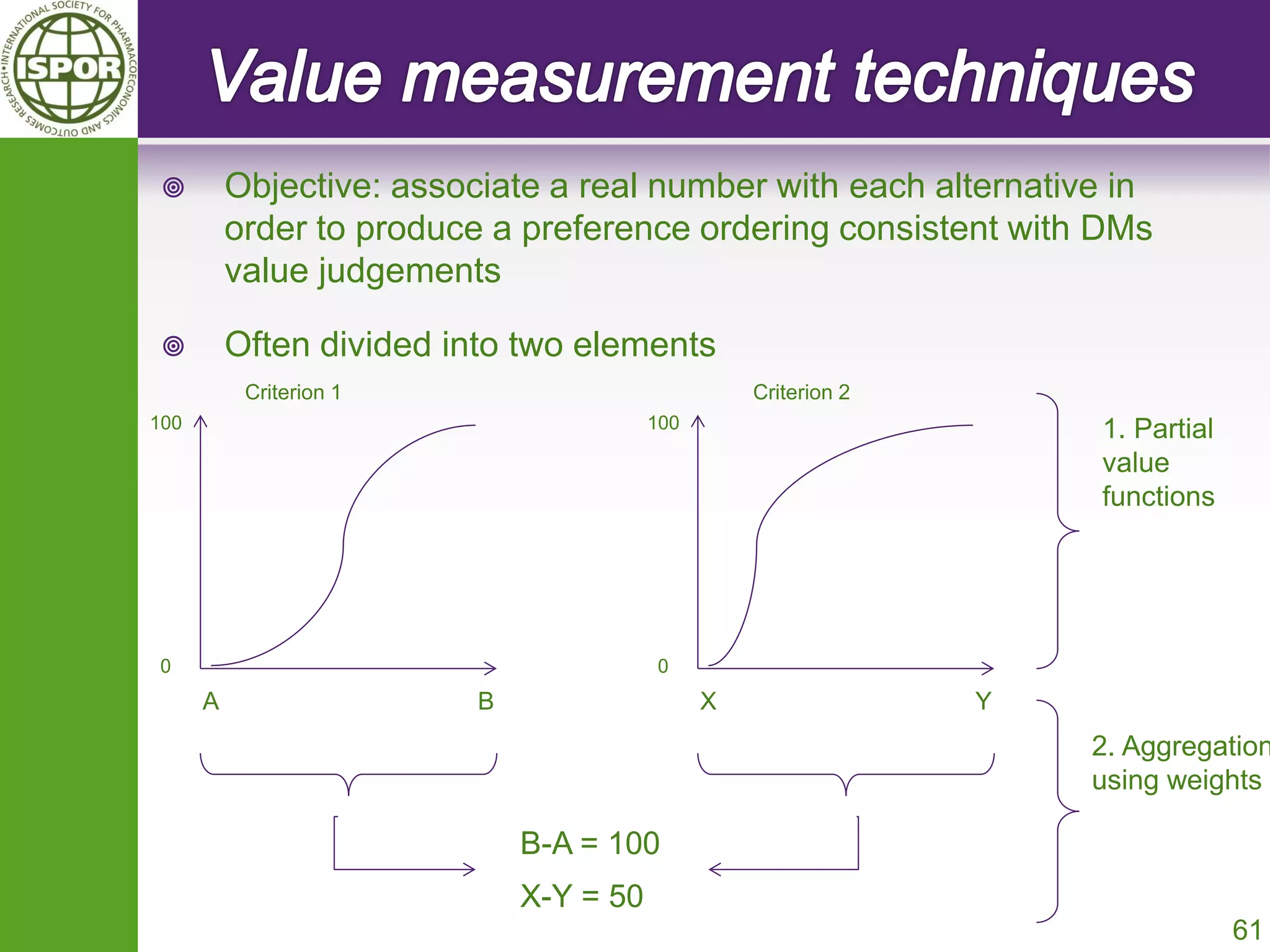

The document discusses the formation of an ISPOR task force to develop guidance on the use of multi-criteria decision analysis (MCDA) in healthcare decision making. The task force will define MCDA, identify different MCDA techniques, and provide guidance on which techniques are best suited to different types of healthcare decisions. It also reviews proposed definitions of MCDA and debates the appropriate scope and focus of the task force's work.