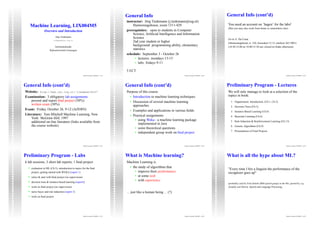

1) This document provides an overview of a machine learning course, including the instructor, schedule, prerequisites, literature, and examination requirements.

2) The course will introduce machine learning techniques and discuss several approaches, illustrated with examples and applications from various fields. Students will complete practical assignments using the Weka machine learning software.

3) Machine learning allows algorithms to improve their performance on a task through experience, much as humans learn from practice. It is used in many complex applications, such as robot control, data analysis, and pattern recognition, across a wide range of fields.
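To make "improving through experience" concrete, here is a minimal Python sketch. It borrows the common task / performance measure / experience framing but is not material from the course. Everything in it is a hypothetical toy chosen for illustration. The task is to classify integers in [0, 1000) as positive or negative. A hidden cutoff of 370 defines the correct labels. The learner, `learn_threshold`, places its decision threshold midway between the largest negative and the smallest positive example it has seen. Performance is measured as accuracy on every integer in the range, and experience is the number of evenly spaced labelled training inputs.

```python
from typing import Dict, List, Sequence, Tuple

# Hypothetical target concept, hidden from the learner: inputs >= 370 are positive.
HIDDEN_CUTOFF = 370


def true_label(x: int) -> int:
    """Label an input according to the hidden (hypothetical) concept."""
    return 1 if x >= HIDDEN_CUTOFF else 0


def learn_threshold(examples: List[Tuple[int, int]]) -> float:
    """Toy learner: put the threshold midway between the largest negative
    and the smallest positive training example (its 'experience')."""
    negatives = [x for x, y in examples if y == 0]
    positives = [x for x, y in examples if y == 1]
    lo = max(negatives, default=0)
    hi = min(positives, default=1000)
    return (lo + hi) / 2


def accuracy(threshold: float, test_inputs: Sequence[int]) -> float:
    """Performance measure: fraction of test inputs classified correctly."""
    correct = sum(
        (1 if x >= threshold else 0) == true_label(x) for x in test_inputs
    )
    return correct / len(test_inputs)


def experiment(sizes: Sequence[int]) -> Dict[int, float]:
    """For each size n, train on n evenly spaced labelled inputs
    and record the resulting test accuracy."""
    test_inputs = range(1000)  # every integer input in [0, 1000)
    results: Dict[int, float] = {}
    for n in sizes:
        train = [(i * 1000 // n, true_label(i * 1000 // n)) for i in range(n)]
        t = learn_threshold(train)
        results[n] = accuracy(t, test_inputs)
        print(f"{n:3d} examples -> threshold {t:6.1f}, test accuracy {results[n]:.3f}")
    return results


results = experiment((2, 5, 10, 20, 100))
```

The script prints one line per training-set size. With these hypothetical settings, test accuracy rises from 0.880 with 2 examples to 0.995 with 20 or more, because additional examples narrow the gap in which the learned threshold can fall. Real learners on noisy data usually improve less cleanly than this.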