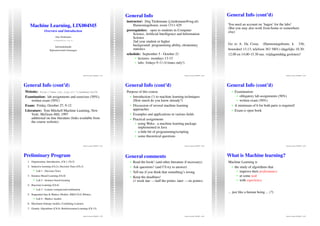

This document provides an overview of a machine learning course. It discusses the schedule, prerequisites, evaluation criteria, and preliminary program topics. The course will cover machine learning techniques including decision trees, instance-based learning, Bayesian learning, sequential data models, and combining learners. Students will complete lab assignments using a machine learning package, and the exam will cover both the lab assignments and theoretical questions. The goal of the course is to introduce students to machine learning approaches and their applications through examples, assignments, and lectures.

![Machine Learning, LIX004M5, slides 10 to 18 of 50](https://image.slidesharecdn.com/machine-learning-lix004m52561/85/Machine-Learning-LIX004M5-2-320.jpg)

What is all the hype about ML?

"Every time I fire a linguist the performance of the recognizer goes up." (Probably) said by Fred Jelinek (IBM speech group) in the 1980s; quoted by, e.g., Jurafsky and Martin, Speech and Language Processing.

Why machine learning?

• data mining: pattern recognition, knowledge discovery, using historical data to improve future decisions, prediction (classification, regression), data description (clustering, summarization, visualization)

• complex applications: tasks we cannot program by hand, (efficient) processing of complex signals

• self-customizing programs: automatic adjustments according to usage, dynamic systems

Typical Data Mining Task

Given:

• 9714 patient records, each describing a pregnancy and birth

• Each patient record contains 215 features

Learn to predict:

• Classes of future patients at high risk for Emergency Cesarean Section

Pattern Recognition

Object detection

Complex applications

Operating robots: ALVINN [Pomerleau] drives 70 mph on highways

Classification

Personal home page? Company website? Educational site?

Automatic customization

Machine learning is growing

Many more applications:

• speech recognition

• robot control

• spam filtering, data sorting

• machine translation

• financial data analysis and market predictions

• handwriting recognition

• data clustering and visualization

• pattern recognition in genetics (e.g. DNA sequences)

Questions to ask

Learning = improve with experience at some task

• What experience?

• What exactly should be learned?

• How shall it be represented?

• What specific algorithm to learn it?

Goal: handle unseen data correctly according to the task (use your knowledge inferred from experience!)
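The "Questions to ask" slide defines learning as improving with experience at some task, with the goal of handling unseen data correctly. The following is a minimal sketch of that idea, not the course's own material. It assumes a made-up two-feature dataset (not the 9714 patient records mentioned earlier) in which class 1 stands in for "high risk", and it uses k-nearest-neighbour classification, one form of instance-based learning. The helpers `make_grid`, `knn_predict`, and `accuracy` are hypothetical names chosen for illustration. "Experience" is the number of stored training records; "unseen data" is a shifted grid that never appears in training.

```python
import math
from collections import Counter


# Hypothetical toy data, NOT the patient records from the slides:
# each record has two numeric features in [0, 1) and a binary class,
# with class 1 ("high risk") when the two features sum to more than 1.0.
def make_grid(n, offset=0.0):
    """Return an n x n grid of (features, label) records at coordinates (i + offset) / n."""
    coords = [(i + offset) / n for i in range(n)]
    return [((x, y), 1 if x + y > 1.0 else 0) for x in coords for y in coords]


def knn_predict(training, query, k=3):
    """Instance-based learning: majority vote among the k nearest stored records."""
    nearest = sorted(training, key=lambda rec: math.dist(rec[0], query))[:k]
    votes = Counter(label for _, label in nearest)
    return votes.most_common(1)[0][0]


def accuracy(training, evaluation, k=3):
    """Fraction of evaluation records whose class is predicted correctly."""
    correct = sum(knn_predict(training, feats, k) == label for feats, label in evaluation)
    return correct / len(evaluation)


# "Experience": two training sets of different sizes.
coarse_train = make_grid(2)          # 4 records, all of class 0
fine_train = make_grid(10)           # 100 records
# "Unseen data": a grid shifted by half a step, disjoint from both training sets.
unseen = make_grid(10, offset=0.5)   # 100 records

print(f"accuracy with 4 training records:   {accuracy(coarse_train, unseen):.2f}")
print(f"accuracy with 100 training records: {accuracy(fine_train, unseen):.2f}")
```

Scoring on records the learner never saw is what the slide's goal asks for. With `k=1` the learner classifies its own training records perfectly, because each record is its own nearest neighbour, so training-set accuracy says little about how well the learned knowledge generalizes.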