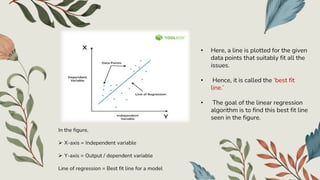

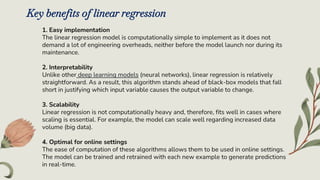

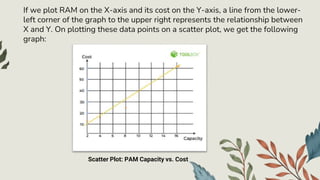

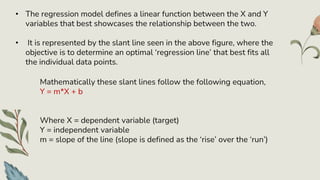

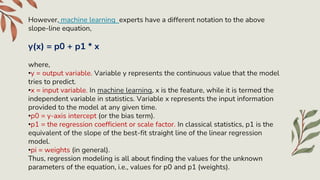

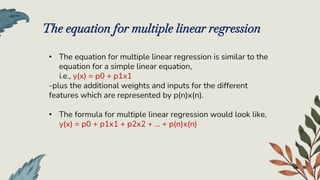

Linear regression is a statistical method that models a linear relationship between one or more independent variables and a dependent variable. It is widely used for predictive analysis in data science and machine learning. The method is easy to implement, interpretable, and scalable, and it is well suited to online settings where the model is updated as new data arrives. It fits a 'best fit' line through the data points, typically by minimizing the sum of squared residuals, and uses that line to predict outcomes. Its mathematical form covers simple regression (one predictor) and multiple regression (several predictors), both of which can be illustrated in Python.
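For simple regression the fitted line has the form cost = intercept + slope * capacity, and ordinary least squares gives slope = S_xy / S_xx and intercept = mean(y) - slope * mean(x). The following is a minimal sketch of that closed form in plain Python, using the four RAM capacity and cost values from the slide's code. The function name `fit_simple_linear` is a hypothetical helper introduced here for illustration, not something from the slides.

```python
# Data points as they appear in the slide's code (capacity, cost).
ram_capacity = [2, 4, 8, 16]
ram_cost = [12, 16, 28, 62]


def fit_simple_linear(xs, ys):
    """Return (slope, intercept) of the ordinary least squares line.

    Hypothetical helper for illustration only.
    """
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # S_xy: sum of products of deviations; S_xx: sum of squared x deviations.
    s_xy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    s_xx = sum((x - mean_x) ** 2 for x in xs)
    slope = s_xy / s_xx
    intercept = mean_y - slope * mean_x
    return slope, intercept


slope, intercept = fit_simple_linear(ram_capacity, ram_cost)
print(f"slope={slope:.4f}, intercept={intercept:.4f}")
# prints: slope=3.6435, intercept=2.1739
```

A library fit such as scikit-learn's `LinearRegression` should agree with these values up to floating-point error, since it solves the same least squares problem.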

![PYTHON CODE FOR FITTING A LINEAR REGRESSION TO THE RAM CAPACITY VS. COST DATASET:

import numpy as np

from sklearn.linear_model import LinearRegression

import matplotlib.pyplot as plt

# Get the data from the image

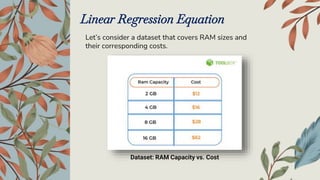

ram_capacity = np.array([2, 4, 8, 16])

ram_cost = np.array([12, 16, 28, 62])

# Create a linear regression model

model = LinearRegression()

# Fit the model to the data

model.fit(ram_capacity[:, np.newaxis], ram_cost)

# Get the slope and intercept of the regression line

slope = model.coef_[0]

intercept = model.intercept_

# Generate the regression line

ram_reg = np.linspace(min(ram_capacity), max(ram_capacity), 100)

cost_reg = slope * ram_reg + intercept

# Plot the data and the regression line

plt.scatter(ram_capacity, ram_cost)

plt.plot(ram_reg, cost_reg, color='red')

plt.xlabel('Capacity')

plt.ylabel('Cost')

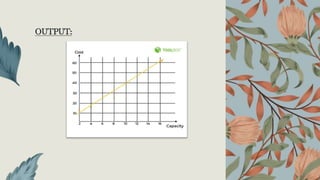

plt.title('Linear Regression of RAM Cost vs. RAM Capacity')

plt.show()](https://image.slidesharecdn.com/fdslinreg-240728113406-74d2b273/85/linear-regression-in-machine-learning-pptx-11-320.jpg)
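Once a line has been fitted to the RAM data, it can be used to estimate the cost at a capacity that was not observed and to check how well the line explains the data. The sketch below assumes NumPy's `np.polyfit` as a stand-in for the scikit-learn model (a degree-1 polynomial fit is the same least squares line). The query value `new_capacity = 12` is a hypothetical input chosen inside the observed range, not a value from the slides.

```python
import numpy as np

# Same data as in the slide's code.
ram_capacity = np.array([2, 4, 8, 16], dtype=float)
ram_cost = np.array([12, 16, 28, 62], dtype=float)

# A degree-1 polynomial fit is ordinary least squares for a straight line.
# np.polyfit returns coefficients from highest degree to lowest.
slope, intercept = np.polyfit(ram_capacity, ram_cost, 1)

# Coefficient of determination: 1 - (residual sum of squares / total sum of squares).
fitted = slope * ram_capacity + intercept
ss_res = np.sum((ram_cost - fitted) ** 2)
ss_tot = np.sum((ram_cost - ram_cost.mean()) ** 2)
r_squared = 1 - ss_res / ss_tot

# Hypothetical query point inside the observed capacity range.
new_capacity = 12.0
estimated_cost = slope * new_capacity + intercept

print(f"R^2 = {r_squared:.3f}")
print(f"Estimated cost at capacity {new_capacity:g}: {estimated_cost:.2f}")
```

With only four points, the R^2 value says little about how the line would generalize, so estimates outside the observed capacity range should be treated with caution.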