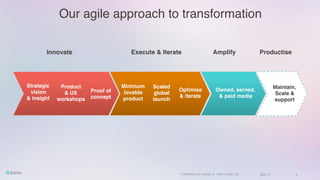

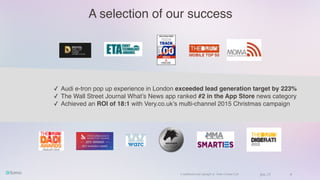

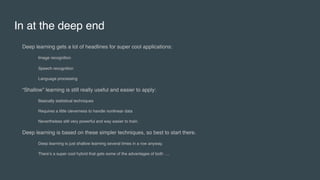

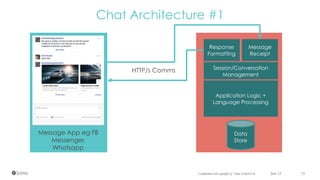

SOMO Custom Ltd. focuses on accelerating mobile transformation through innovative products and agile methodologies for sectors including finance, retail, and automotive. Its campaigns have delivered strong return on investment (ROI) and lead generation, and the company continues to explore advances in areas such as machine learning and messaging platforms. The document outlines SOMO's commitment to innovation, strategic partnerships, and the integration of deep learning technologies to address complex challenges in digital engagement.