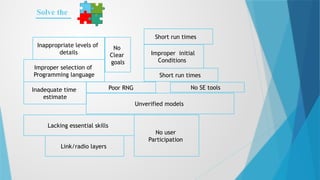

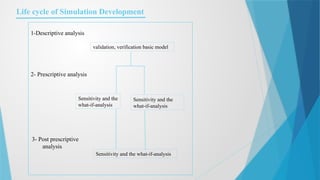

The document discusses common pitfalls in simulation, grouped into general mistakes, causes of inaccurate simulations, and causes of misleading results. It stresses the need for sound methodology and appropriate simulation conditions. Key issues include choosing an inappropriate programming language, relying on unverified models, and involving users too little, all of which can lead to false results. It also outlines a structured development process for simulations, from problem formulation through validation and sensitivity analysis.