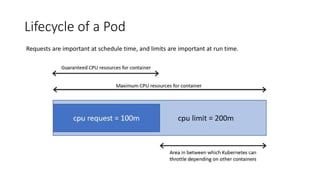

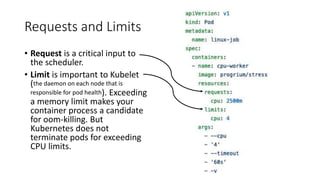

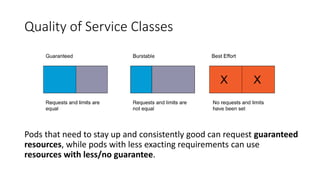

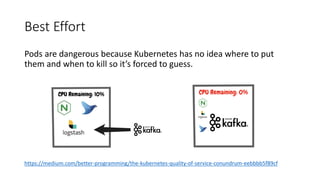

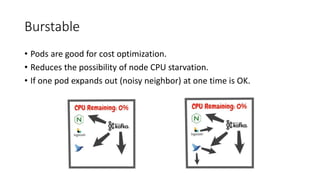

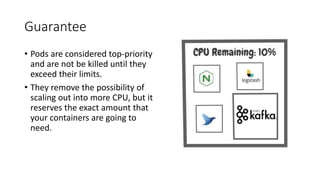

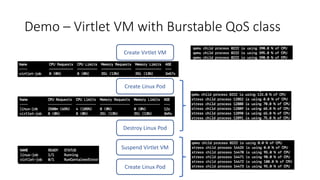

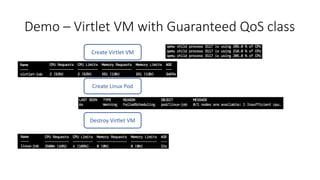

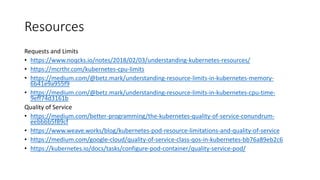

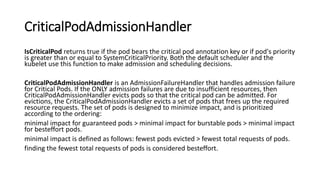

The document discusses Kubernetes resource allocation: the lifecycle of a pod, resource requests and limits, and the quality of service (QoS) classes BestEffort, Burstable, and Guaranteed. It emphasizes that requests matter most during scheduling, while limits matter at runtime, particularly for memory, where they help avoid out-of-memory situations. It also covers CPU management policies and critical pod admission handling, both of which help optimize the placement of sensitive workloads.
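As a rough illustration of how the three QoS classes follow from requests and limits, here is a minimal Python sketch. It is not code from the slides or from Kubernetes itself. The function name `qos_class` and the dictionary layout are hypothetical, and quantities are compared as literal strings, so `"500m"` and `"0.5"` would be treated as different values even though Kubernetes considers them equal.

```python
from typing import Dict, List

# Hypothetical container shape for this sketch:
# {"requests": {"cpu": "500m", "memory": "256Mi"}, "limits": {...}}
Container = Dict[str, Dict[str, str]]


def qos_class(containers: List[Container]) -> str:
    """Classify a pod as BestEffort, Burstable, or Guaranteed.

    Simplified rules, assuming only cpu and memory are considered:
    - BestEffort: no container sets any request or limit.
    - Guaranteed: every container sets a limit for both cpu and memory,
      and each request is either unset (it then defaults to the limit)
      or equal to the limit.
    - Burstable: everything else.
    """
    any_set = False
    guaranteed = True
    for container in containers:
        requests = container.get("requests", {})
        limits = container.get("limits", {})
        for resource in ("cpu", "memory"):
            req = requests.get(resource)
            lim = limits.get(resource)
            if req is not None or lim is not None:
                any_set = True
            # A missing limit, or a request that differs from the limit,
            # rules out the Guaranteed class.
            if lim is None or (req is not None and req != lim):
                guaranteed = False
    if not any_set:
        return "BestEffort"
    return "Guaranteed" if guaranteed else "Burstable"


if __name__ == "__main__":
    pod = [{"requests": {"cpu": "250m", "memory": "128Mi"},
            "limits": {"memory": "256Mi"}}]
    print(qos_class(pod))
```

In this example the pod sets a memory request below its memory limit and has no CPU limit, so the sketch classifies it as Burstable.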