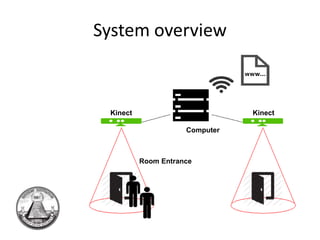

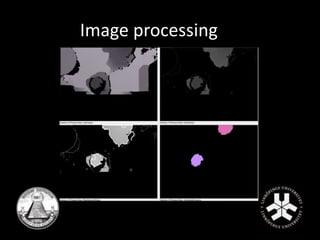

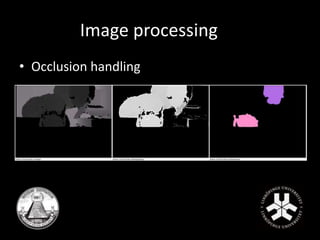

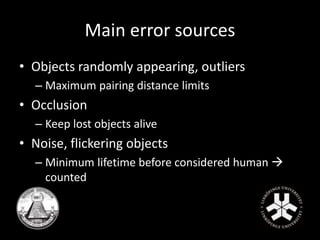

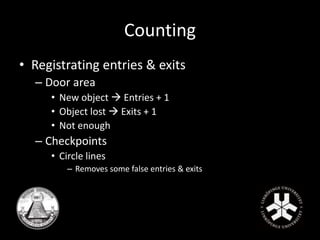

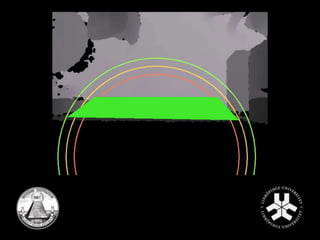

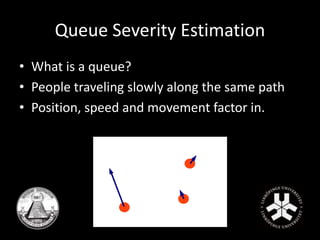

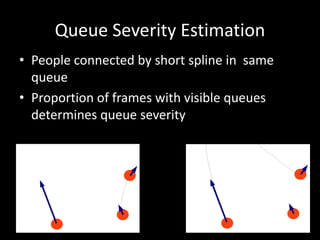

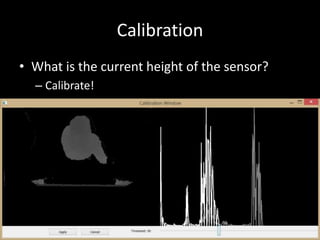

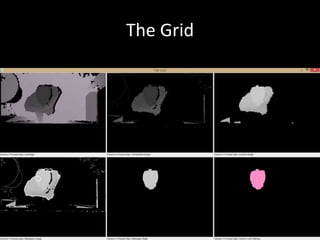

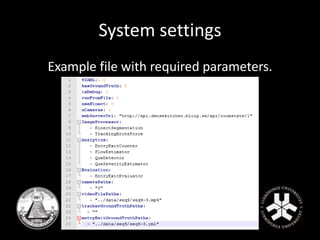

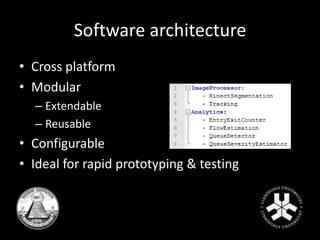

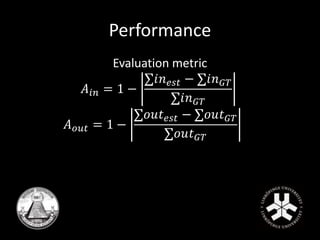

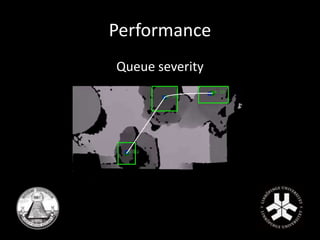

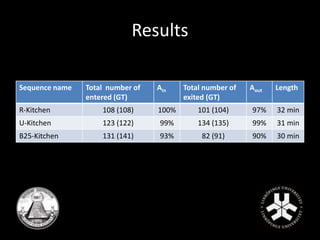

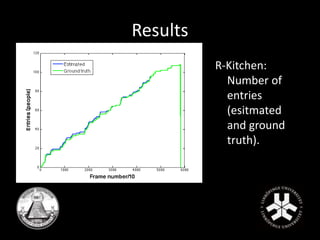

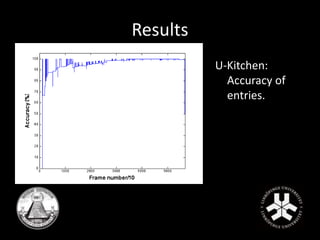

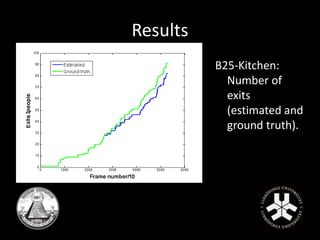

The document describes a project to improve how students monitor kitchen usage by building a low-cost system based on computer vision. The system counts people entering and leaving and estimates how severe the queue is. It is designed to be modular and easy to extend with other sensor inputs, such as the Microsoft Kinect. Team members contributed expertise in software architecture, system integration, and user interfaces to keep the system robust and to support evaluation of its performance.
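The summary does not say how entries and exits are counted. One common computer-vision approach is line crossing, sketched below under stated assumptions. The sketch assumes a hypothetical upstream tracker that assigns each person a stable integer ID and reports the vertical centroid position `y` in image coordinates on every frame. A track moving downward across a virtual line at `line_y` counts as an entry, and a track moving upward counts as an exit. The class name `LineCrossingCounter` and its fields are illustrative only and do not come from the project.

```python
from dataclasses import dataclass, field


@dataclass
class LineCrossingCounter:
    """Hypothetical sketch of entry/exit counting by virtual-line crossing.

    Assumes an upstream tracker supplies (track_id, y) per frame, where y is
    the centroid's vertical image coordinate (growing downward). A point with
    y < line_y is "above" the line; y >= line_y is "below" it.
    """

    line_y: float
    entries: int = 0
    exits: int = 0
    _last_y: dict = field(default_factory=dict)  # track_id -> previous y

    def update(self, track_id: int, y: float) -> None:
        """Record a new position and count a crossing if the side changed."""
        prev = self._last_y.get(track_id)
        self._last_y[track_id] = y
        if prev is None:
            return  # first sighting of this track: nothing to compare against
        was_above = prev < self.line_y
        is_above = y < self.line_y
        if was_above and not is_above:
            self.entries += 1  # moved from above to below: entry
        elif not was_above and is_above:
            self.exits += 1  # moved from below to above: exit

    @property
    def occupancy(self) -> int:
        """Estimated number of people currently inside (never negative)."""
        return max(self.entries - self.exits, 0)
```

For example, with `counter = LineCrossingCounter(line_y=100.0)`, feeding track 1 through `y = 80, 95, 110` registers one entry, and feeding track 2 through `y = 120, 90` registers one exit. The counter only consumes `(track_id, y)` pairs, so it does not depend on where those positions come from. That property is one way a design could stay open to different sensor inputs such as an RGB camera or a depth sensor. This is a design suggestion, not a description of the project's actual architecture.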