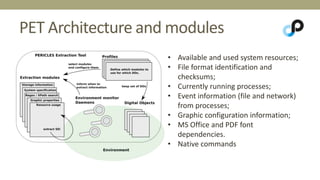

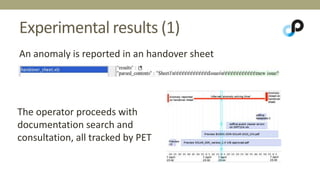

This document discusses significant environment information (SEI), the environment information needed to keep digital objects usable over the long term. SEI is defined as a set of relationships between a digital object and related environment information, each qualified by a purpose and a weight. A tool called the Pericles Extraction Tool collects SEI by monitoring how digital objects are used. In an experiment, the tool records the environment, events, and inferred documentation involved in resolving anomalies in spacecraft payload data. Future work includes improving dependency inference and defining significance weights.
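To make the SEI definition concrete, the following is a minimal sketch of how one such relationship could be represented and queried. It is not the Pericles Extraction Tool's data model: the names `SEIRelation` and `significant_for`, the example file names, the threshold, and the choice of a weight in the range 0 to 1 are all assumptions made for illustration, since defining significance weights is listed as future work.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SEIRelation:
    """One hypothetical SEI relationship: object -> environment item."""
    digital_object: str    # identifier of the digital object
    environment_item: str  # related piece of environment information
    purpose: str           # the use for which the relationship matters
    weight: float          # assumed significance scale in [0, 1]

    def __post_init__(self):
        # The [0, 1] range is an assumption of this sketch, not of the source.
        if not 0.0 <= self.weight <= 1.0:
            raise ValueError("weight must lie in [0, 1]")


def significant_for(relations, digital_object, purpose, threshold=0.5):
    """Return environment items related to `digital_object` for `purpose`
    whose weight is at least `threshold`, most significant first."""
    matches = [
        r for r in relations
        if r.digital_object == digital_object
        and r.purpose == purpose
        and r.weight >= threshold
    ]
    matches.sort(key=lambda r: r.weight, reverse=True)
    return [r.environment_item for r in matches]
```

Qualifying each relationship by purpose means the same environment item can be highly significant for one use of an object and irrelevant for another, which is why the query filters on purpose before comparing weights.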