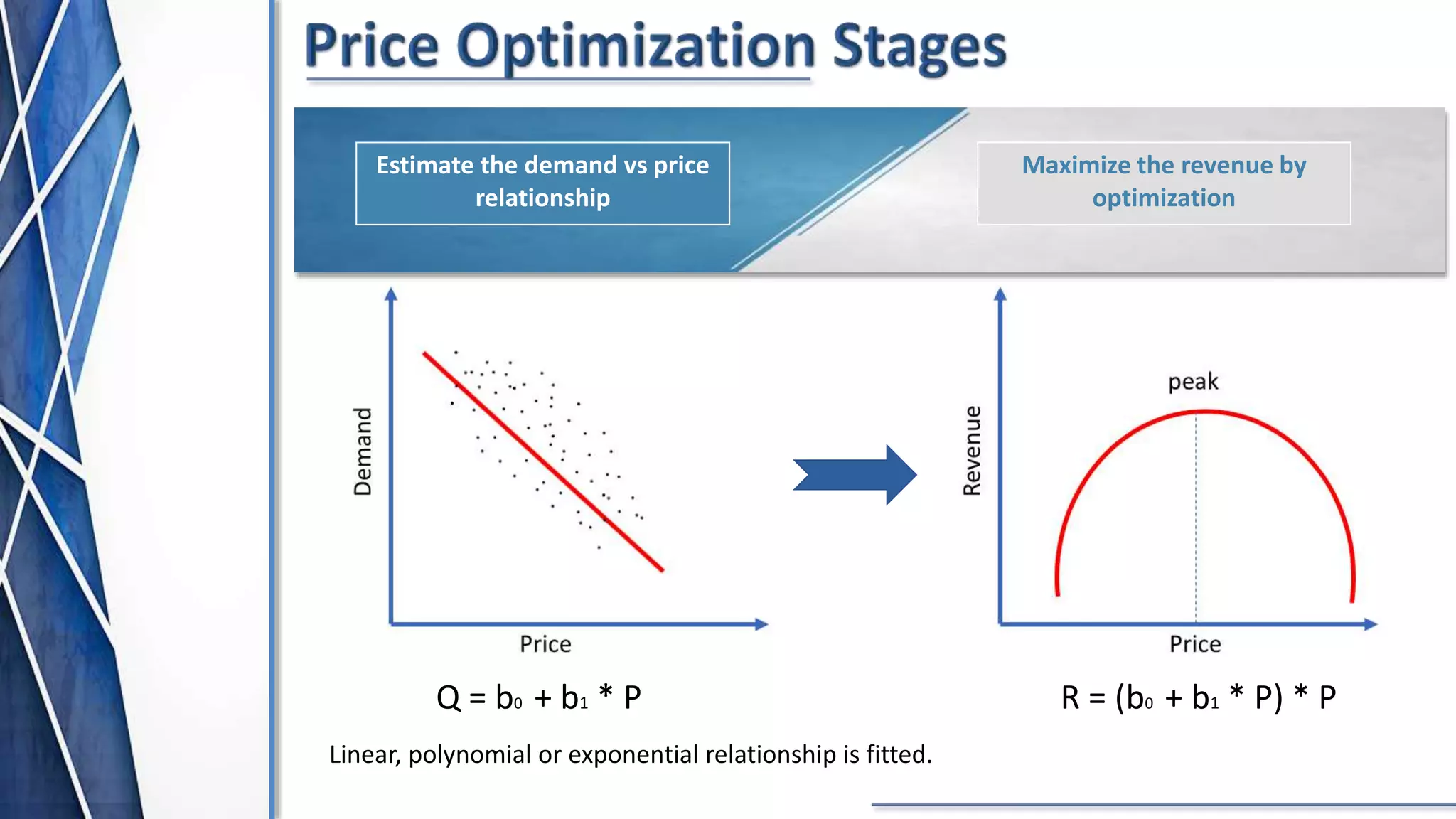

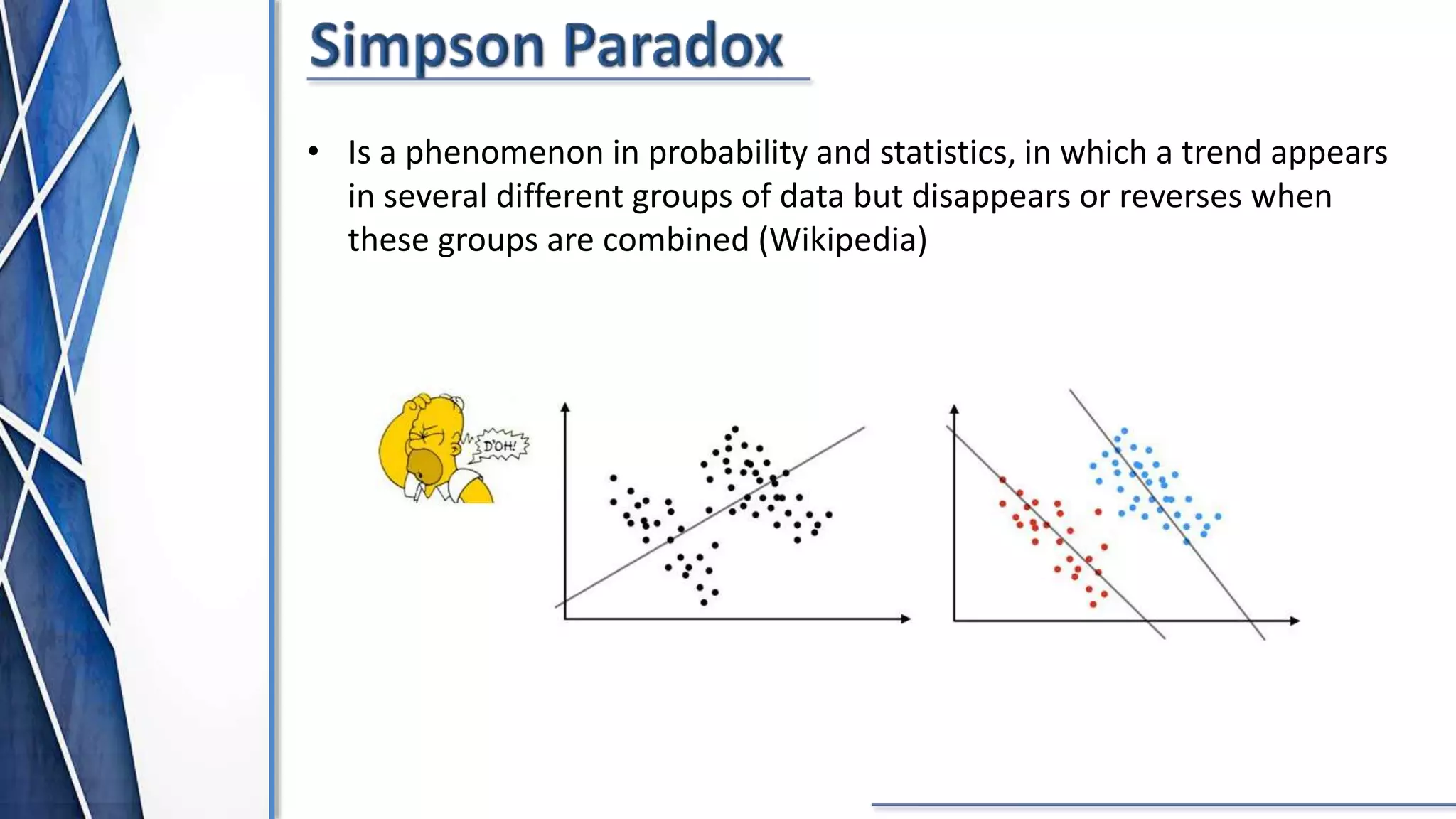

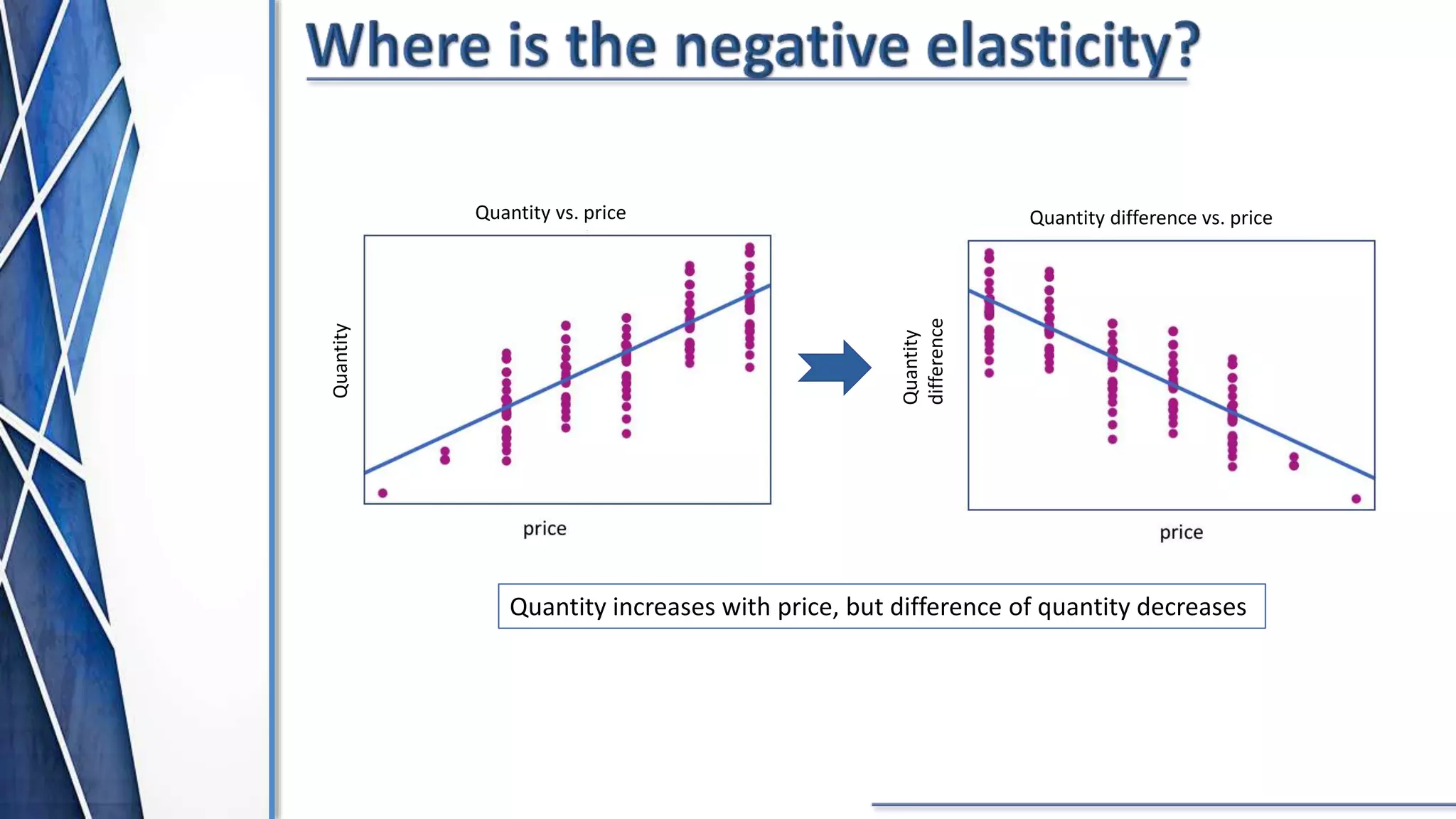

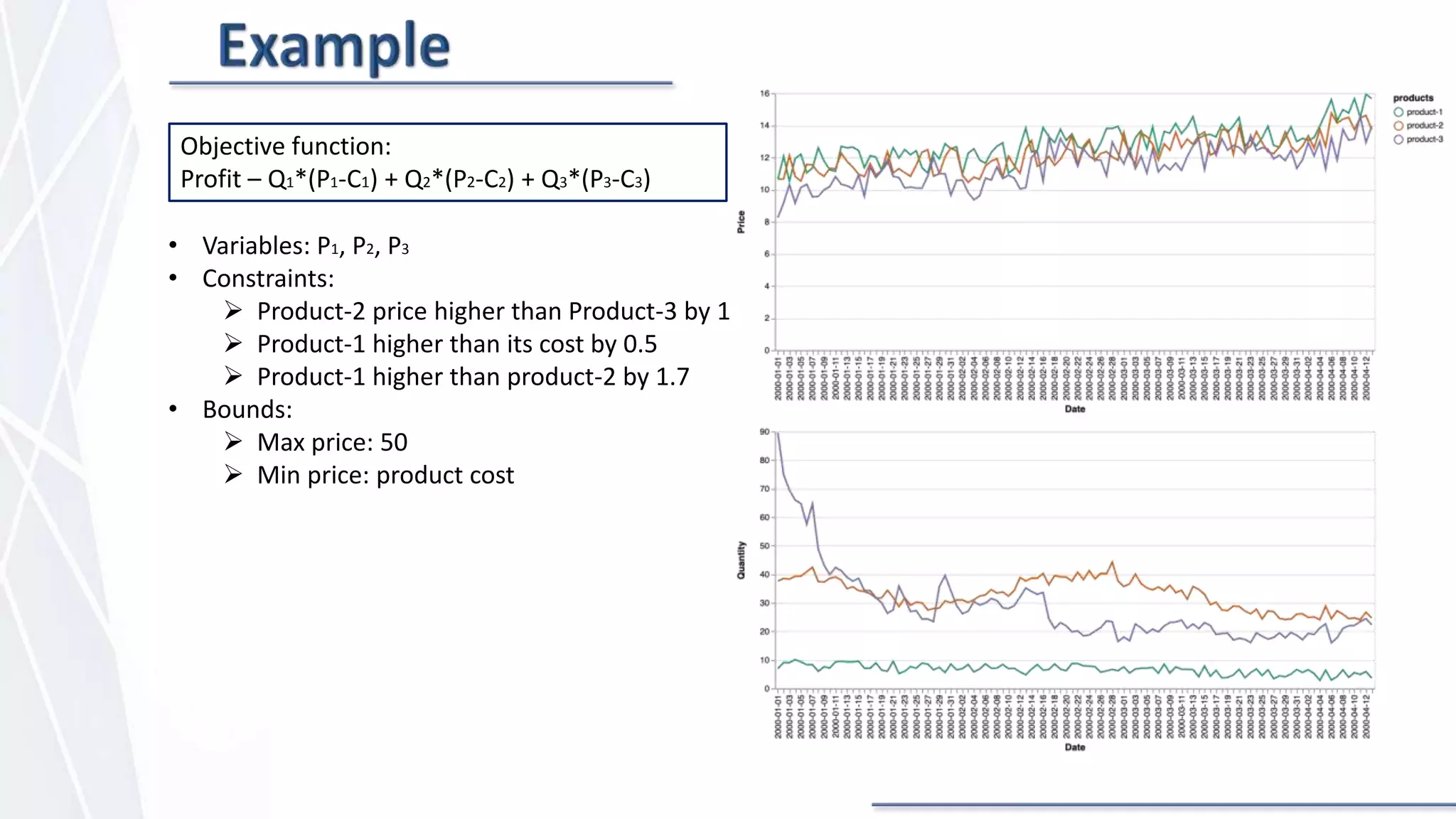

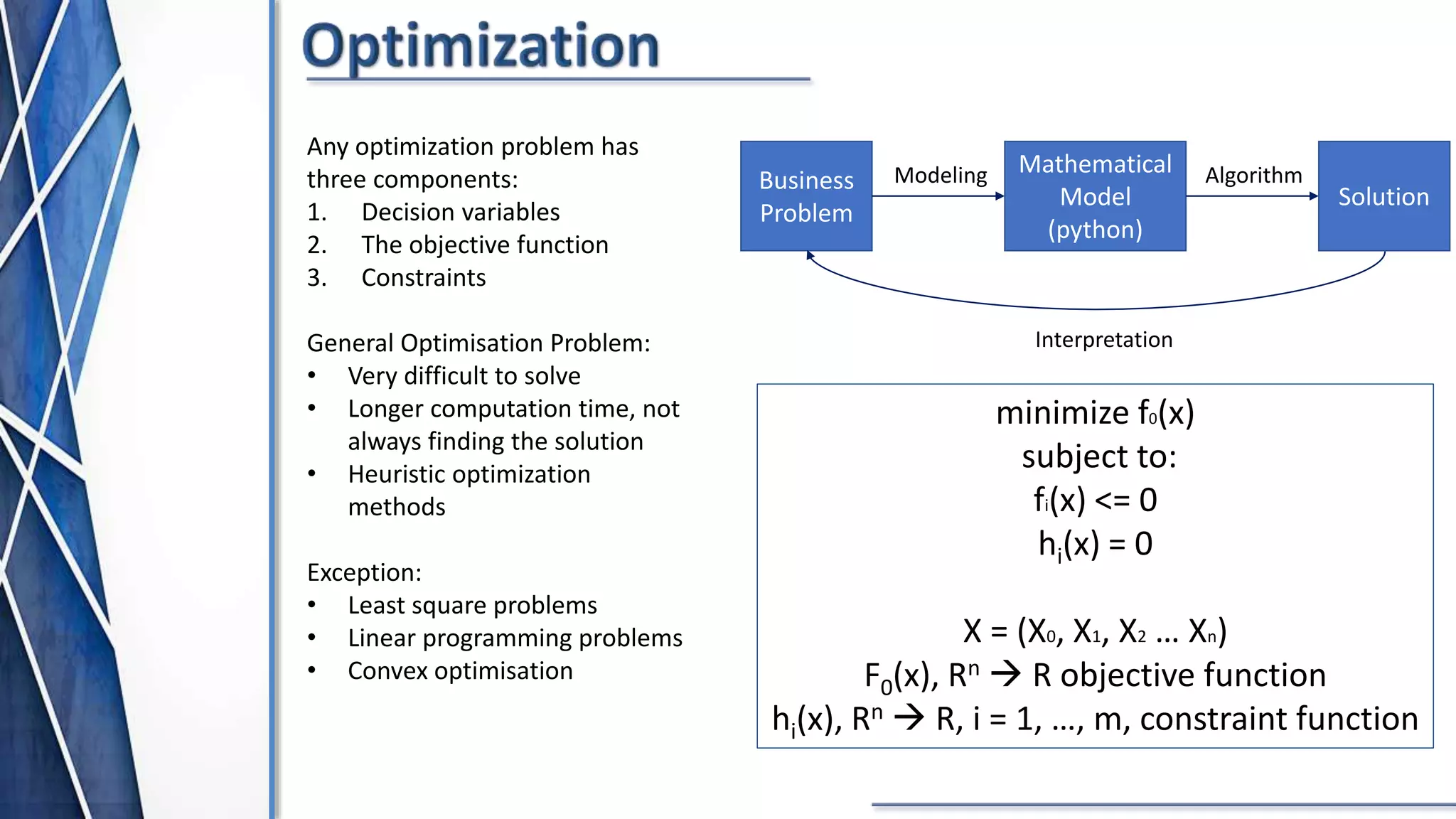

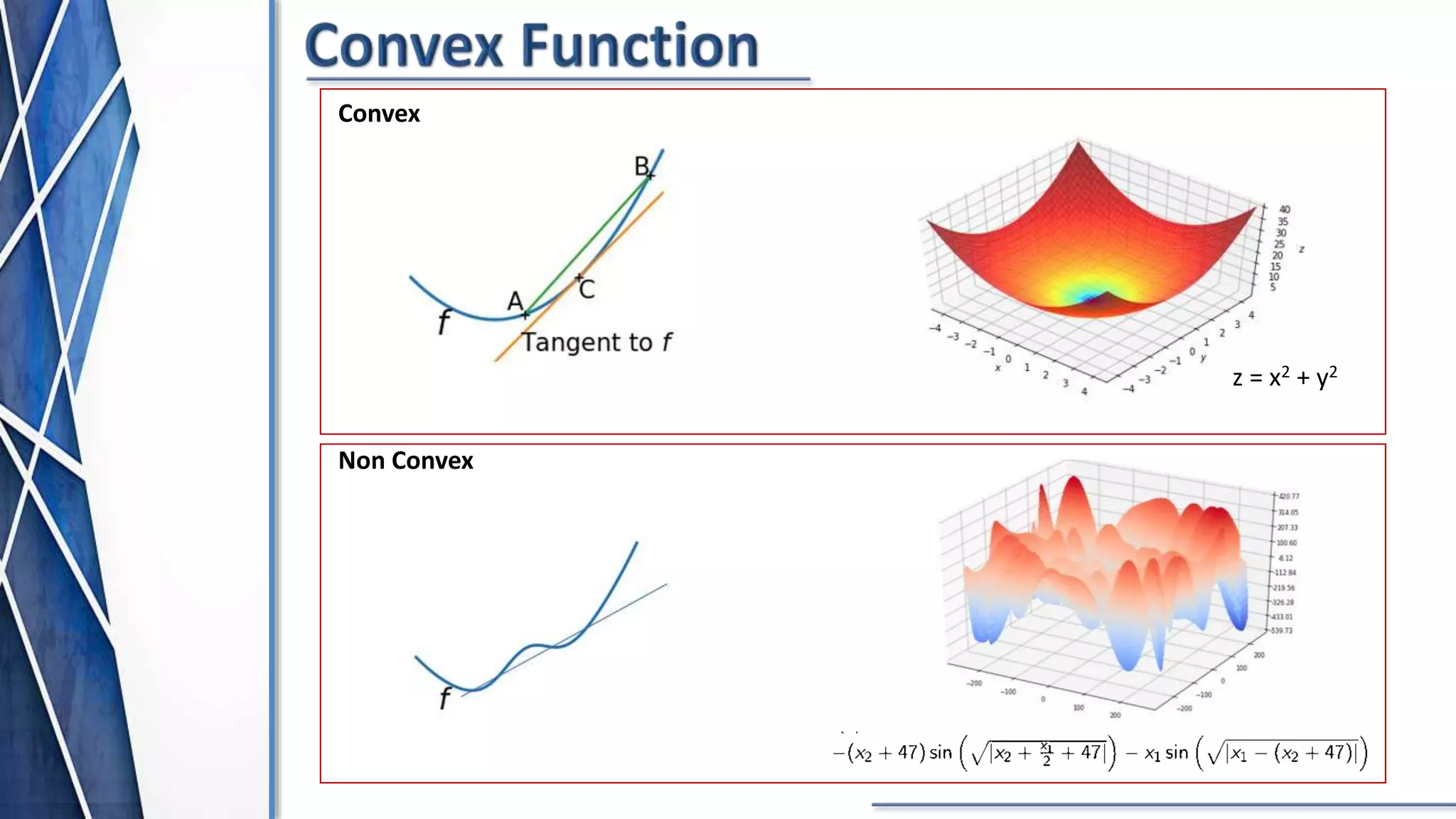

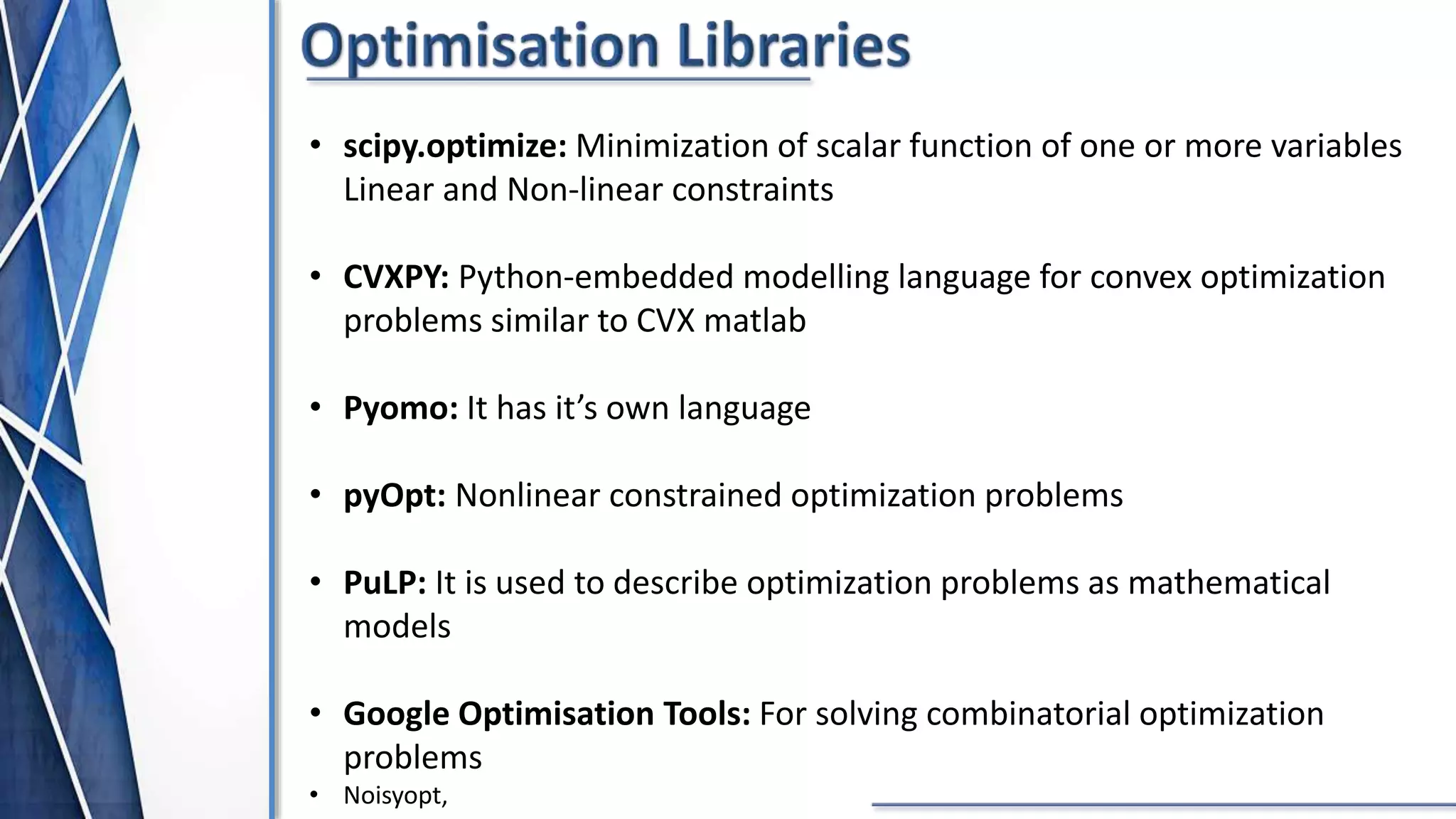

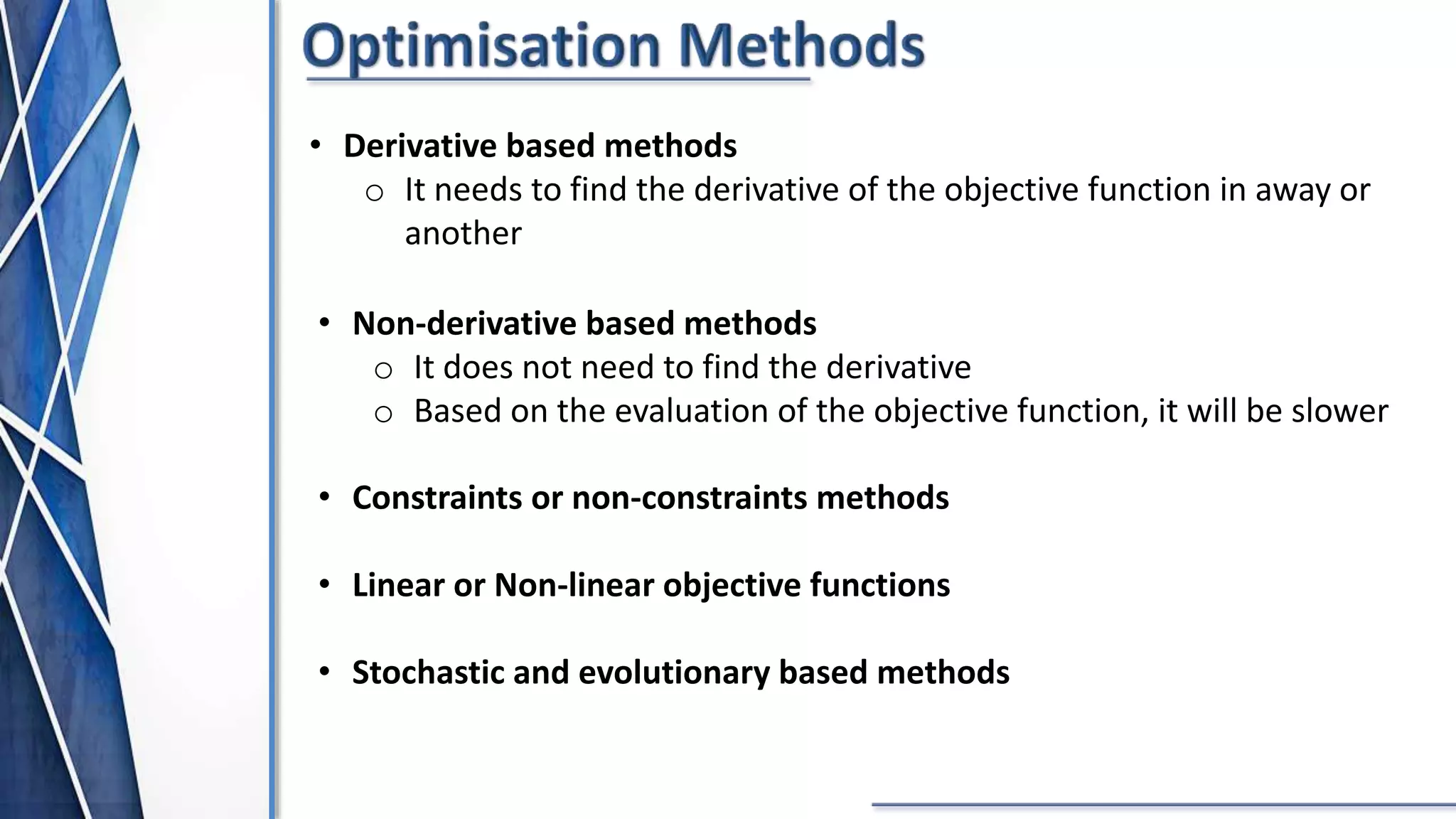

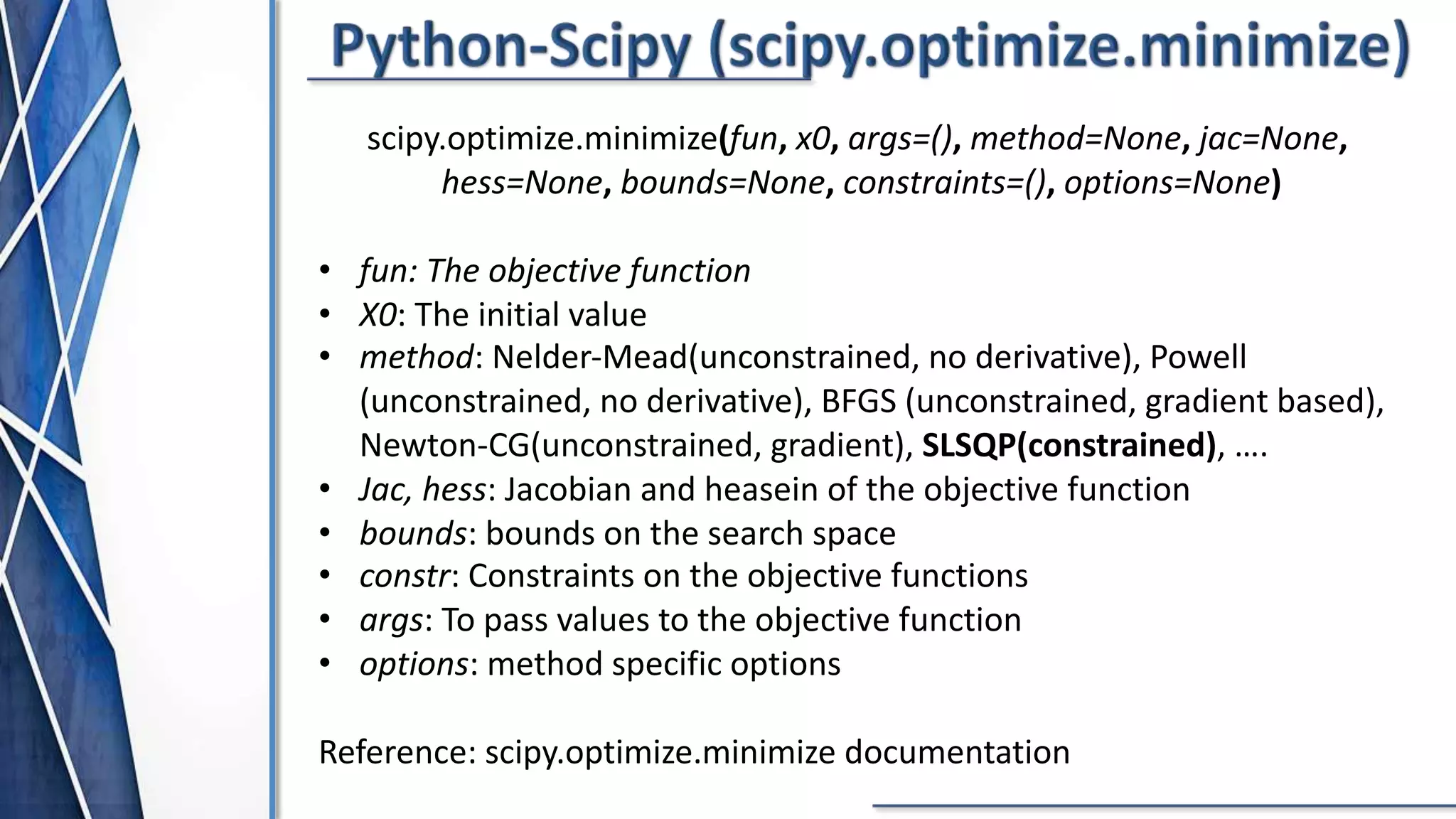

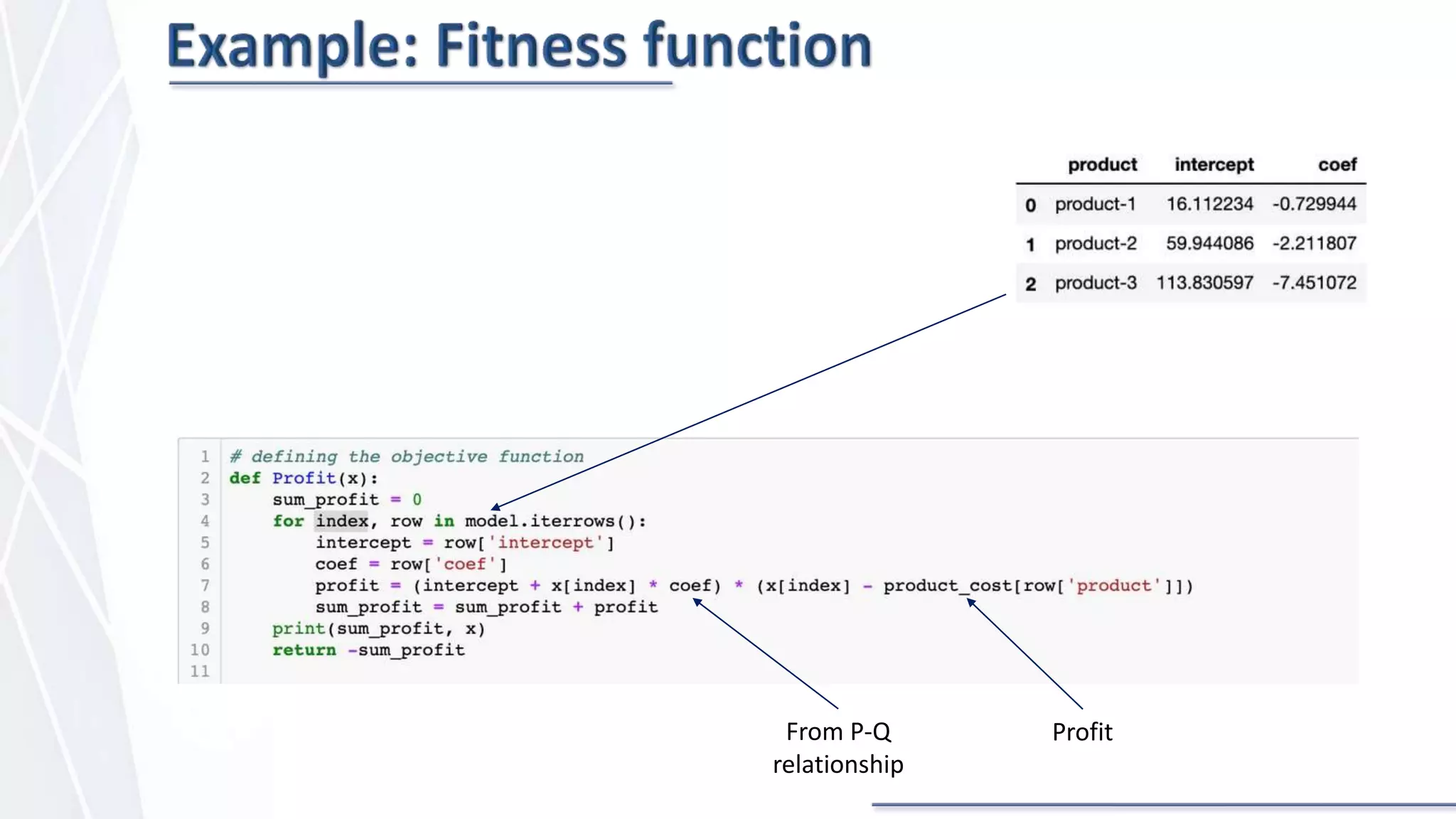

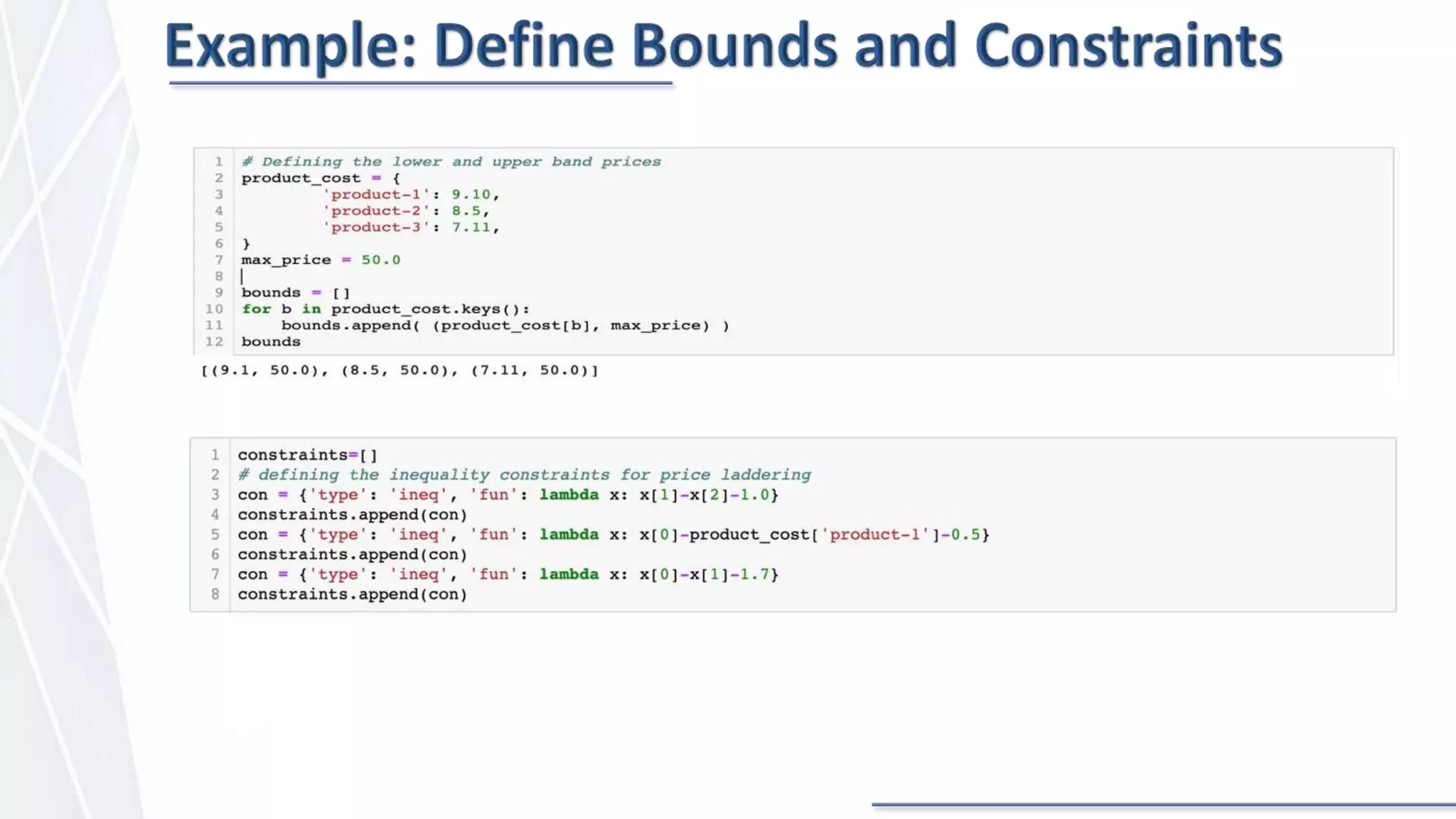

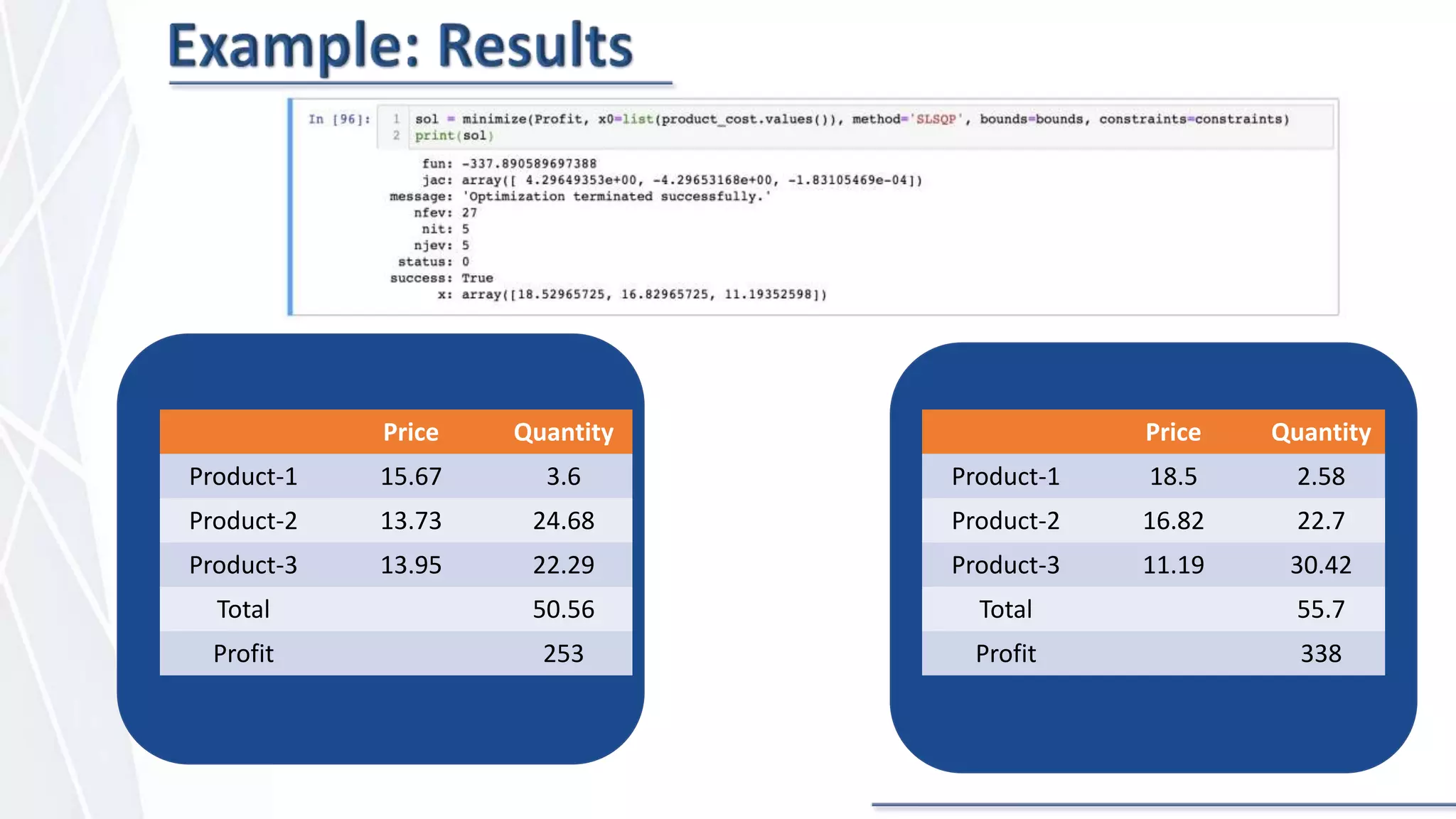

The document discusses price optimization with Python. It describes how companies such as American Airlines and Orbitz have used algorithms to set prices dynamically. It also explains the core ideas of price optimization: estimating a demand curve by fitting the relationship between price and quantity sold, and then choosing the price that maximizes revenue. Finally, it covers optimization tools in Python, such as `scipy.optimize`, including the SLSQP (Sequential Least Squares Programming) method for constrained problems. Overall, the document is an introduction to solving price optimization problems in Python.
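The workflow summarized above can be sketched in a few lines. This is a minimal illustration, not the method from the slides. It assumes a linear demand curve and uses made-up sales data, a hypothetical `capacity` limit, and hypothetical price bounds. It fits the demand curve with `numpy.polyfit` and then maximizes revenue with `scipy.optimize.minimize` using `method="SLSQP"`, which accepts both bounds and inequality constraints.

```python
import numpy as np
from scipy.optimize import minimize

# Hypothetical observations: prices charged and units sold at each price.
prices = np.array([10.0, 12.0, 14.0, 16.0, 18.0, 20.0])
quantities = np.array([100.0, 90.0, 80.0, 70.0, 60.0, 50.0])

# Fit a linear demand curve q = a + b * p by least squares.
# np.polyfit returns coefficients from highest degree down: [b, a].
b, a = np.polyfit(prices, quantities, deg=1)


def demand(p):
    """Predicted units sold at price p under the fitted linear model."""
    return a + b * p


def negative_revenue(x):
    # minimize() minimizes, so return -revenue in order to maximize revenue.
    p = x[0]
    return -(p * demand(p))


# Hypothetical supply limit: predicted demand must not exceed capacity.
# An "ineq" constraint requires fun(x) >= 0.
capacity = 60.0
constraints = [{"type": "ineq", "fun": lambda x: capacity - demand(x[0])}]

result = minimize(
    negative_revenue,
    x0=[15.0],                # starting guess for the price
    method="SLSQP",
    bounds=[(5.0, 30.0)],     # hypothetical allowed price range
    constraints=constraints,
)

best_price = result.x[0]
print(f"fitted demand: q = {a:.1f} + ({b:.1f}) * p")
print(f"optimal price: {best_price:.2f}, revenue: {-result.fun:.2f}")
```

With these made-up numbers the fitted curve is q = 150 - 5p, so revenue is 150p - 5p². Without the capacity limit, revenue peaks at p = 15, where 75 units are sold. Capping demand at 60 units forces p ≥ 18, so the solver should settle near p = 18 with revenue 1080. Swapping in a nonlinear demand model (for example, constant elasticity) only changes `demand`; the SLSQP call stays the same.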