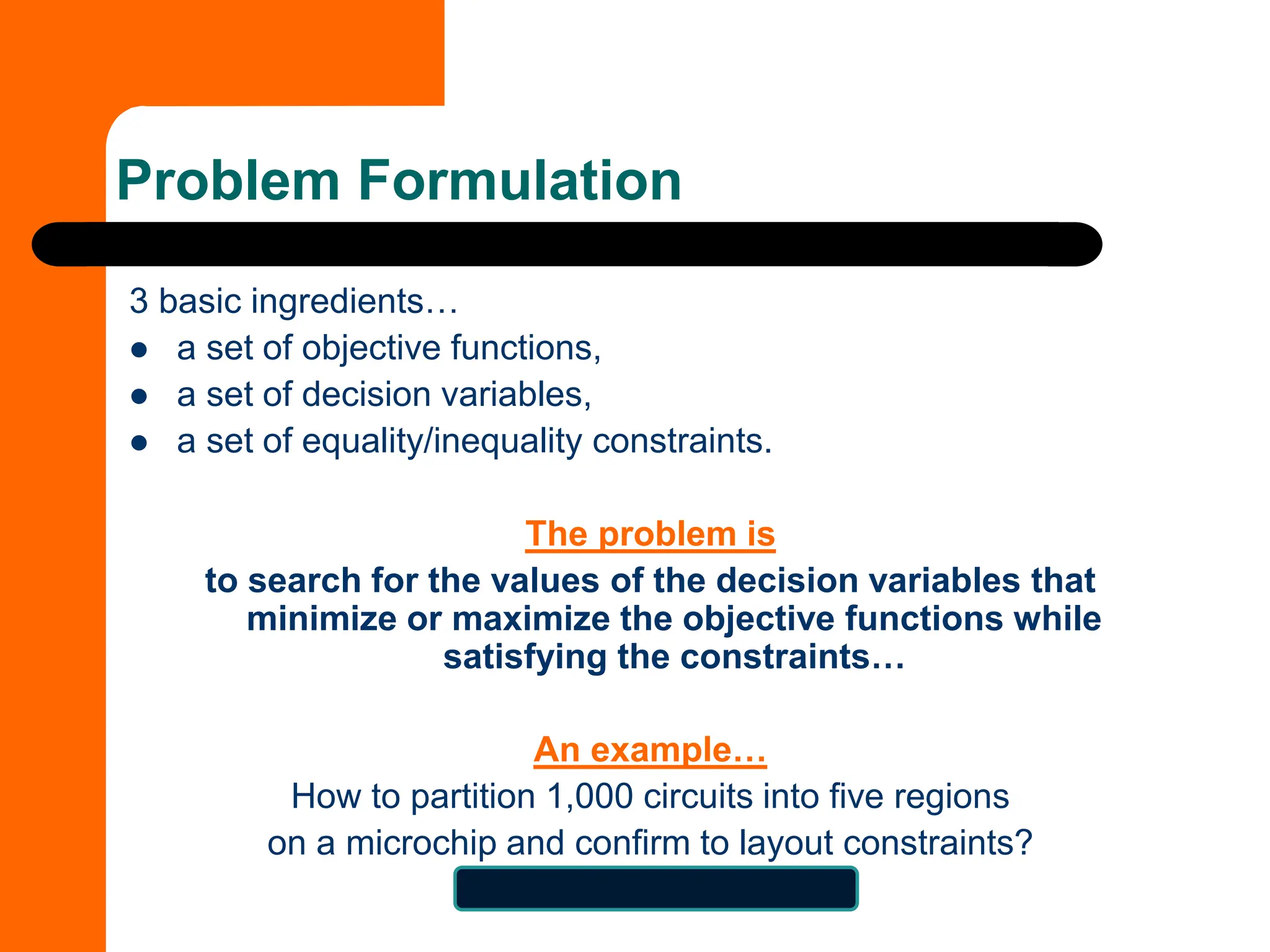

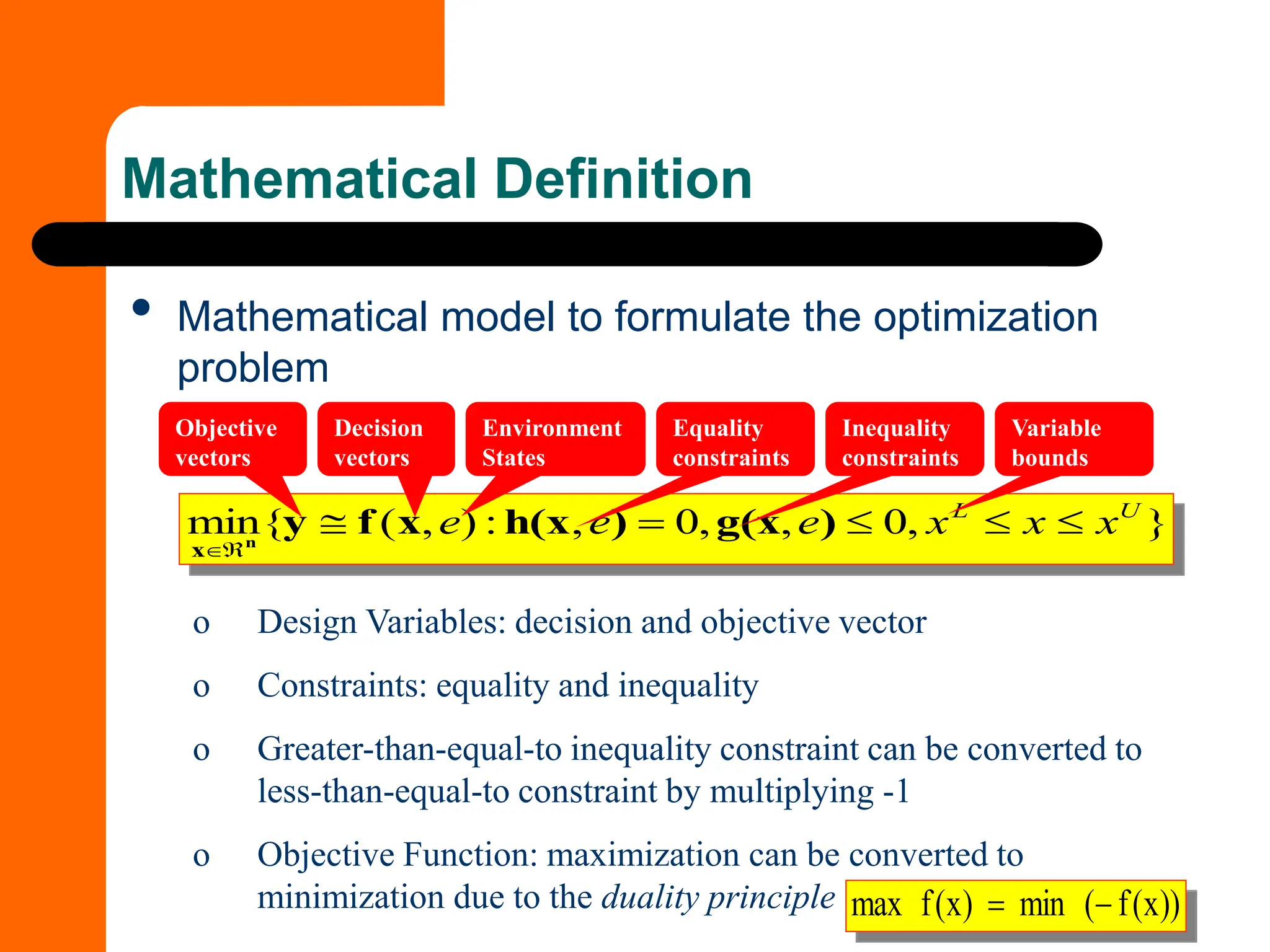

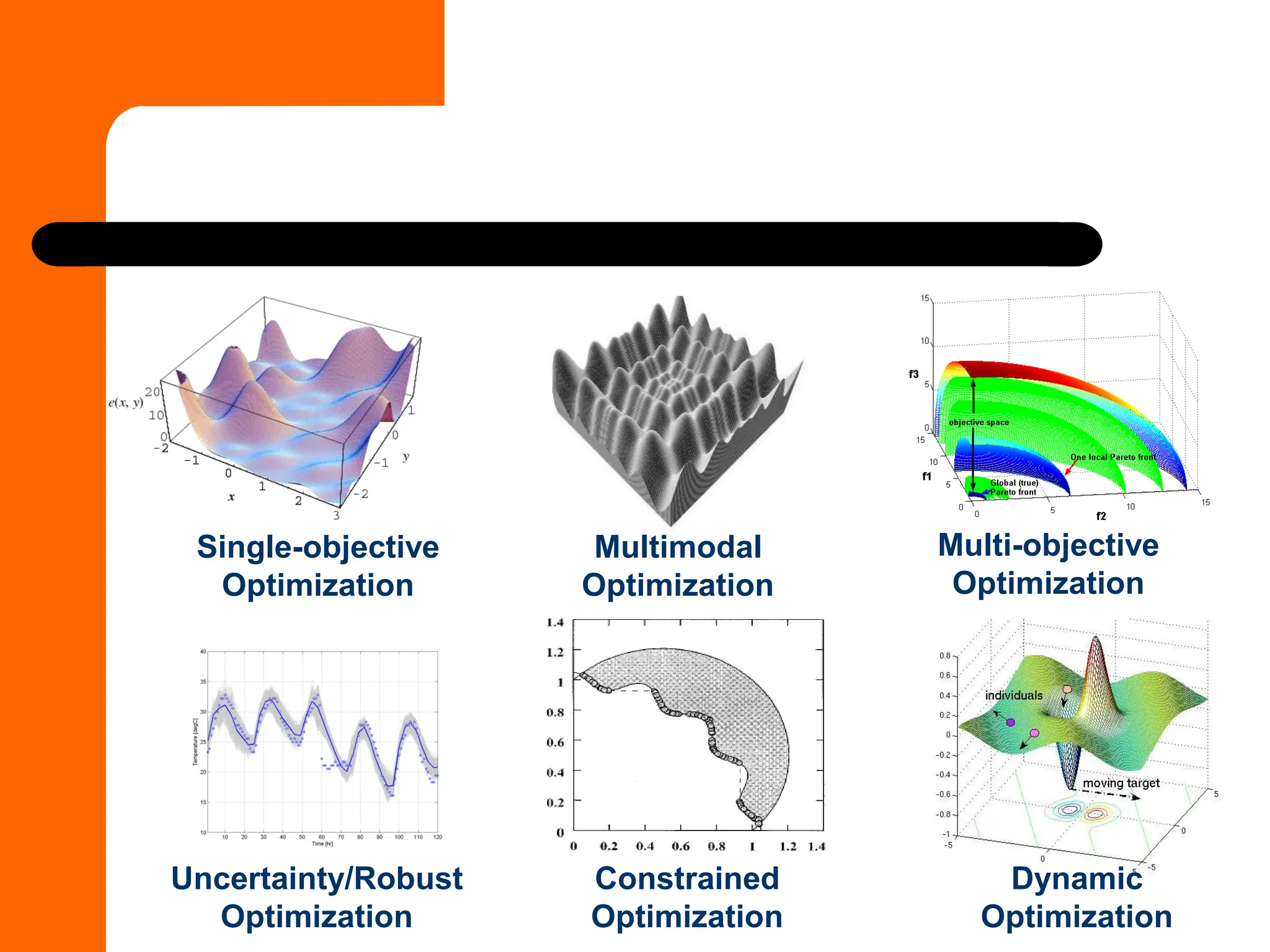

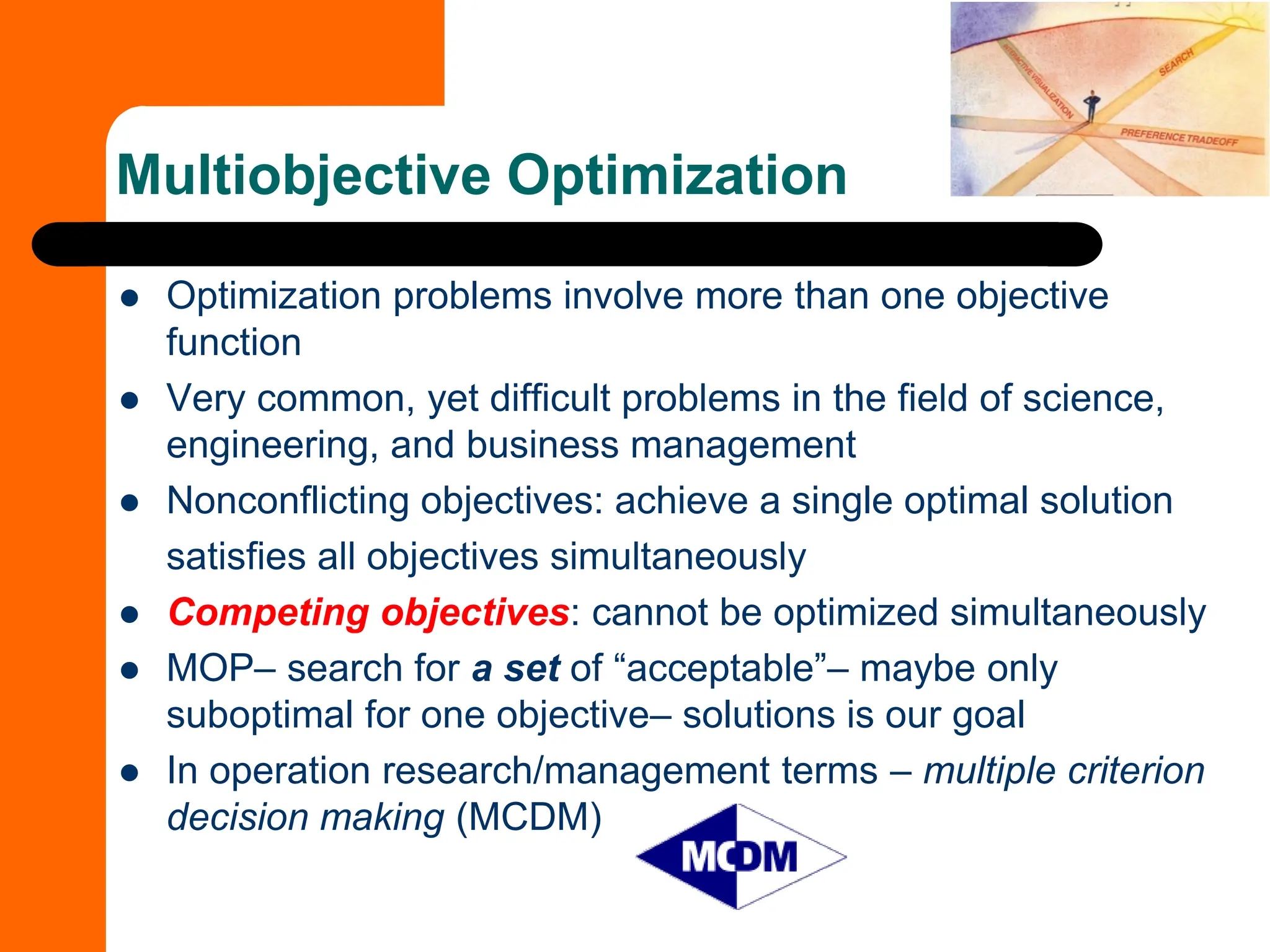

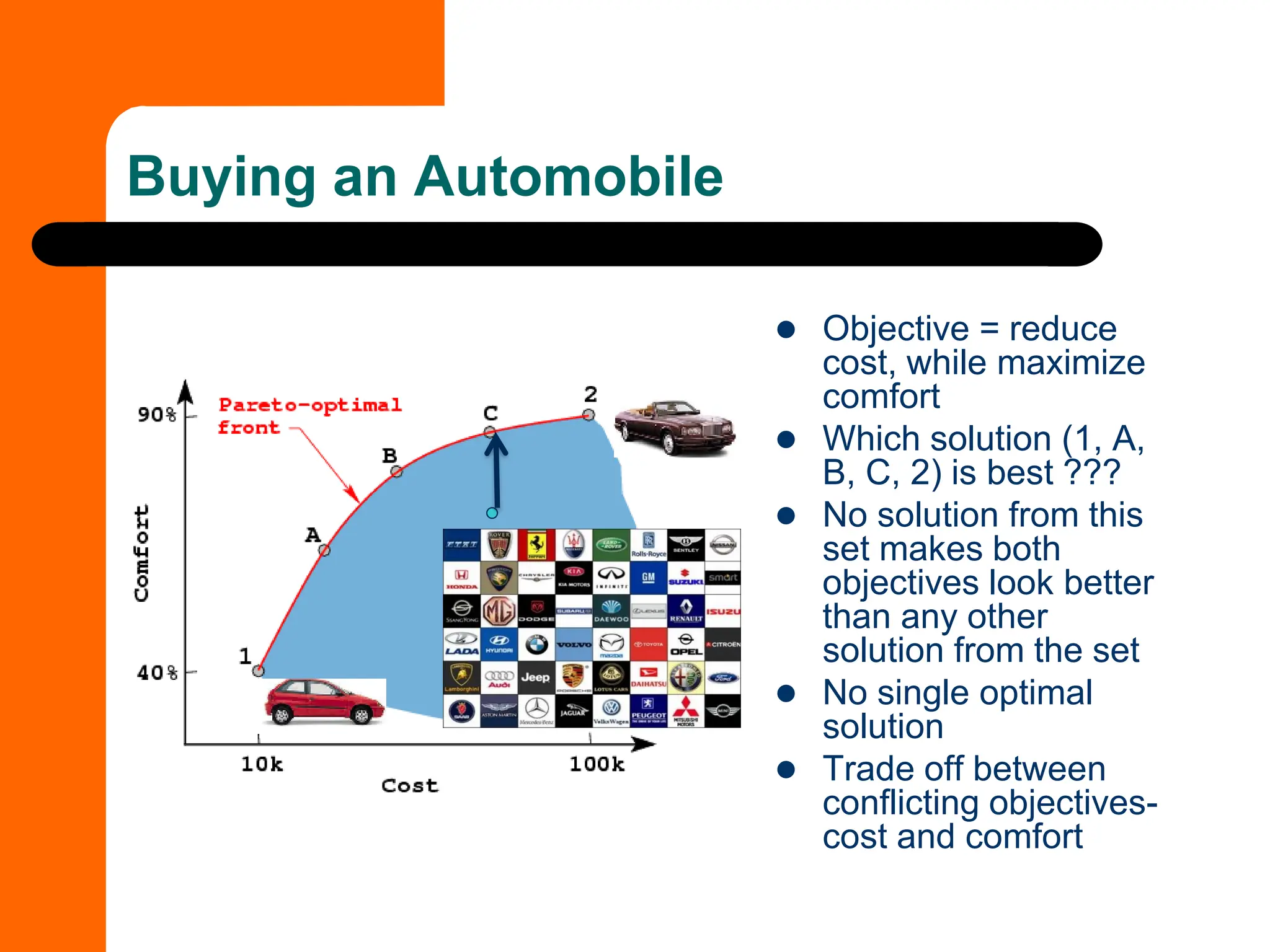

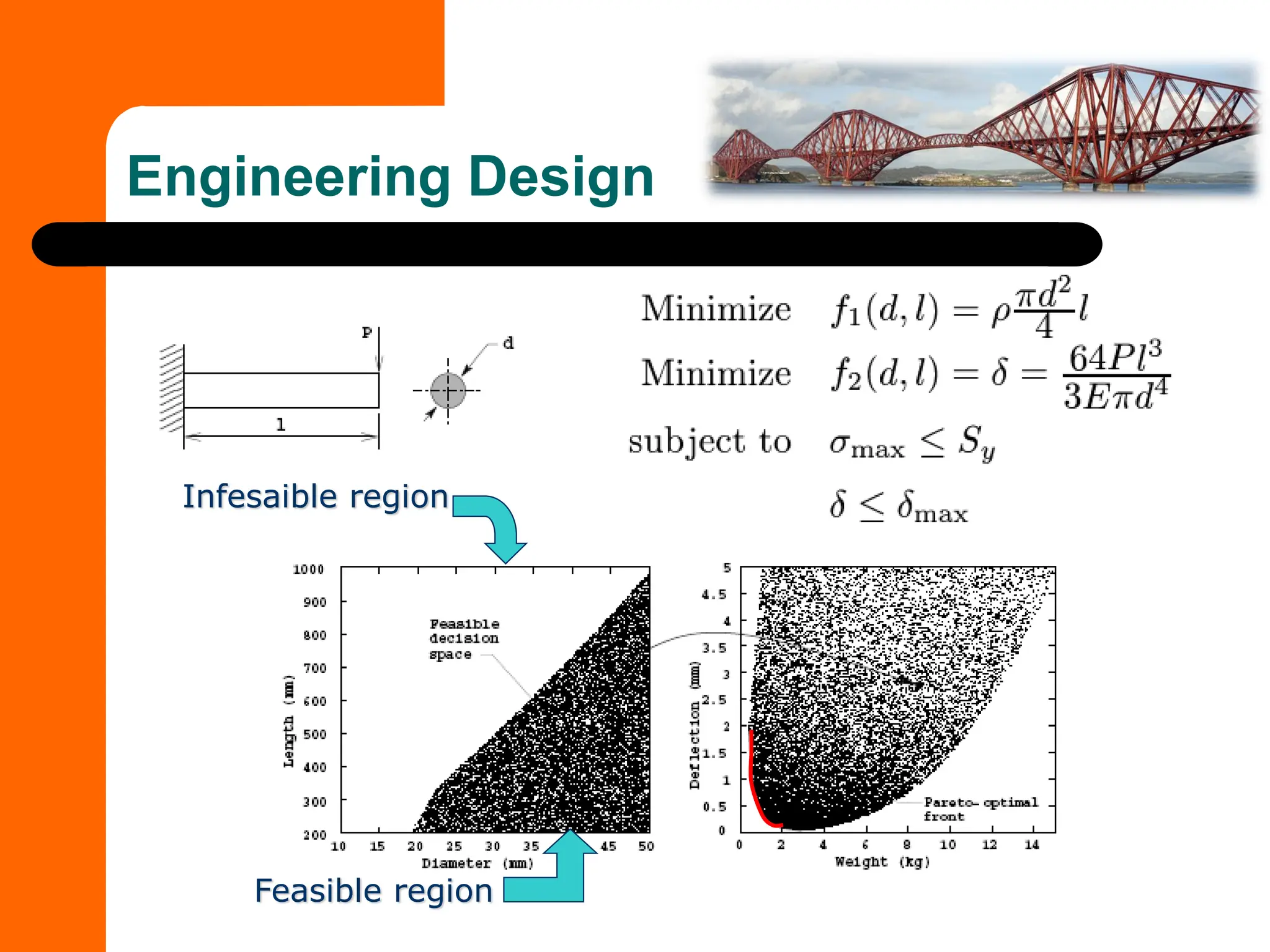

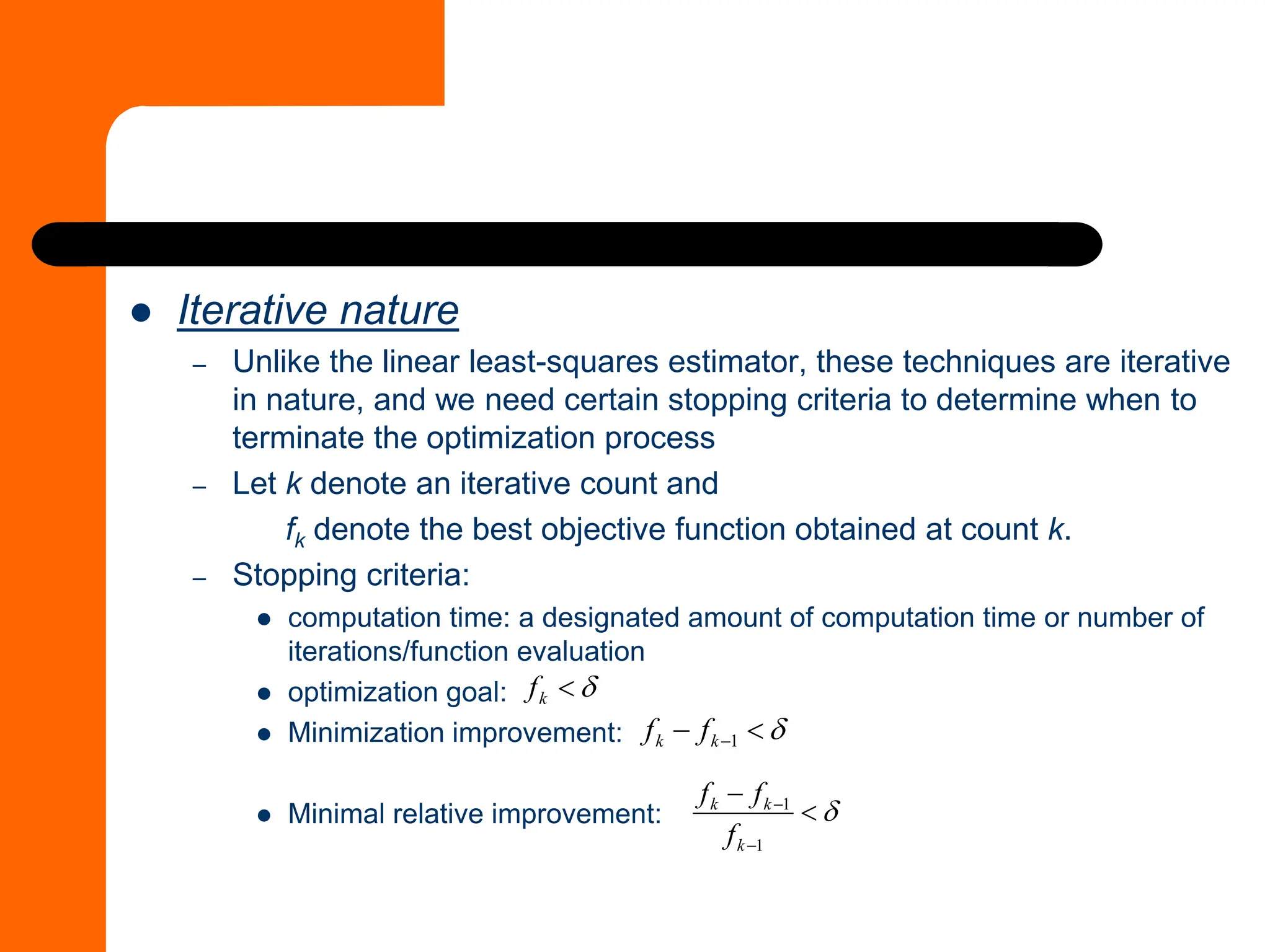

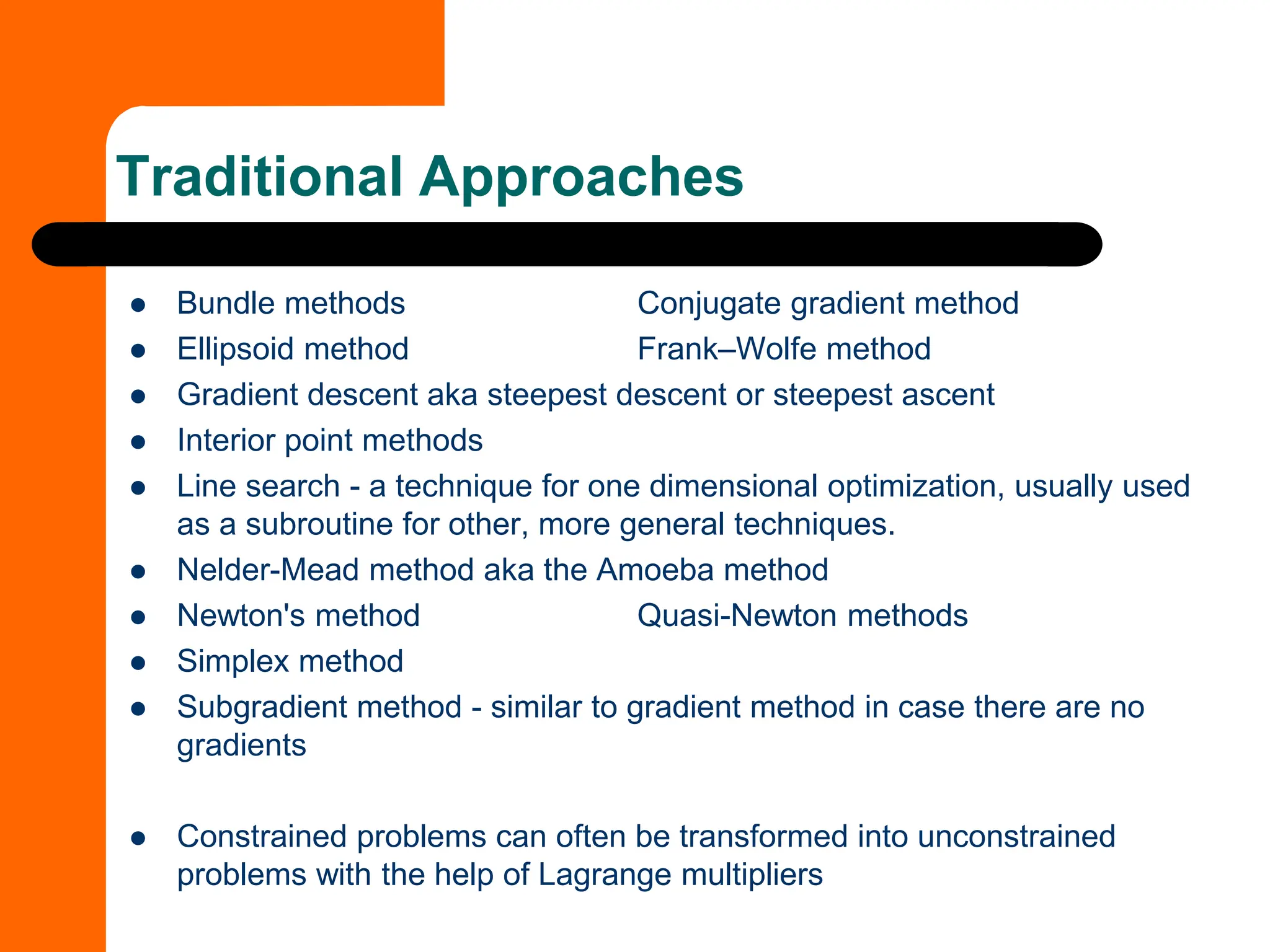

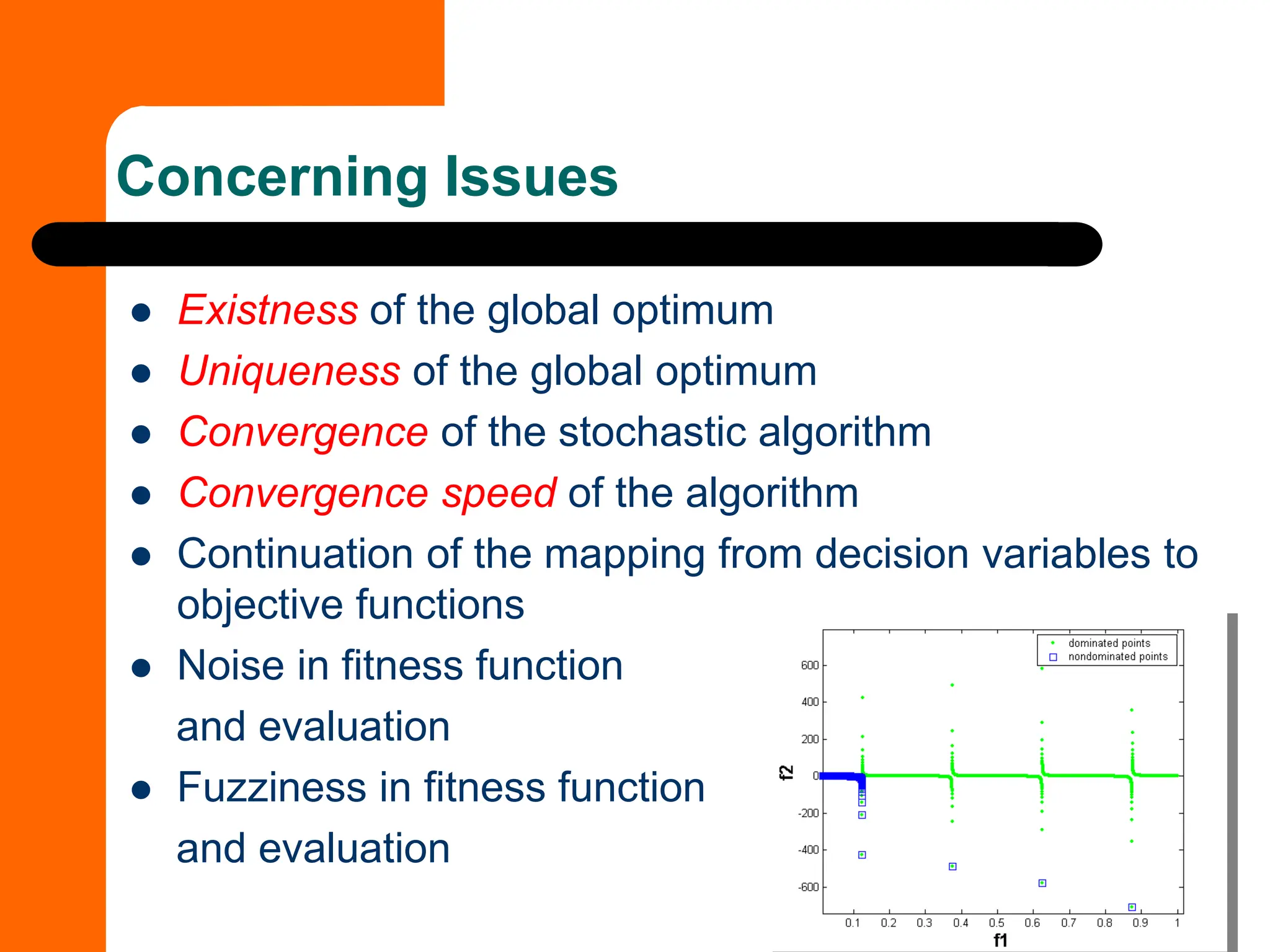

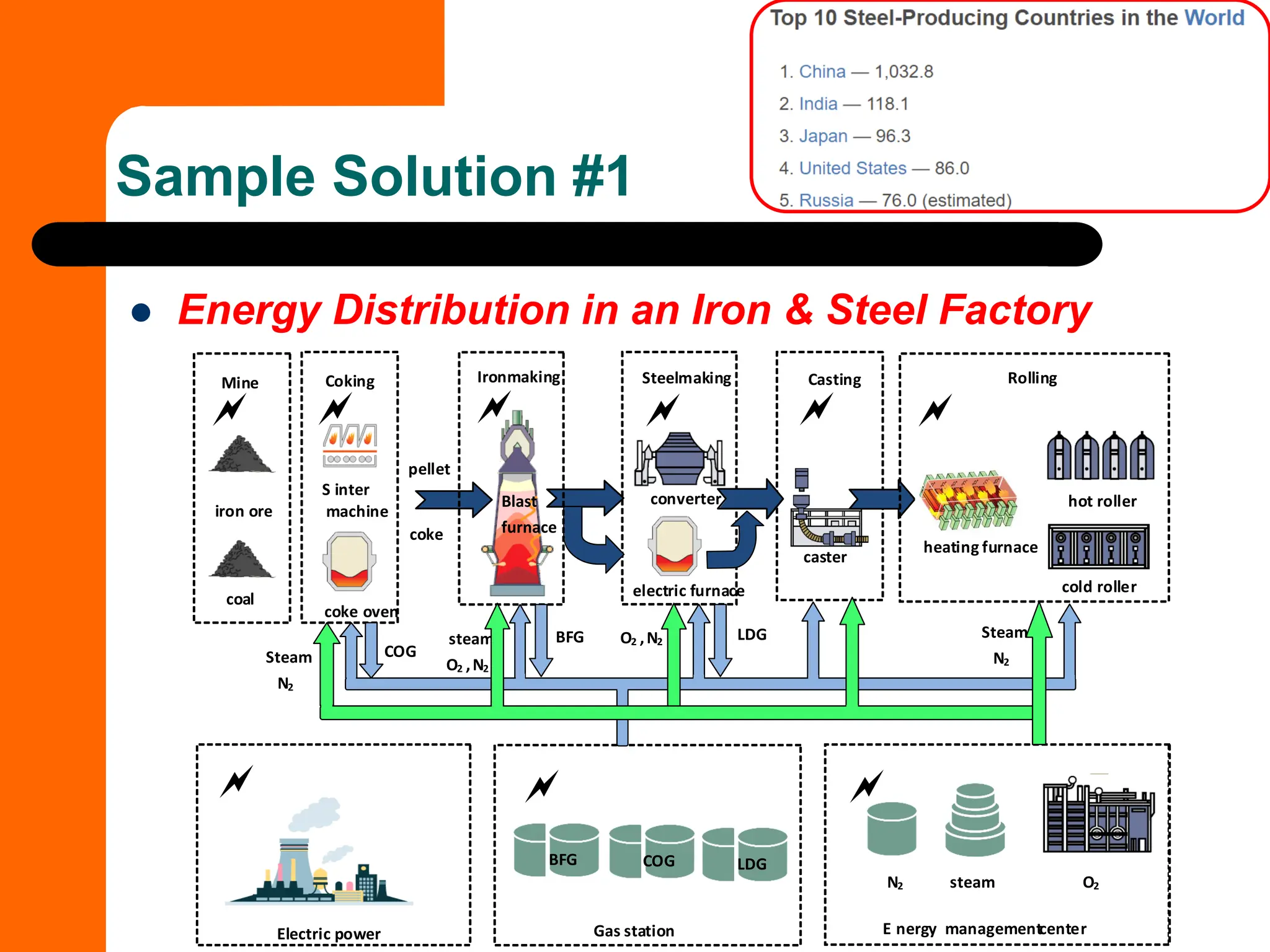

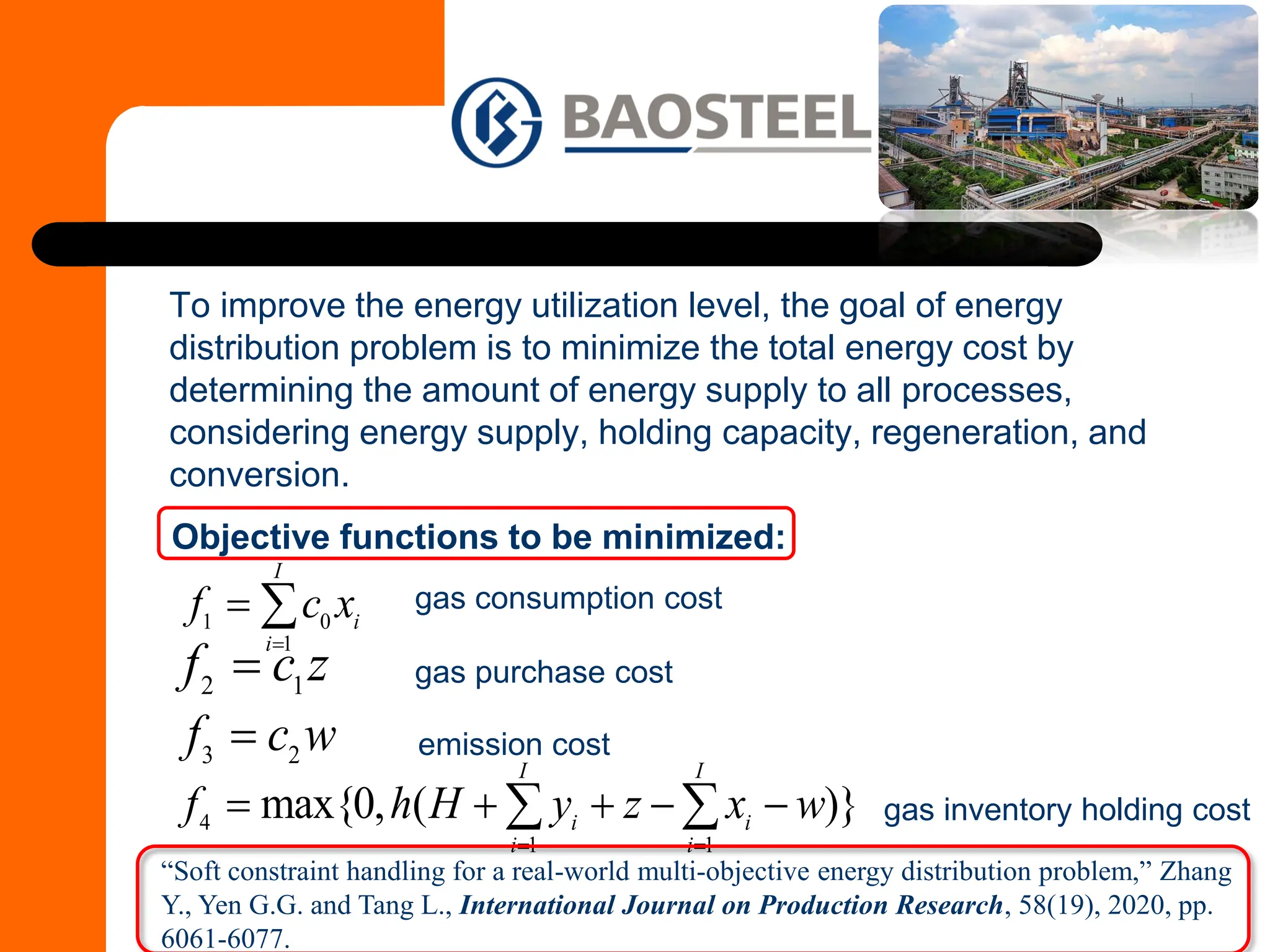

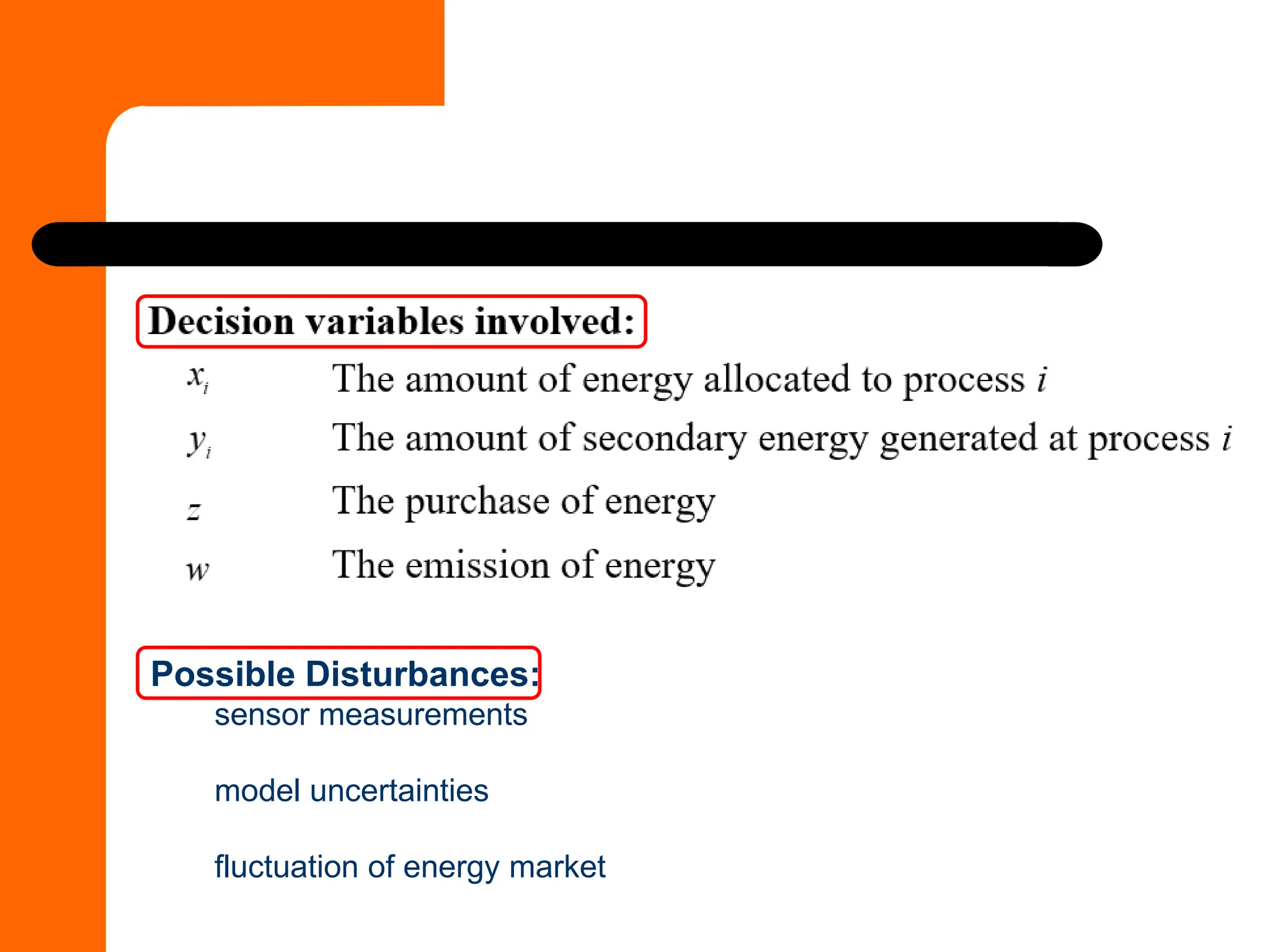

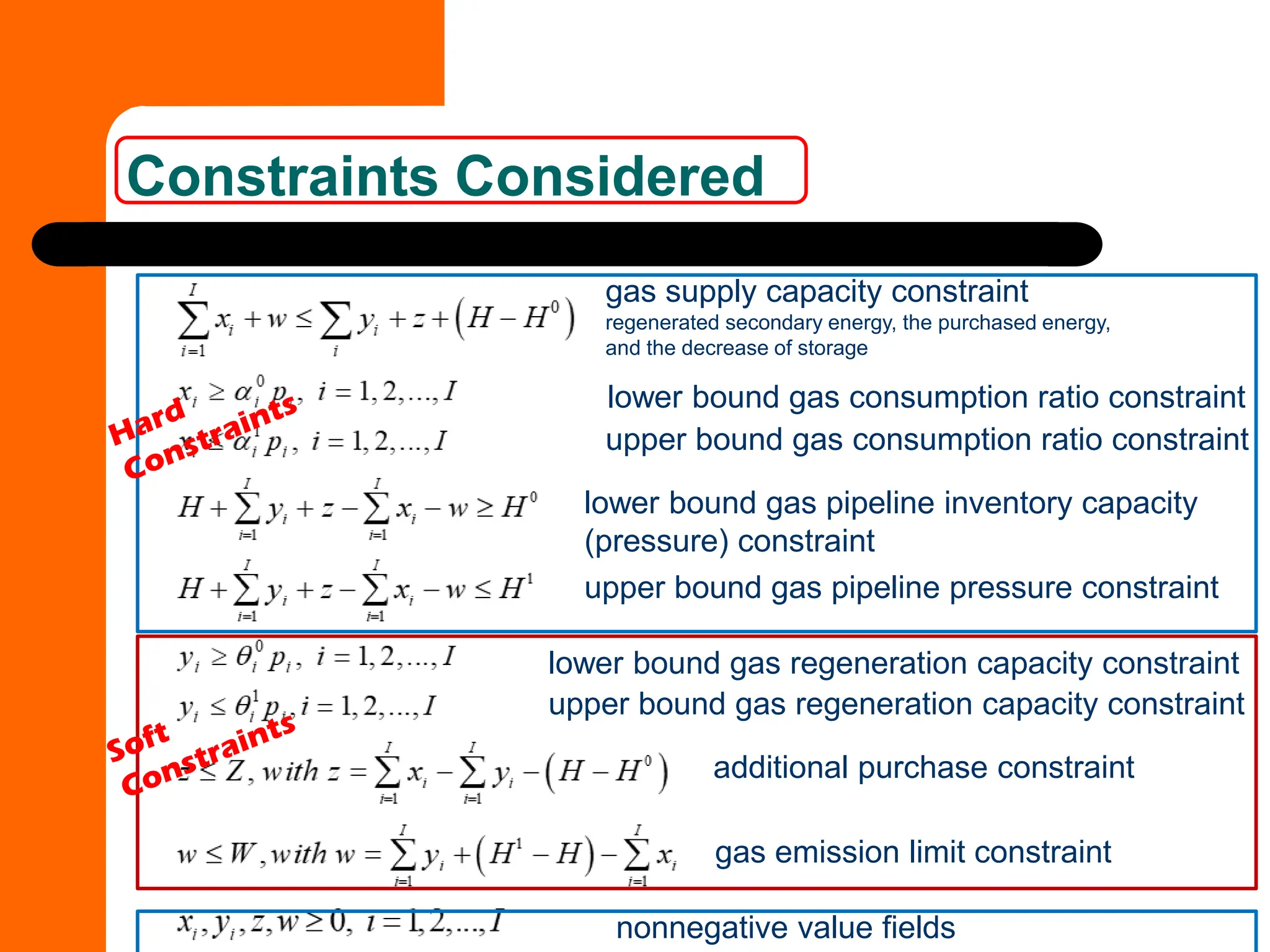

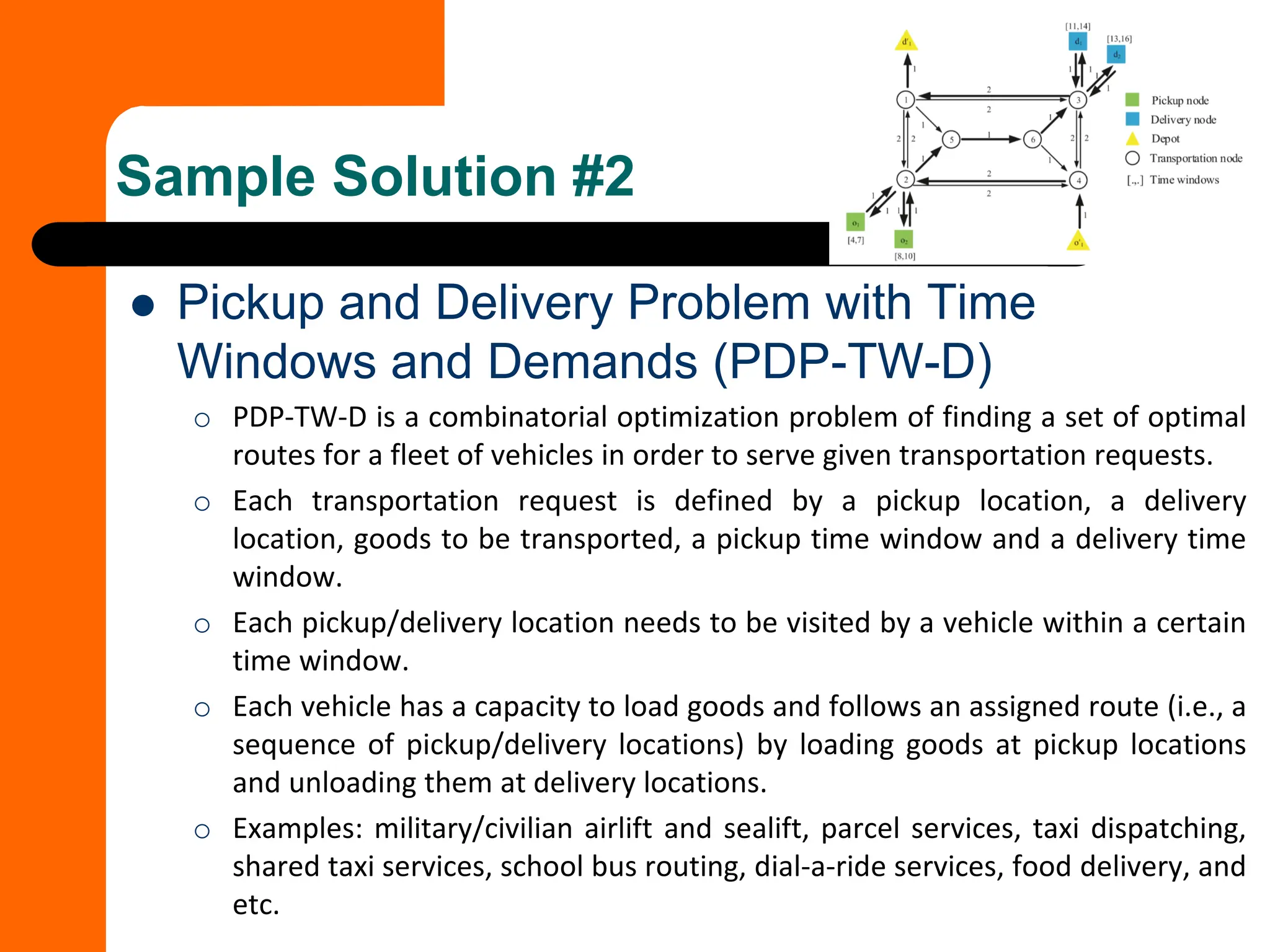

This document discusses how optimization problems in evolutionary computation are formulated and classified, with emphasis on their three ingredients: decision variables, objective functions, and constraints. It covers both derivative-based and derivative-free optimization methods, challenges such as multi-objective optimization, and real-world applications. It also outlines solution strategies, homework assignments, and examples drawn from engineering and management.
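The summary names the ingredients of an optimization problem (decision variables, an objective function, constraints) and contrasts derivative-based with derivative-free methods. The sketch below is an illustration only and is not taken from the slides. It formulates a hypothetical two-variable constrained problem and folds the constraint into the objective with a quadratic penalty, an assumed constraint-handling choice with an assumed weight `PENALTY = 100`. It then solves the problem twice: once with fixed-step gradient descent (derivative-based) and once with compass search, a simple pattern-search method standing in for derivative-free techniques. All function names are hypothetical.

```python
# Hypothetical toy problem (an illustration, not from the source):
#   decision variables: x = (x0, x1)
#   objective:          minimize f(x) = (x0 - 1)^2 + (x1 - 2)^2
#   constraint:         g(x) = x0 + x1 - 2 <= 0
# The exact constrained optimum is x* = (0.5, 1.5).

PENALTY = 100.0  # assumed penalty weight r


def objective(x):
    return (x[0] - 1.0) ** 2 + (x[1] - 2.0) ** 2


def constraint(x):
    # The point is feasible when this value is <= 0.
    return x[0] + x[1] - 2.0


def penalized(x):
    # Quadratic exterior penalty: adds r * violation^2 only when infeasible.
    violation = max(0.0, constraint(x))
    return objective(x) + PENALTY * violation ** 2


def penalized_grad(x):
    # Analytic gradient of penalized(); squaring the violation keeps the
    # penalty term continuously differentiable.
    violation = max(0.0, constraint(x))
    return [
        2.0 * (x[0] - 1.0) + 2.0 * PENALTY * violation,
        2.0 * (x[1] - 2.0) + 2.0 * PENALTY * violation,
    ]


def gradient_descent(x, lr=0.002, steps=5000):
    """Derivative-based: repeatedly step against the gradient."""
    x = list(x)
    for _ in range(steps):
        g = penalized_grad(x)
        x = [xi - lr * gi for xi, gi in zip(x, g)]
    return x


def compass_search(x, step=0.5, tol=1e-8, max_evals=200_000):
    """Derivative-free: poll +/- step along each coordinate axis, move to the
    first improving point, and halve the step when no poll improves."""
    x = list(x)
    fx = penalized(x)
    evals = 1
    while step > tol and evals < max_evals:
        improved = False
        for i in range(len(x)):
            for sign in (1.0, -1.0):
                trial = list(x)
                trial[i] += sign * step
                ft = penalized(trial)
                evals += 1
                if ft < fx:
                    x, fx, improved = trial, ft, True
                    break
            if improved:
                break
        if not improved:
            step /= 2.0
    return x


if __name__ == "__main__":
    start = [0.0, 0.0]
    print("gradient descent:", [round(v, 4) for v in gradient_descent(start)])
    print("compass search:  ", [round(v, 4) for v in compass_search(start)])
```

Both runs should end close to the true constrained optimum (0.5, 1.5). A quadratic penalty only discourages infeasibility, so the penalized minimizer sits slightly outside the feasible region (the violation is g = 1/(1 + 2r), about 0.005 here). A larger `PENALTY` shrinks that gap, but it also makes the problem more ill-conditioned and lowers the largest stable gradient-descent step size.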