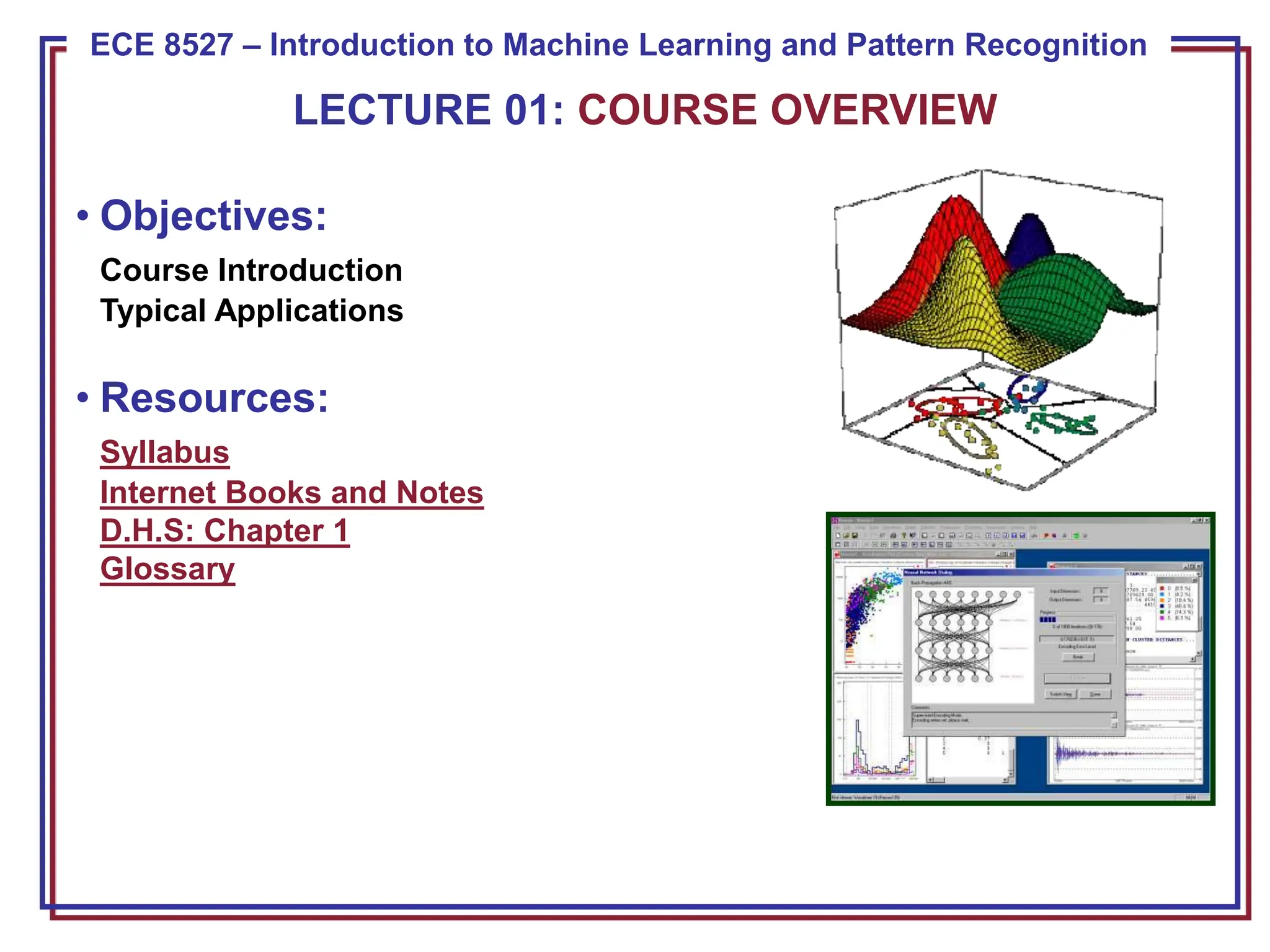

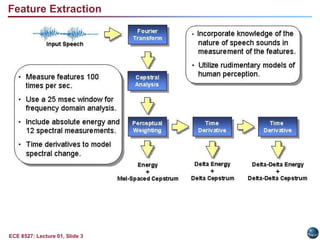

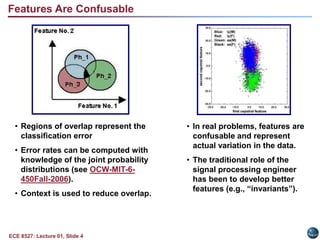

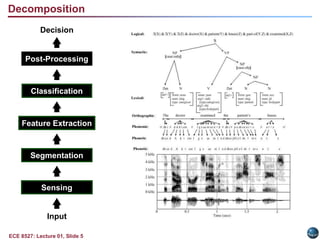

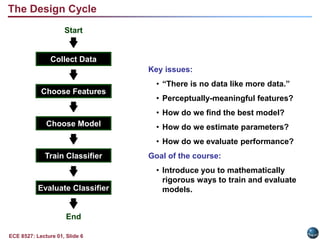

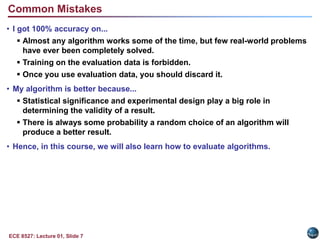

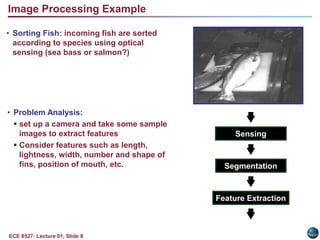

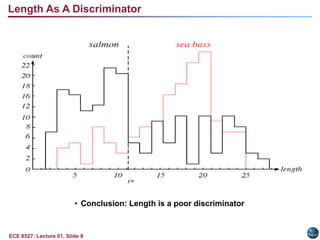

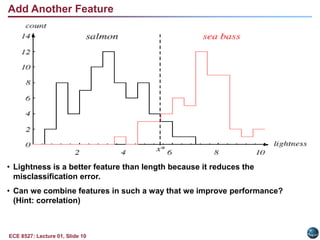

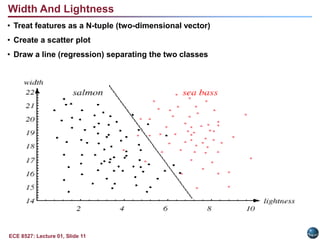

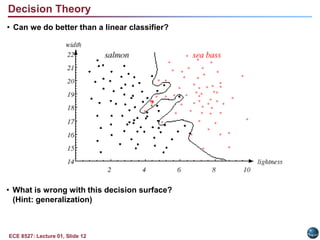

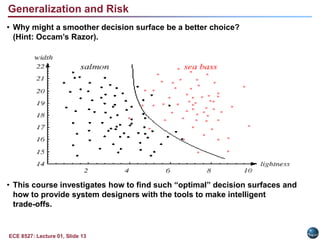

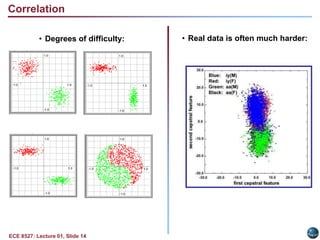

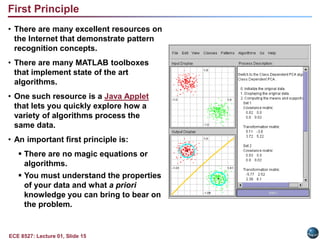

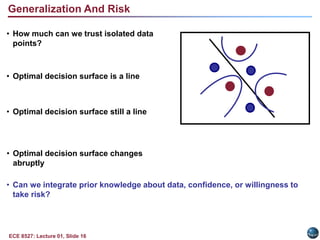

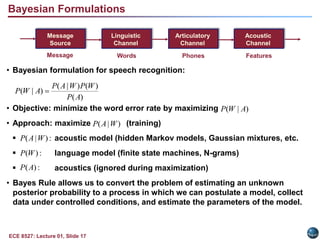

This document summarizes the key topics from the first lecture of an introductory course on machine learning and pattern recognition. It outlines the course objectives and typical applications such as speech and fingerprint recognition, and introduces related terminology, including machine learning, machine understanding, and feature extraction. As a worked example, it uses features such as length, lightness, and width to classify images of fish. It emphasizes applying statistical methods and understanding the properties of the data in order to develop optimal classification models.
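
The fish example can be made concrete with a small sketch. The code below is not taken from the lecture: it assumes two of the mentioned features (lightness and width) have already been extracted from each image, and it uses a nearest-class-mean rule as one simple statistical classifier. The class labels `species_a` and `species_b`, the function names, and all numeric values are hypothetical and chosen only for illustration.

```python
from statistics import mean

# Hypothetical training data: one (lightness, width) pair per fish.
# These numbers are invented for illustration, not measured values.
TRAINING = {
    "species_a": [(2.0, 10.0), (3.0, 11.0), (2.5, 9.5)],
    "species_b": [(6.0, 14.0), (7.0, 15.0), (6.5, 13.5)],
}


def class_means(training):
    """Return the mean feature vector of each class."""
    return {
        label: tuple(mean(column) for column in zip(*samples))
        for label, samples in training.items()
    }


def classify(features, means):
    """Assign the label whose class mean is closest (squared Euclidean distance)."""
    def sq_dist(a, b):
        return sum((x - y) ** 2 for x, y in zip(a, b))

    return min(means, key=lambda label: sq_dist(features, means[label]))


MEANS = class_means(TRAINING)

if __name__ == "__main__":
    # Classify two unseen (lightness, width) measurements.
    print(classify((2.8, 10.2), MEANS))
    print(classify((6.2, 14.4), MEANS))
```

A nearest-mean rule is only one possible choice. It treats both features as equally scaled and ignores how much each class varies, which is why understanding the statistical properties of the data matters before settling on a model.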