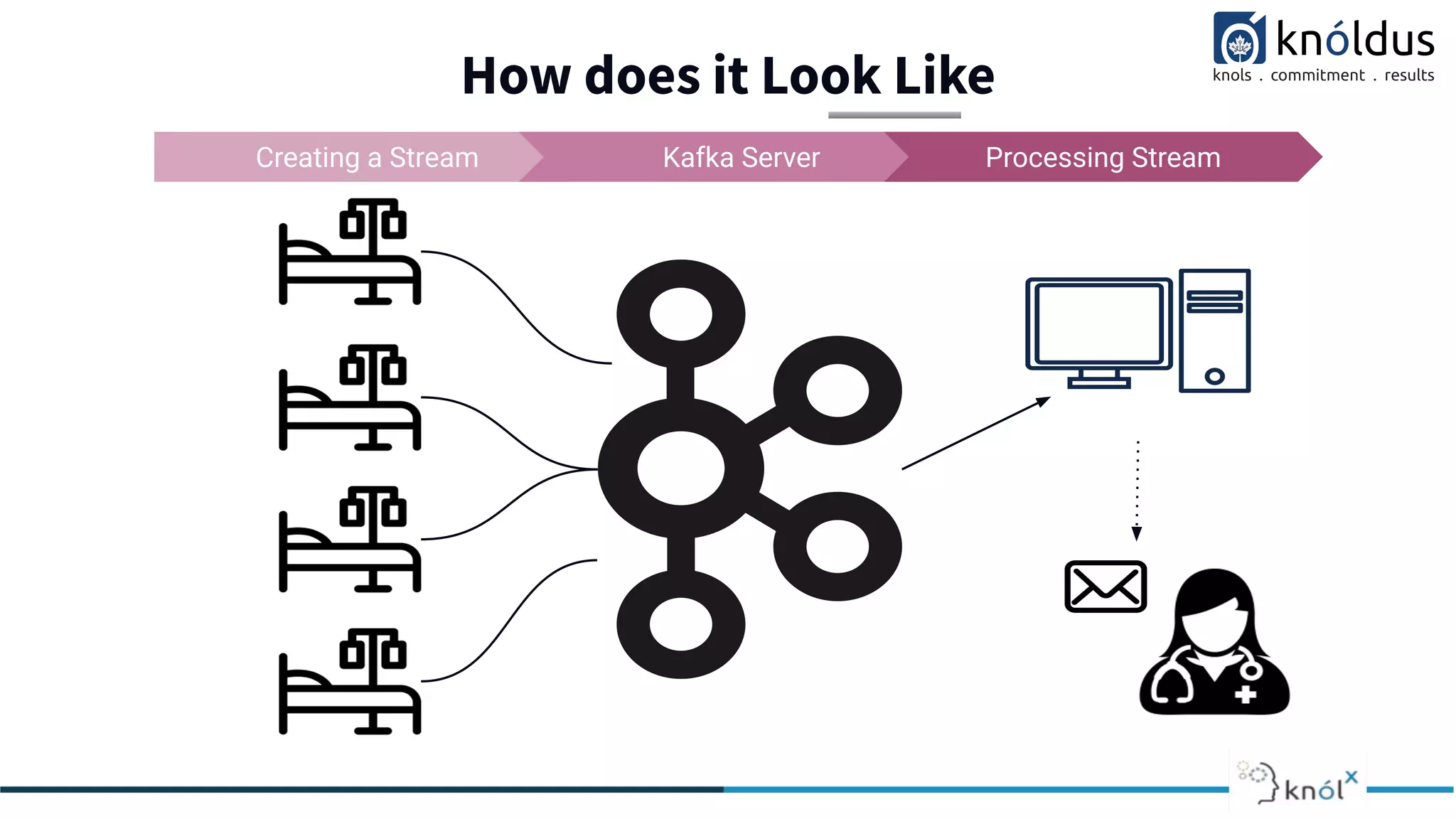

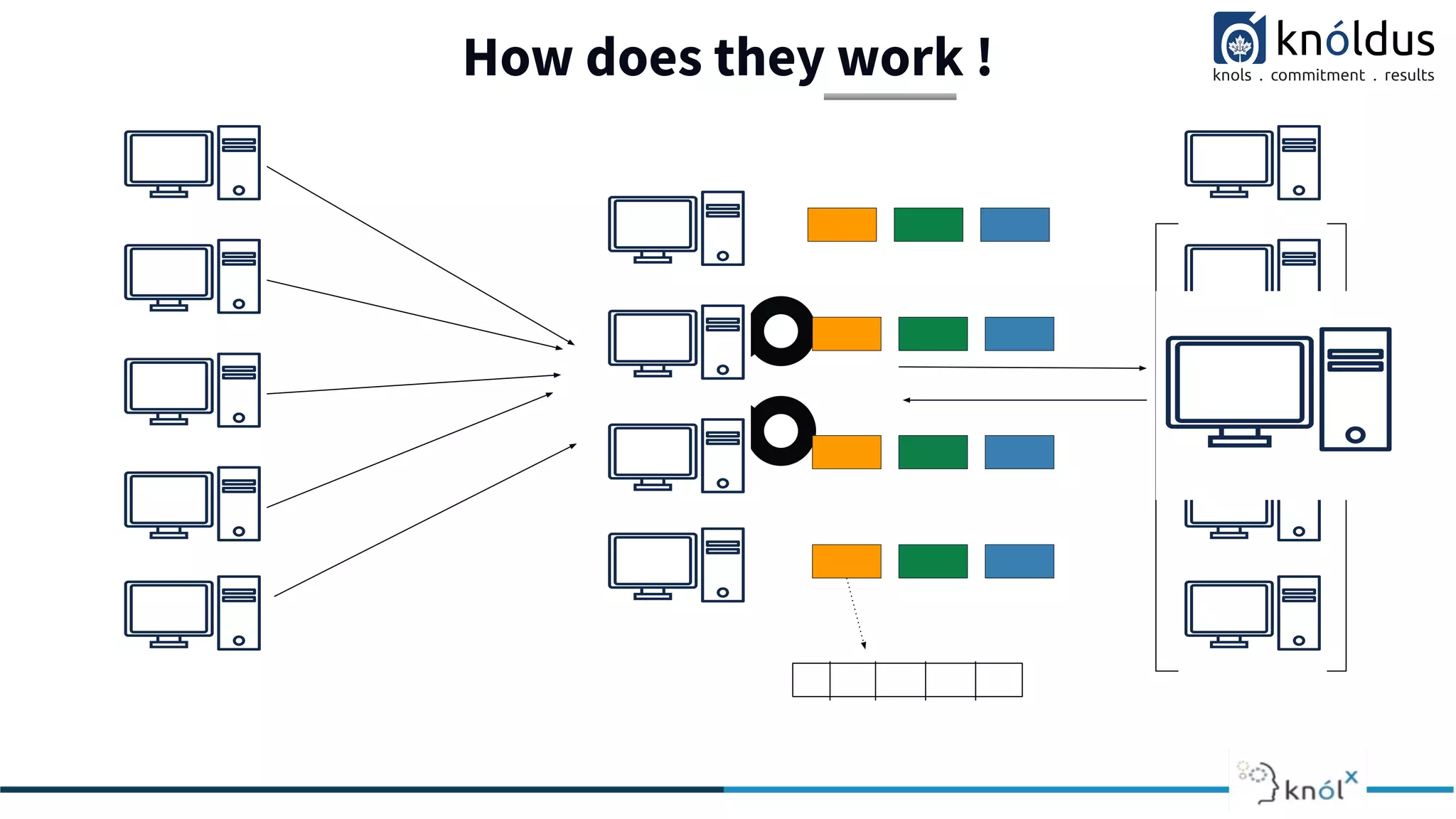

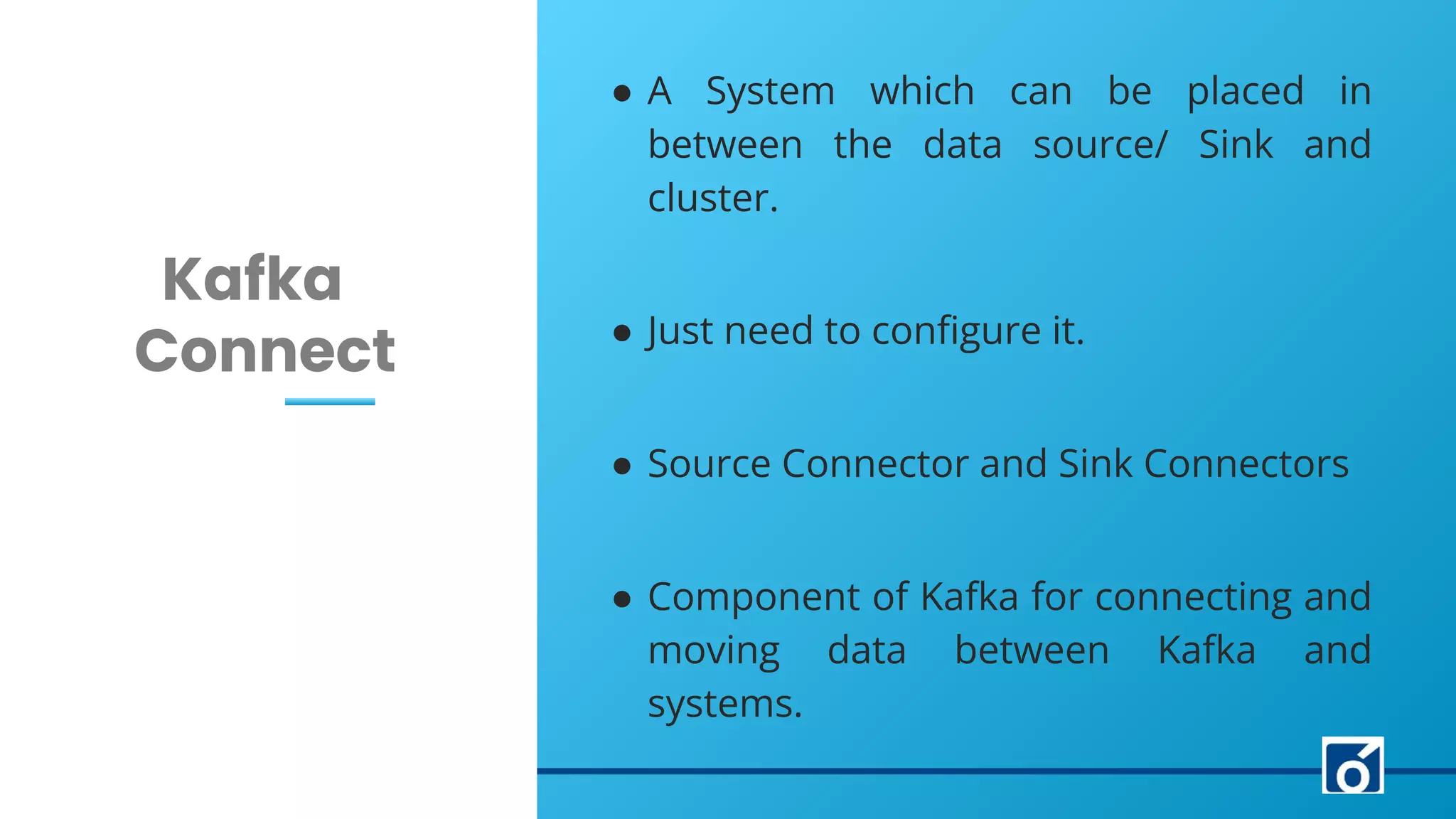

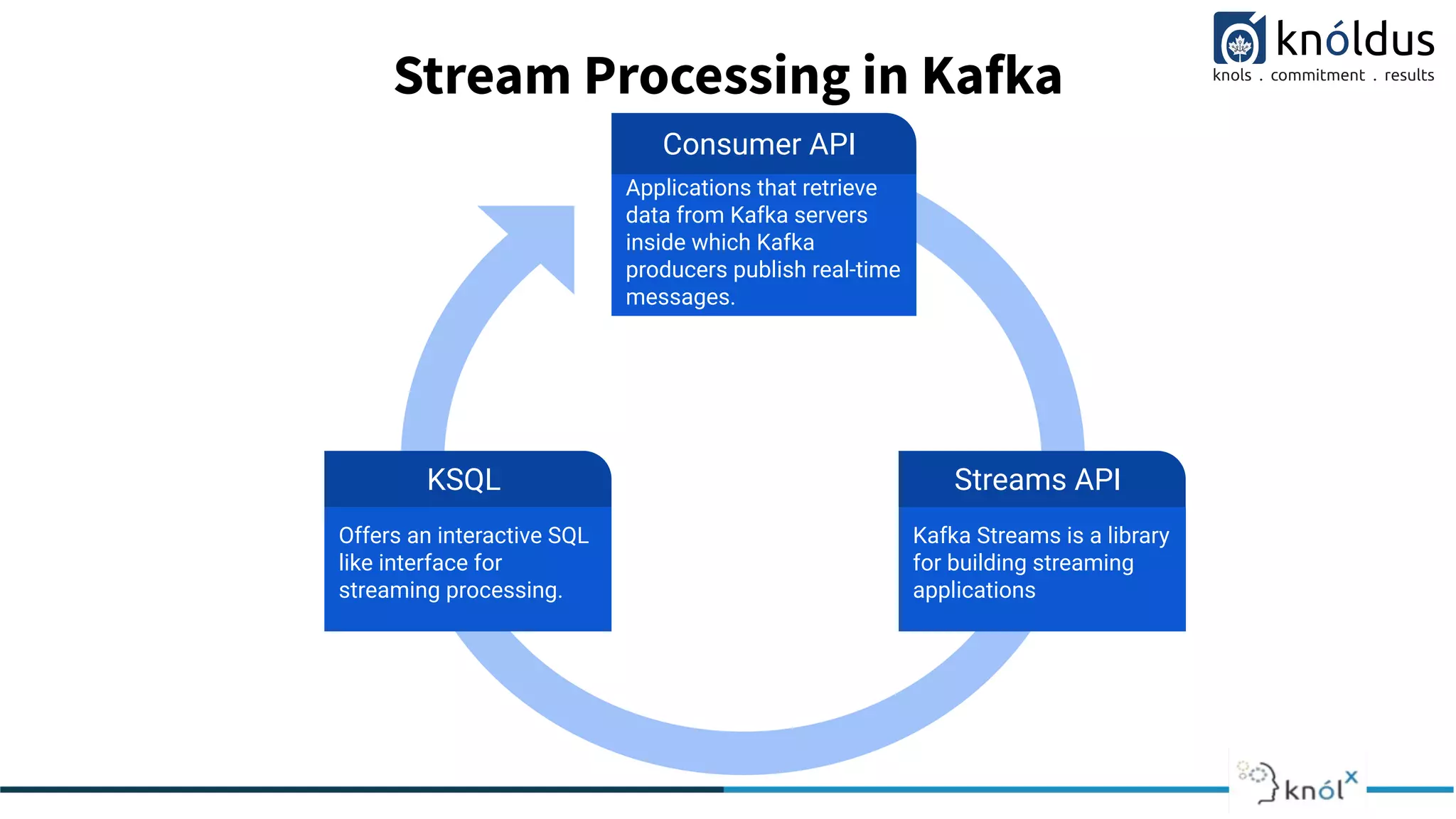

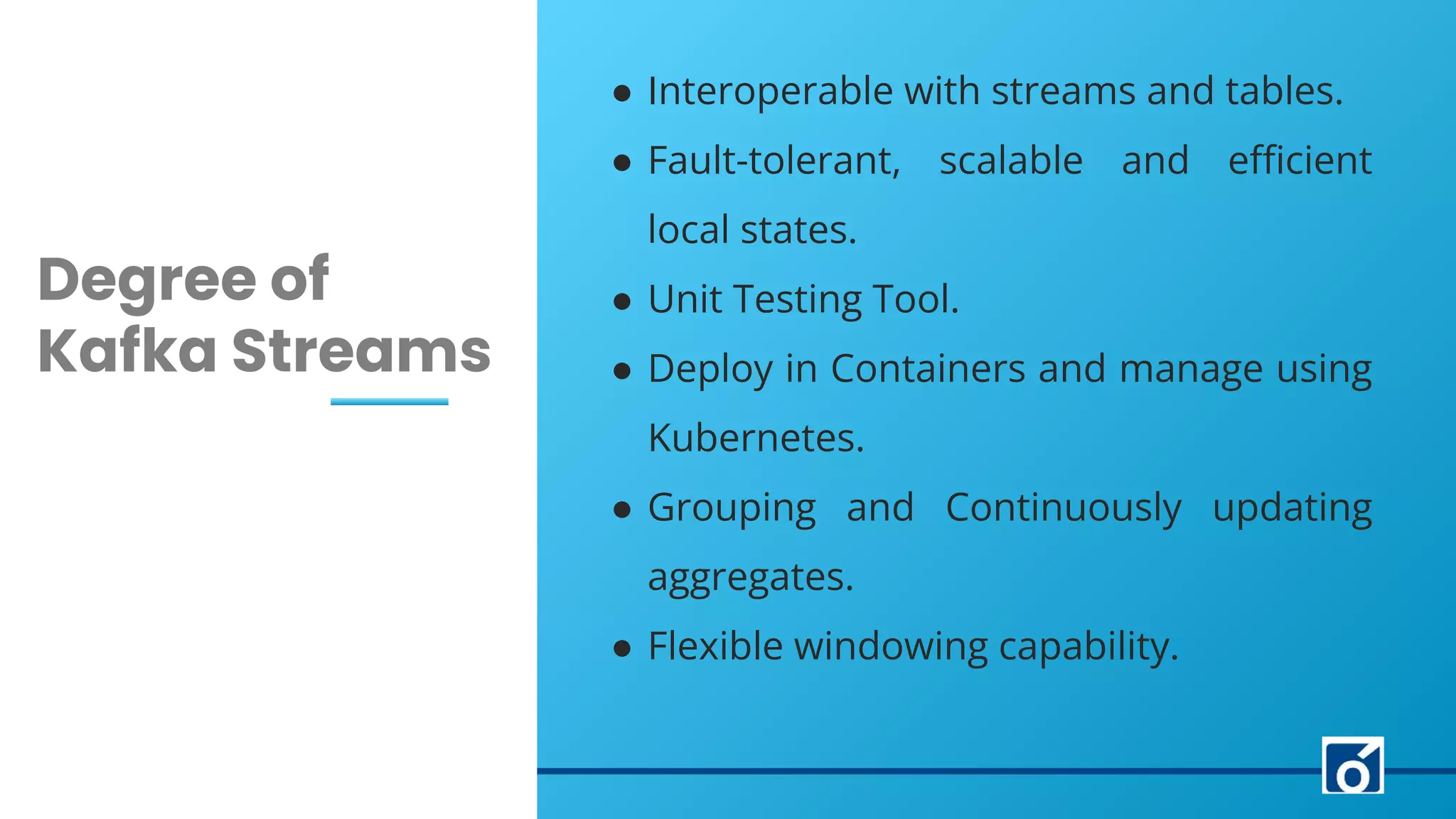

This document is an introduction to Kafka Streams from a session presented by Anshika Agrawal. It notes essential etiquette for attendees and covers key concepts of Apache Kafka, including its components and Kafka Connect, before explaining how Kafka Streams enables real-time data processing. A demo showcases an implementation, and the session emphasizes Kafka's scalability and efficiency.