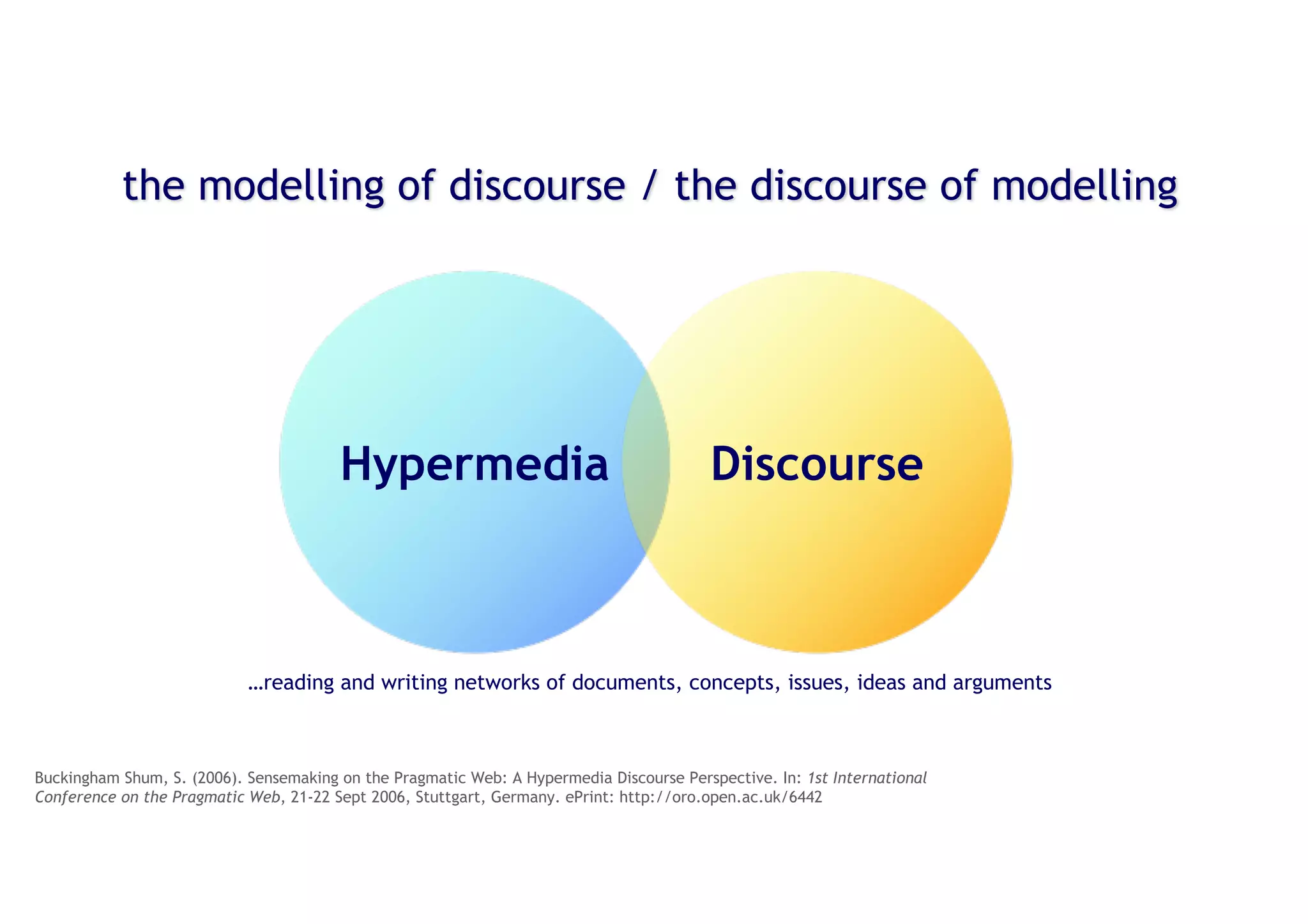

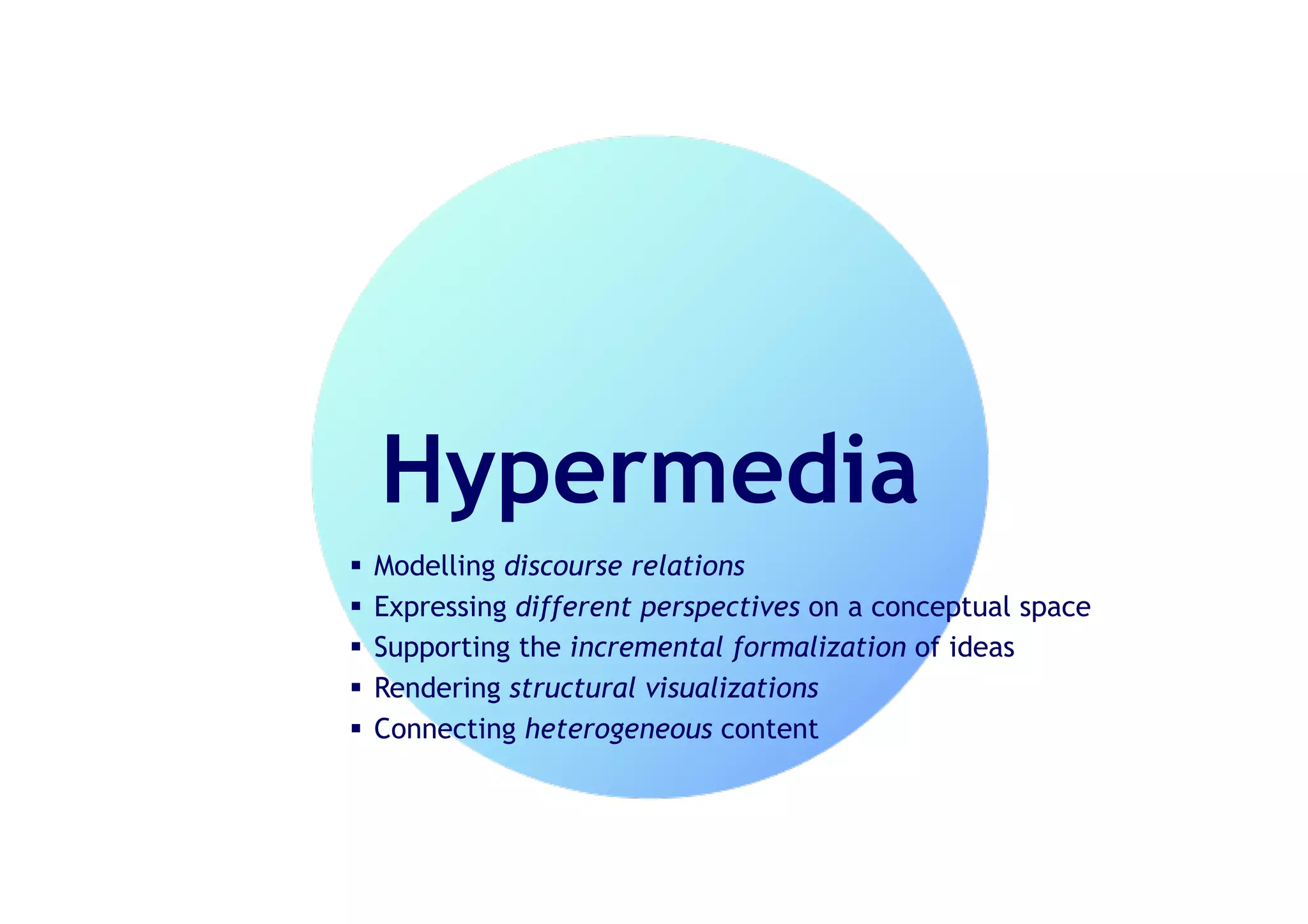

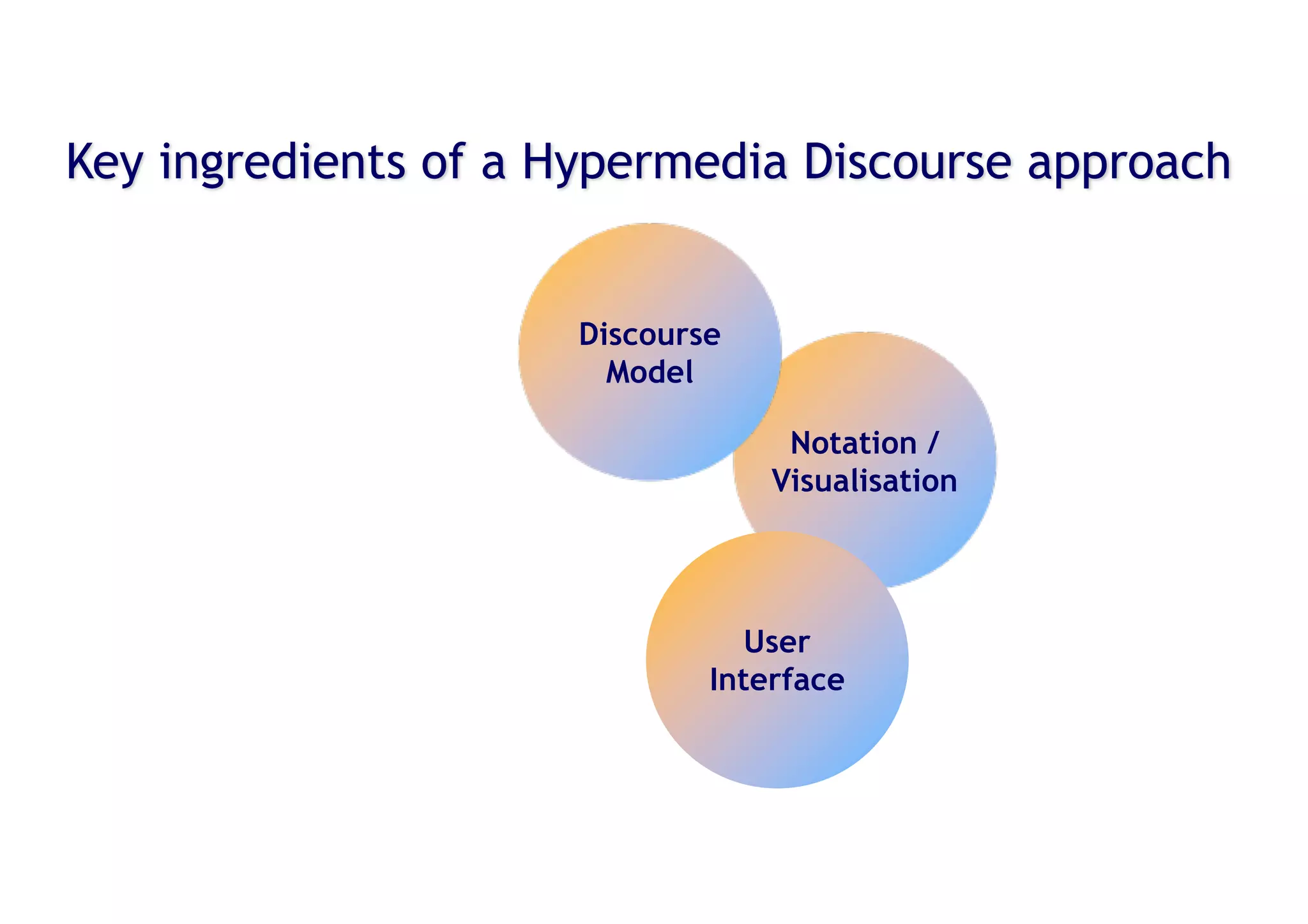

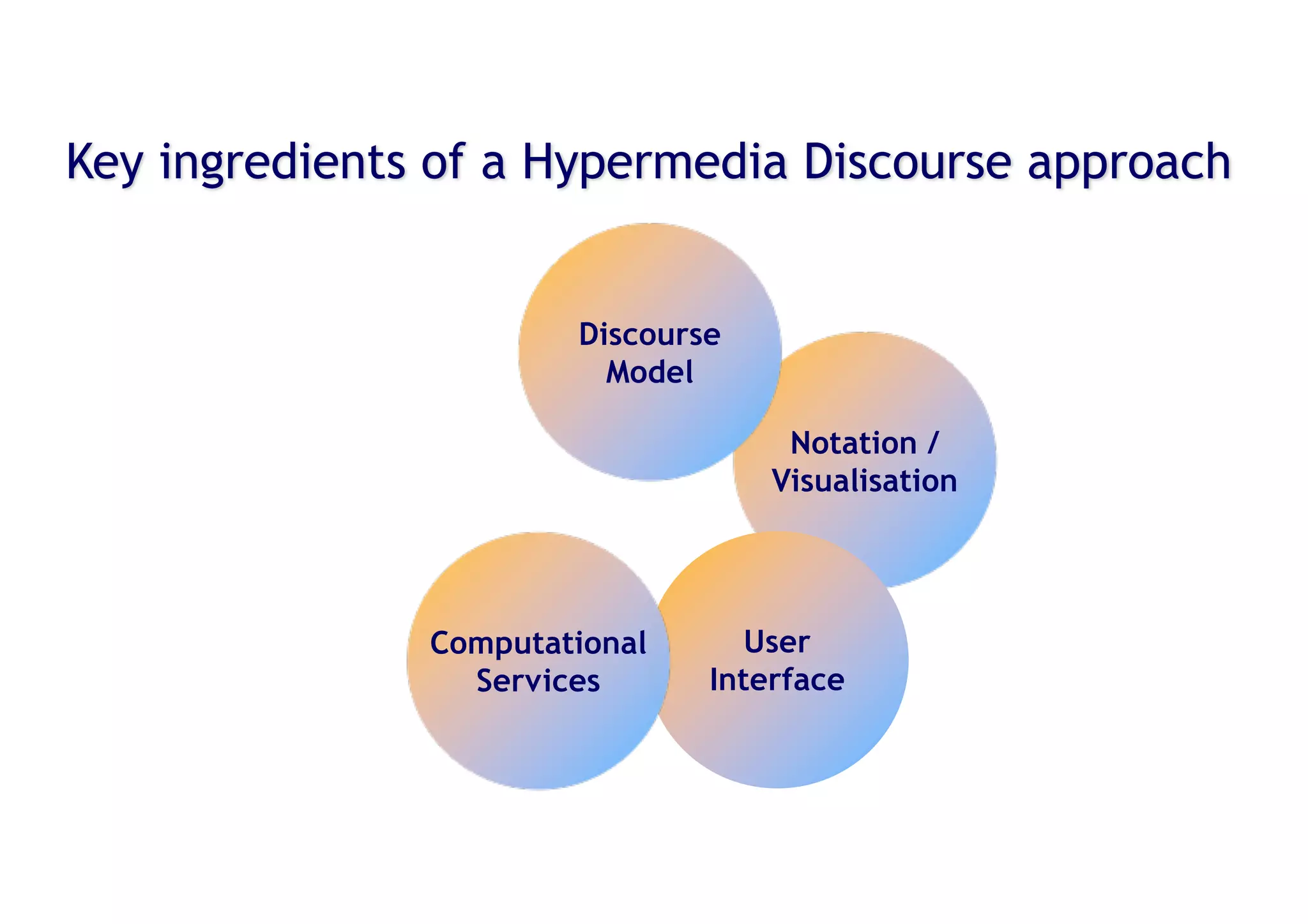

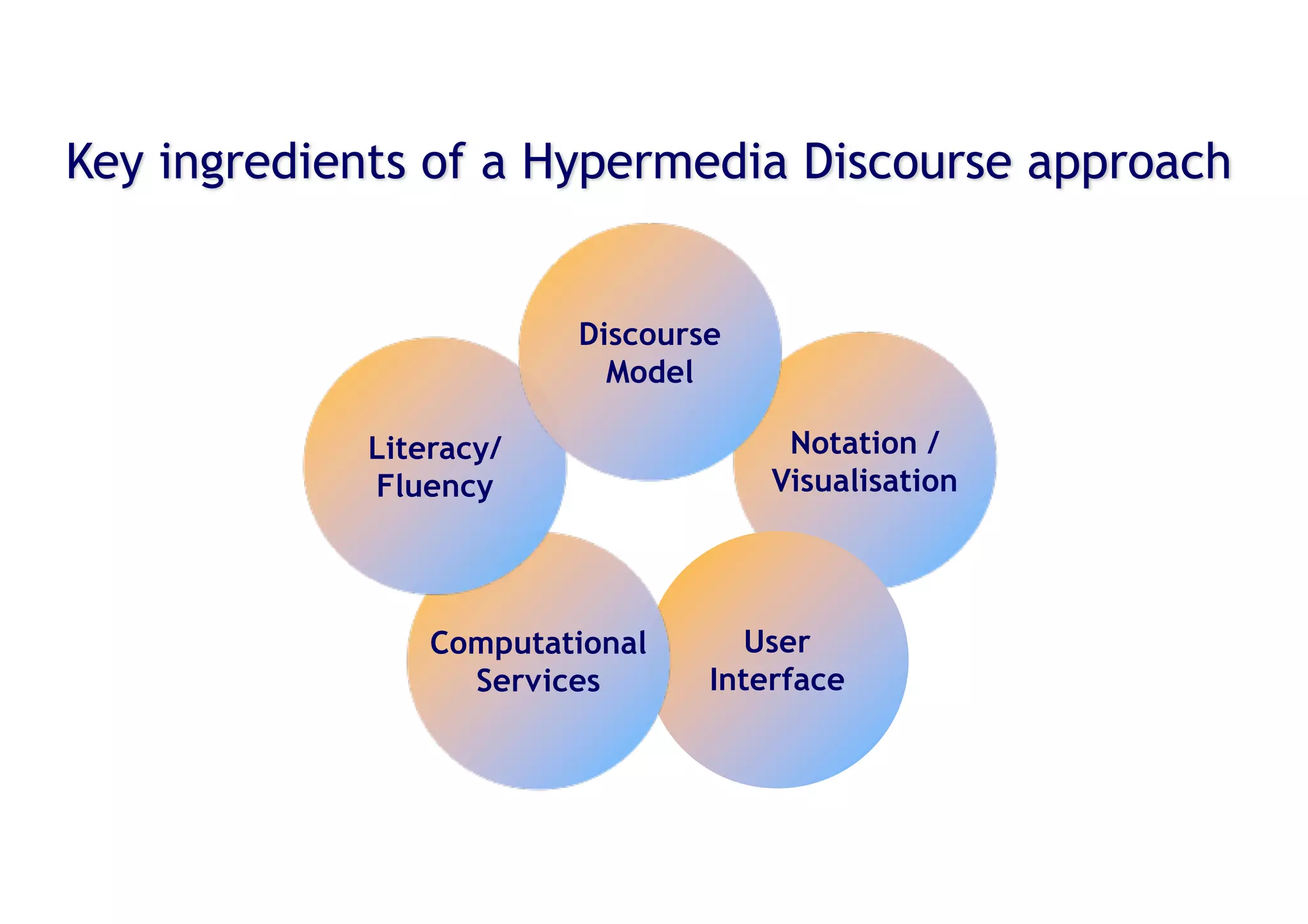

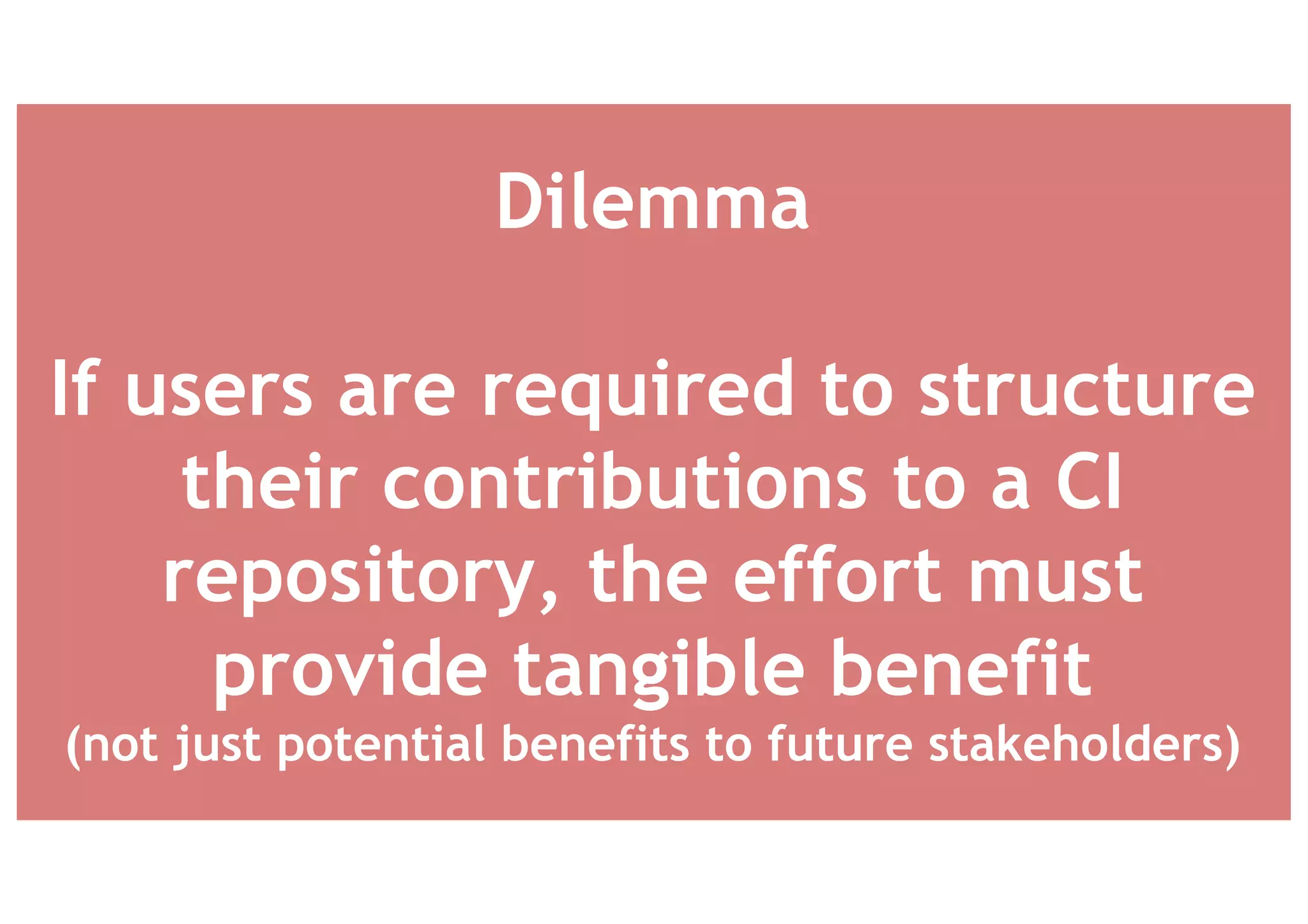

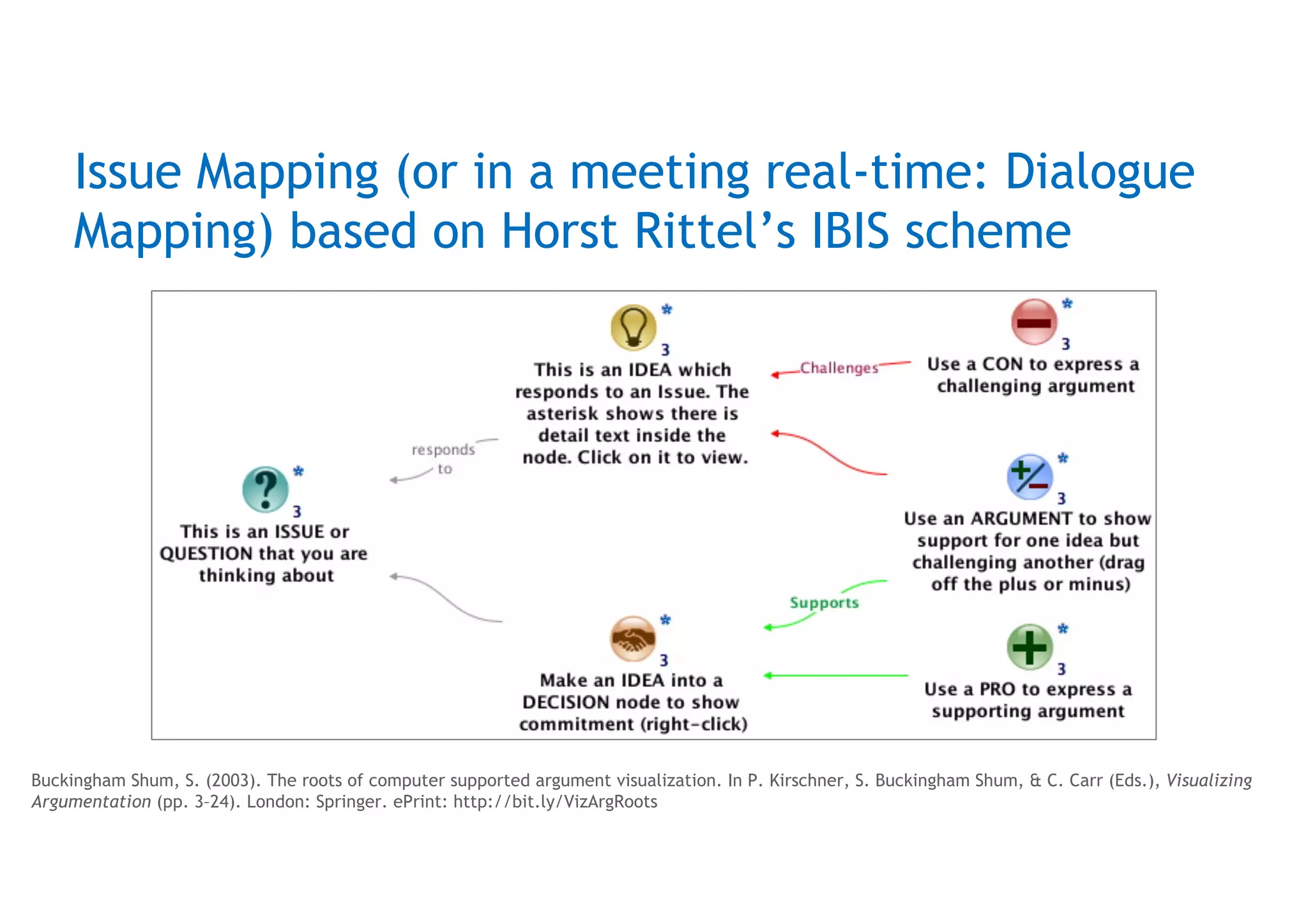

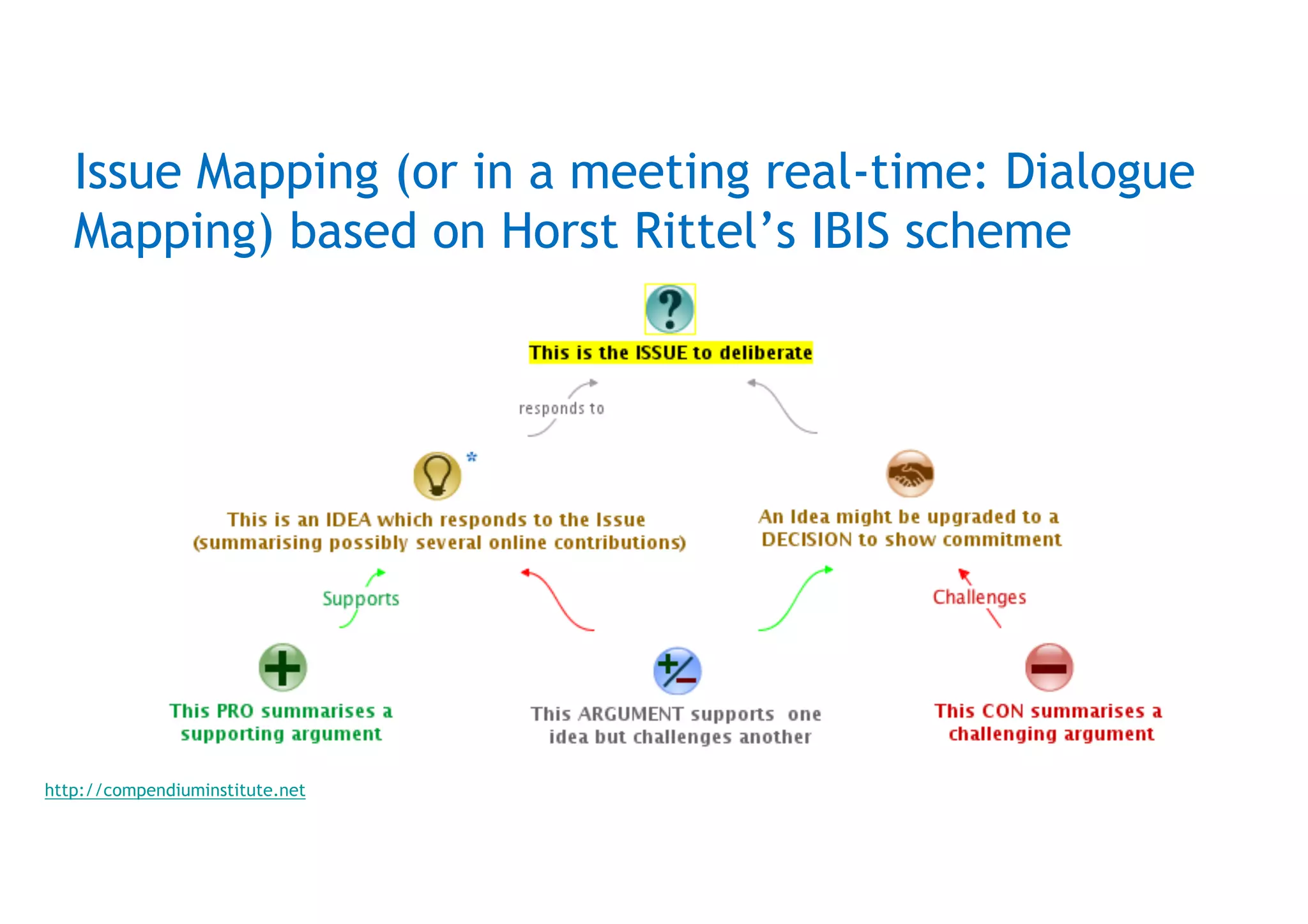

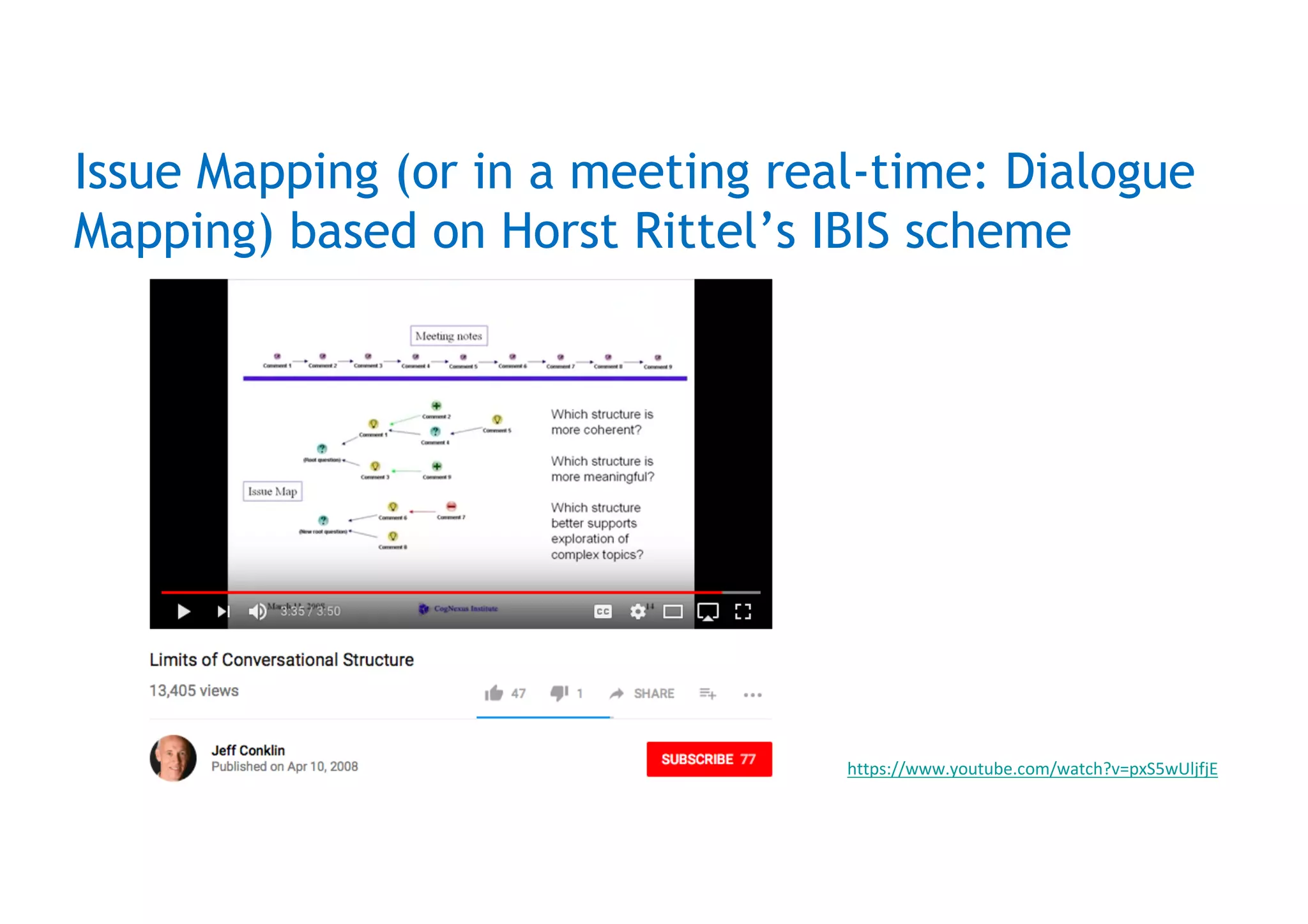

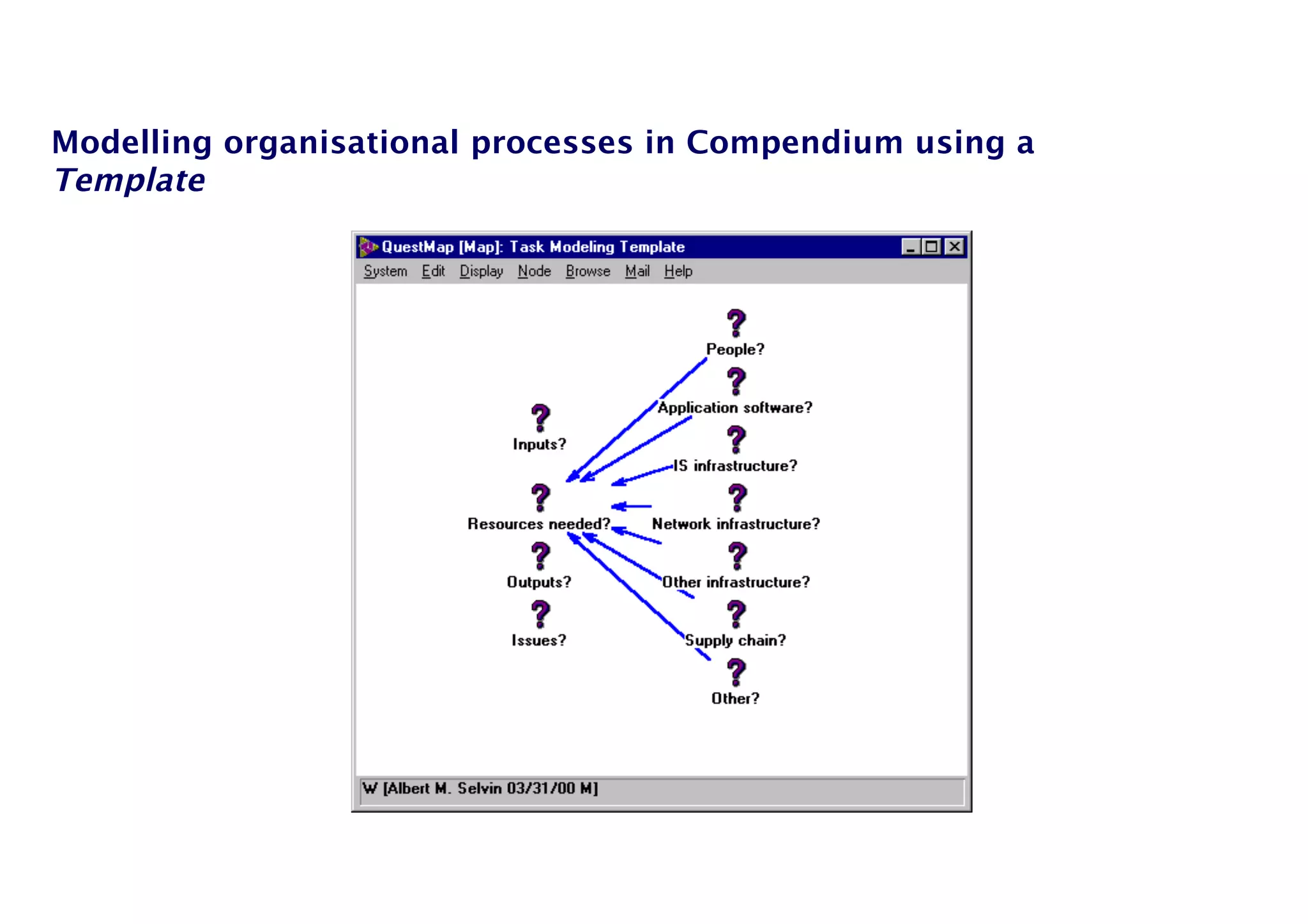

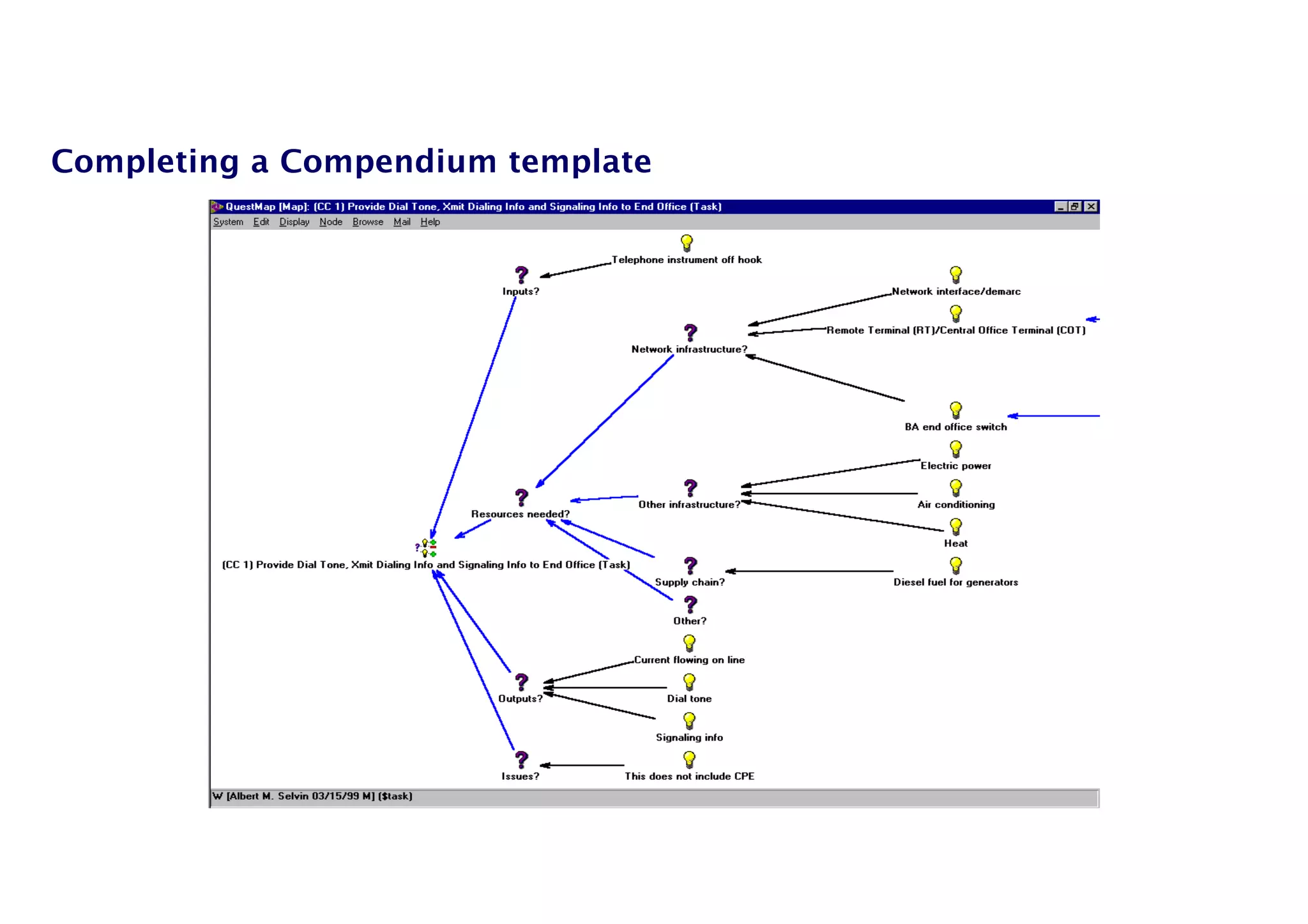

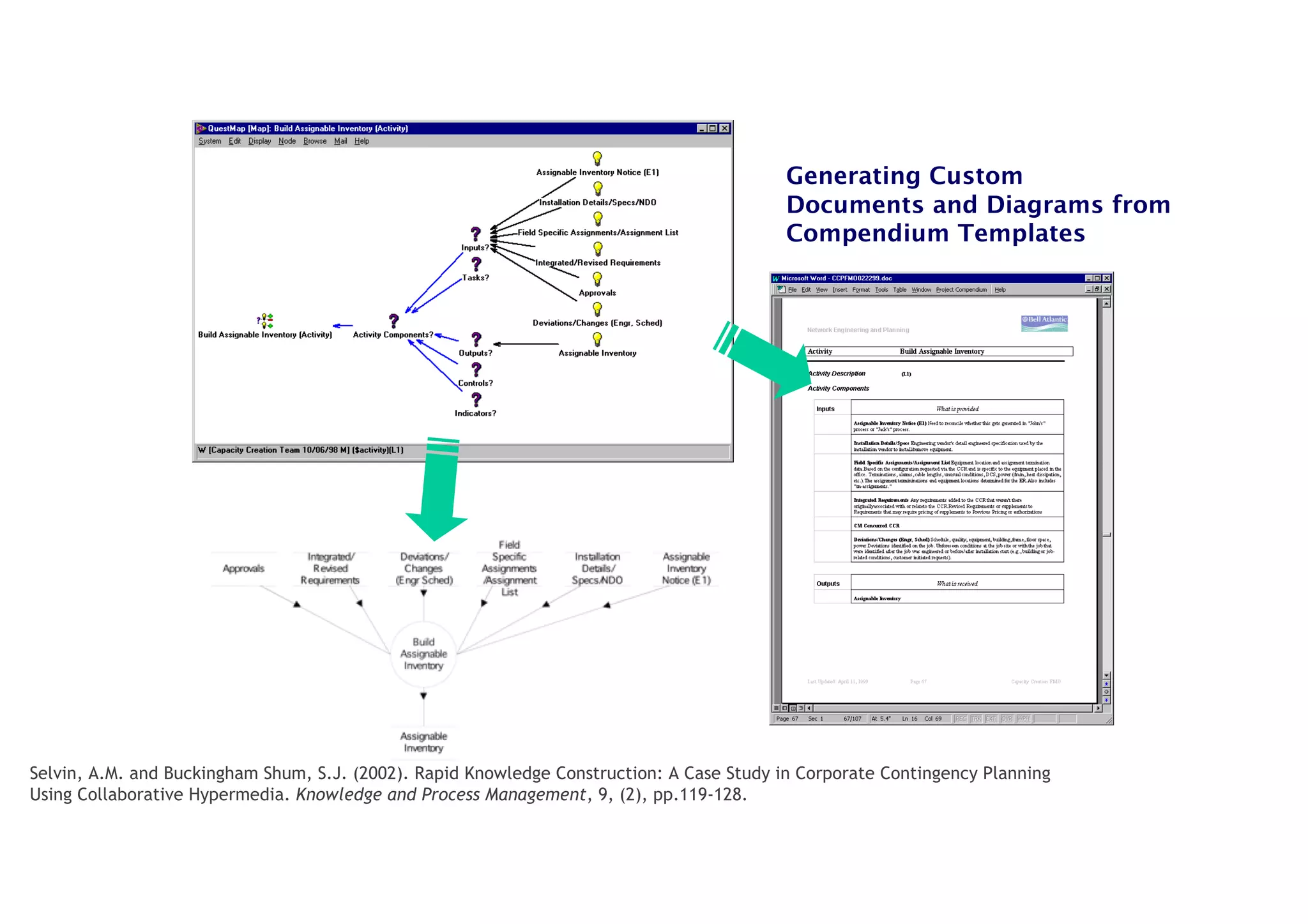

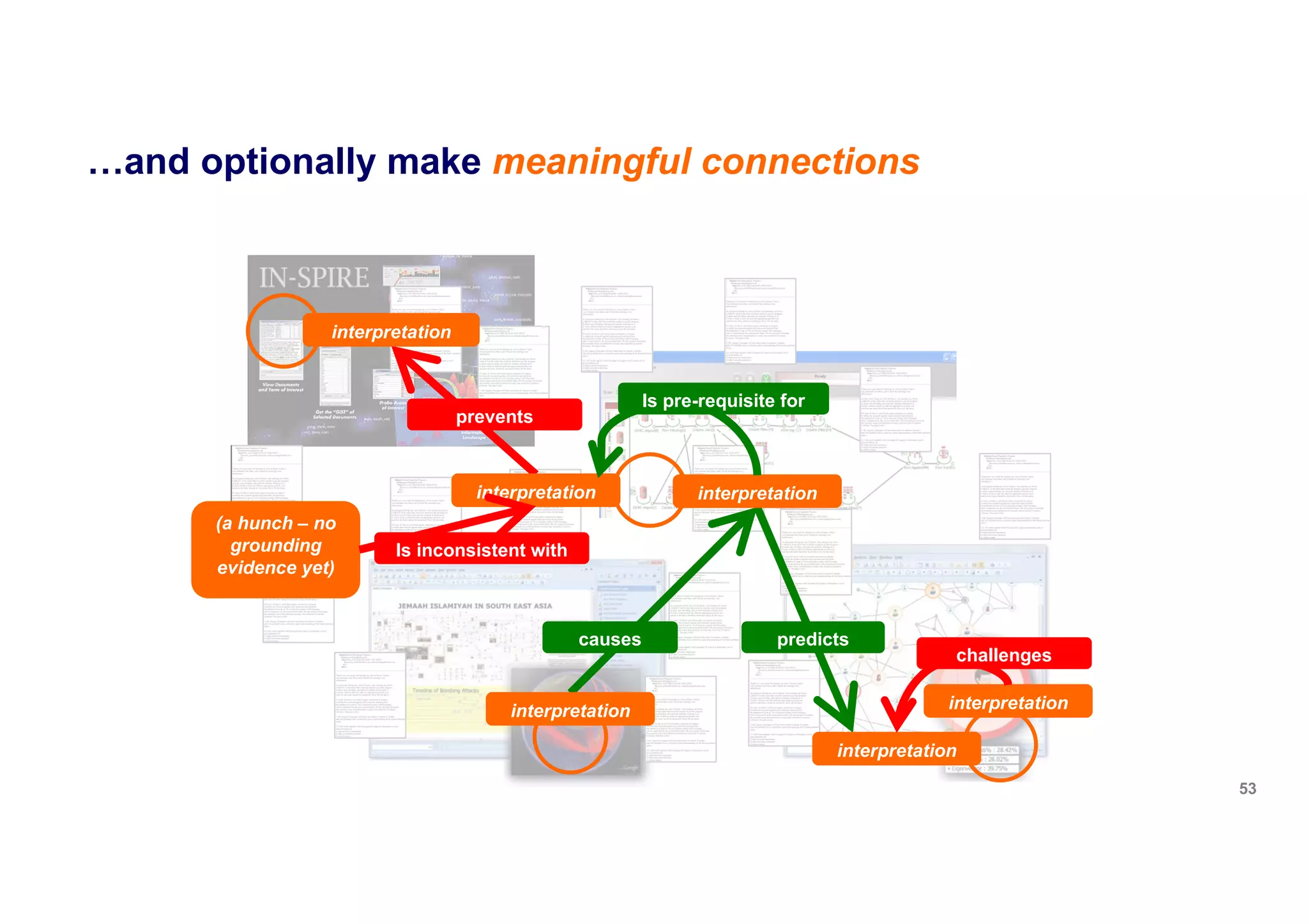

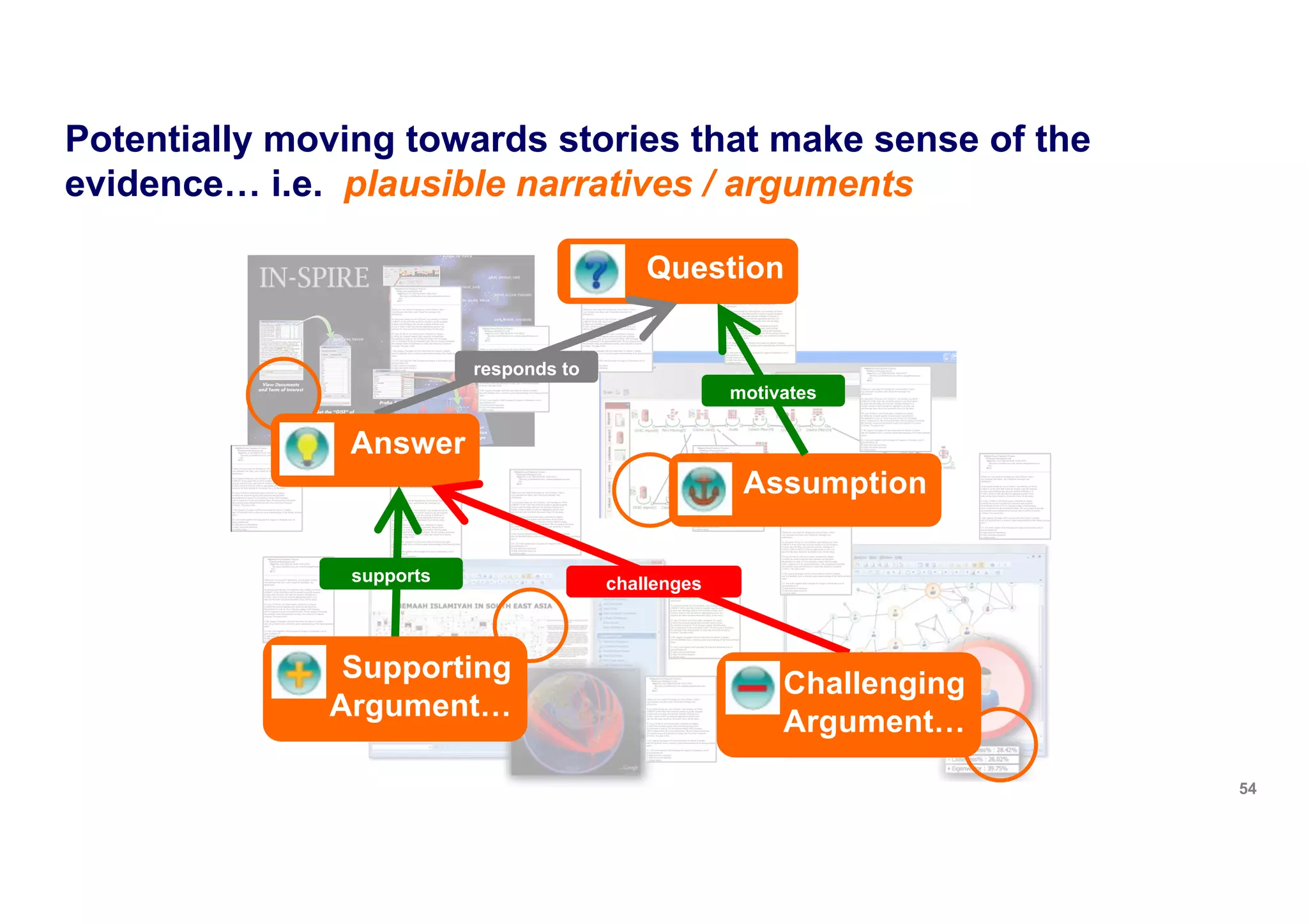

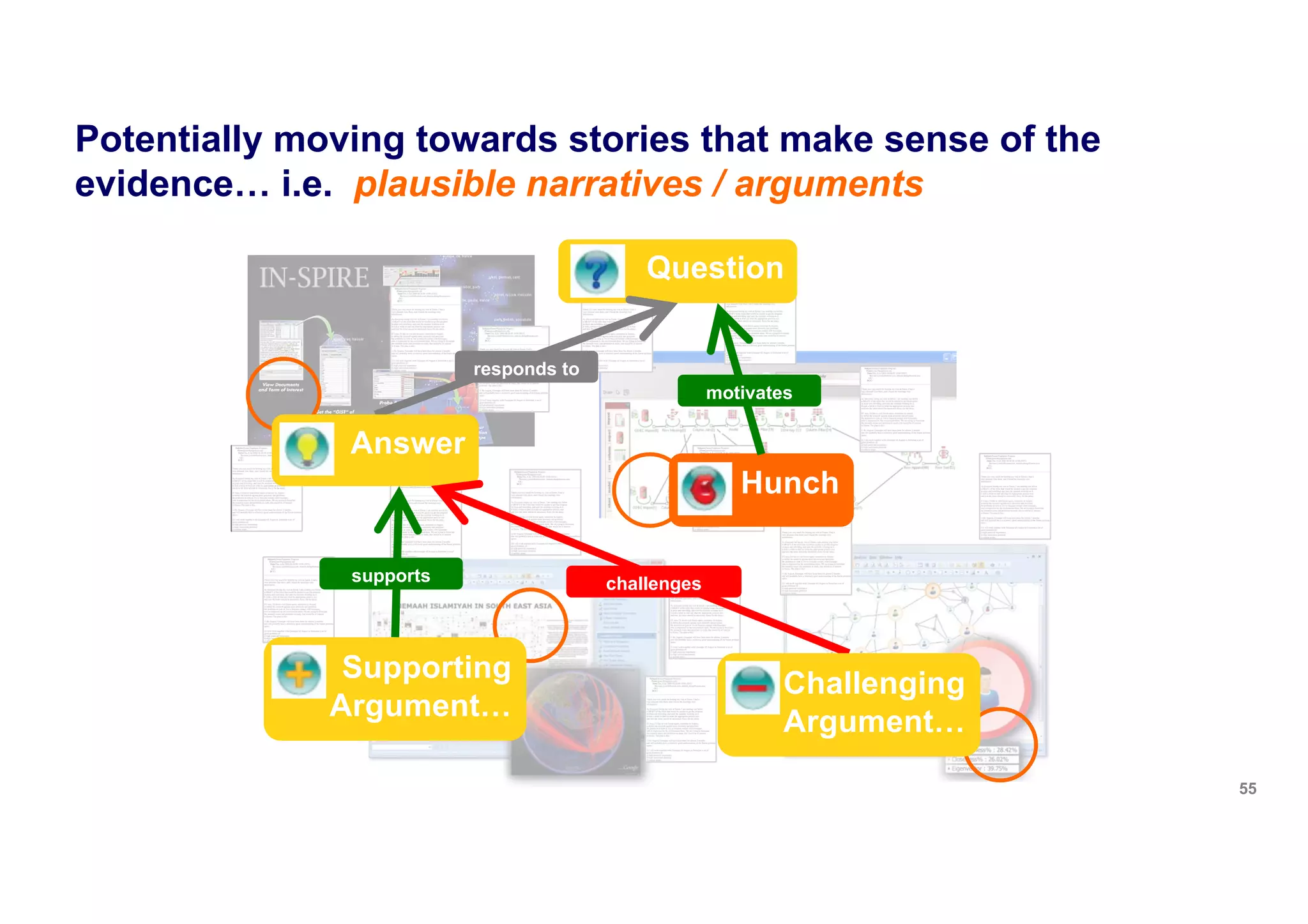

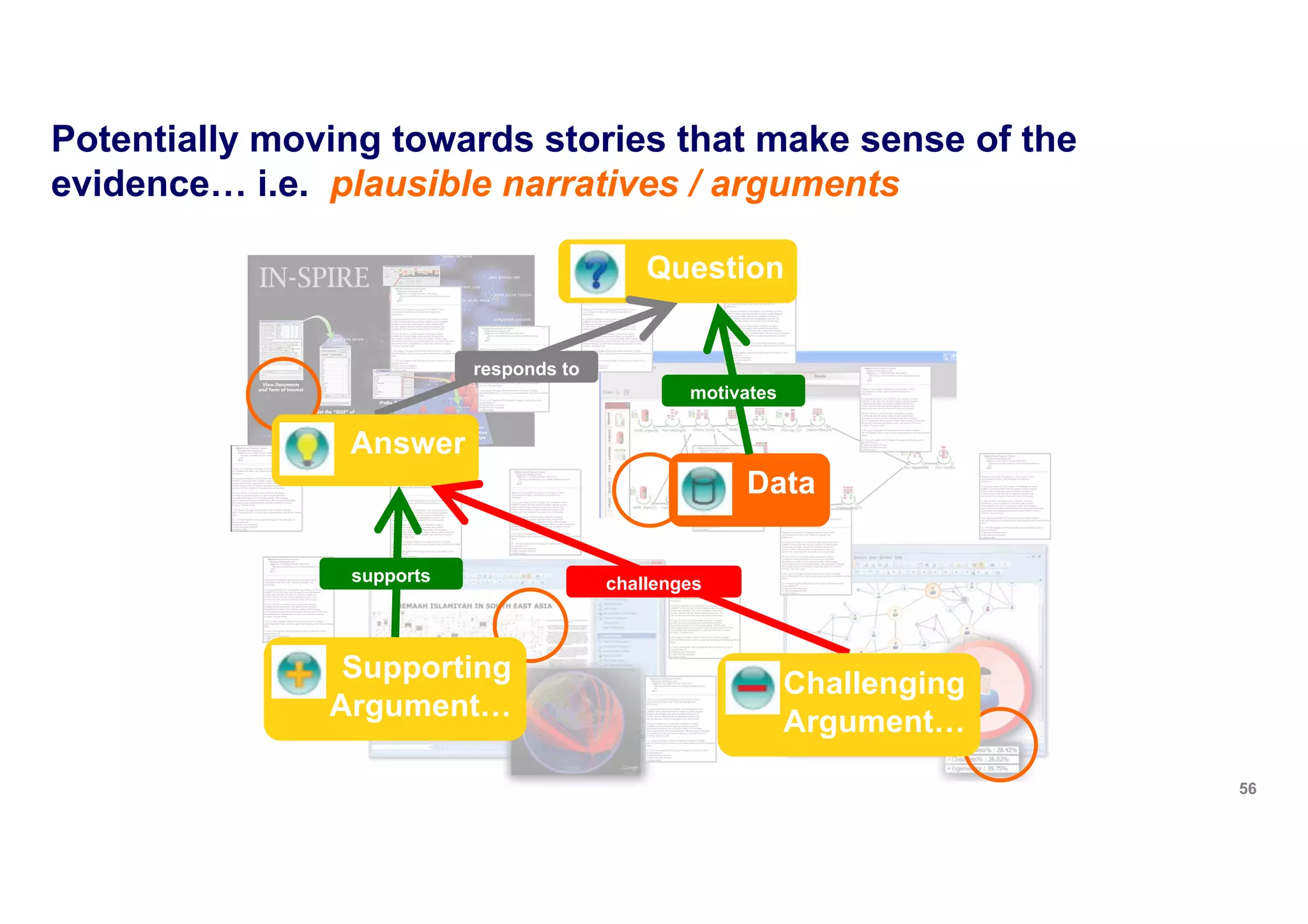

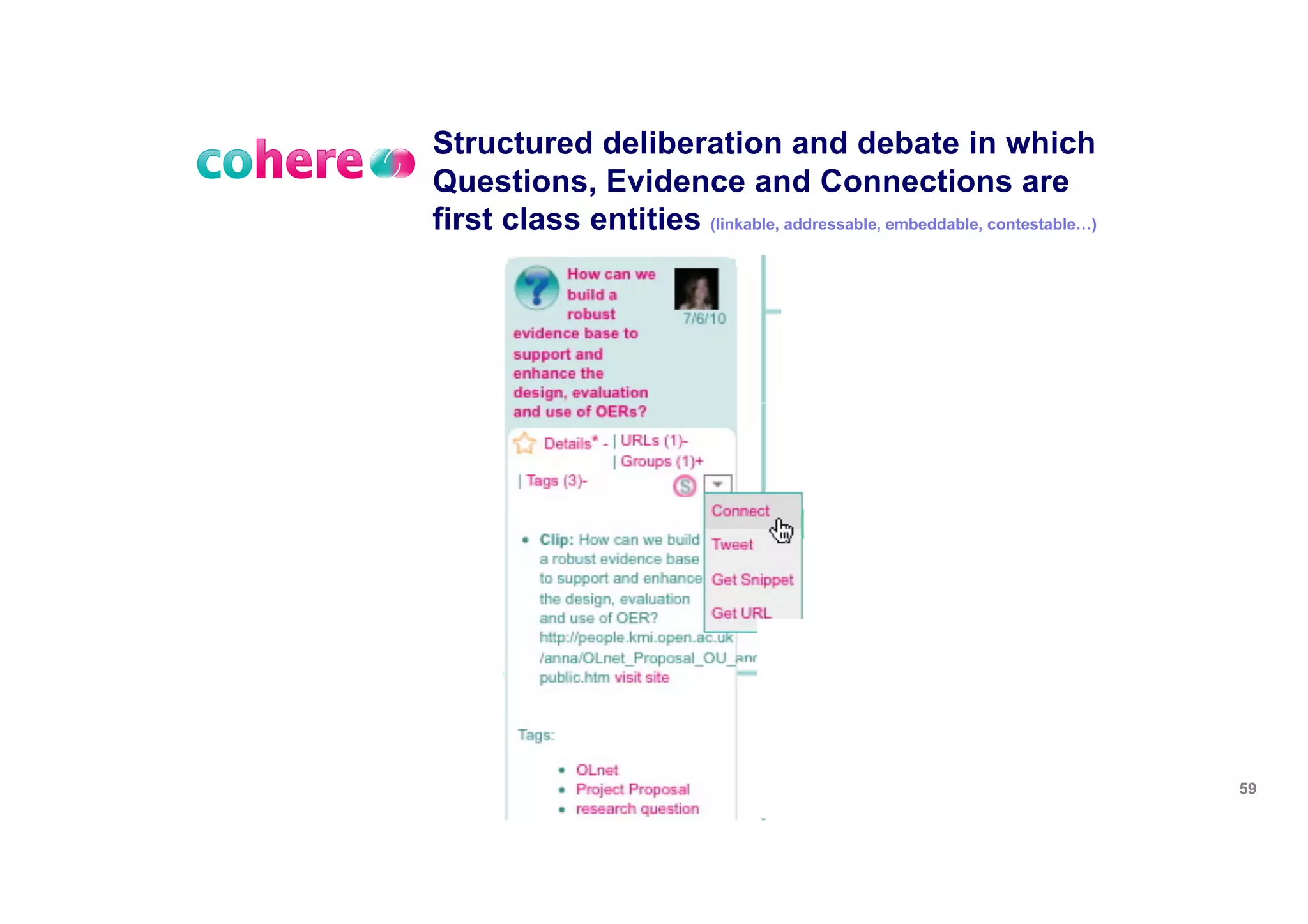

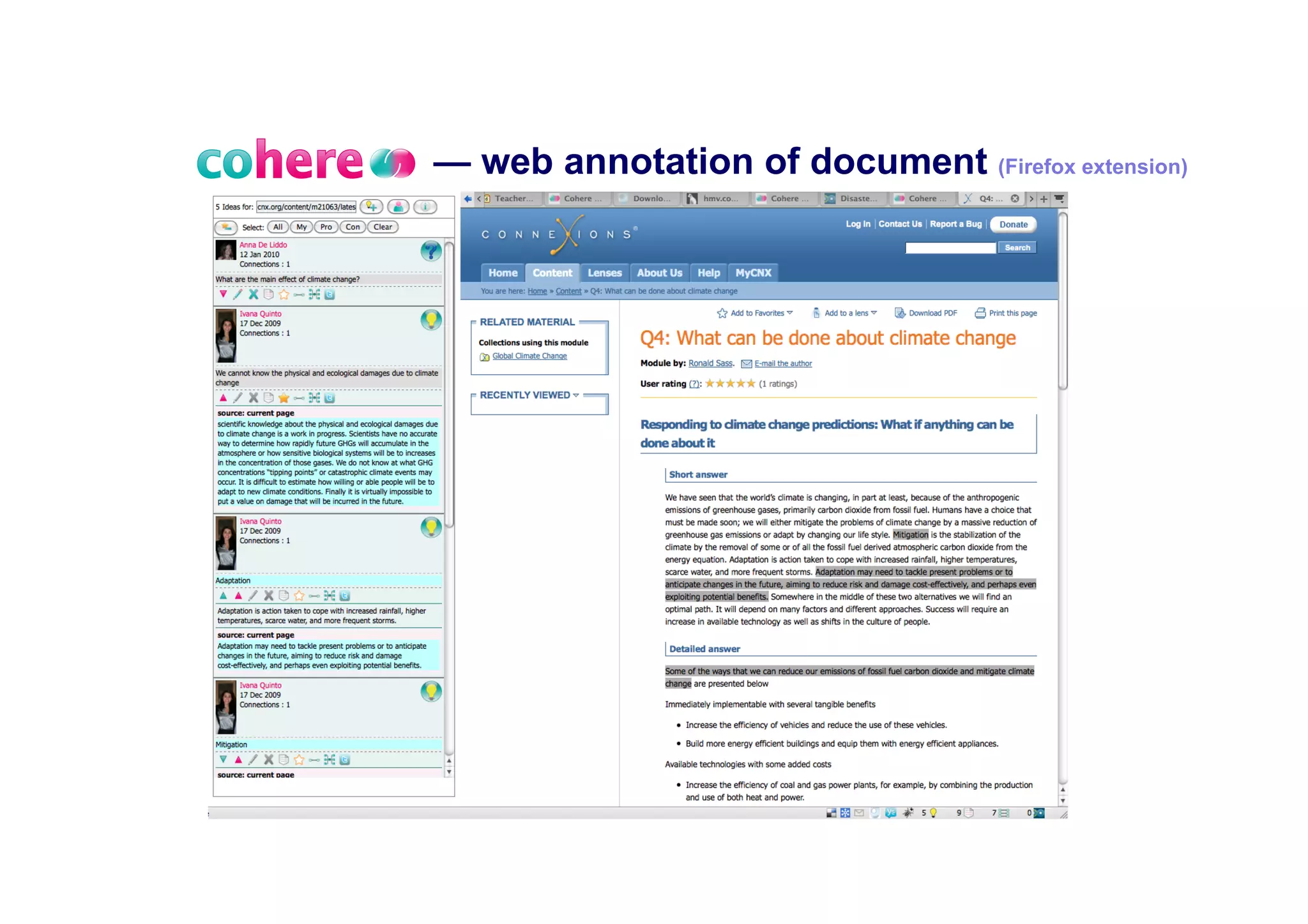

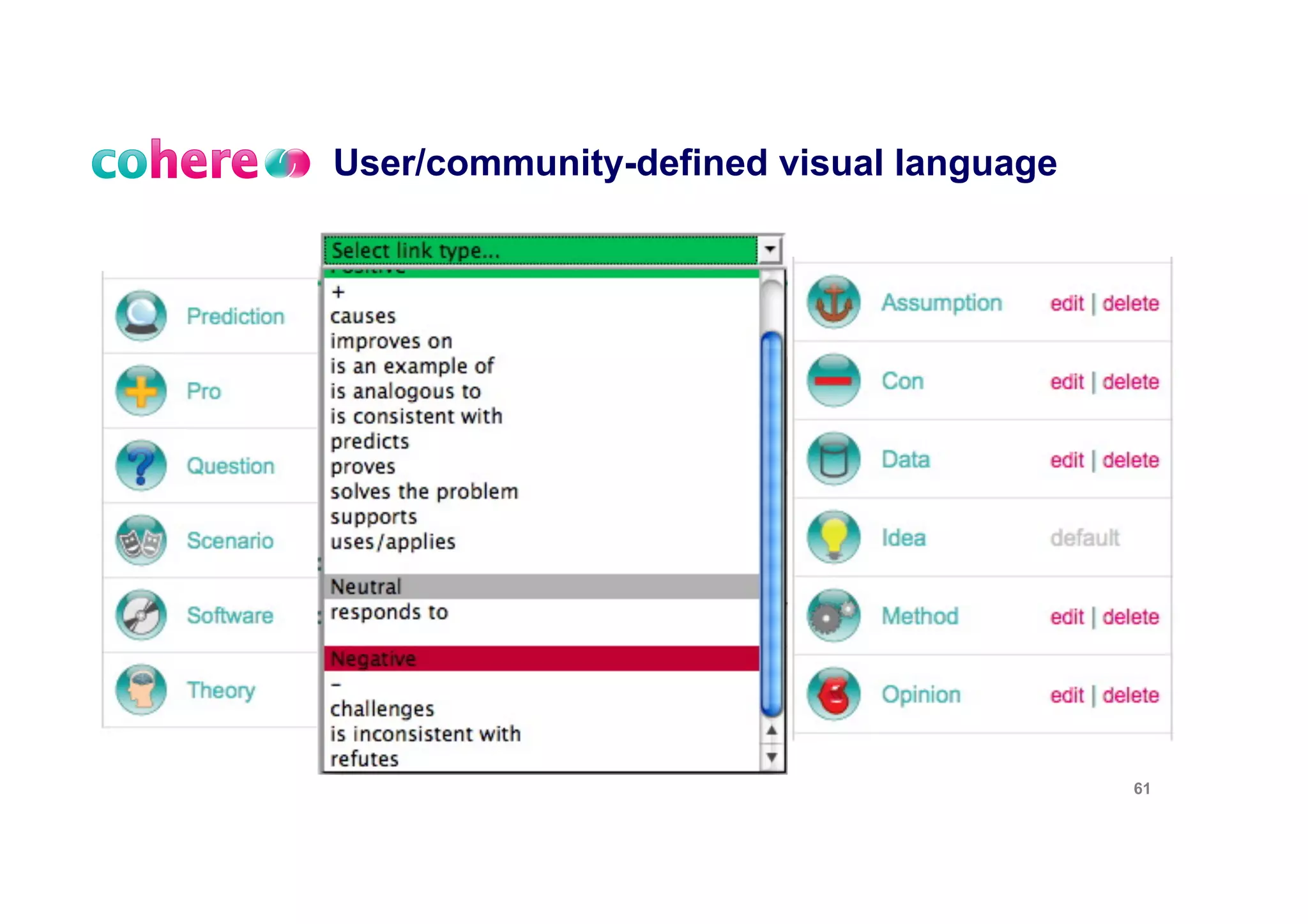

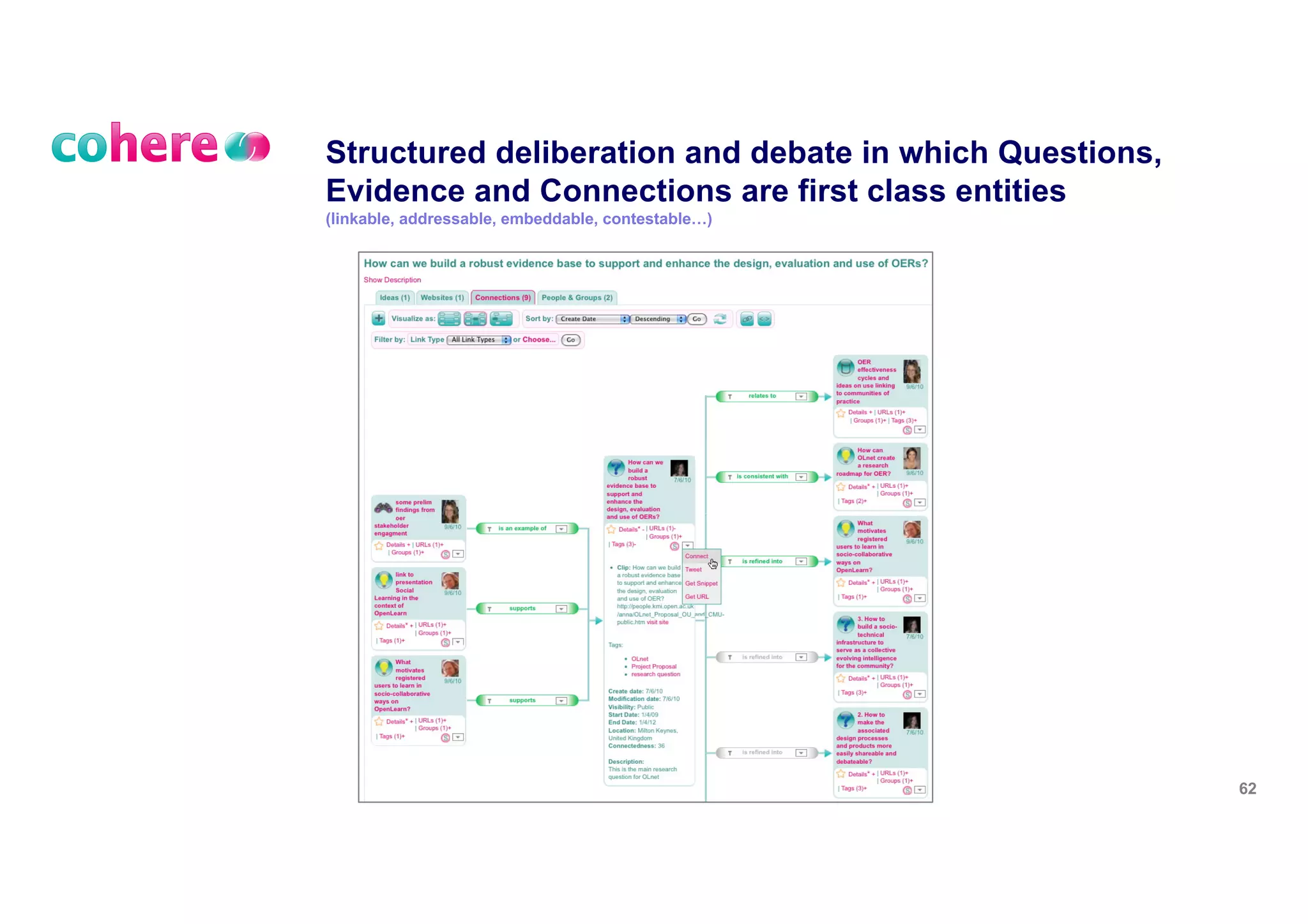

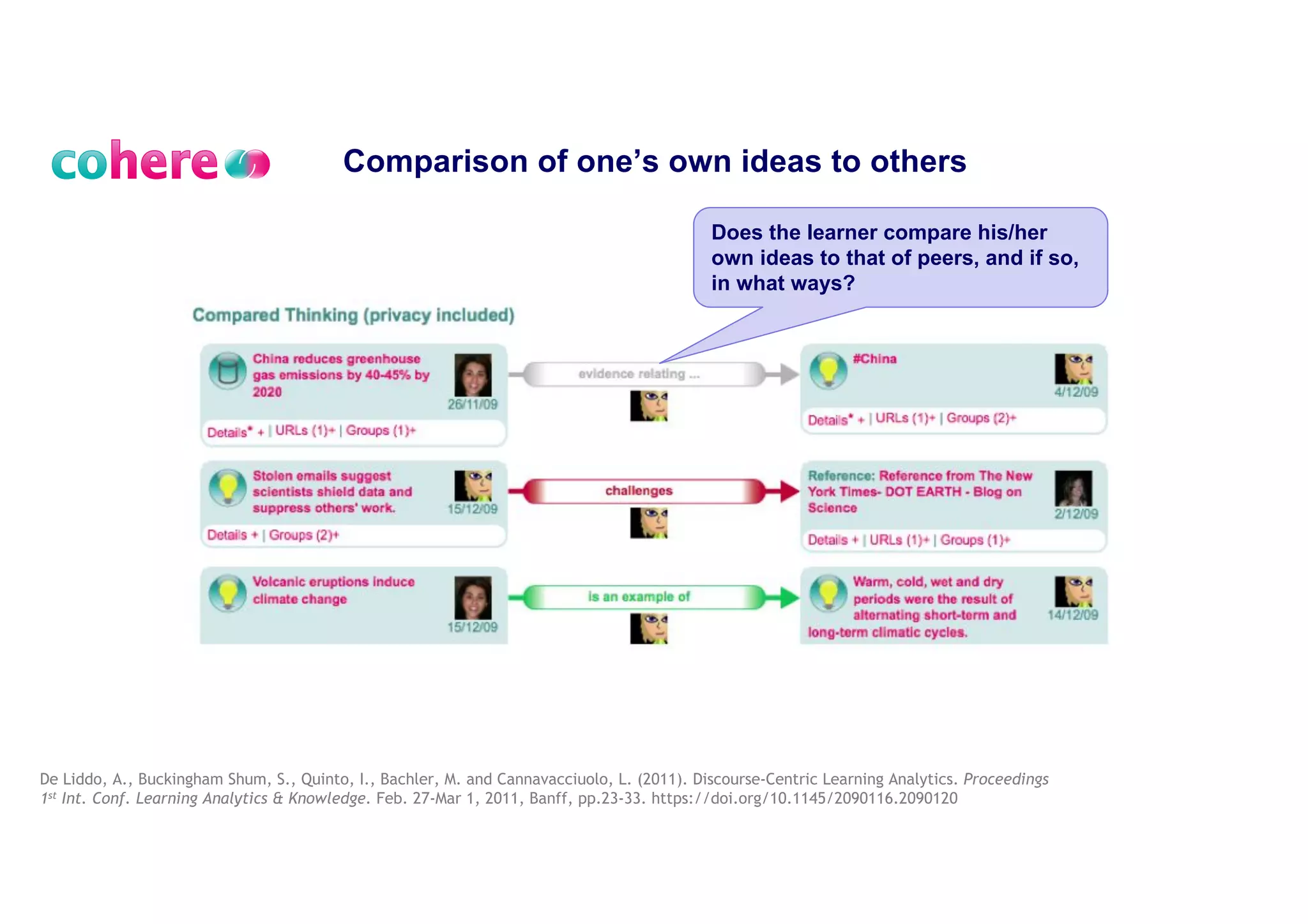

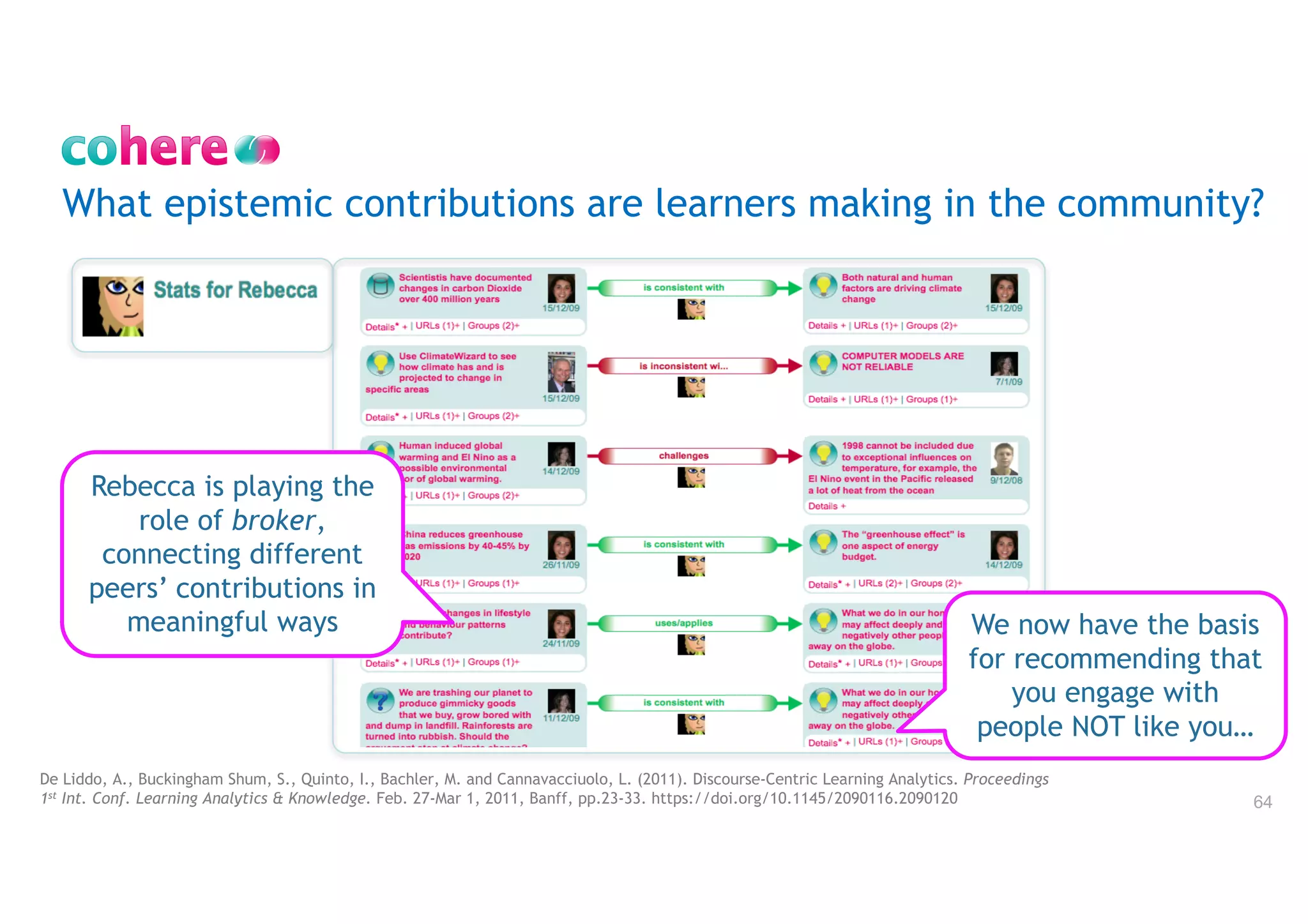

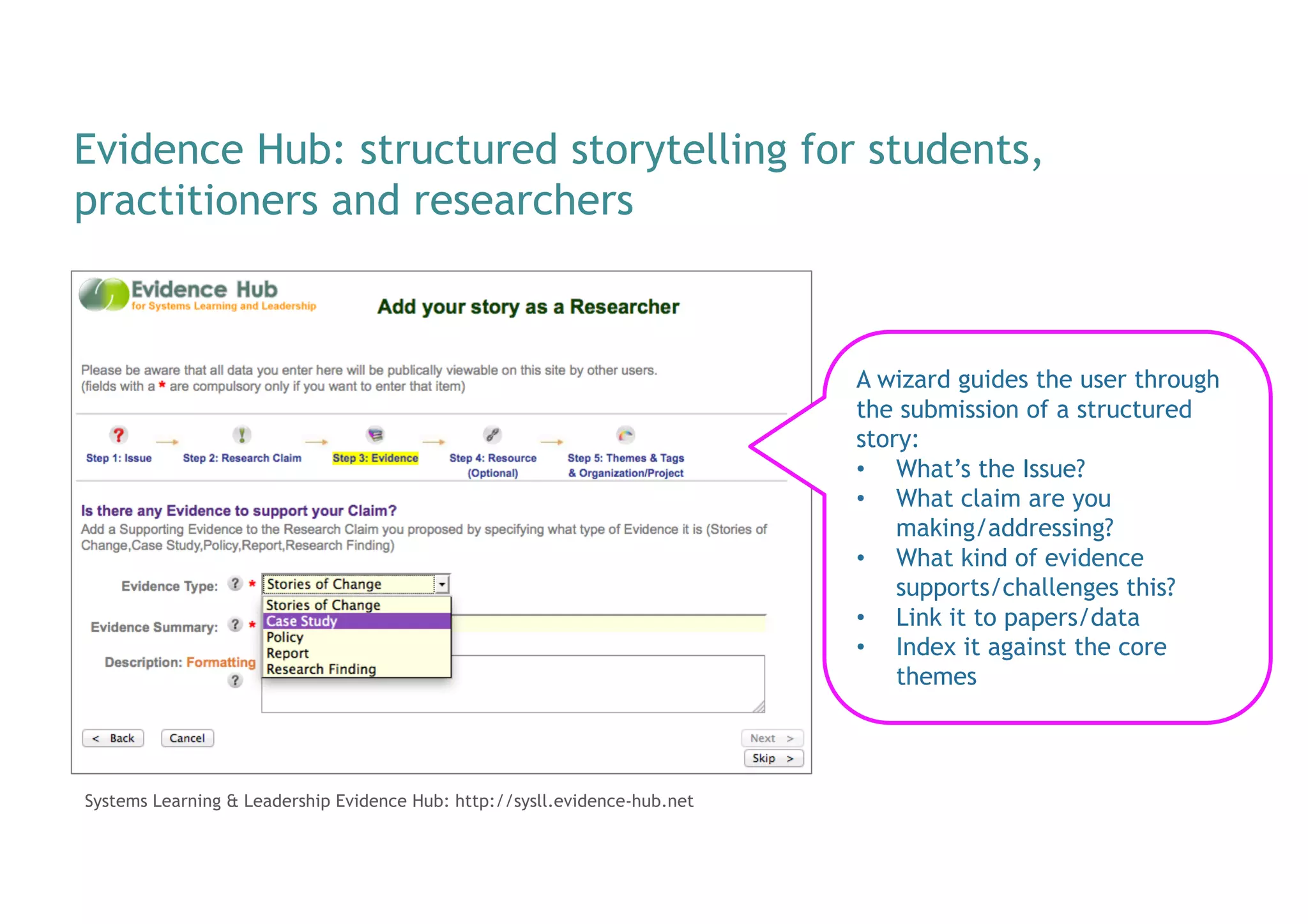

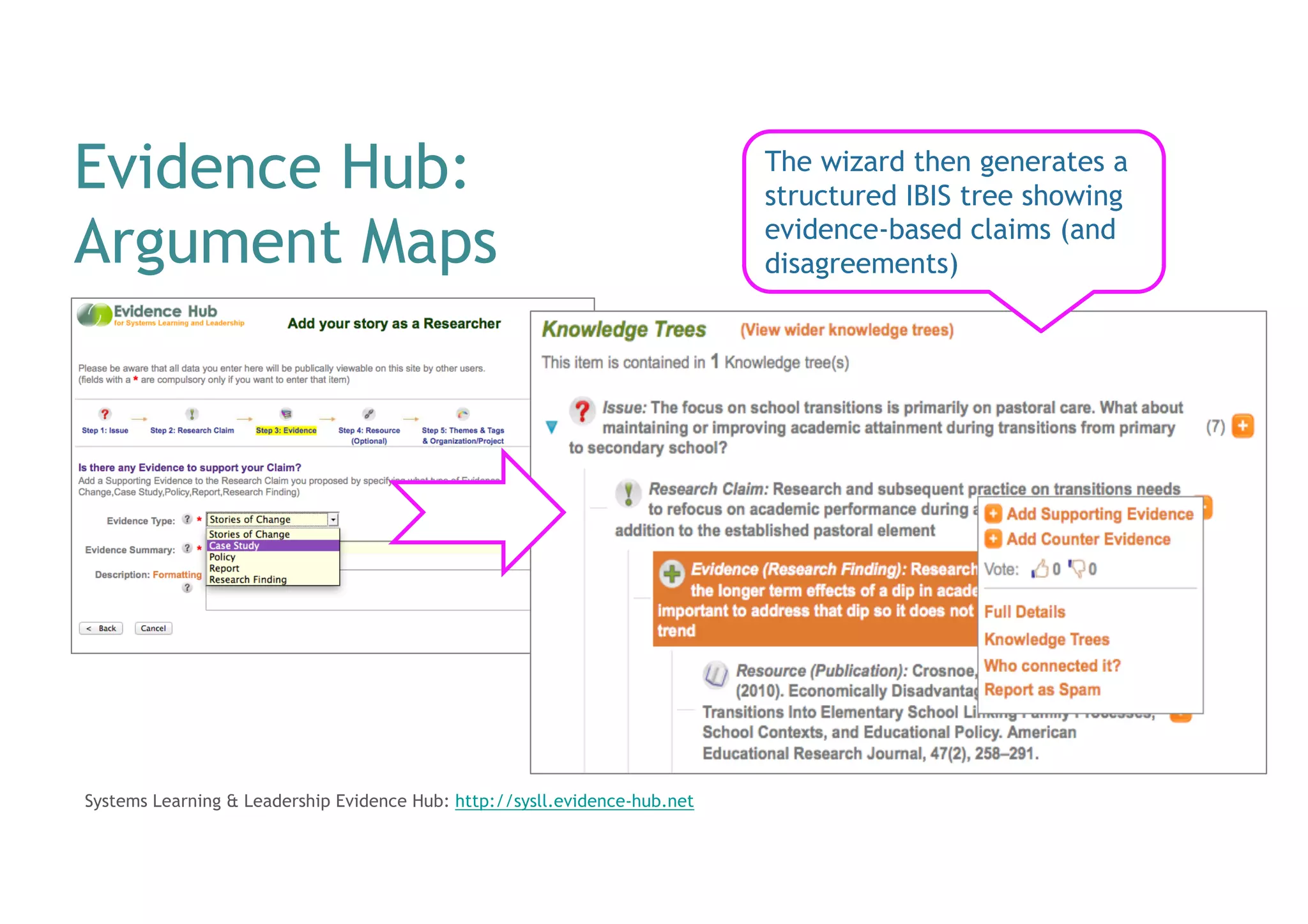

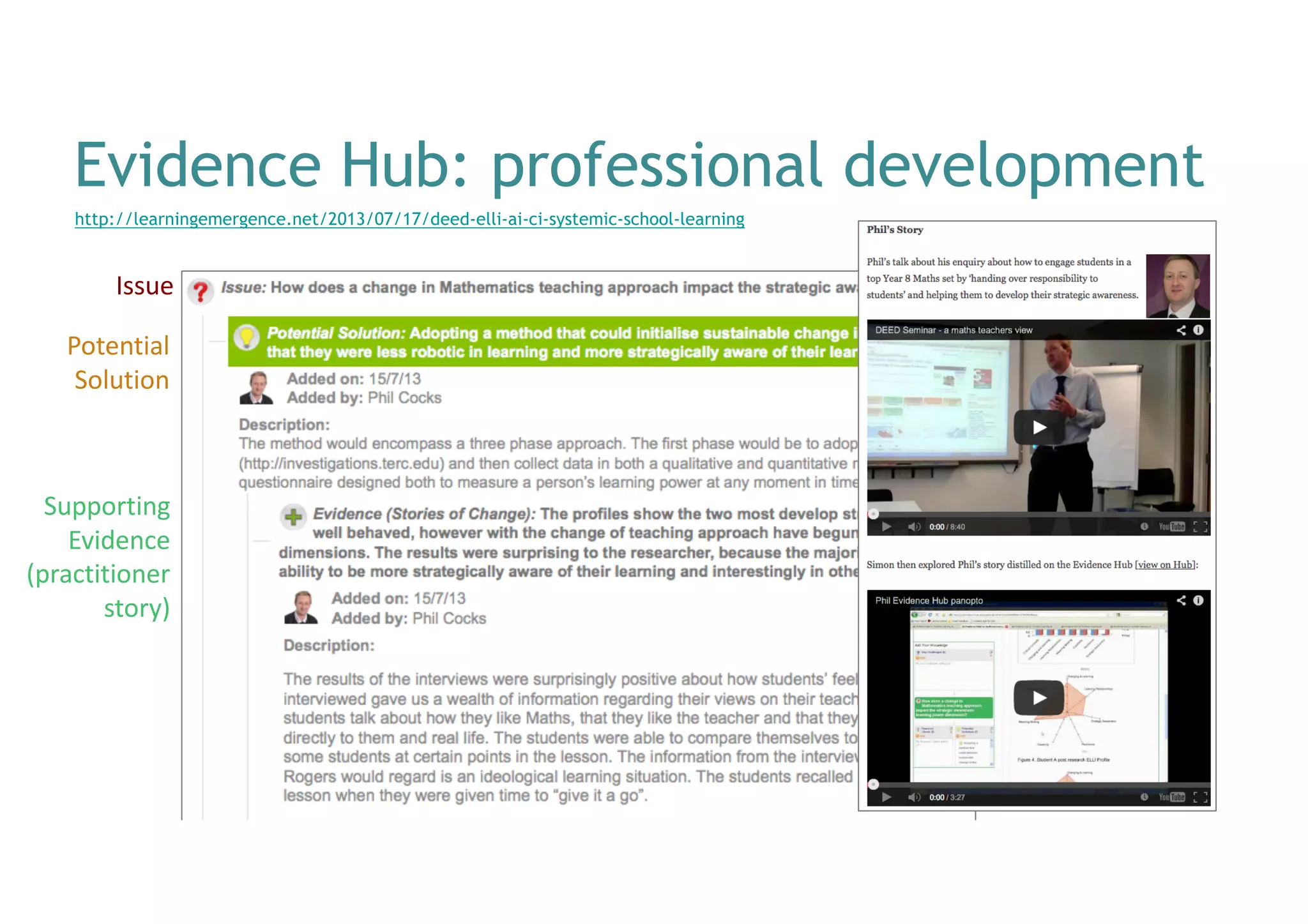

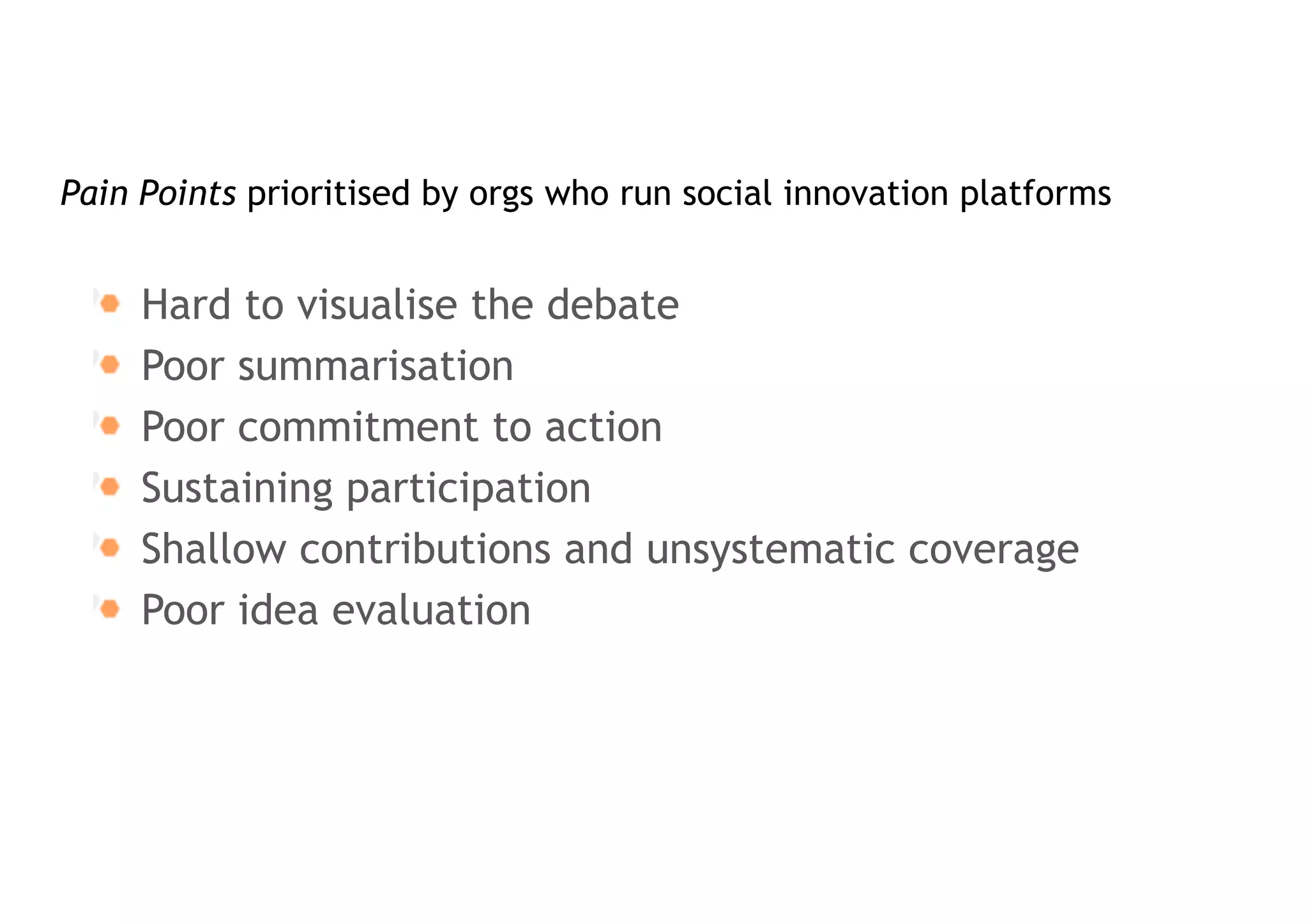

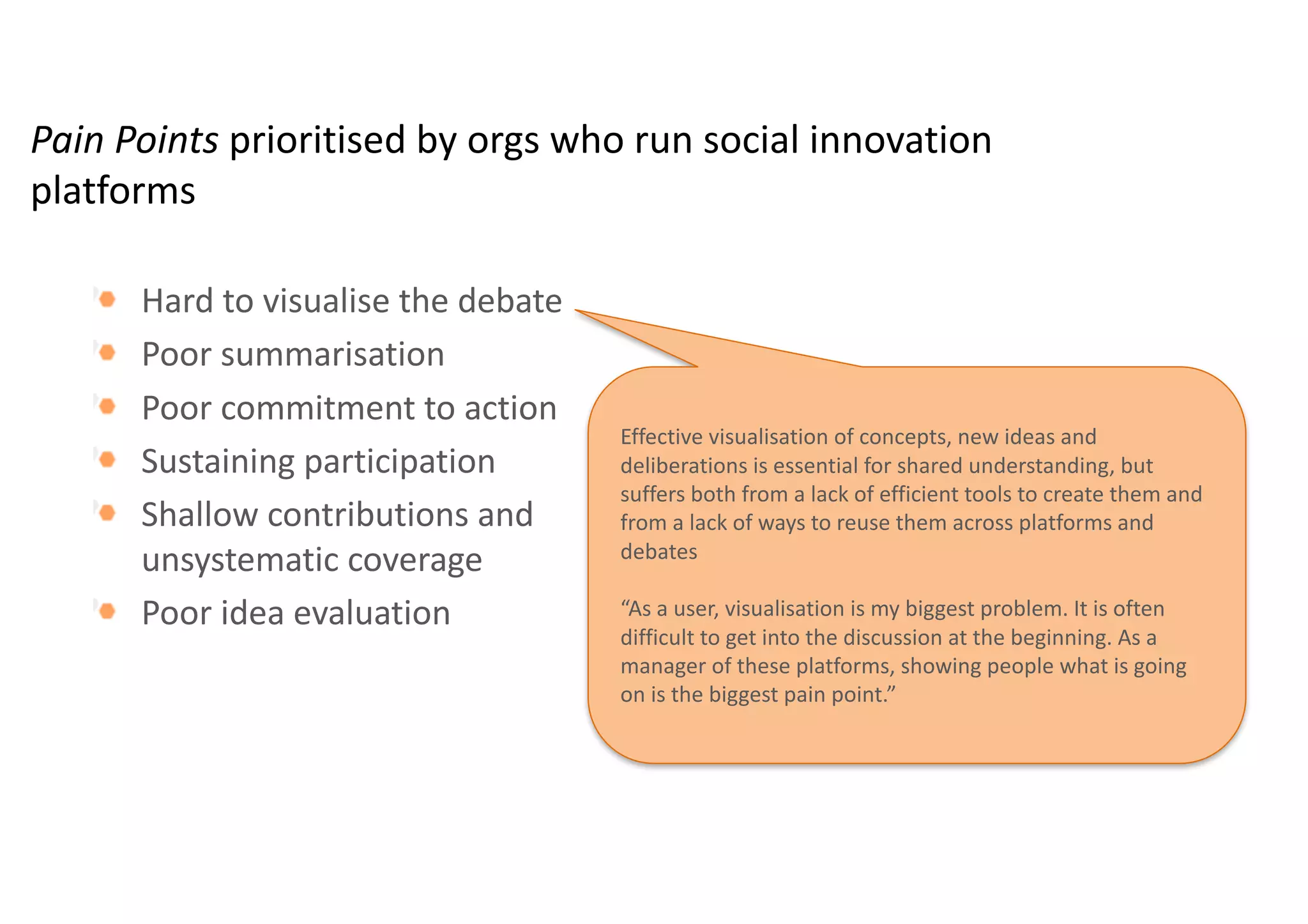

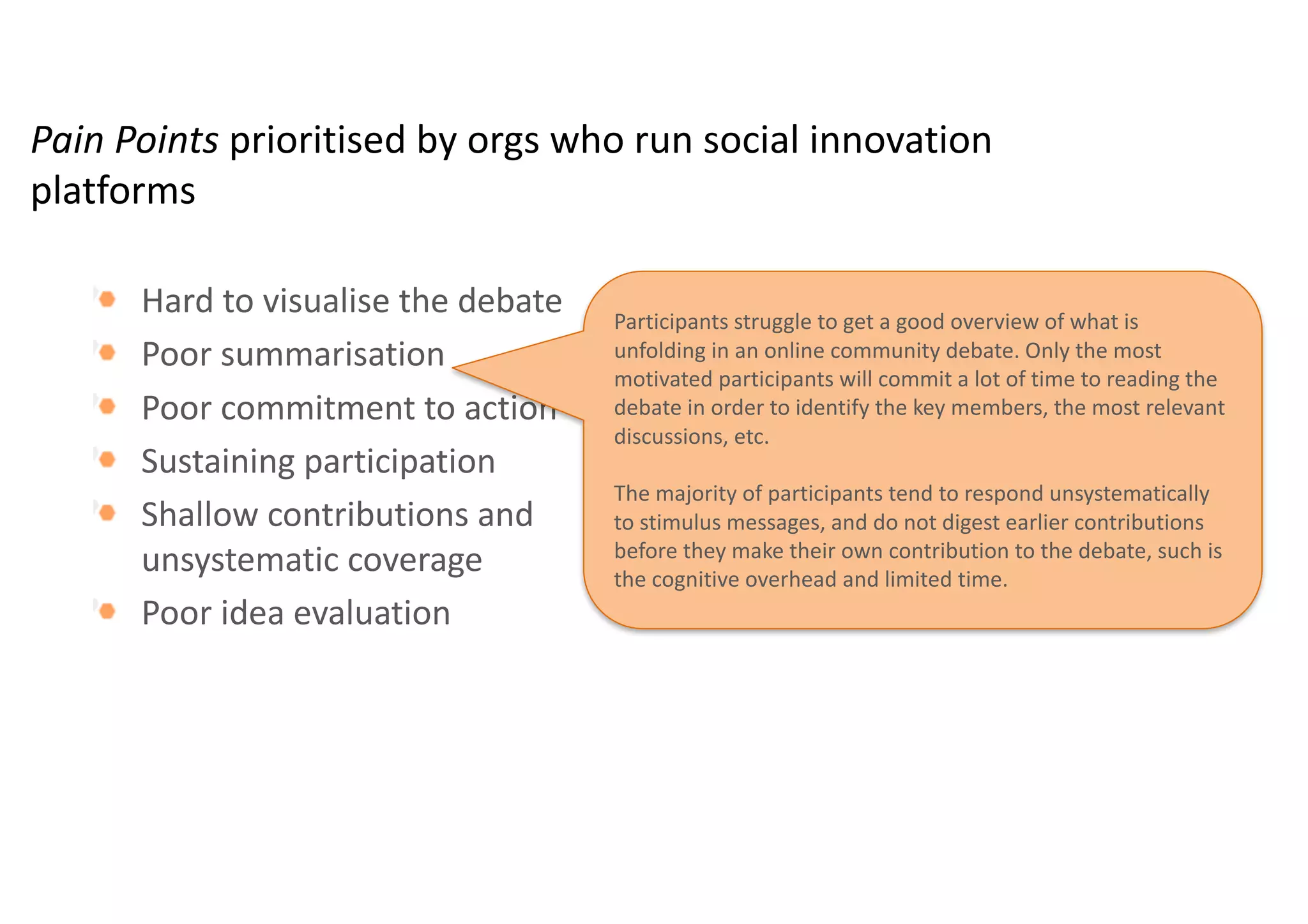

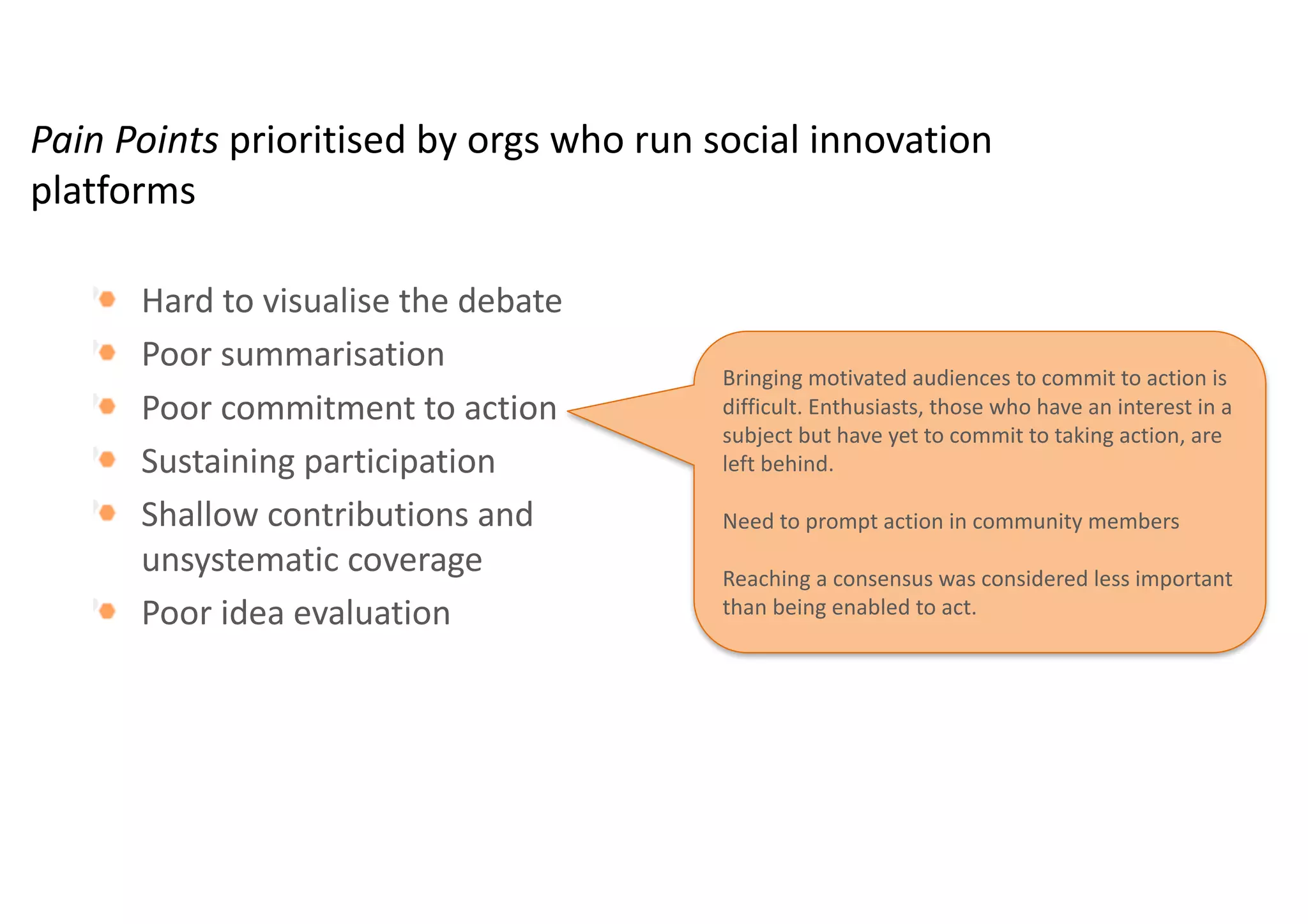

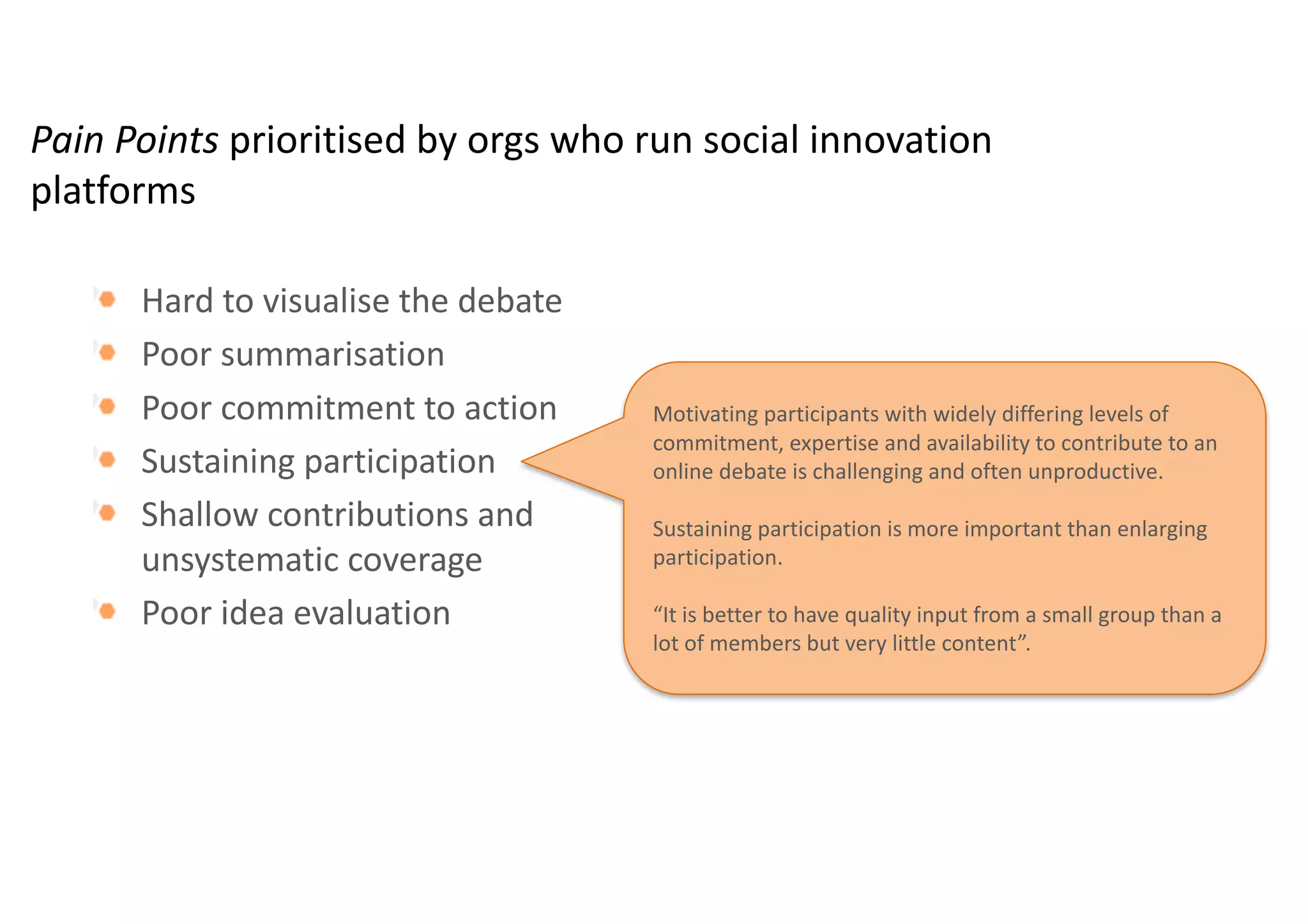

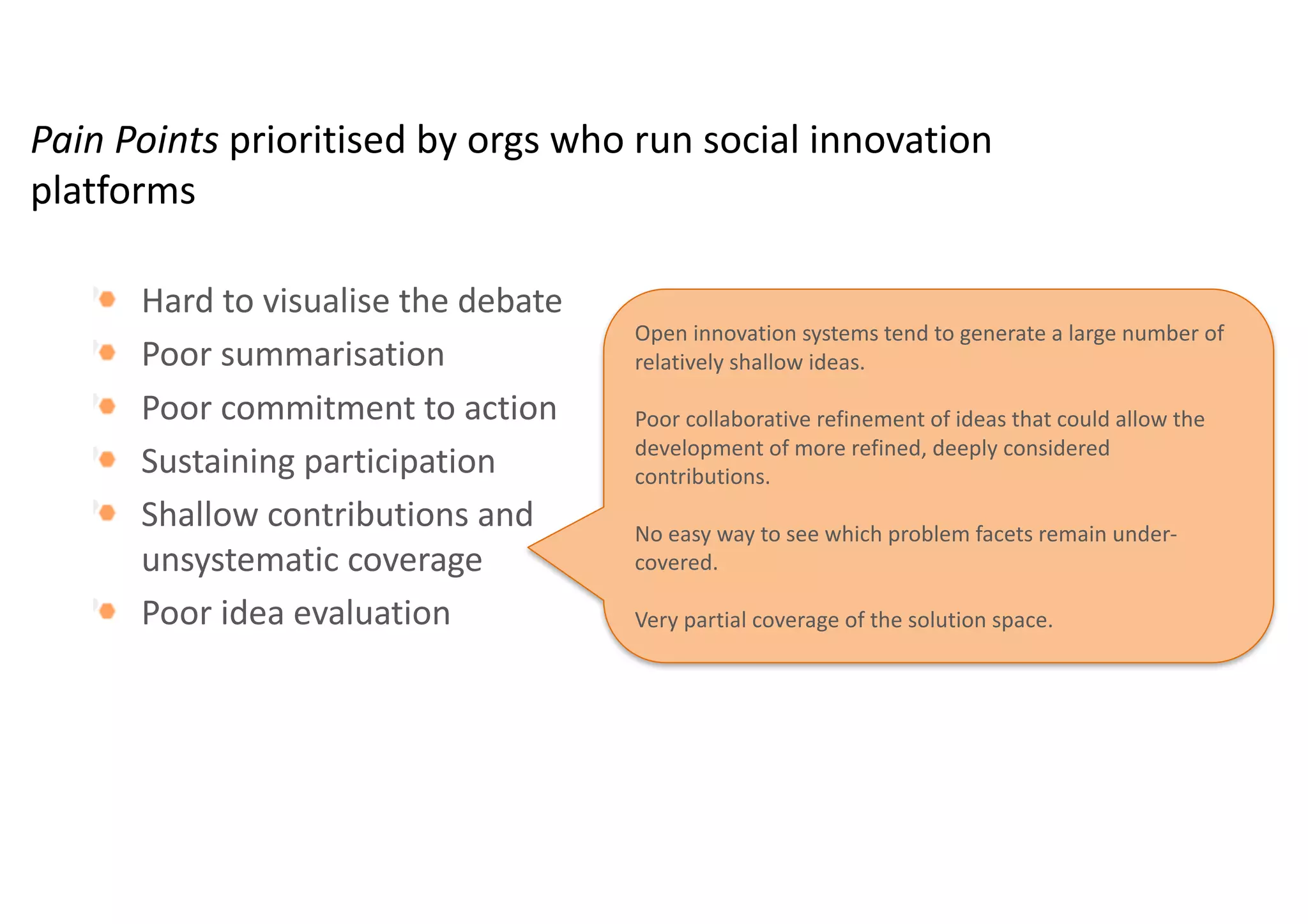

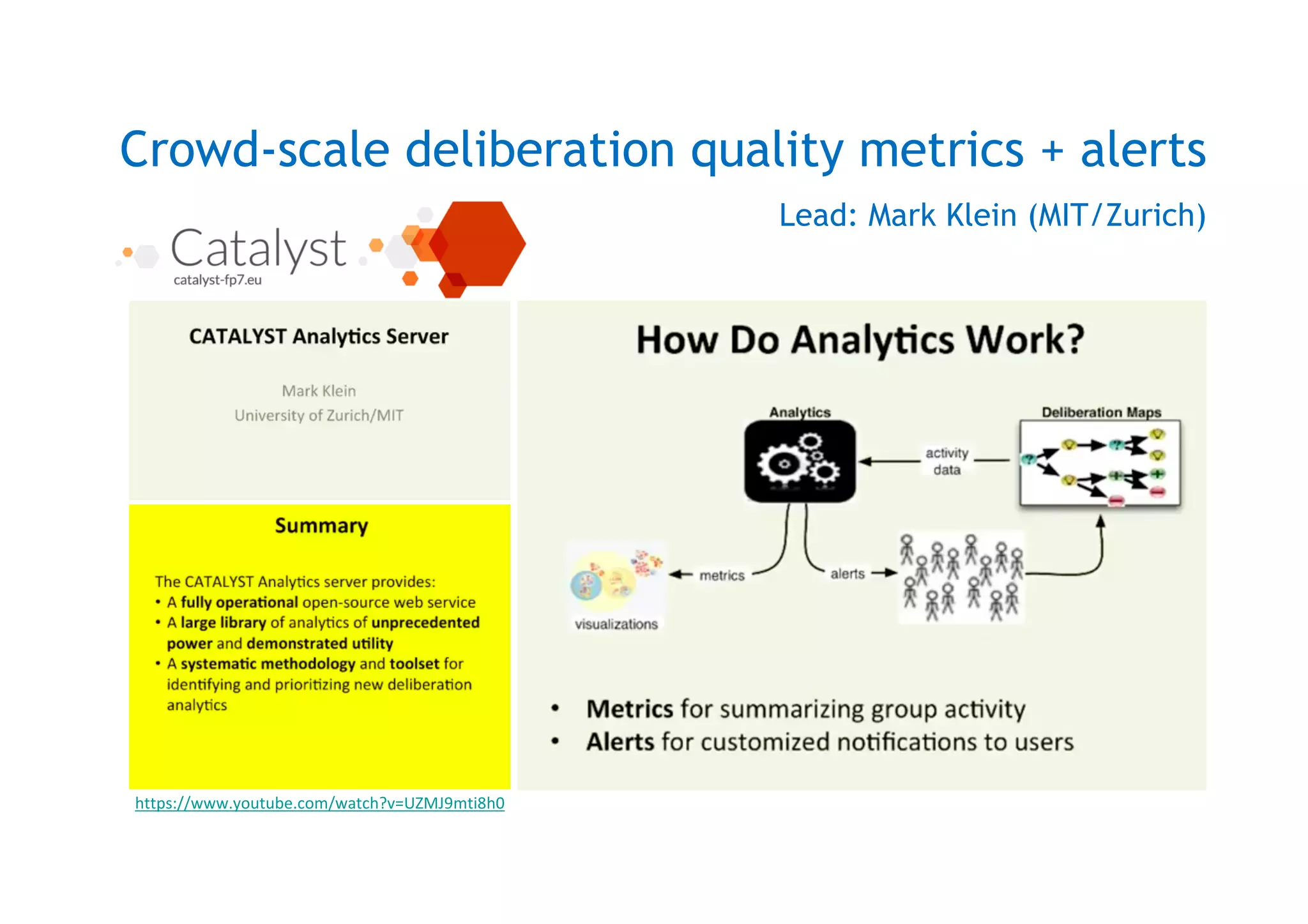

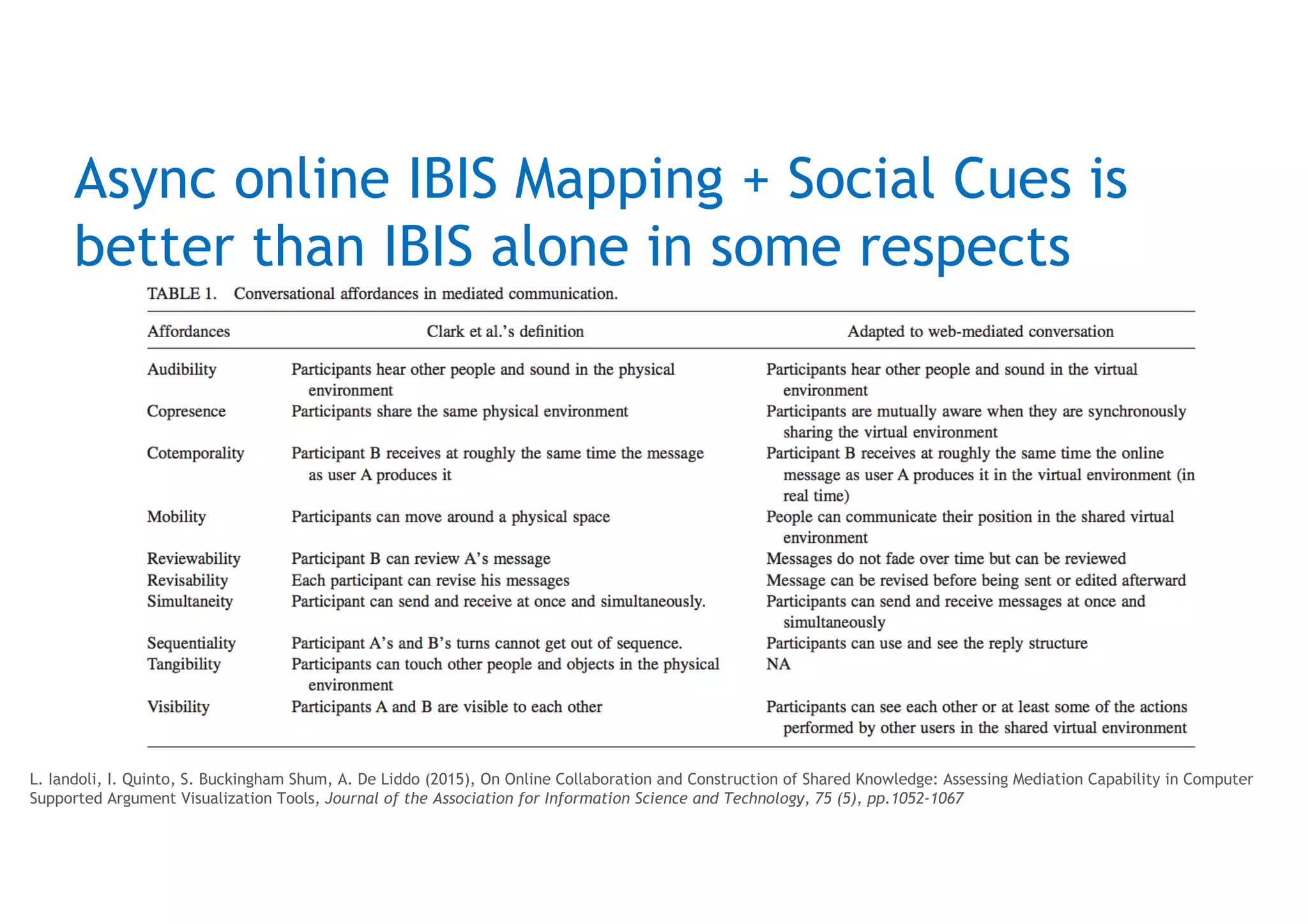

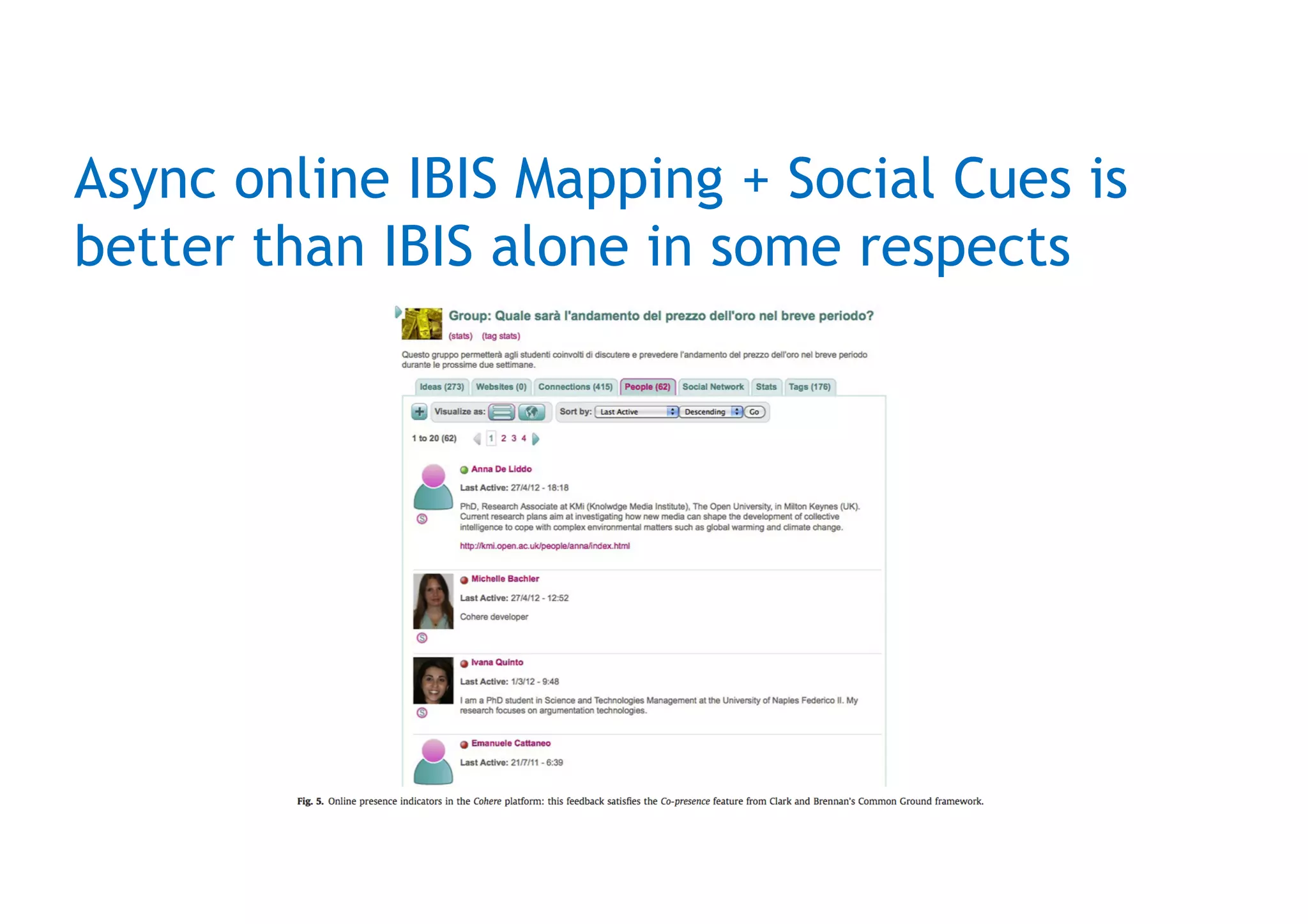

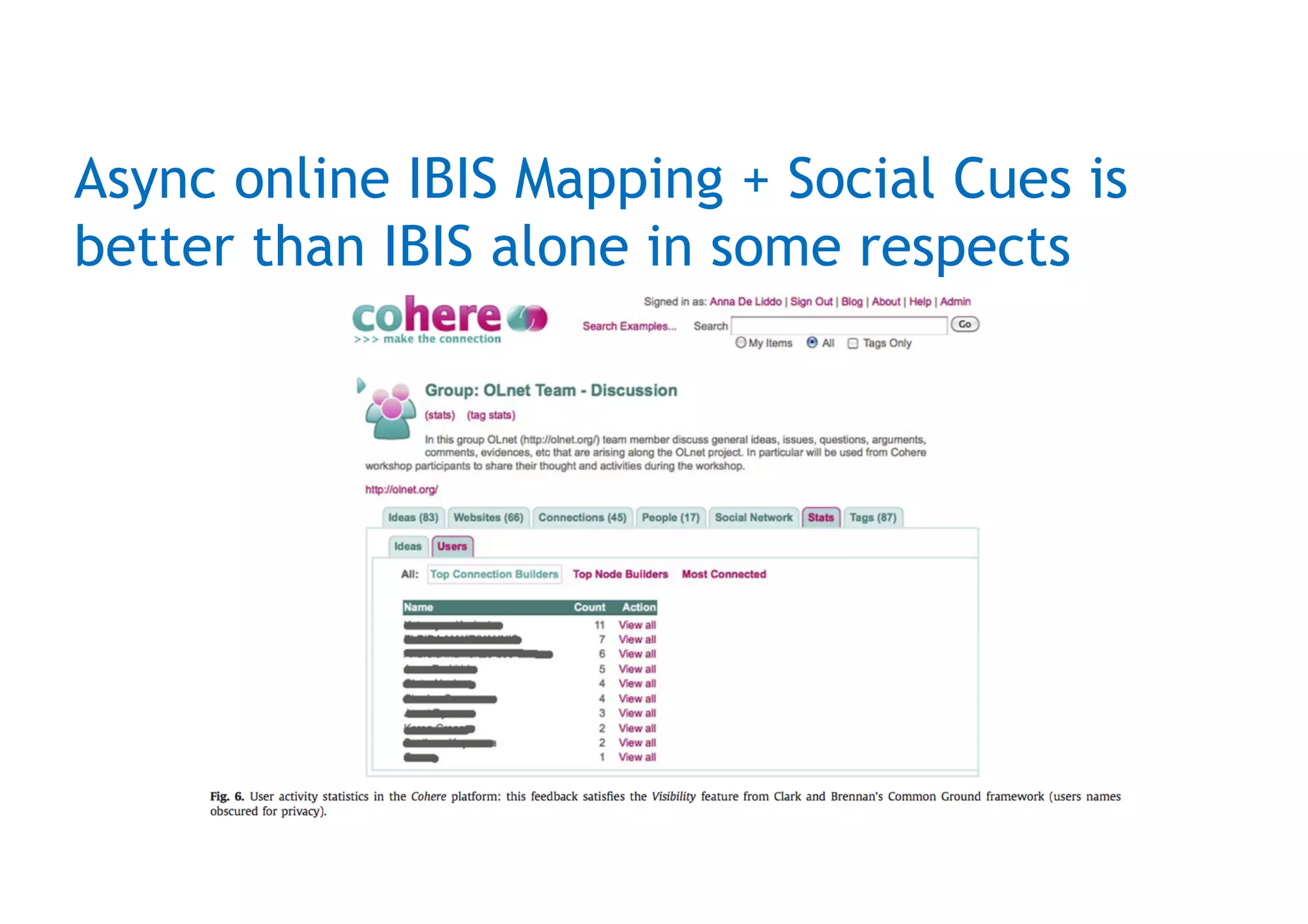

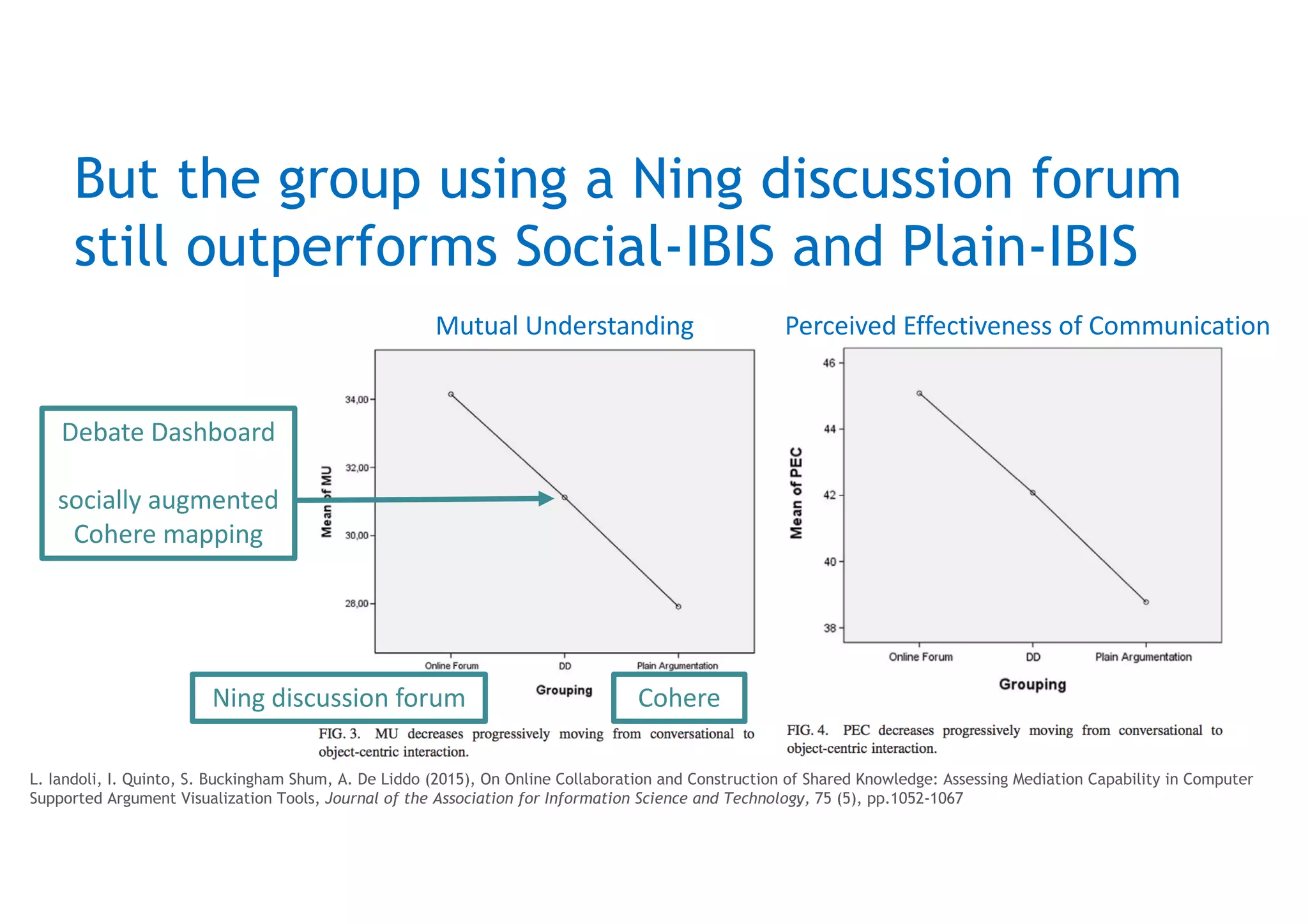

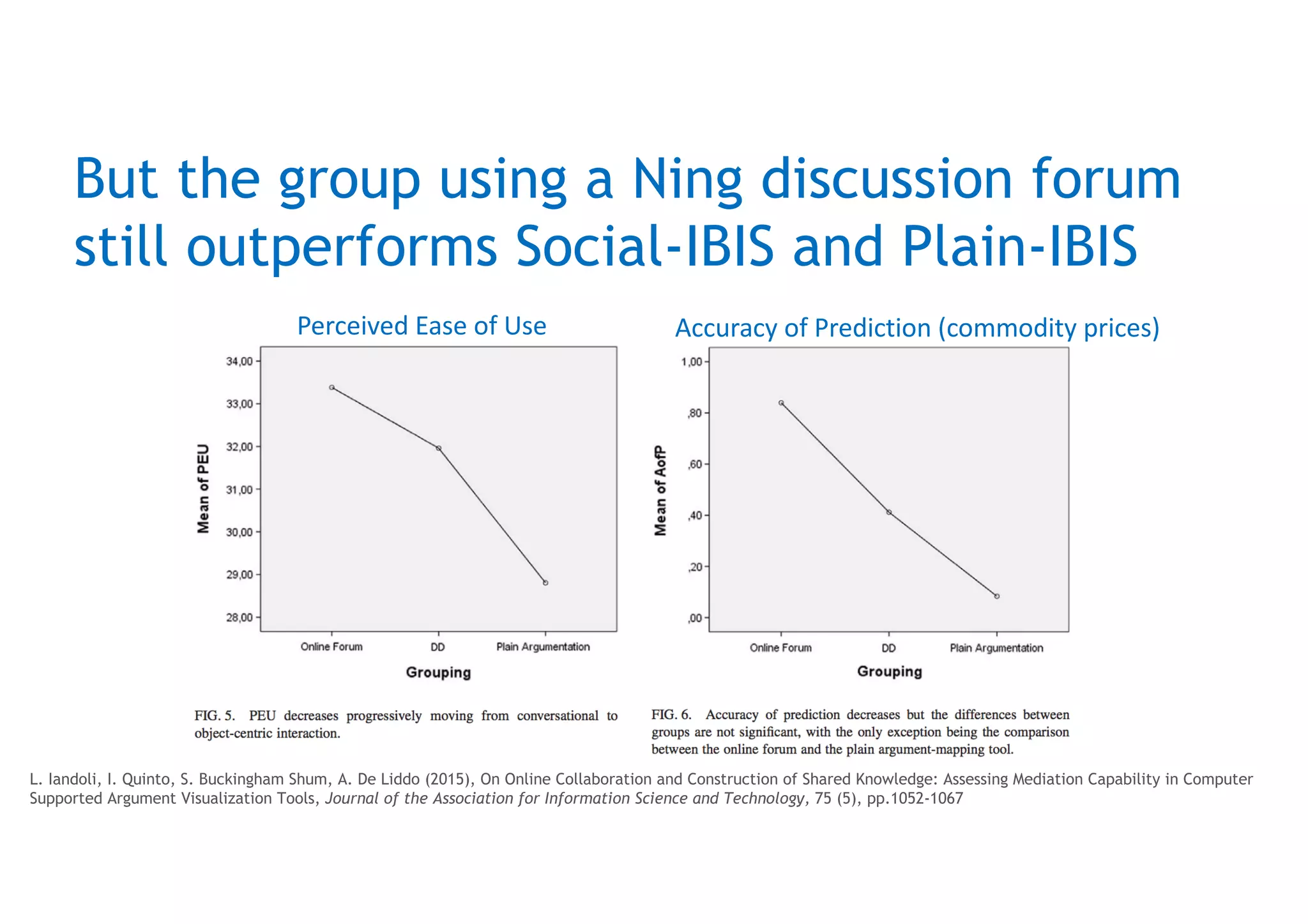

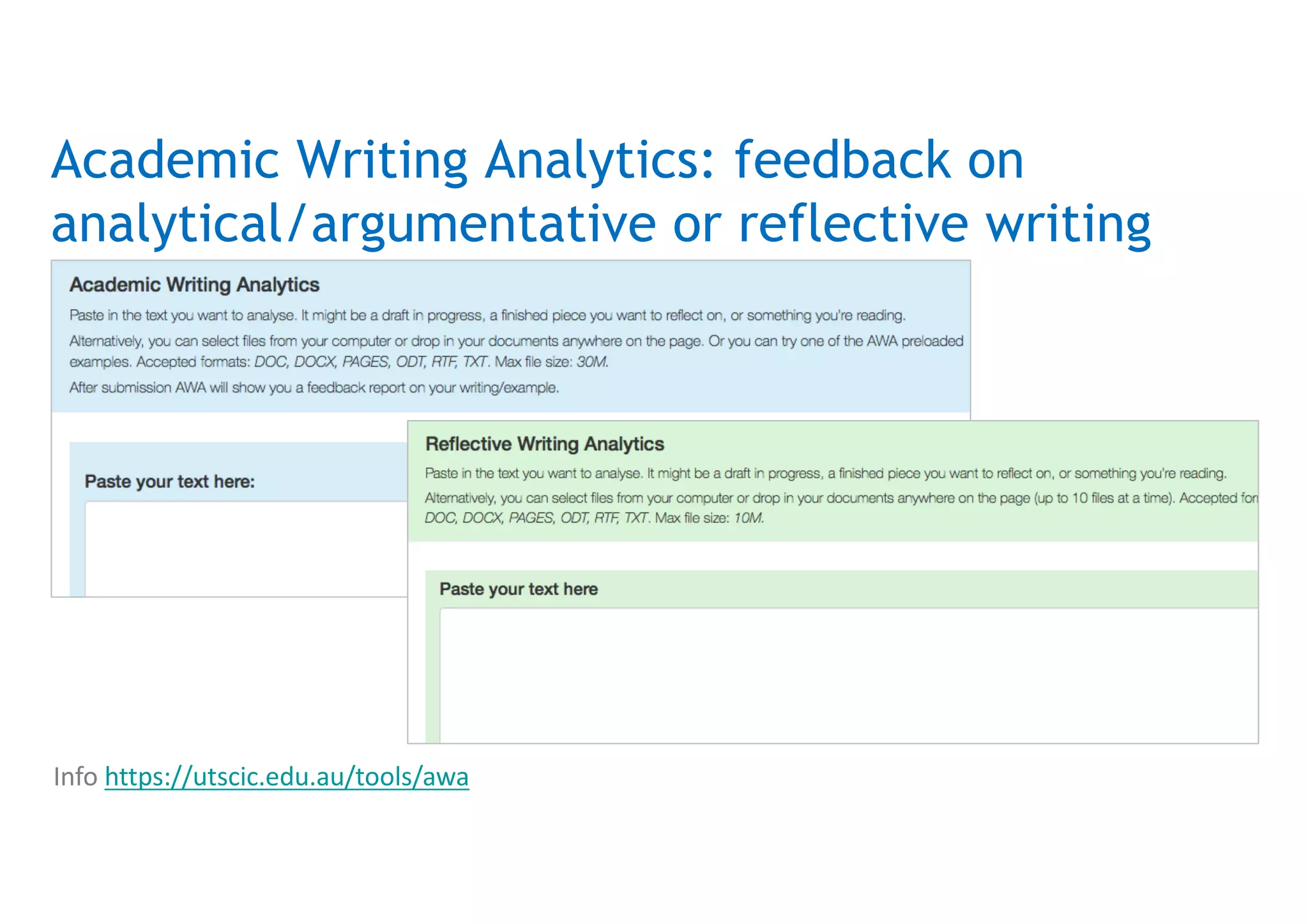

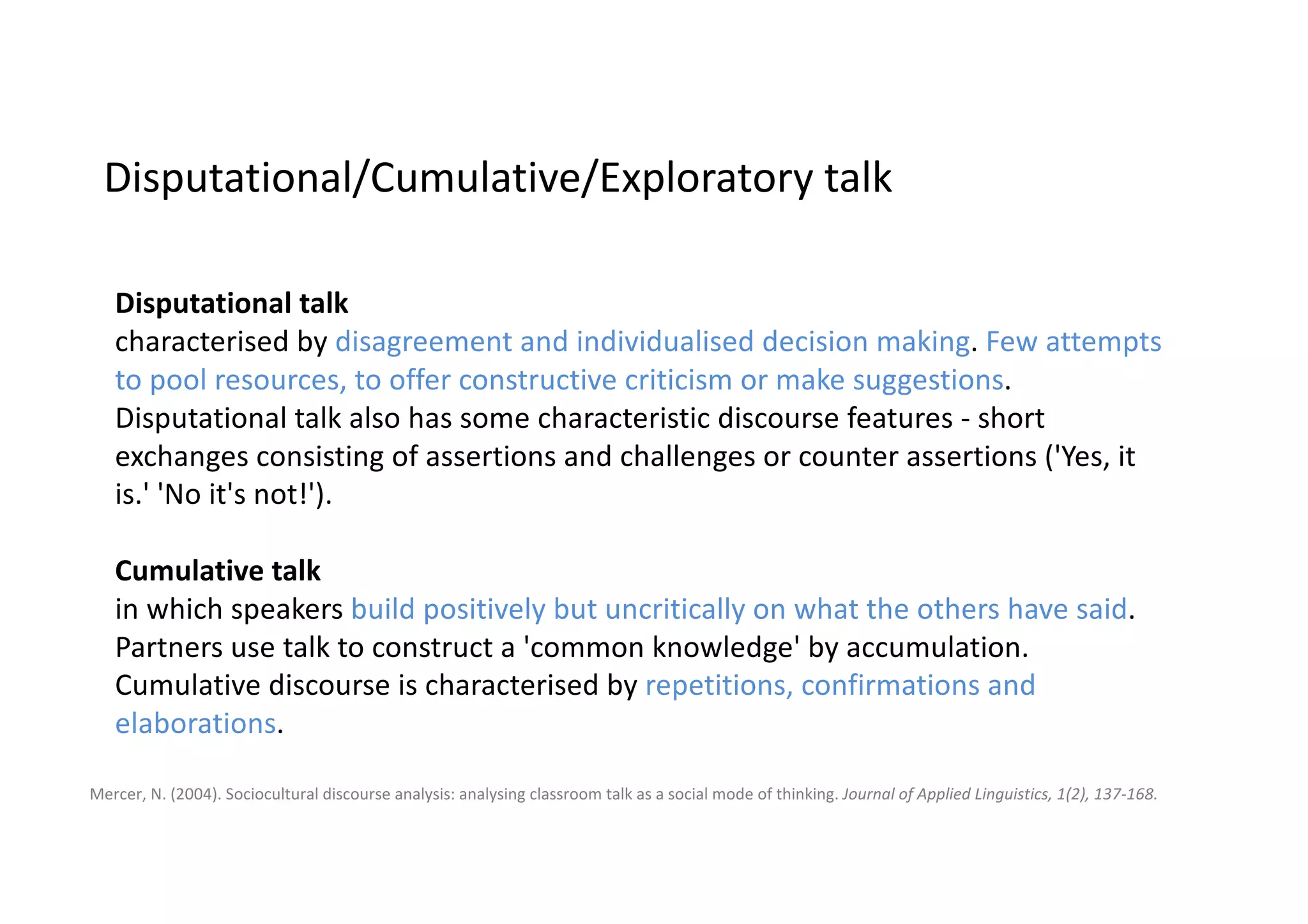

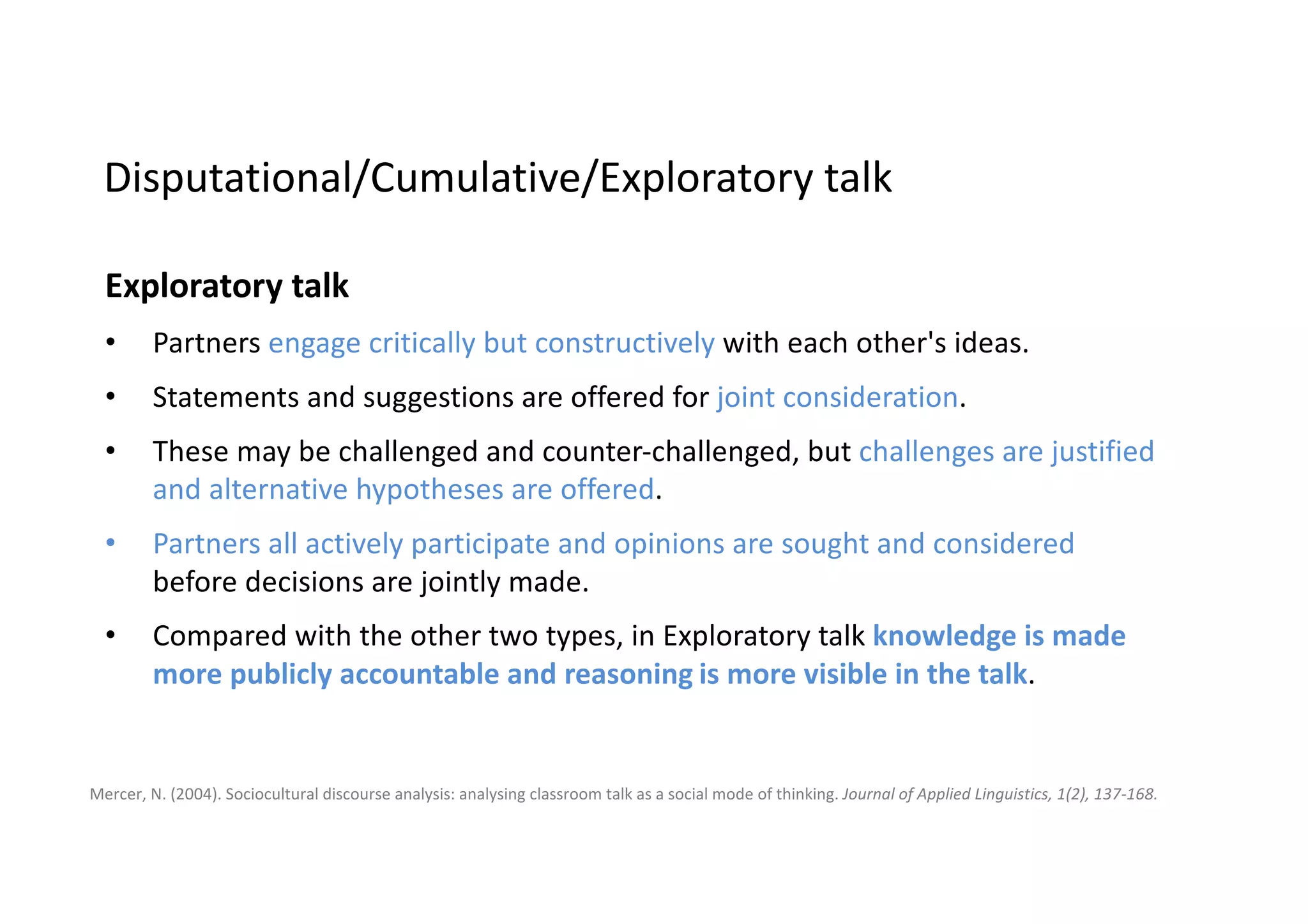

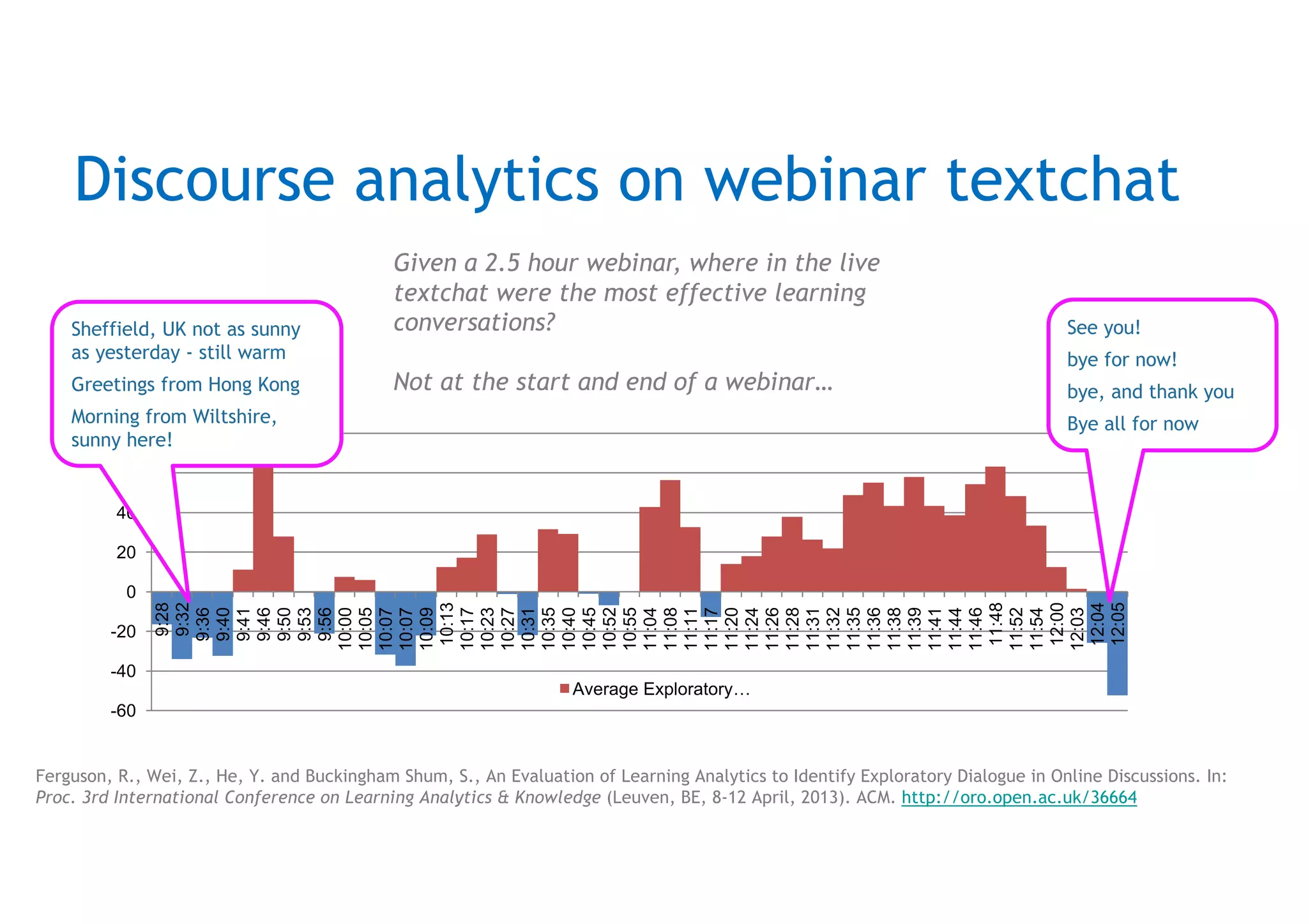

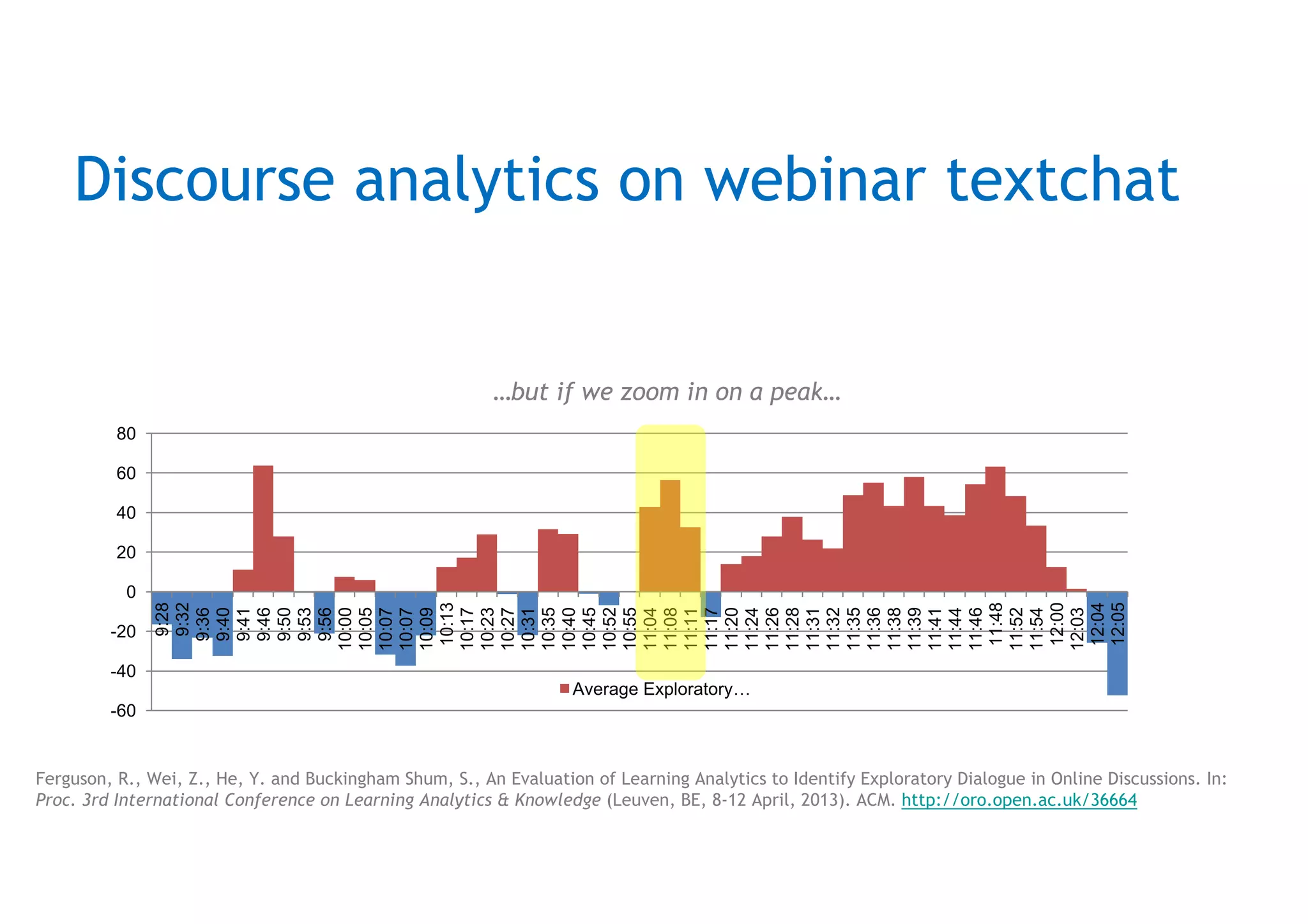

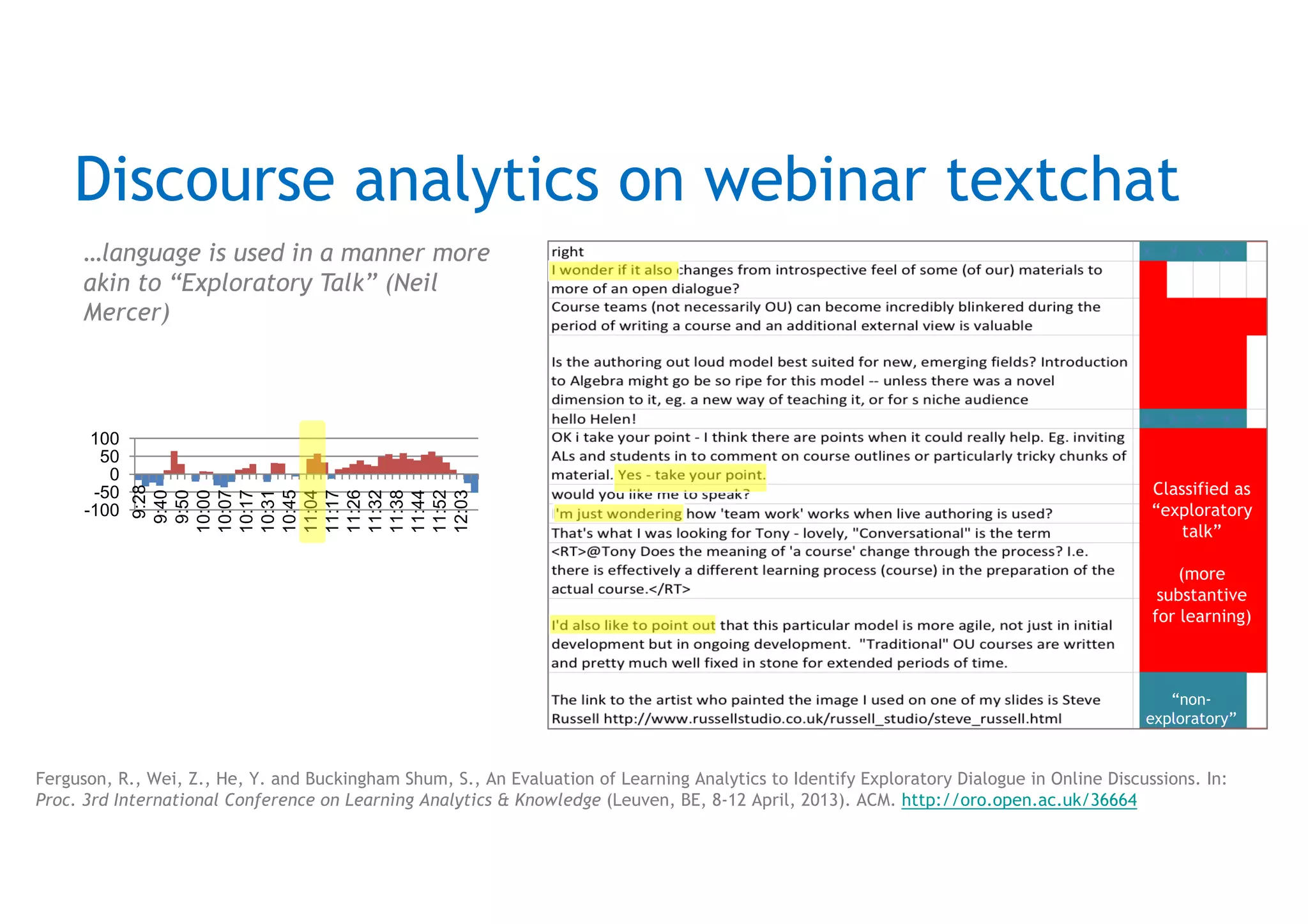

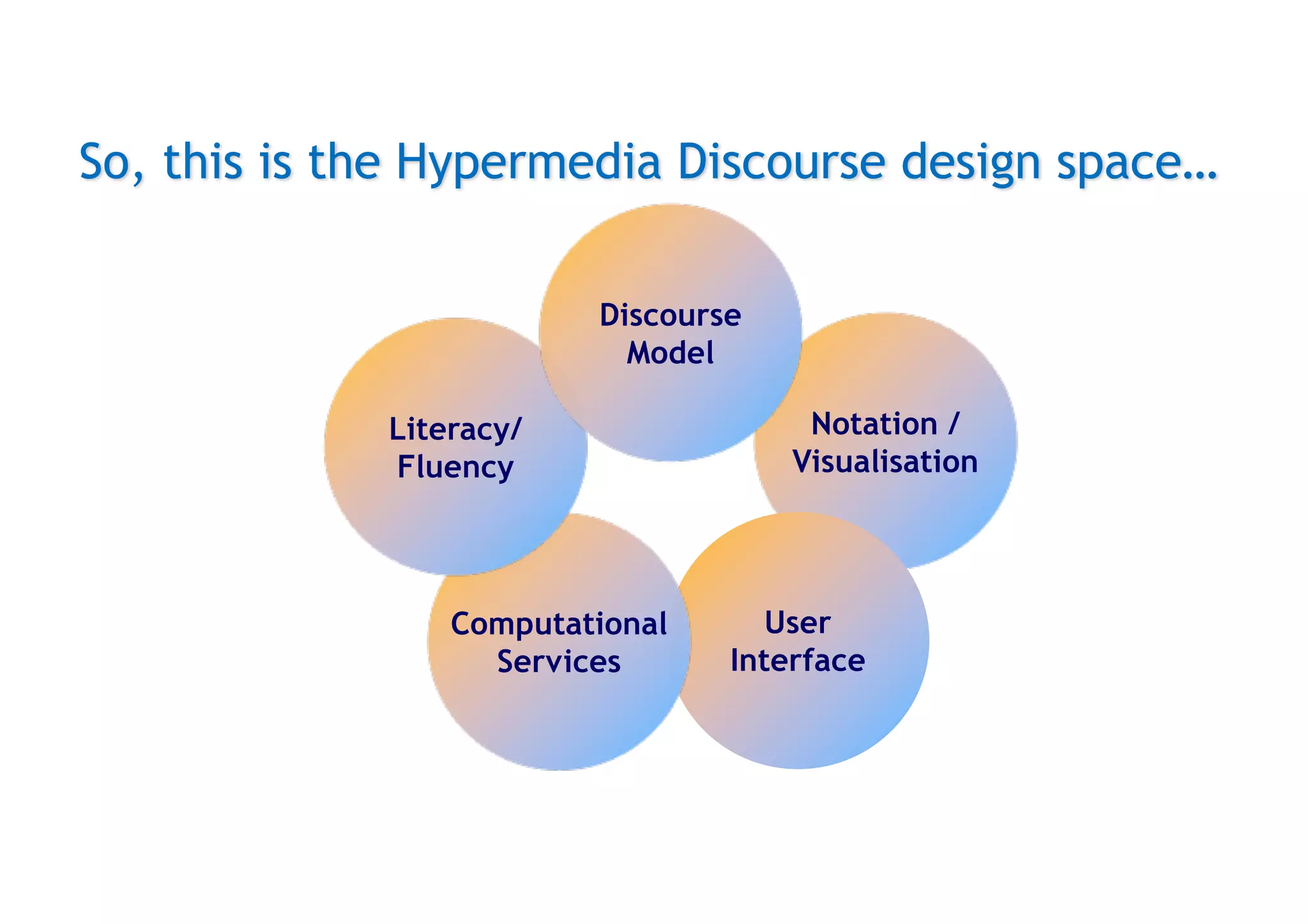

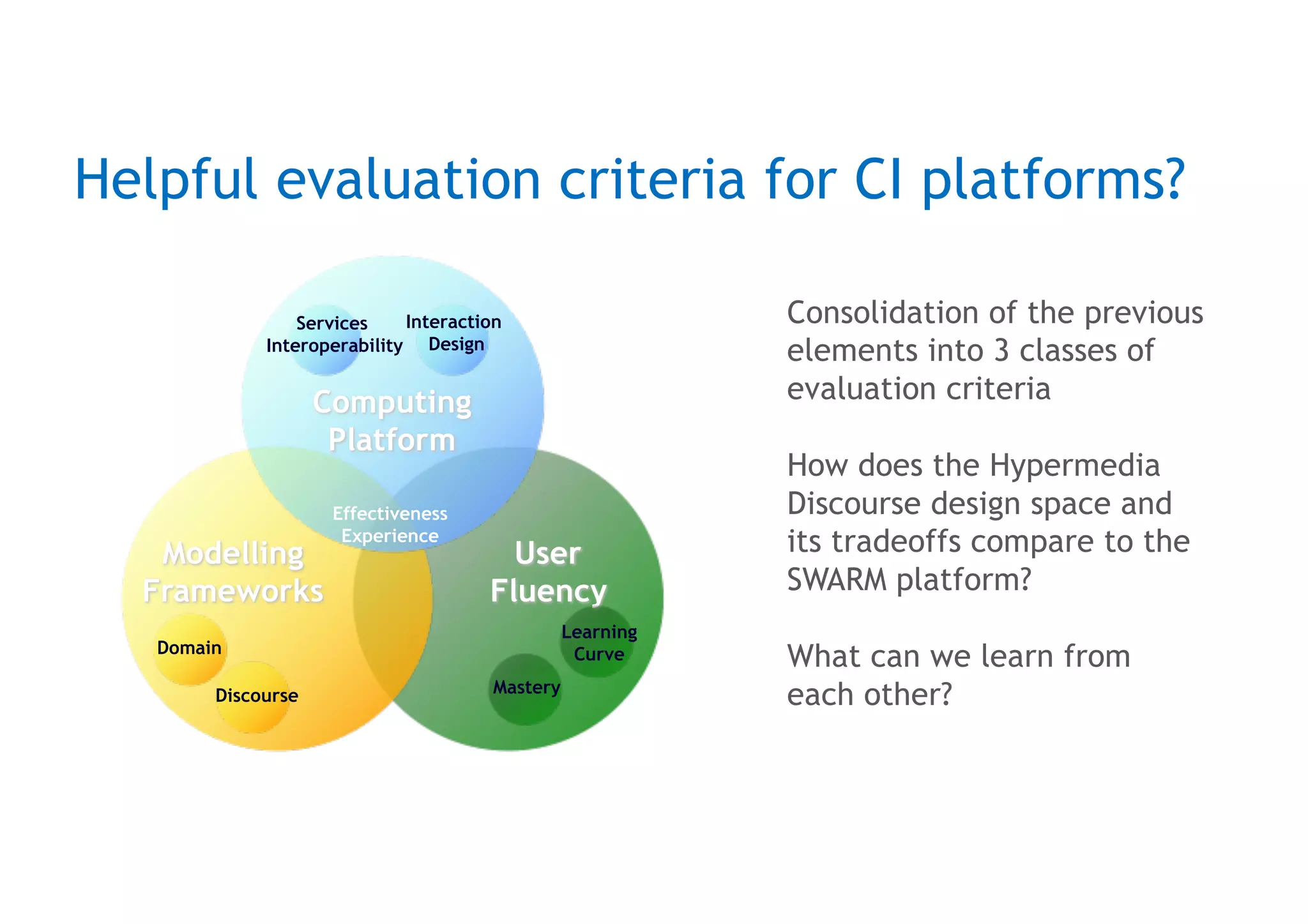

The document discusses contested collective intelligence in the context of wicked problems, emphasizing disagreement as a vital part of the discourse process. It explores the design space for tools that support hypermedia discourse, highlighting both the challenges of synthesizing contributions in collaborative settings and potential solutions to them. It also addresses the need to visualize and structure deliberations effectively in order to improve collective decision-making and participation.