This presentation, “Integrating AI in React Applications”, was prepared by Prof. Jaydeep N. Kale, Assistant Professor, Department of Computer Engineering, Sanjivani College of Engineering, Kopargaon.

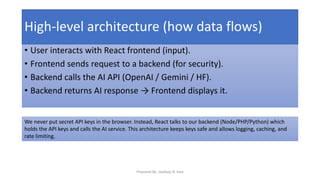

It explains how modern React applications can be enhanced with artificial intelligence by calling hosted AI services such as OpenAI, Google Gemini, and Hugging Face through their APIs.
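All three services are reached the same way from code: an HTTPS POST carrying a JSON body and an API key. What differs is the URL, the header that carries the key, and the shape of the request and response. The TypeScript sketch below only builds each request and reads each reply, without sending anything, so the differences are easy to compare. The model names (`gpt-4o-mini`, `gemini-2.0-flash`, `gpt2`) and the endpoint paths are assumptions based on the providers' public REST documentation. Hugging Face in particular has been moving hosted inference to newer endpoints, so check every URL against the current docs before relying on it.

```typescript
// Providers compared in the presentation.
type Provider = "openai" | "gemini" | "huggingface";

// A provider-neutral description of one HTTP request.
interface AiRequest {
  url: string;
  headers: Record<string, string>;
  body: string; // JSON-encoded payload
}

// Assumed example models; replace with whatever your account supports.
const DEFAULT_MODELS: Record<Provider, string> = {
  openai: "gpt-4o-mini",
  gemini: "gemini-2.0-flash",
  huggingface: "gpt2",
};

// Builds (but does not send) a single-prompt request for the chosen provider.
// The API key must come from server-side configuration, never from React code.
function buildRequest(provider: Provider, prompt: string, apiKey: string): AiRequest {
  const model = DEFAULT_MODELS[provider];
  switch (provider) {
    case "openai":
      // Chat Completions: the prompt goes in a list of role-tagged messages.
      return {
        url: "https://api.openai.com/v1/chat/completions",
        headers: { "Content-Type": "application/json", Authorization: `Bearer ${apiKey}` },
        body: JSON.stringify({ model, messages: [{ role: "user", content: prompt }] }),
      };
    case "gemini":
      // generateContent: the prompt goes in contents[].parts[].text.
      return {
        url: `https://generativelanguage.googleapis.com/v1beta/models/${model}:generateContent`,
        headers: { "Content-Type": "application/json", "x-goog-api-key": apiKey },
        body: JSON.stringify({ contents: [{ parts: [{ text: prompt }] }] }),
      };
    case "huggingface":
      // Classic Inference API (assumed endpoint): the prompt is a plain "inputs" string.
      return {
        url: `https://api-inference.huggingface.co/models/${model}`,
        headers: { "Content-Type": "application/json", Authorization: `Bearer ${apiKey}` },
        body: JSON.stringify({ inputs: prompt }),
      };
    default:
      throw new Error(`Unknown provider: ${String(provider)}`);
  }
}

// Pulls the generated text out of each provider's differently shaped JSON reply.
// Returns "" when the expected field is missing.
function extractText(provider: Provider, json: any): string {
  switch (provider) {
    case "openai":
      return json.choices?.[0]?.message?.content ?? "";
    case "gemini":
      return json.candidates?.[0]?.content?.parts?.[0]?.text ?? "";
    case "huggingface":
      // Text-generation models reply with an array like [{ generated_text: "..." }].
      return json[0]?.generated_text ?? "";
    default:
      throw new Error(`Unknown provider: ${String(provider)}`);
  }
}
```

In a real application this code belongs on the server. Anything bundled into React code, including an API key, can be read by anyone who opens the browser's developer tools.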

The presentation covers:

The basics of React and the concept of AI integration

Real-world examples and architecture overview

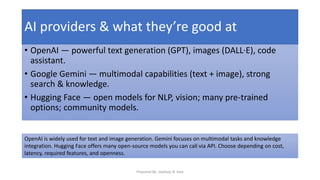

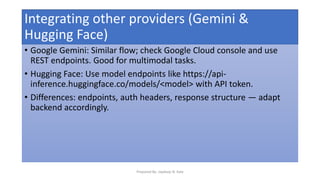

Comparison of AI providers (OpenAI, Gemini, Hugging Face)

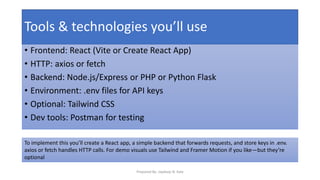

Tools and technologies required for integration

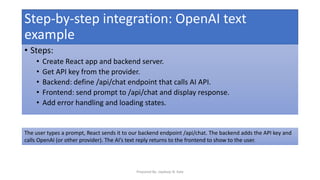

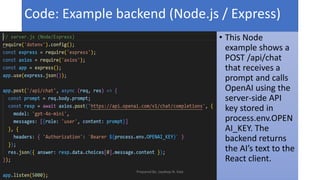

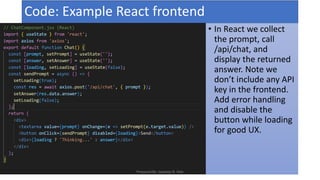

Step-by-step process with code examples (frontend & backend)

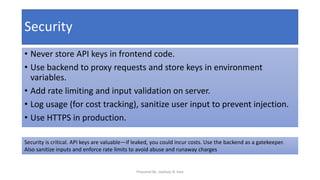

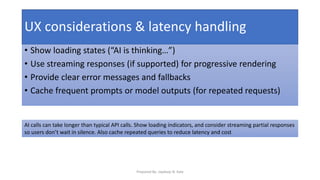

Security best practices and UX considerations
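The frontend/backend steps and the main security practice listed above (keeping the API key off the client) can be sketched in TypeScript as two small functions. The first is a browser-side helper that posts the user's prompt to the app's own backend. The second is a server-side handler that validates the prompt, attaches the secret key, and forwards the request to OpenAI's Chat Completions endpoint. The route `/api/chat`, the names `askAssistant` and `handleChat`, the 2,000-character limit, and the model name are hypothetical choices made for illustration. `fetch` is passed in as a parameter so both functions can be tested without network access. The sketch assumes a runtime with a global `fetch` (modern browsers, Node.js 18+).

```typescript
// Minimal shape of the fetch function we depend on (injected for testability).
type FetchLike = (
  url: string,
  init: { method: string; headers: Record<string, string>; body: string },
) => Promise<{ ok: boolean; status: number; json(): Promise<any> }>;

// Hypothetical limit to reject empty or oversized (and expensive) prompts.
const MAX_PROMPT_CHARS = 2000;

// ---------- Frontend (React side): talks only to our own backend. ----------
async function askAssistant(prompt: string, fetchImpl: FetchLike): Promise<string> {
  const res = await fetchImpl("/api/chat", {
    method: "POST",
    headers: { "Content-Type": "application/json" },
    body: JSON.stringify({ prompt }),
  });
  if (!res.ok) throw new Error(`Request failed with status ${res.status}`);
  const data = await res.json();
  return data.reply as string;
}

// ---------- Backend: holds the secret key and calls the provider. ----------
// Returns an HTTP-style status and JSON body for the server framework to send back.
async function handleChat(
  body: { prompt?: unknown },
  apiKey: string,
  fetchImpl: FetchLike,
): Promise<{ status: number; json: { reply?: string; error?: string } }> {
  // Validate untrusted input before spending API credits on it.
  const prompt = typeof body.prompt === "string" ? body.prompt.trim() : "";
  if (prompt.length === 0 || prompt.length > MAX_PROMPT_CHARS) {
    return { status: 400, json: { error: `Prompt must be 1 to ${MAX_PROMPT_CHARS} characters.` } };
  }
  const res = await fetchImpl("https://api.openai.com/v1/chat/completions", {
    method: "POST",
    headers: { "Content-Type": "application/json", Authorization: `Bearer ${apiKey}` },
    body: JSON.stringify({ model: "gpt-4o-mini", messages: [{ role: "user", content: prompt }] }),
  });
  if (!res.ok) {
    // Return a generic message instead of forwarding the provider's raw error.
    return { status: 502, json: { error: "AI service unavailable." } };
  }
  const data = await res.json();
  return { status: 200, json: { reply: data.choices?.[0]?.message?.content ?? "" } };
}
```

In a React component, `askAssistant(input, fetch)` would typically be called from an event handler, with a loading indicator shown while the promise is pending and an error message if it rejects. `handleChat` would be wired into whichever server framework the project uses (for example, an Express route), with the key read from an environment variable such as the hypothetical `process.env.OPENAI_API_KEY`.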

Objective:

To demonstrate how frontend developers can create intelligent, interactive web applications by connecting React with modern AI APIs securely and efficiently.

Prepared for academic and learning purposes as part of the Web Technology module.