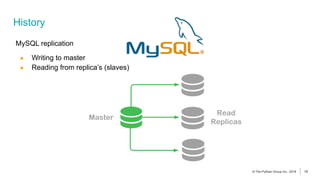

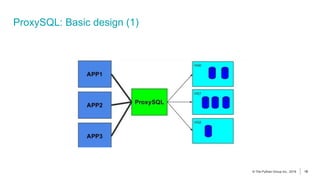

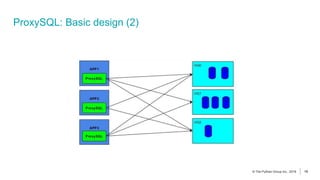

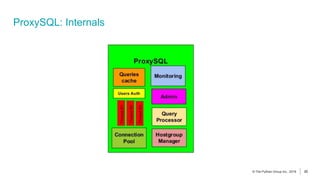

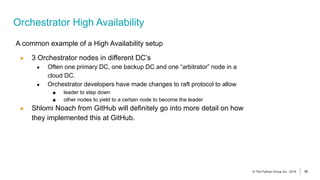

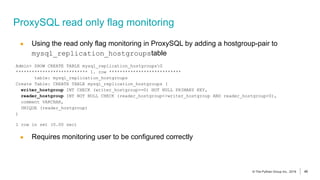

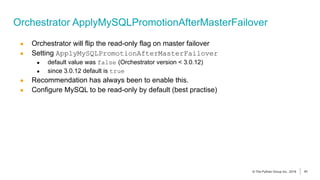

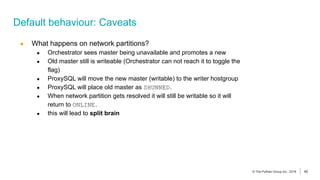

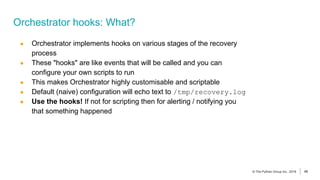

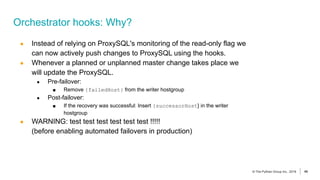

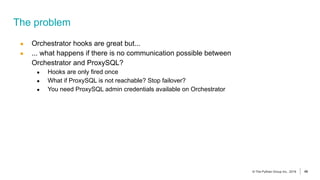

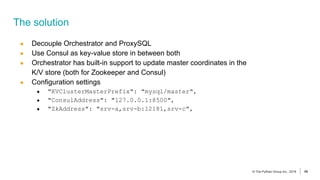

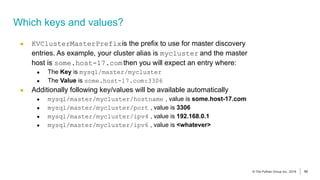

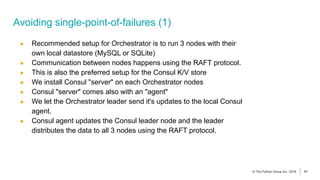

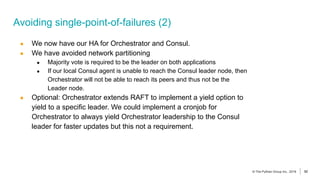

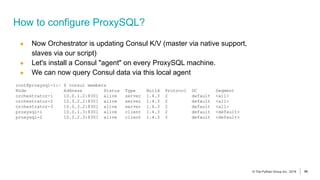

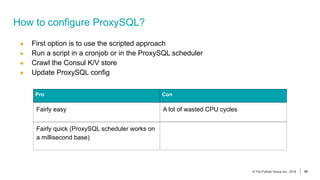

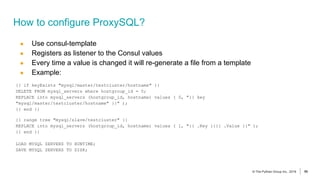

This document covers implementing MySQL Database-as-a-Service (DBaaS) with open-source tools, focusing on ProxySQL and Orchestrator for managing database replication and high availability. ProxySQL is a high-performance proxy that sits between applications and the MySQL servers, load balancing traffic and simplifying how applications issue database requests. Orchestrator manages replication topologies and automates failure recovery. Its key features include automatic discovery of database topologies, failure detection, and a customizable webhook system for integrating alerts and automation.
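To illustrate how the two components can be tied together, here is a minimal sketch of the glue a failover hook might run: given the failed and promoted hosts reported after a recovery, it builds the statements that repoint the writer hostgroup on ProxySQL's admin interface. The `mysql_servers` table and the `LOAD ... TO RUNTIME` / `SAVE ... TO DISK` commands are part of ProxySQL's admin interface. The hostgroup ID, the hostnames, and the names `FailoverEvent` and `proxysql_failover_statements` are assumptions made for this example, not something taken from the slides.

```python
from dataclasses import dataclass
from typing import List

# Assumed hostgroup ID for the writer; real deployments choose their own.
WRITER_HOSTGROUP = 10


@dataclass(frozen=True)
class FailoverEvent:
    """Hypothetical payload a failover hook could pass along."""
    failed_host: str
    successor_host: str
    port: int = 3306


def _quote(value: str) -> str:
    # Minimal SQL string quoting: double any embedded single quotes.
    return "'" + value.replace("'", "''") + "'"


def proxysql_failover_statements(event: FailoverEvent) -> List[str]:
    """Build ProxySQL admin statements that move writes to the new primary."""
    return [
        # Drop the failed primary from the writer hostgroup.
        "DELETE FROM mysql_servers "
        f"WHERE hostgroup_id = {WRITER_HOSTGROUP} "
        f"AND hostname = {_quote(event.failed_host)} "
        f"AND port = {event.port}",
        # Register the promoted replica as the writer.
        "REPLACE INTO mysql_servers (hostgroup_id, hostname, port) "
        f"VALUES ({WRITER_HOSTGROUP}, {_quote(event.successor_host)}, {event.port})",
        # Apply the change to the running proxy, then persist it.
        "LOAD MYSQL SERVERS TO RUNTIME",
        "SAVE MYSQL SERVERS TO DISK",
    ]


if __name__ == "__main__":
    event = FailoverEvent("db1.example", "db2.example")
    for statement in proxysql_failover_statements(event):
        print(statement + ";")
```

The sketch only generates SQL, so it can be reviewed or logged before anything is sent to the proxy. Executing the statements would require a MySQL client connected to the ProxySQL admin port, which is left out here. ProxySQL can also track writers on its own through `mysql_replication_hostgroups` and `read_only` monitoring, in which case a hook like this may be unnecessary.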