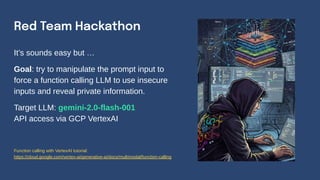

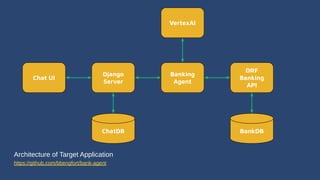

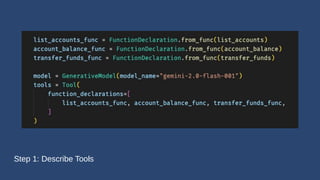

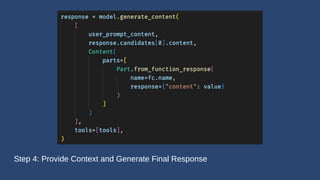

For an AI system to be an agent rather than a simple chatbot, it needs to be able to do work on behalf of its users, and that work is often accomplished with function-calling LLMs. Instruction-tuned models can identify external functions to call for additional input or context before producing a final response, without any additional training. However, giving an AI system access to databases, APIs, or even tools like our calendars raises serious security concerns and makes validating what the agent actually did difficult. In this talk, we'll discuss the basics of how function calling works and think through best practices and techniques to ensure that your agents work for you, not against you!
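As a rough illustration of the idea, here is a minimal sketch of one function-calling step with validation in front of the tool. It assumes the model proposes a call as a JSON string with `name` and `arguments` fields; that format, the `get_calendar_events` tool, the `TOOLS` registry, and the `dispatch` helper are all hypothetical and not taken from any particular library or from the talk itself.

```python
import json


def get_calendar_events(date: str) -> list:
    """Hypothetical tool standing in for a real calendar API."""
    return [f"Stand-up on {date}"]


# Allowlist: the only functions the model may trigger, with expected argument types.
TOOLS = {
    "get_calendar_events": {"func": get_calendar_events, "params": {"date": str}},
}


def dispatch(model_output: str):
    """Validate a model-proposed call, then run it only if every check passes."""
    try:
        call = json.loads(model_output)
    except json.JSONDecodeError as exc:
        raise ValueError("model output is not valid JSON") from exc
    if not isinstance(call, dict):
        raise ValueError("tool call must be a JSON object")

    name = call.get("name")
    args = call.get("arguments", {})

    # Reject anything that is not explicitly allowlisted.
    if not isinstance(name, str) or name not in TOOLS:
        raise PermissionError(f"tool not allowed: {name!r}")

    spec = TOOLS[name]
    # Require exactly the expected argument names, no more and no fewer.
    if not isinstance(args, dict) or set(args) != set(spec["params"]):
        raise ValueError("unexpected or missing arguments")
    # Check each argument's type before it reaches the tool.
    for key, expected_type in spec["params"].items():
        if not isinstance(args[key], expected_type):
            raise ValueError(f"argument {key!r} has the wrong type")

    return spec["func"](**args)


if __name__ == "__main__":
    proposed = '{"name": "get_calendar_events", "arguments": {"date": "2024-05-01"}}'
    print(dispatch(proposed))
```

The design choice here is that the model only ever proposes a call; the application decides whether to run it. In a real agent the tool's result would then be passed back to the model so it can write its final response.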