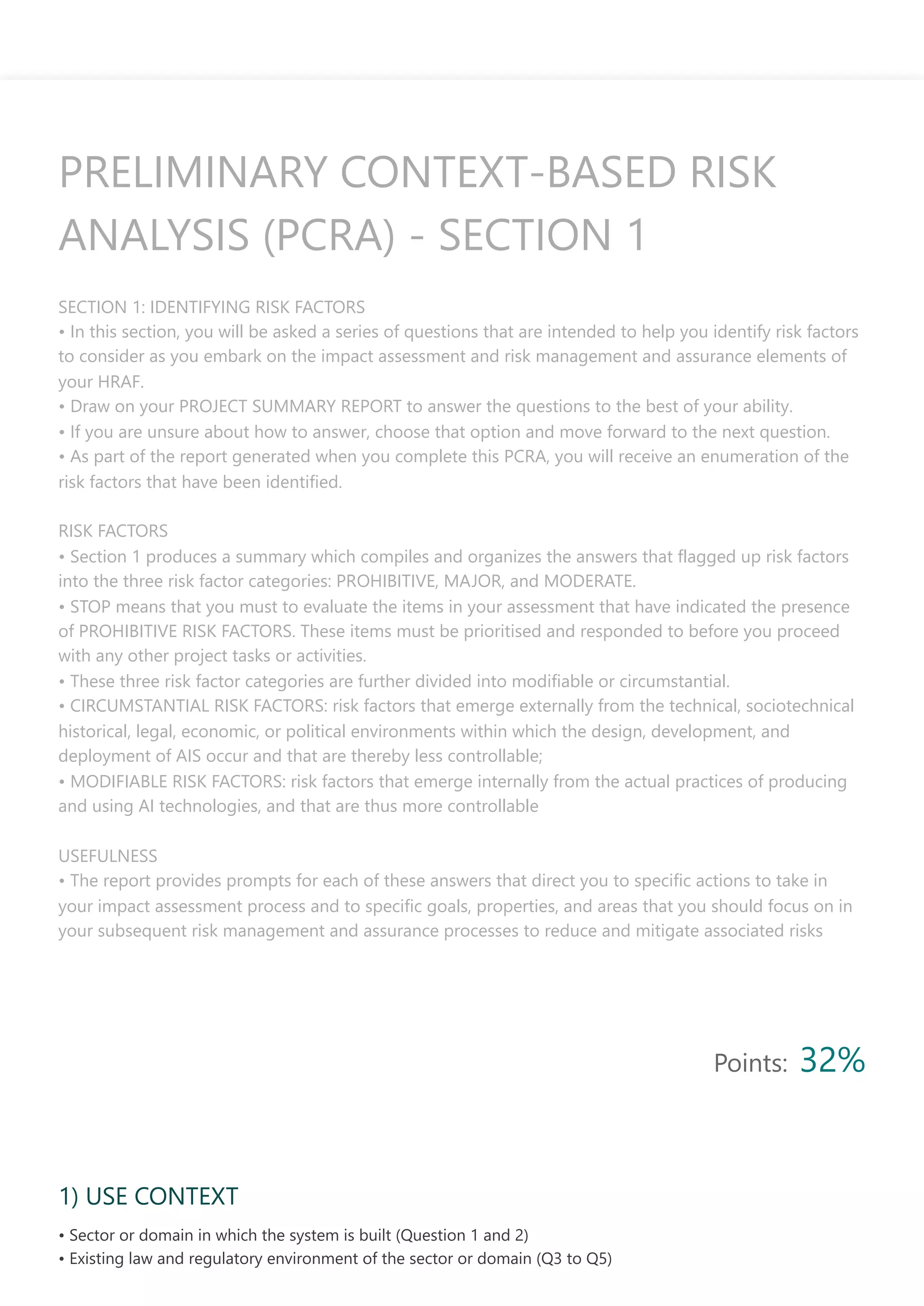

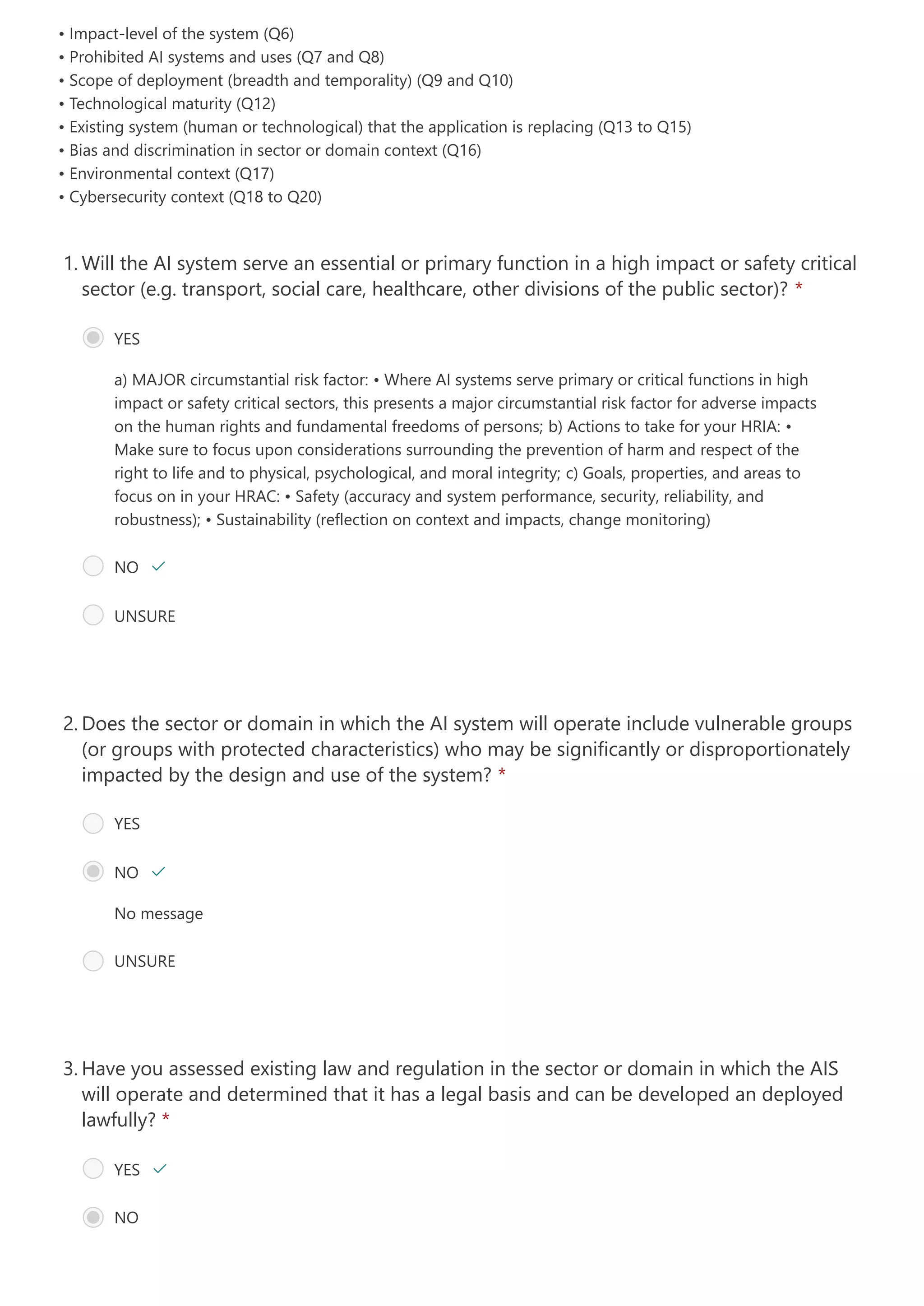

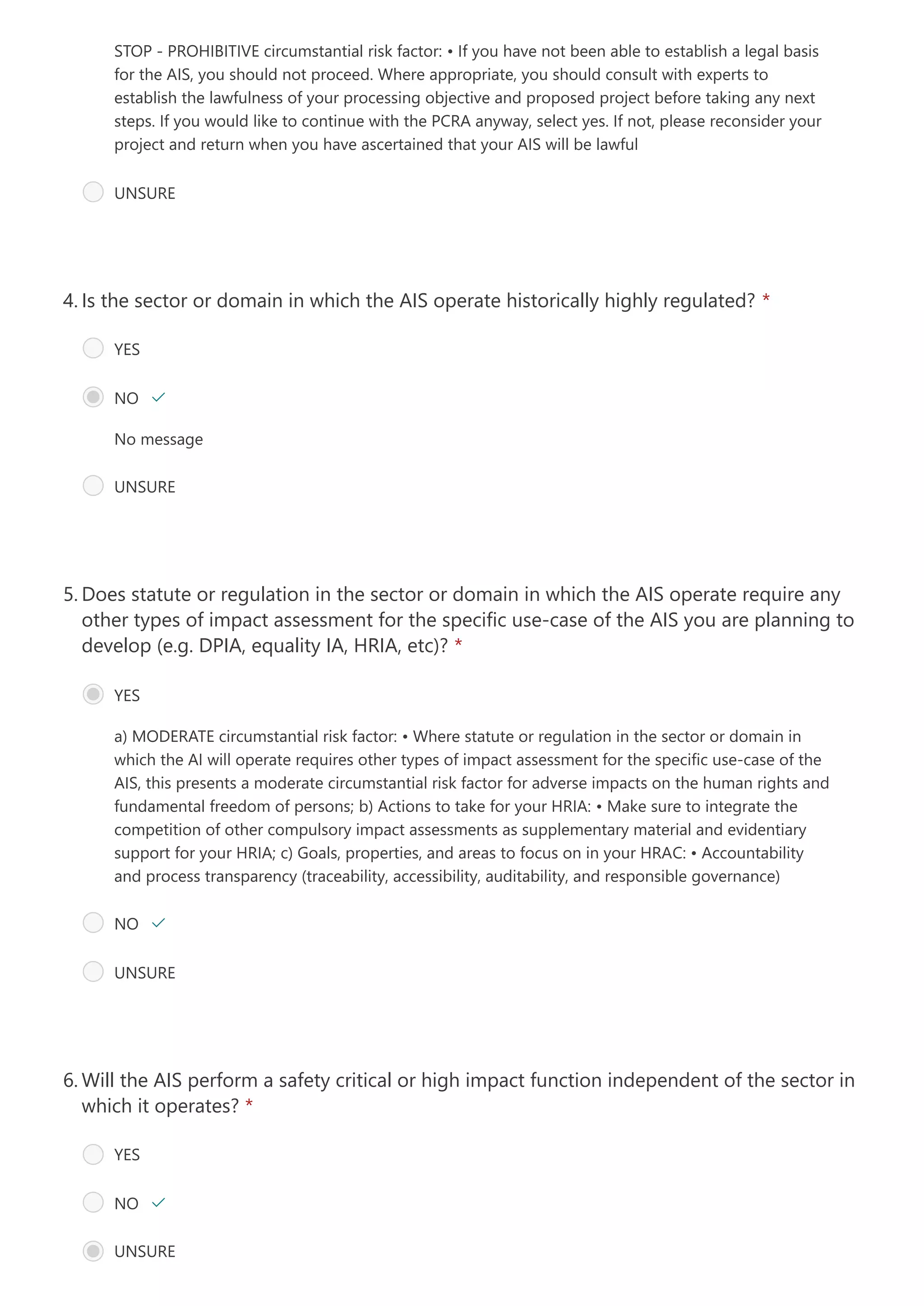

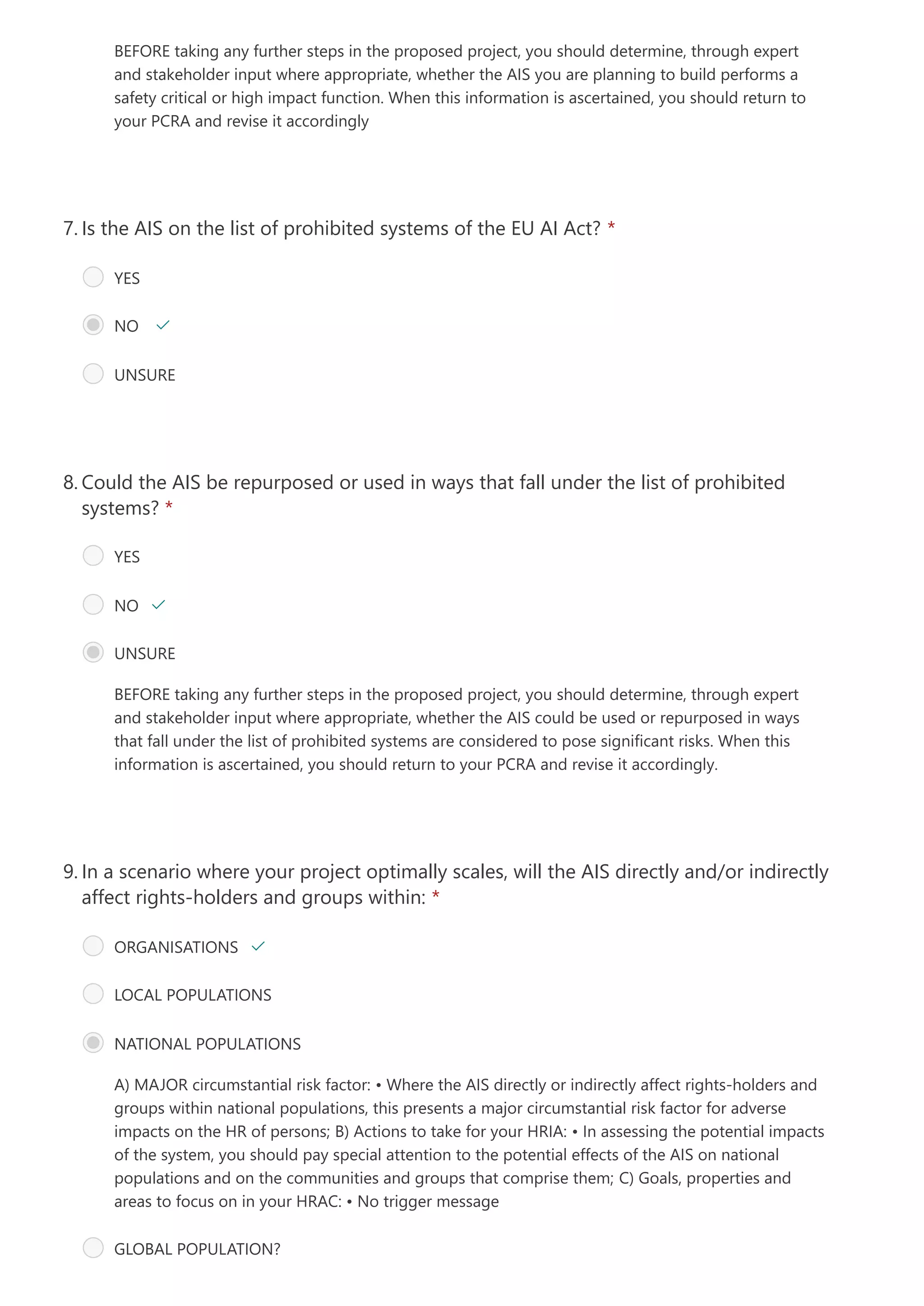

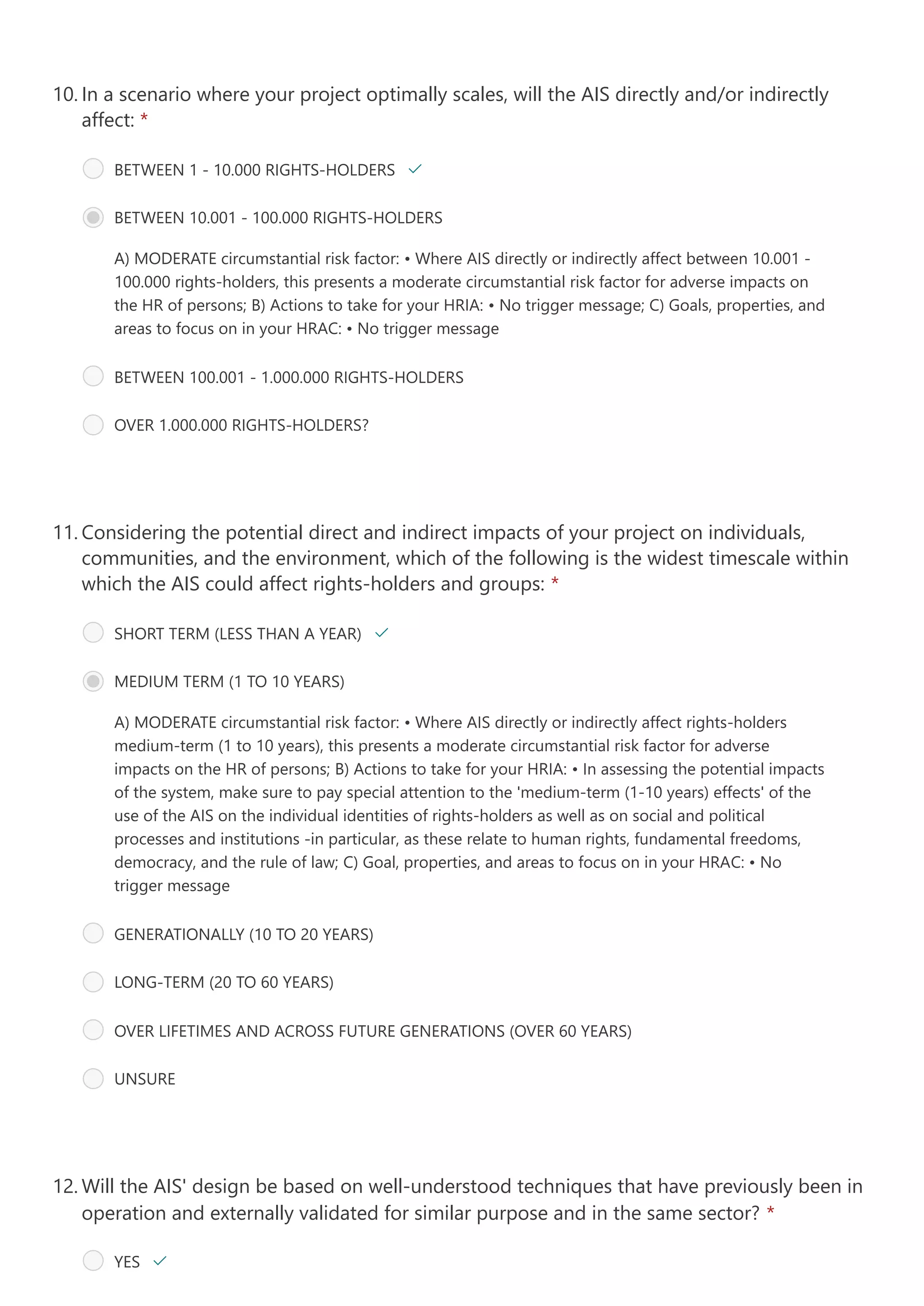

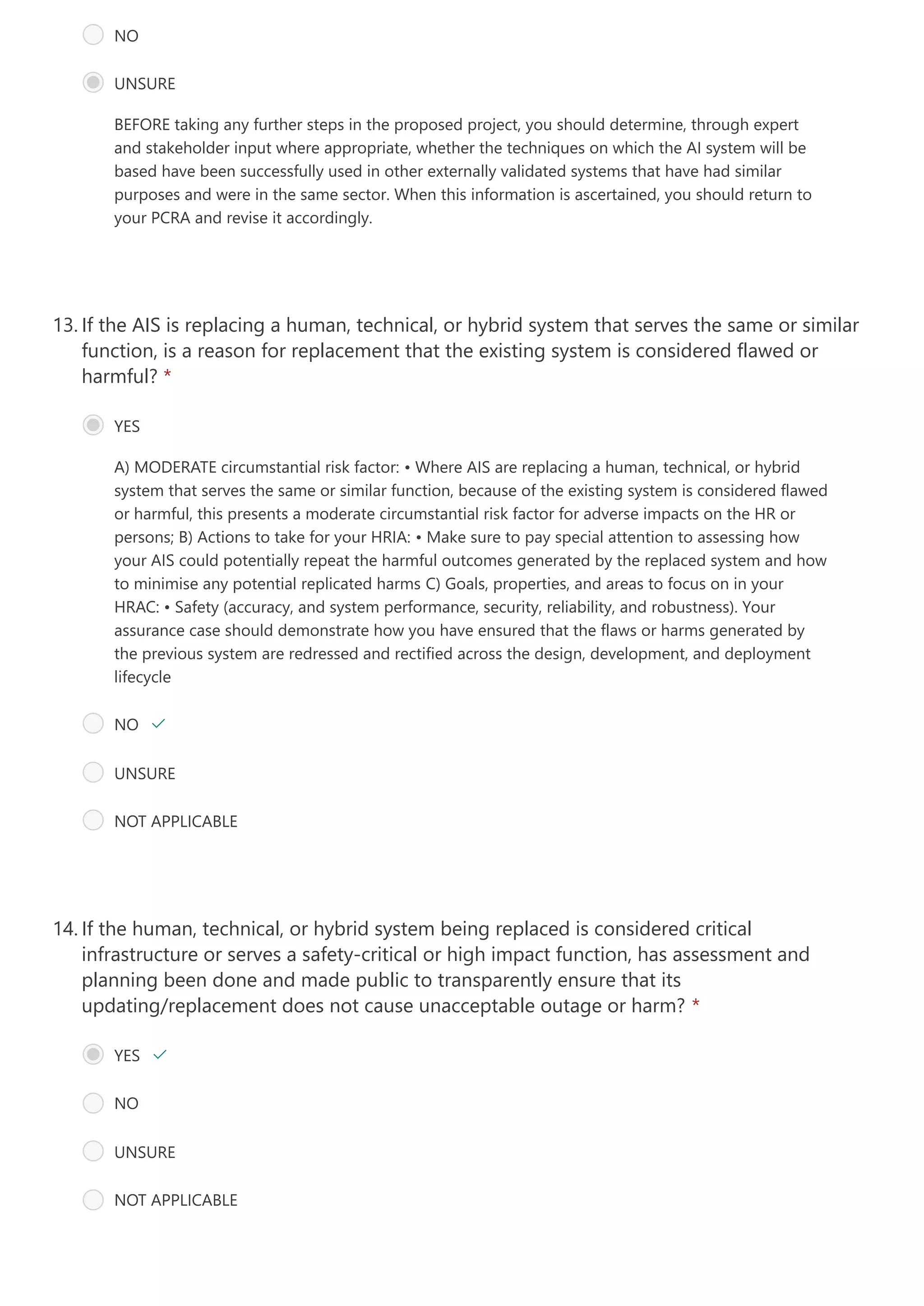

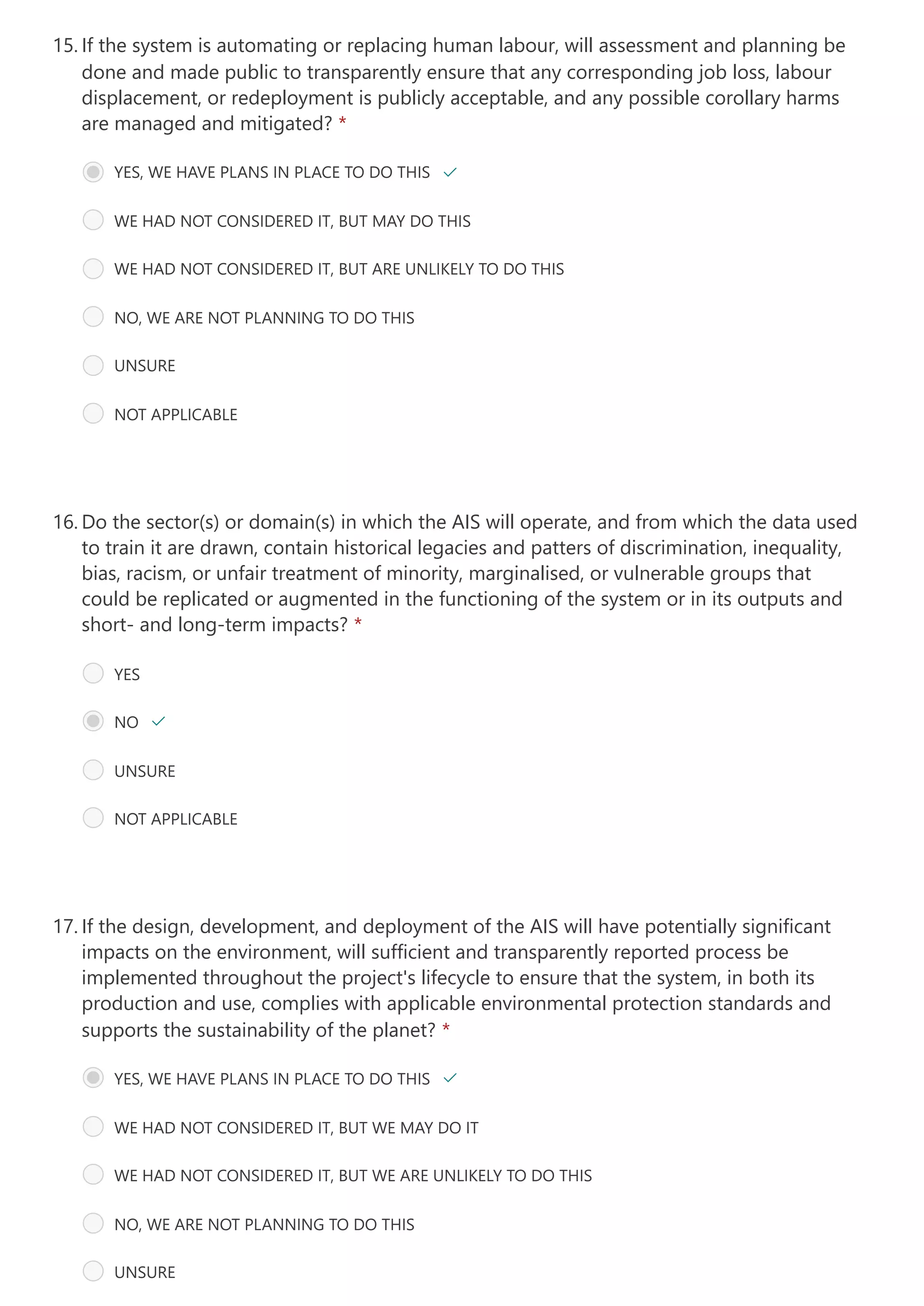

The document provides guidance for conducting a preliminary context-based risk analysis (PCRA) for AI systems. The analysis identifies potential risk factors through a series of questions and categorizes each one as prohibitive, major, or moderate. Prohibitive risk factors require immediate attention before the project continues. The questions aim to identify circumstantial and modifiable risk factors related to the system's context, impacts, and techniques. The responses provide prompts that guide subsequent impact assessment and risk management.
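The triage logic described above can be sketched in a few lines of Python. This is only an illustrative sketch, not the document's own procedure: the class names, the `triage` and `may_proceed` helpers, and the sample questions are all hypothetical, and the real PCRA question set and its category assignments are not reproduced here.

```python
from dataclasses import dataclass
from enum import Enum


class Severity(Enum):
    PROHIBITIVE = "prohibitive"
    MAJOR = "major"
    MODERATE = "moderate"


@dataclass(frozen=True)
class Question:
    text: str           # hypothetical wording, not taken from the PCRA
    severity: Severity  # category assigned when the answer flags a risk
    kind: str           # "circumstantial" or "modifiable"


def triage(questions, answers):
    """Group flagged risk factors by severity.

    `answers` maps question text to True when the risk factor is present.
    Unanswered questions are treated as not flagged (an assumption).
    """
    flagged = {severity: [] for severity in Severity}
    for question in questions:
        if answers.get(question.text, False):
            flagged[question.severity].append(question)
    return flagged


def may_proceed(flagged):
    """A project may continue only if no prohibitive factor was flagged."""
    return not flagged[Severity.PROHIBITIVE]


# Hypothetical example questions, for illustration only.
QUESTIONS = [
    Question("Is the intended use legally prohibited?", Severity.PROHIBITIVE, "circumstantial"),
    Question("Does the system affect vulnerable groups?", Severity.MAJOR, "circumstantial"),
    Question("Is the model difficult to interpret?", Severity.MODERATE, "modifiable"),
]

ANSWERS = {
    "Does the system affect vulnerable groups?": True,
    "Is the model difficult to interpret?": True,
}

RESULT = triage(QUESTIONS, ANSWERS)
```

In this sketch, `may_proceed(RESULT)` is true because no prohibitive factor was flagged, while the flagged major and moderate factors remain available as prompts for later impact assessment and risk management.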