1. The document discusses strategies for moving an existing system to microservices, containers, and cloud-native technologies such as Kubernetes incrementally, rather than through a complete rewrite.

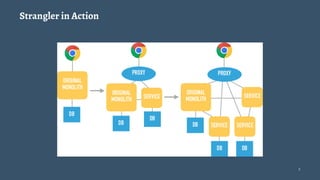

2. It recommends the "strangler" pattern: new features are written in the new framework and parts of the existing system are replaced gradually, so that the legacy application is slowly subsumed.

3. Challenges discussed include underestimating the existing system, moving too fast, dealing with changes to tools and processes, and ensuring developers and operations teams have the right skills for the transition.
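
The strangler pattern from point 2 is often implemented as a routing facade that sits in front of both systems. The sketch below is a minimal illustration of that idea and is not taken from the document: `StranglerFacade`, `legacy_app`, `new_orders_service`, and the `/orders` and `/billing` paths are all hypothetical names chosen for the example. In a real deployment this role would more likely be played by a reverse proxy, API gateway, or Kubernetes ingress than by application code.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict

# A handler takes a request path and returns a response body.
Handler = Callable[[str], str]


def legacy_app(path: str) -> str:
    """Stand-in for the existing monolith (hypothetical)."""
    return f"legacy handled {path}"


def new_orders_service(path: str) -> str:
    """Stand-in for a newly written microservice (hypothetical)."""
    return f"new service handled {path}"


@dataclass
class StranglerFacade:
    """Routes each request to a migrated service if one exists,
    otherwise falls back to the legacy application."""

    legacy: Handler
    migrated: Dict[str, Handler] = field(default_factory=dict)

    def migrate(self, prefix: str, handler: Handler) -> None:
        # Register a path prefix as now owned by the new system.
        self.migrated[prefix] = handler

    def handle(self, path: str) -> str:
        # Check longer prefixes first so the most specific route wins.
        for prefix in sorted(self.migrated, key=len, reverse=True):
            base = prefix.rstrip("/")
            if path == prefix or path.startswith(base + "/"):
                return self.migrated[prefix](path)
        # Anything not yet migrated still goes to the legacy system.
        return self.legacy(path)


if __name__ == "__main__":
    facade = StranglerFacade(legacy=legacy_app)
    print(facade.handle("/orders/42"))   # served by legacy at first
    facade.migrate("/orders", new_orders_service)
    print(facade.handle("/orders/42"))   # now served by the new service
    print(facade.handle("/billing/7"))   # untouched routes stay on legacy
```

Callers only ever talk to the facade, so each slice of functionality can be moved (or rolled back) by changing one routing entry, which is what lets the migration proceed gradually instead of as a single cutover.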