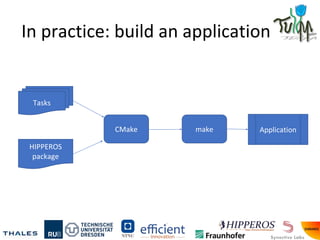

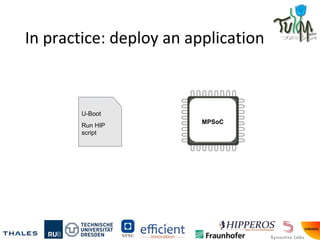

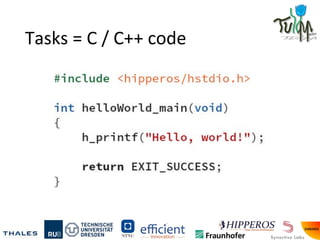

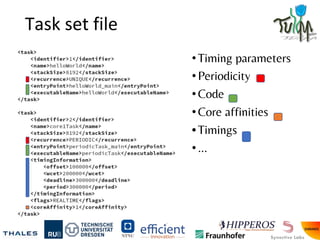

This document summarizes a workshop on the Tulipp project, which aims to develop ubiquitous low-power image processing platforms. The workshop reviewed the shortcomings of existing platforms, introduced the Maestro real-time operating system as the reference platform, and described the Tulipp concept: an operating system and tools that support heterogeneous architectures, including FPGAs and multi-core processors. In hands-on labs, attendees built applications with Maestro, used OpenMP to parallelize code, and used the SDSoC tools to automatically accelerate functions in FPGA hardware.
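To give a flavor of the OpenMP part of the labs, the following is a minimal sketch of how a per-pixel image operation might be parallelized across CPU cores. It is not taken from the workshop material: the function name `rgb_to_gray`, the interleaved RGB layout, and the integer luma weights are all assumptions chosen for illustration.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Hypothetical example, not workshop code.
 * Converts an interleaved 8-bit RGB image to 8-bit grayscale.
 * Each output pixel depends only on its own input pixel, so the rows
 * can be processed independently by different threads. */
void rgb_to_gray(const uint8_t *rgb, uint8_t *gray, int width, int height)
{
    assert(rgb != NULL && gray != NULL && width >= 0 && height >= 0);

    /* Split the rows among the available threads. If the compiler is
     * run without OpenMP support, the pragma is ignored and the loop
     * simply runs serially. */
    #pragma omp parallel for
    for (int y = 0; y < height; y++) {
        for (int x = 0; x < width; x++) {
            size_t i = (size_t)y * (size_t)width + (size_t)x;
            const uint8_t *p = &rgb[3 * i];
            /* Integer approximation of the luma weights
             * (0.299, 0.587, 0.114), scaled by 256: 77 + 150 + 29 = 256. */
            gray[i] = (uint8_t)((77 * p[0] + 150 * p[1] + 29 * p[2]) >> 8);
        }
    }
}
```

With GCC, OpenMP is enabled by the `-fopenmp` flag. A loop of this shape, with no dependencies between iterations, is also the kind of function that is typically a candidate for hardware acceleration, although how SDSoC would handle it depends on the tool configuration and is not shown here.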