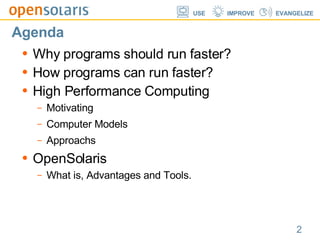

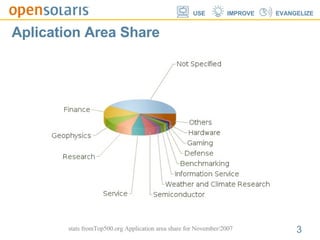

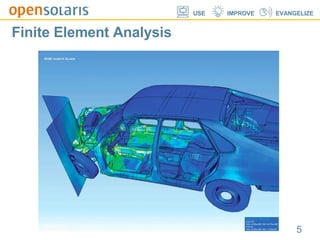

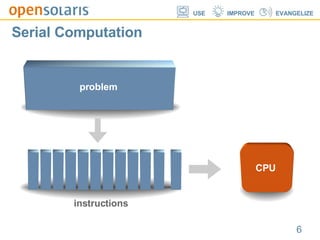

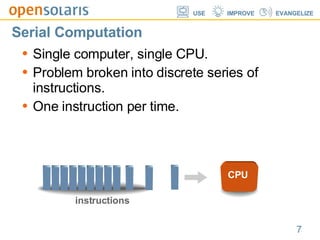

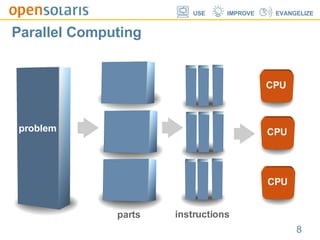

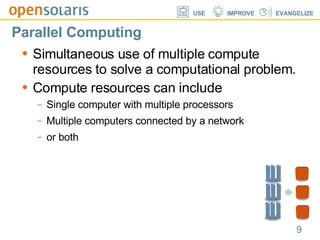

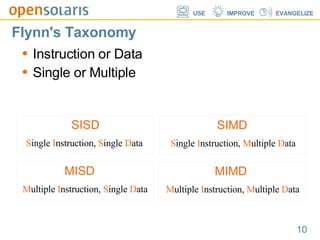

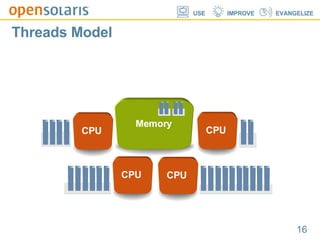

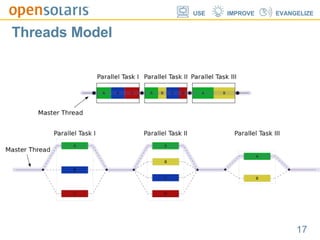

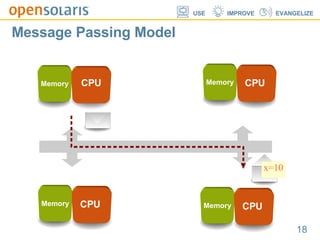

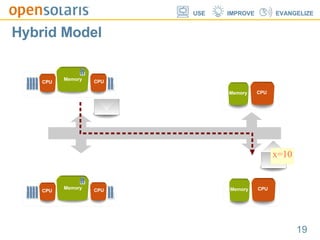

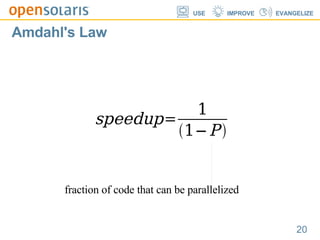

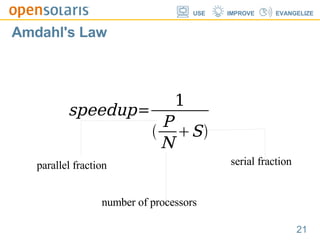

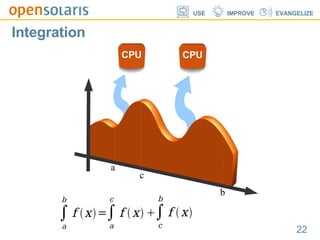

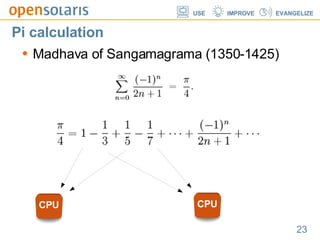

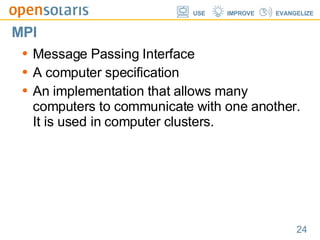

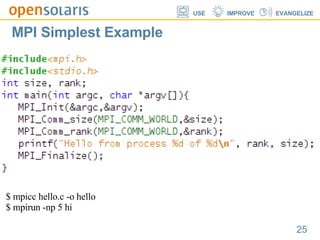

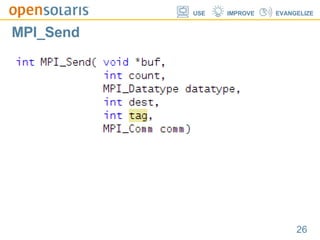

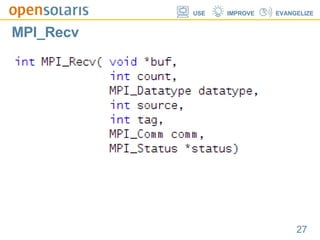

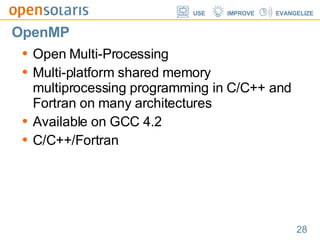

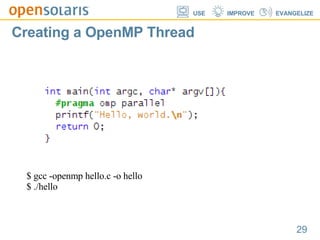

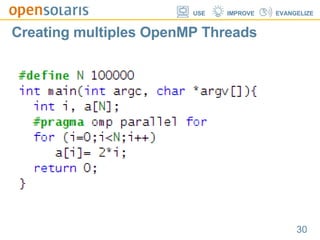

This presentation covers high-performance computing: why programs need to run faster and how to get there, chiefly through parallel computing. It introduces the principles of parallel programming models, including shared memory and message passing, and describes tools such as Open MPI and OpenMP. It also points to resources and applications of high-performance computing, particularly in scientific fields such as computational fluid dynamics and finite element analysis.
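The difference between the two programming models can be illustrated with a minimal sketch. It is not taken from the slides: the function names `shared_memory_sum` and `message_passing_sum` are hypothetical, and Python threads merely stand in for OpenMP threads or MPI processes (real message-passing processes would not share an address space at all).

```python
import threading
import queue


def shared_memory_sum(data, workers=4):
    """Shared memory: every worker updates one variable, guarded by a lock."""
    total = [0]                      # state visible to all workers
    lock = threading.Lock()

    def work(chunk):
        partial = sum(chunk)
        with lock:                   # serialize updates to the shared total
            total[0] += partial

    threads = [threading.Thread(target=work, args=(data[i::workers],))
               for i in range(workers)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return total[0]


def message_passing_sum(data, workers=4):
    """Message passing: workers keep private state and send results as messages."""
    inbox = queue.Queue()            # the only channel between workers and caller

    def work(chunk):
        inbox.put(sum(chunk))        # send the partial result, share nothing else

    threads = [threading.Thread(target=work, args=(data[i::workers],))
               for i in range(workers)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    # Receive exactly one message per worker and combine them.
    return sum(inbox.get() for _ in range(workers))
```

In the first function the lock is what keeps concurrent updates correct; in the second, correctness comes from the workers never touching common data.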

![SIMD Single Instruction, Multiple Data LOAD A[0] LOAD B[0] C[0] = A[0]+B[0] STORE C[0] LOAD A[1] LOAD B[1] C[1] = A[1]+B[1] STORE C[1] LOAD A[n] LOAD B[n] C[n] = A[n]+B[n] STORE C[n] CPU 1 CPU 2 CPU n](https://image.slidesharecdn.com/hpcandopensolaris-1208375162036270-8/85/High-Performance-Computing-and-OpenSolaris-12-320.jpg)
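The SIMD slide's pattern, the same `C[i] = A[i] + B[i]` step applied to different elements on each CPU, can be sketched as follows. This is an illustration under assumptions: `parallel_add` and `add_chunk` are hypothetical names, and a thread pool only mimics the pattern, whereas hardware SIMD executes one vector instruction over several elements at once.

```python
from concurrent.futures import ThreadPoolExecutor


def add_chunk(a, b, start, stop):
    """Apply the single instruction C[i] = A[i] + B[i] to one slice of the data."""
    return [a[i] + b[i] for i in range(start, stop)]


def parallel_add(a, b, workers=4):
    """Split the index range into chunks and run the same operation on each."""
    if len(a) != len(b):
        raise ValueError("input arrays must have the same length")
    n = len(a)
    step = max(1, -(-n // workers))              # ceiling division, at least 1
    bounds = [(s, min(s + step, n)) for s in range(0, n, step)]
    c = []
    with ThreadPoolExecutor(max_workers=workers) as pool:
        # map() yields results in submission order, so C stays aligned with A and B.
        for part in pool.map(lambda se: add_chunk(a, b, *se), bounds):
            c.extend(part)
    return c
```

Every worker runs identical code; only the slice of data it receives differs.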

![MISD Multiple Instruction, Single Data LOAD A[0] C[0] = A[0] *1 STORE C[0] LOAD A[1] C[1] = A[1] *2 STORE C[1] LOAD A[n] C[n] = A[n] *n STORE C[n] CPU 1 CPU 2 CPU n](https://image.slidesharecdn.com/hpcandopensolaris-1208375162036270-8/85/High-Performance-Computing-and-OpenSolaris-13-320.jpg)
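In Flynn's taxonomy, MISD is usually described as several instruction streams acting on a single data stream. The sketch below follows that reading and reuses the slide's multipliers (`*1`, `*2`, ..., `*n`) as the differing instructions; `misd_apply` is a hypothetical name, and threads again only stand in for separate CPUs.

```python
from concurrent.futures import ThreadPoolExecutor


def misd_apply(value, multipliers):
    """Run a different operation per worker, all on the same input value."""
    # Bind each multiplier as a default argument so every lambda keeps its own m.
    ops = [lambda x, m=m: x * m for m in multipliers]
    with ThreadPoolExecutor(max_workers=max(1, len(ops))) as pool:
        futures = [pool.submit(op, value) for op in ops]
        # Collect in submission order: result k belongs to multiplier k.
        return [f.result() for f in futures]
```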

![MIMD Multiple Instruction, Multiple Data LOAD A[0] C[0] = A[0] *1 STORE C[0] X=sqrt(2) C[1] = A[1] *X method(C[1]); something(); W = C[n]**X C[n] = 1/W CPU 1 CPU 2 CPU n](https://image.slidesharecdn.com/hpcandopensolaris-1208375162036270-8/85/High-Performance-Computing-and-OpenSolaris-14-320.jpg)
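A MIMD arrangement, where each CPU runs its own instructions on its own data, might look like the sketch below. The three task functions loosely mirror the operations on the slide (`*1`, `*sqrt(2)`, and `1/W` with `W = x**X`), but their names, their grouping per worker, and the use of an input element in place of `C[n]` are assumptions made for illustration.

```python
import math
from concurrent.futures import ThreadPoolExecutor


def scale_by_one(x):
    """First worker: C = A * 1."""
    return x * 1


def scale_by_sqrt2(x):
    """Second worker: X = sqrt(2); C = A * X."""
    return x * math.sqrt(2)


def inverse_power(x):
    """Third worker: W = x ** sqrt(2); C = 1 / W."""
    return 1 / (x ** math.sqrt(2))


def run_mimd(a):
    """Run three unrelated tasks concurrently, each on a different element of a."""
    tasks = [(scale_by_one, a[0]), (scale_by_sqrt2, a[1]), (inverse_power, a[2])]
    with ThreadPoolExecutor(max_workers=len(tasks)) as pool:
        futures = [pool.submit(fn, x) for fn, x in tasks]
        return [f.result() for f in futures]
```

Unlike the SIMD case, neither the code nor the data is shared between workers, which is the general situation on a cluster of independent machines.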

![Thank you! José Maria Silveira Neto, Sun Campus Ambassador. [email_address] http://silveiraneto.net "open" artwork and icons by chandan: http://blogs.sun.com/chandan](https://image.slidesharecdn.com/hpcandopensolaris-1208375162036270-8/85/High-Performance-Computing-and-OpenSolaris-40-320.jpg)