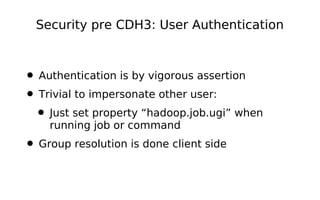

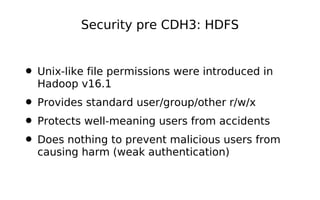

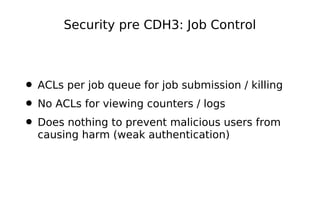

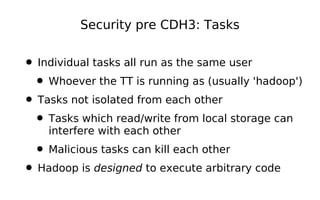

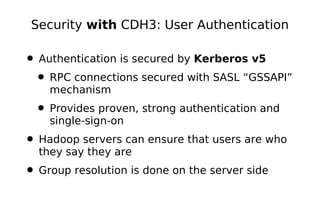

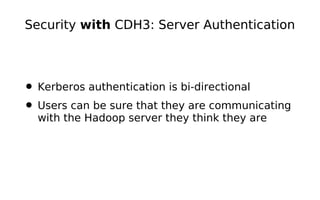

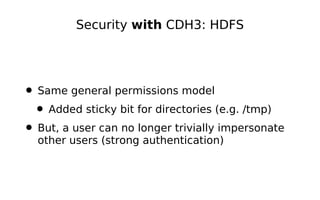

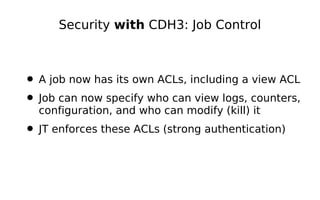

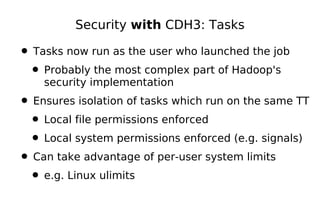

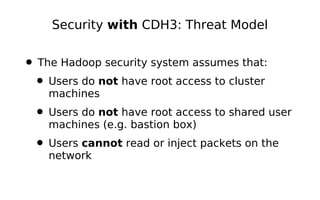

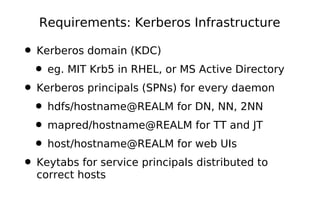

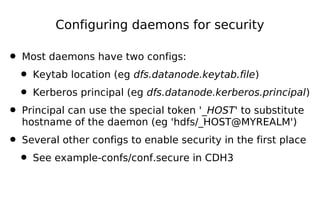

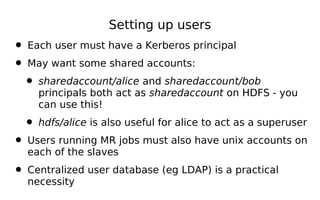

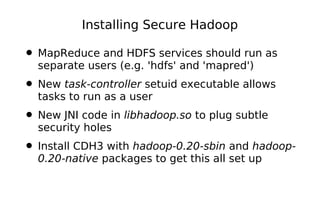

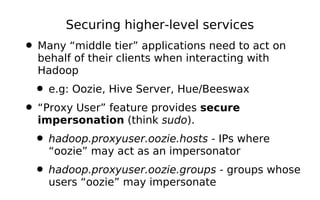

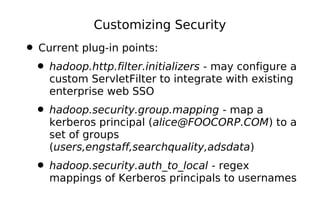

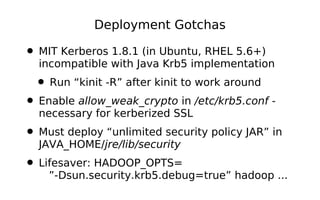

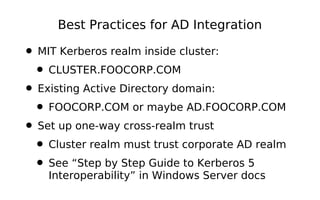

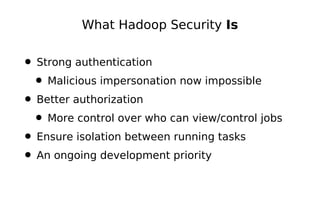

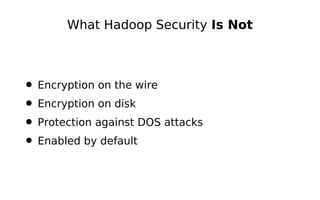

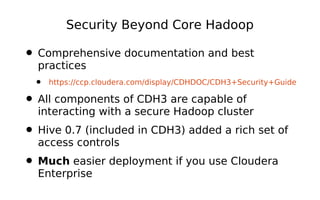

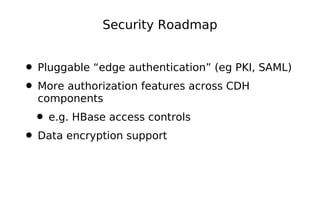

The document gives an overview of Hadoop security and traces how it changed between pre-CDH3 releases and CDH3: user and server authentication through Kerberos, improved job control, and task isolation. It outlines what a secure Hadoop deployment requires, including a Kerberos infrastructure and the corresponding configuration, and explains why strong authentication and authorization matter. It also covers deploying higher-level services, common deployment challenges, and best practices for integrating Hadoop security with existing corporate systems.