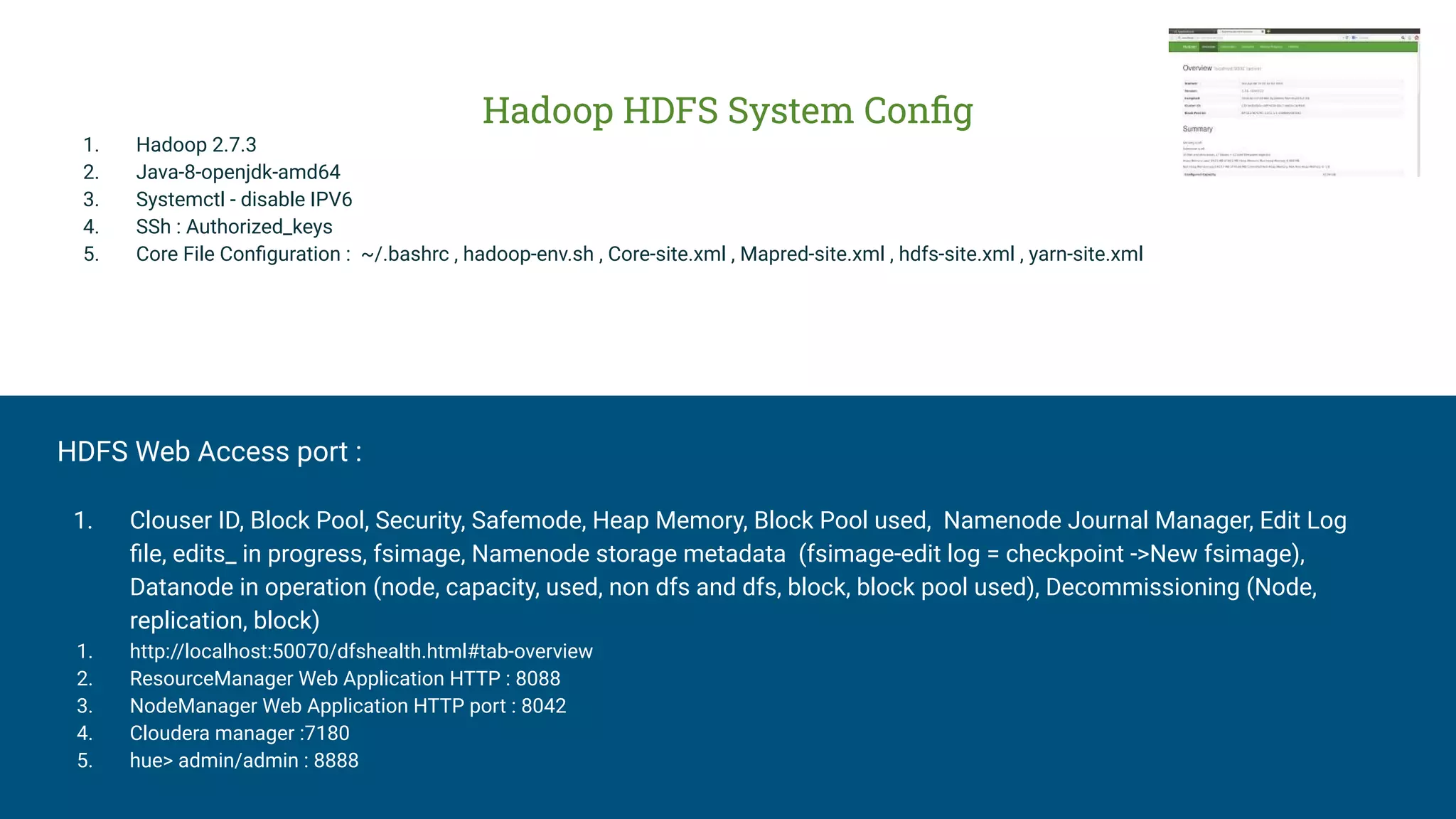

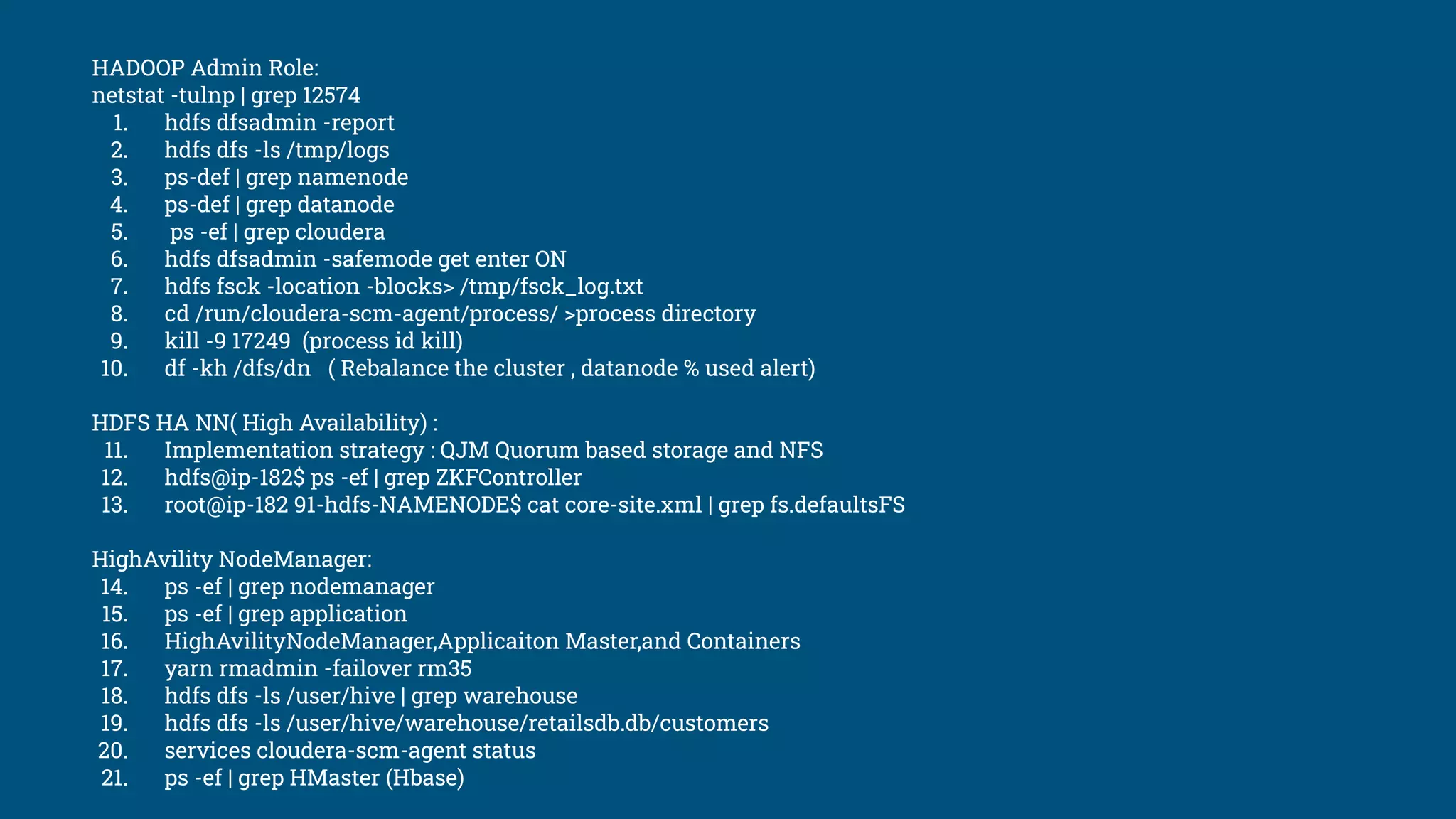

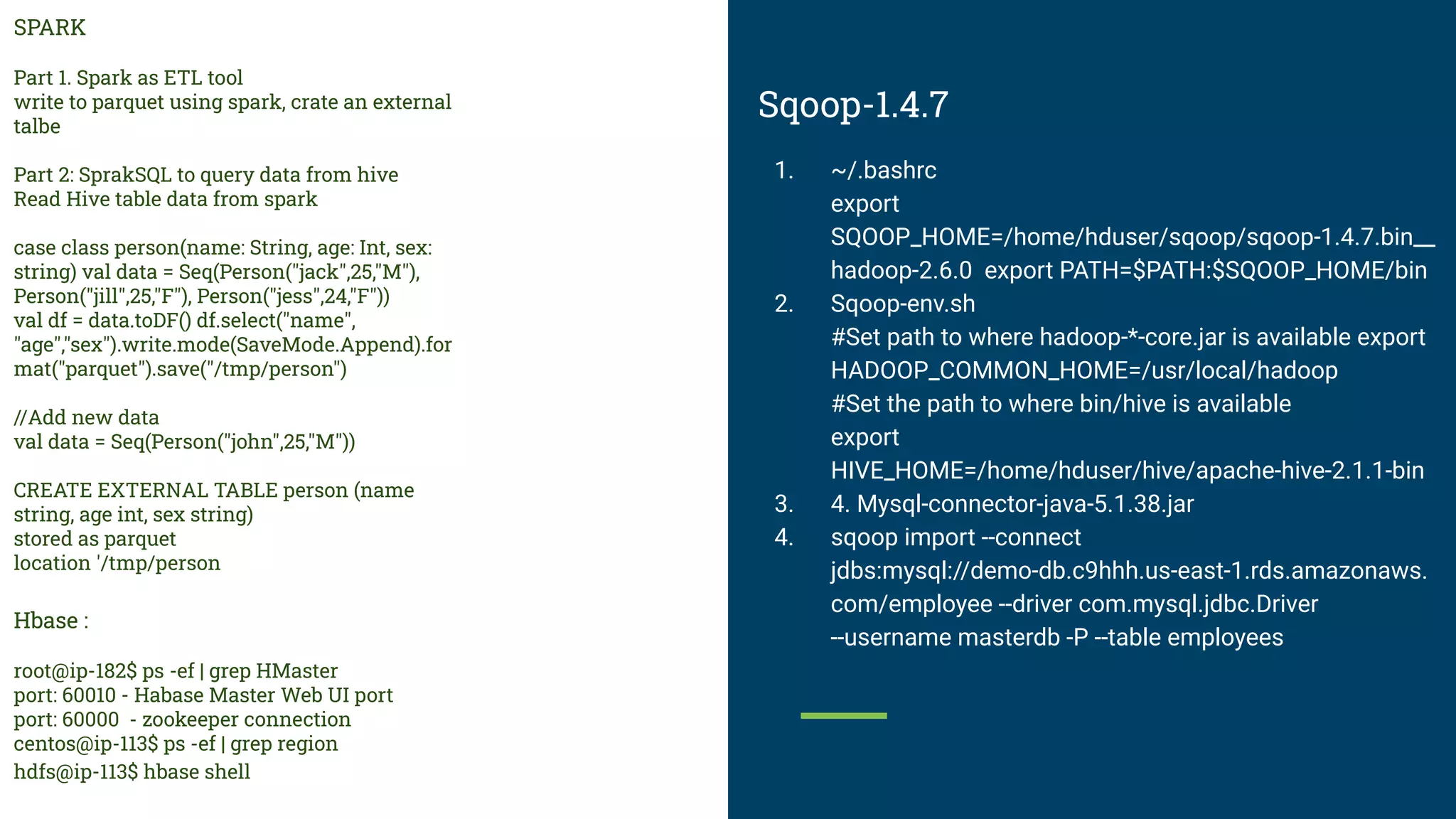

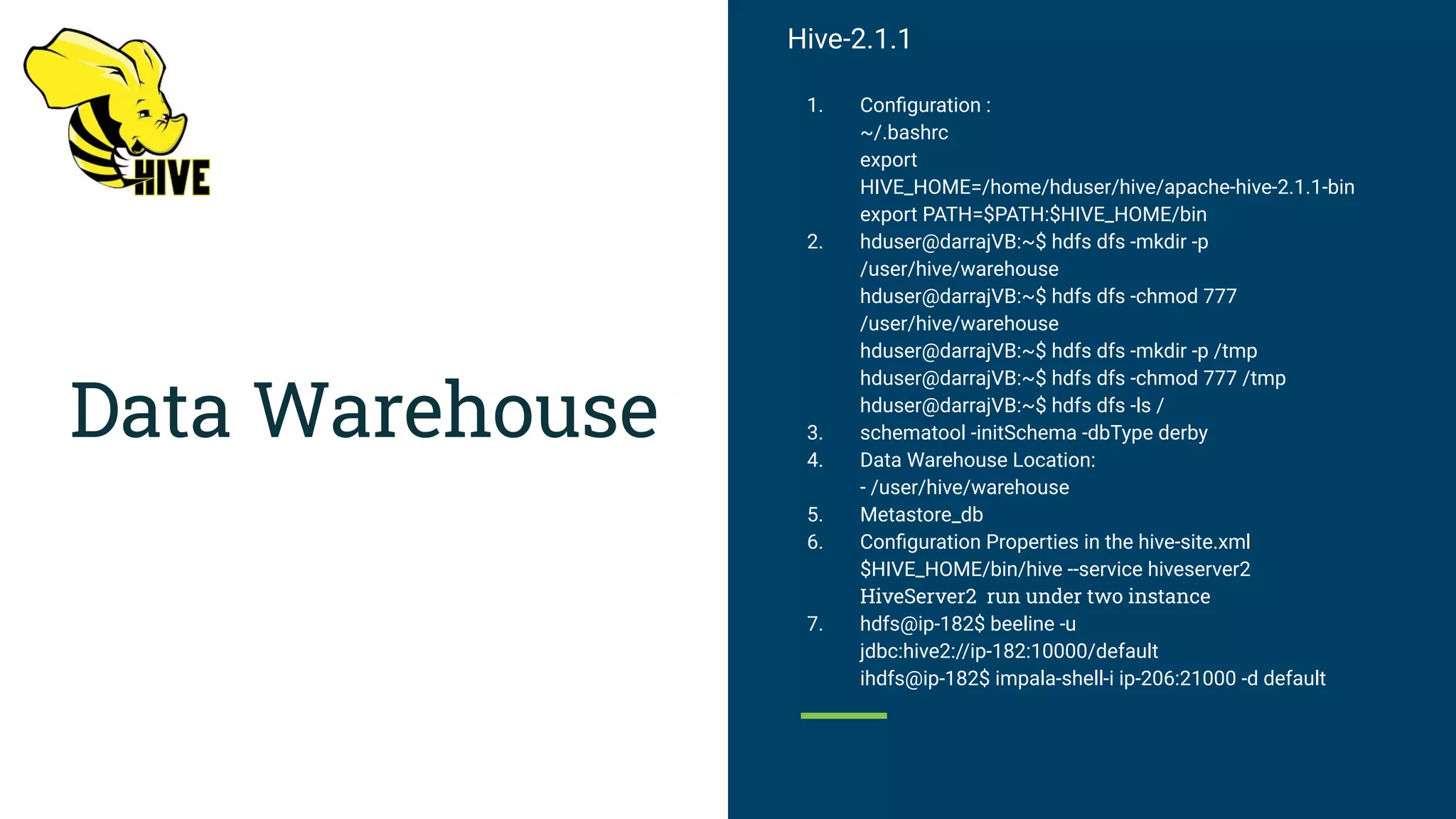

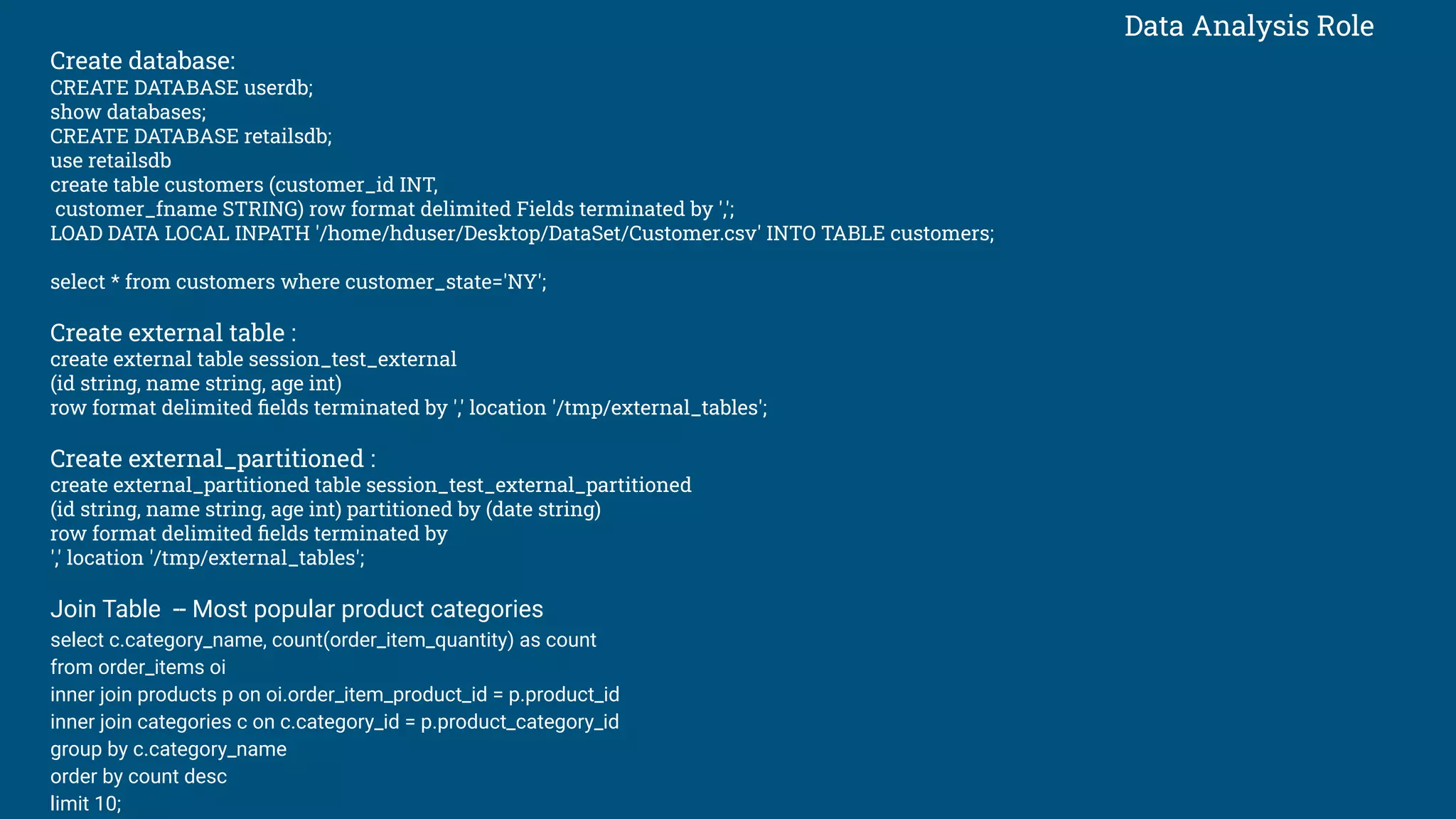

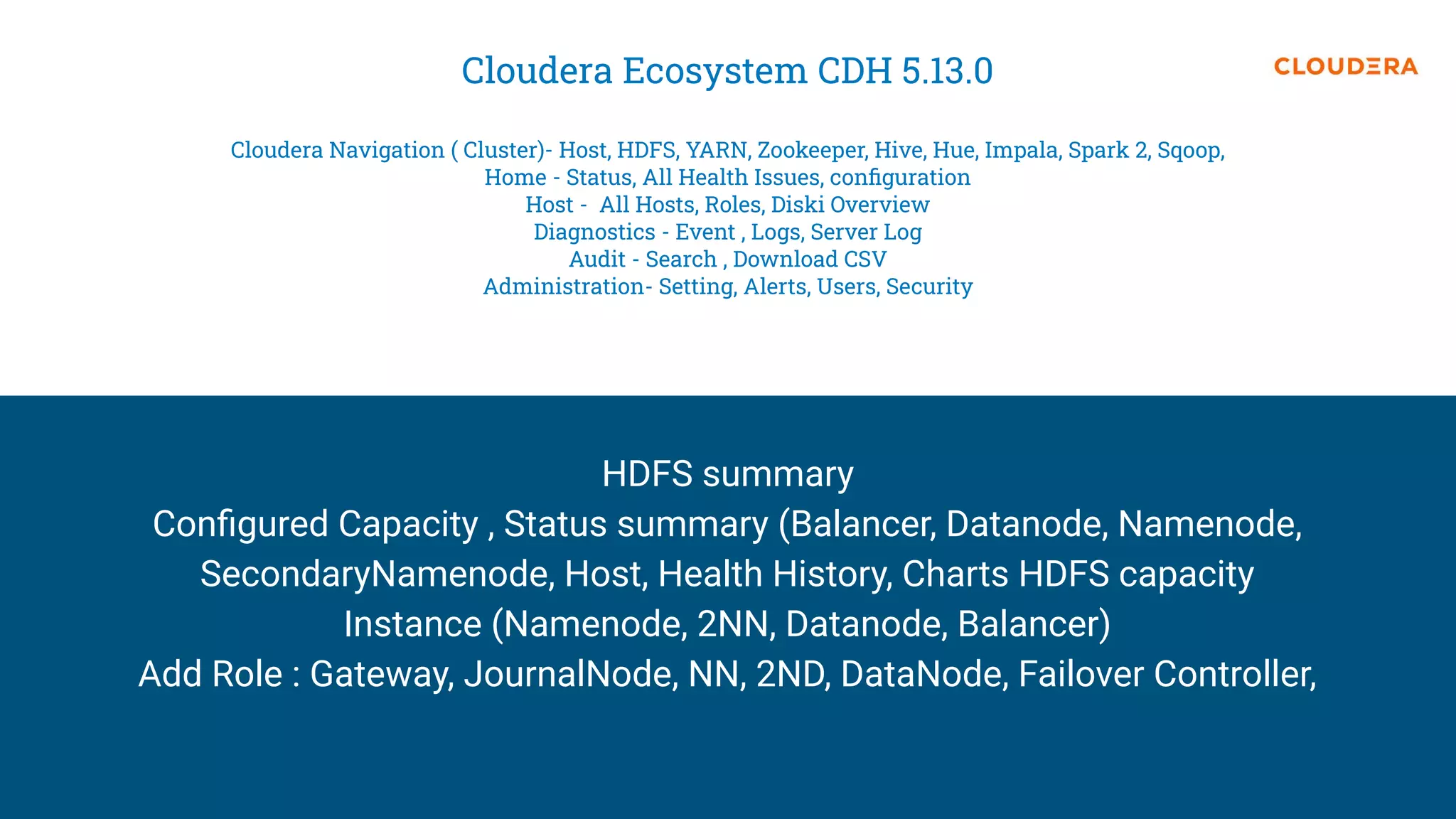

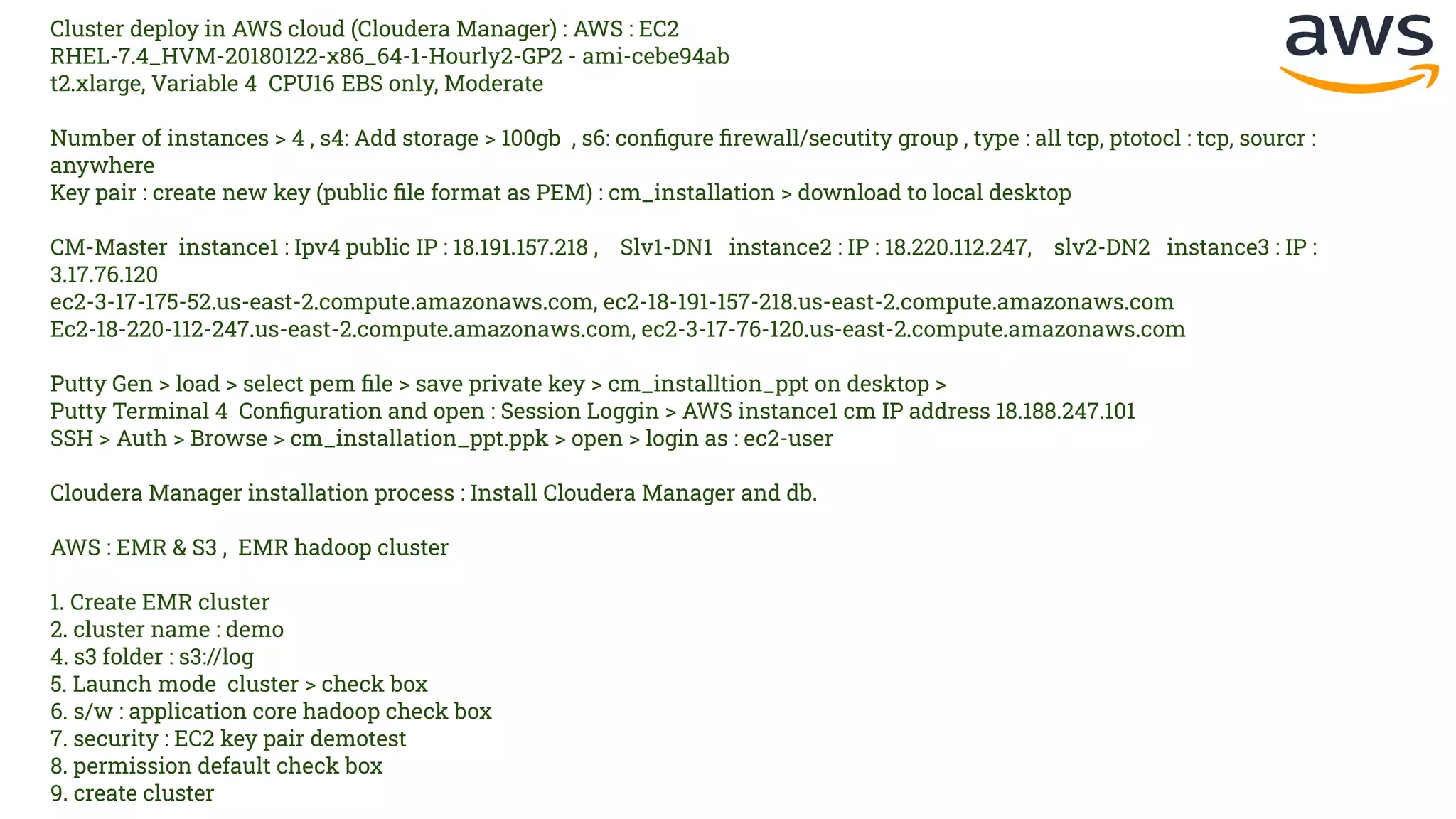

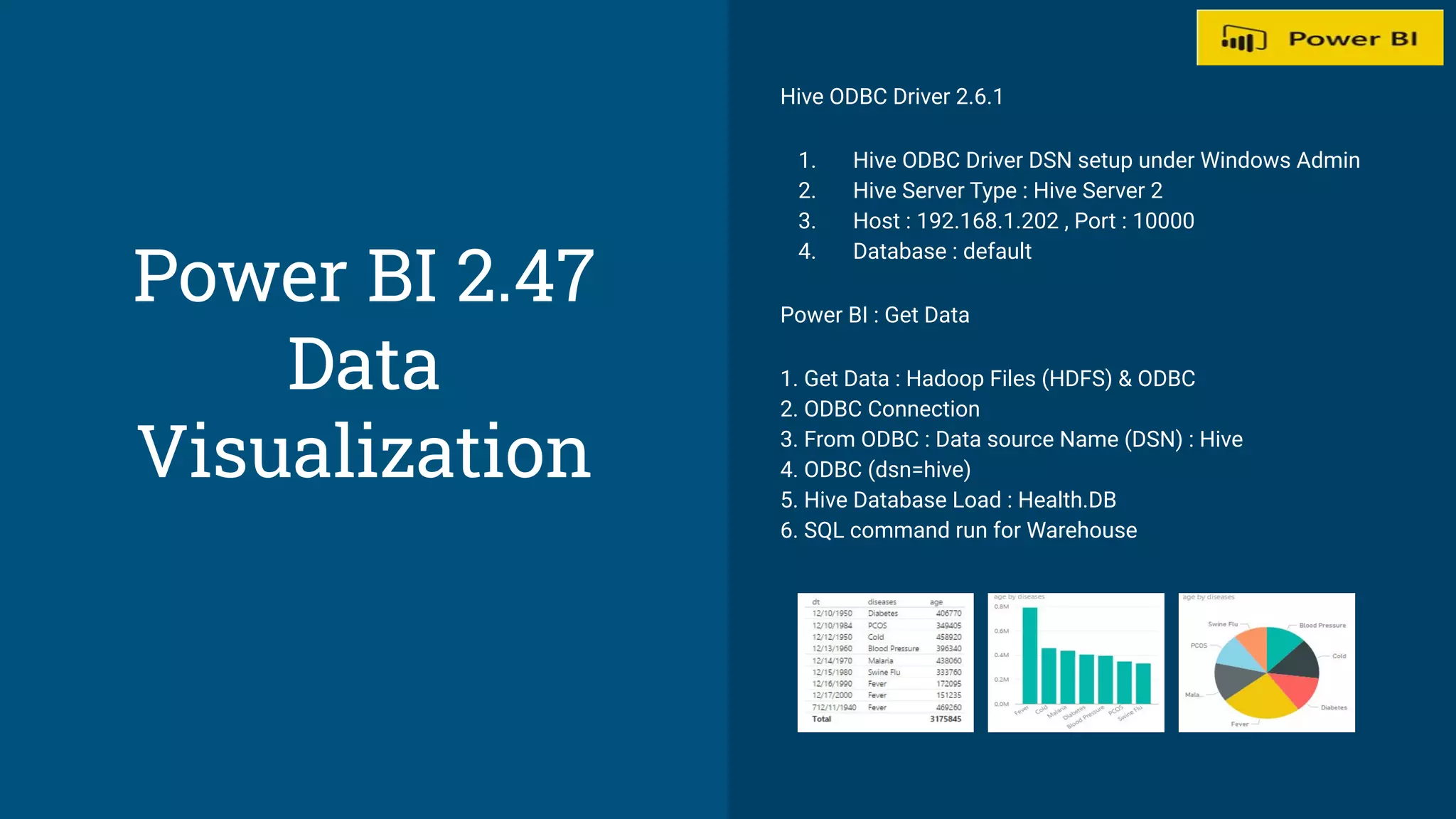

This document provides configuration details for a Hadoop cluster running components including HDFS, YARN, Hive, HBase, Spark, and Sqoop. It lists the Linux OS, Java, and Hadoop versions in use, along with configuration properties for core Hadoop systems. It also describes commands for common Hadoop administration tasks and explains how to configure high availability for HDFS, YARN, and HBase. Kerberos configuration is covered, as is the use of Sqoop, Spark, Hive, and Cloudera Manager. The document concludes with sections on data analysis and visualization using Power BI.