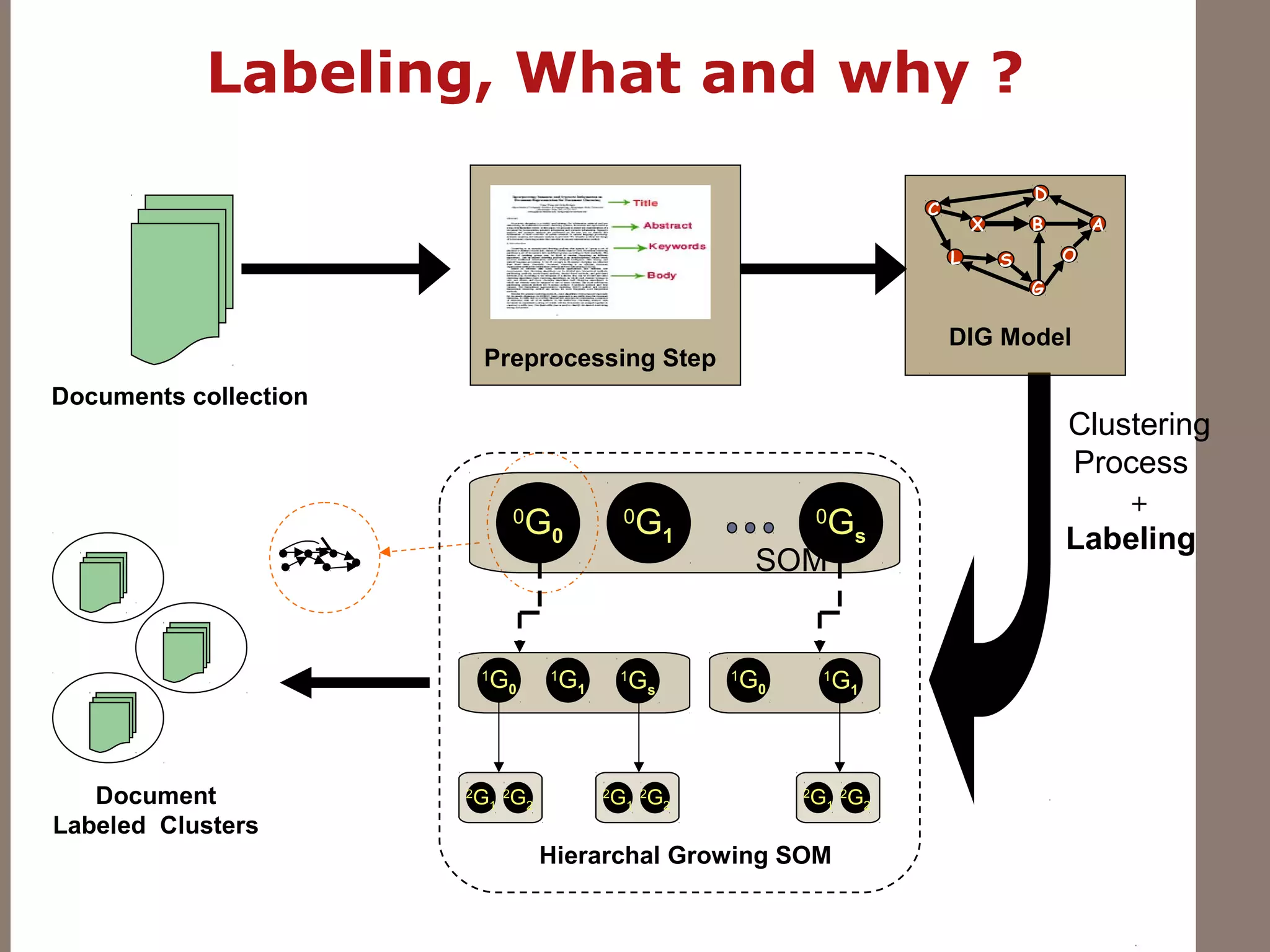

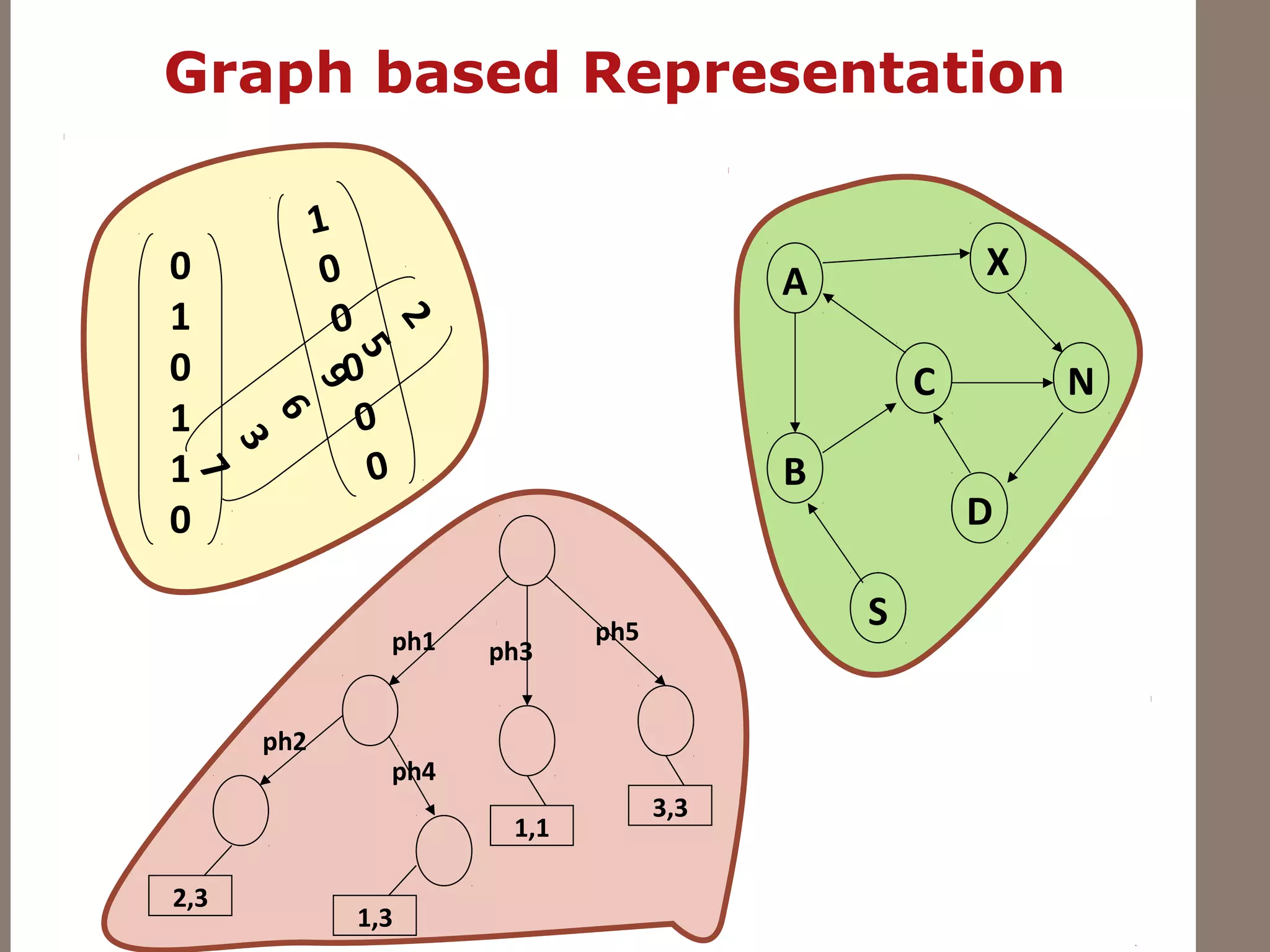

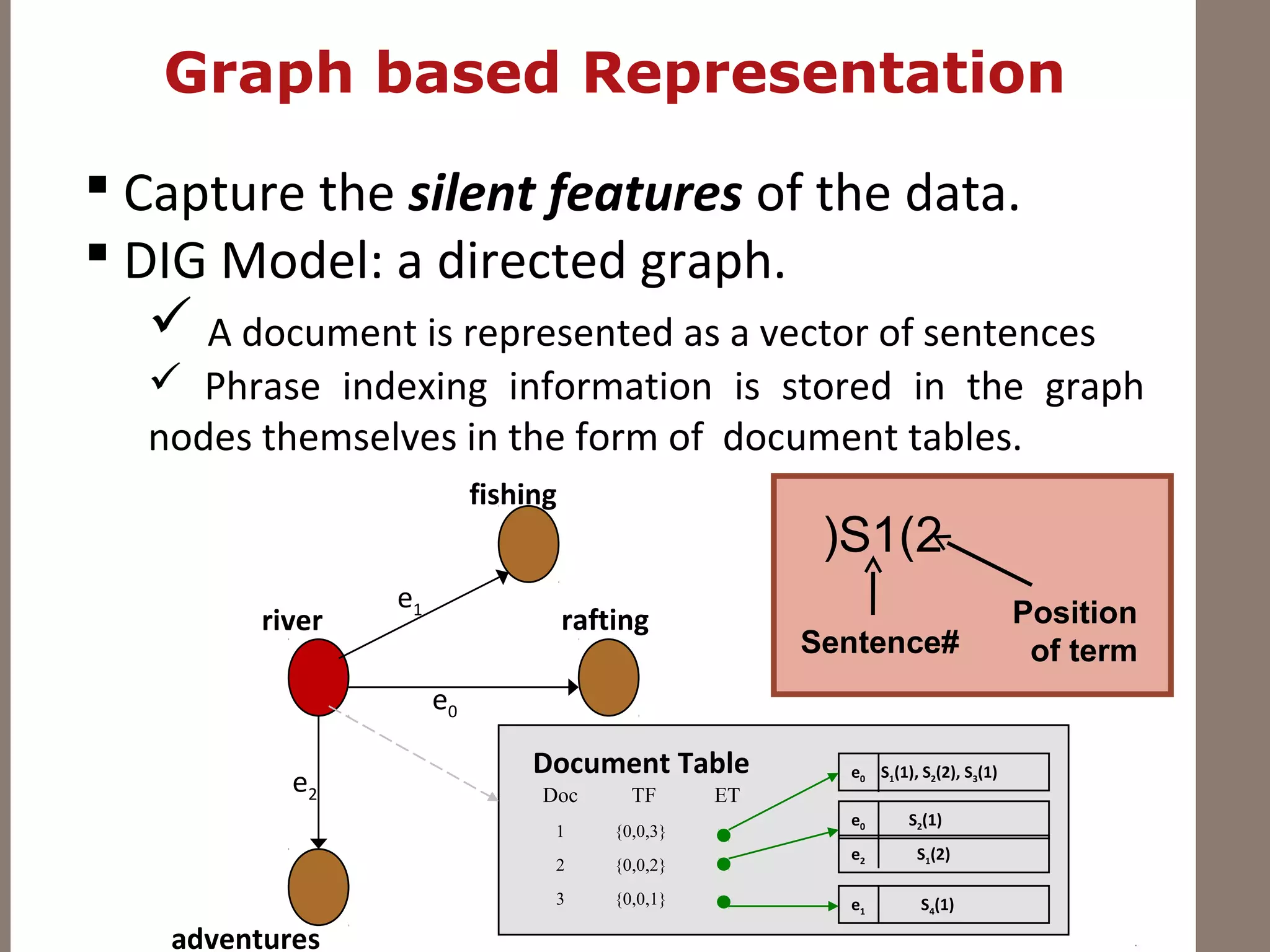

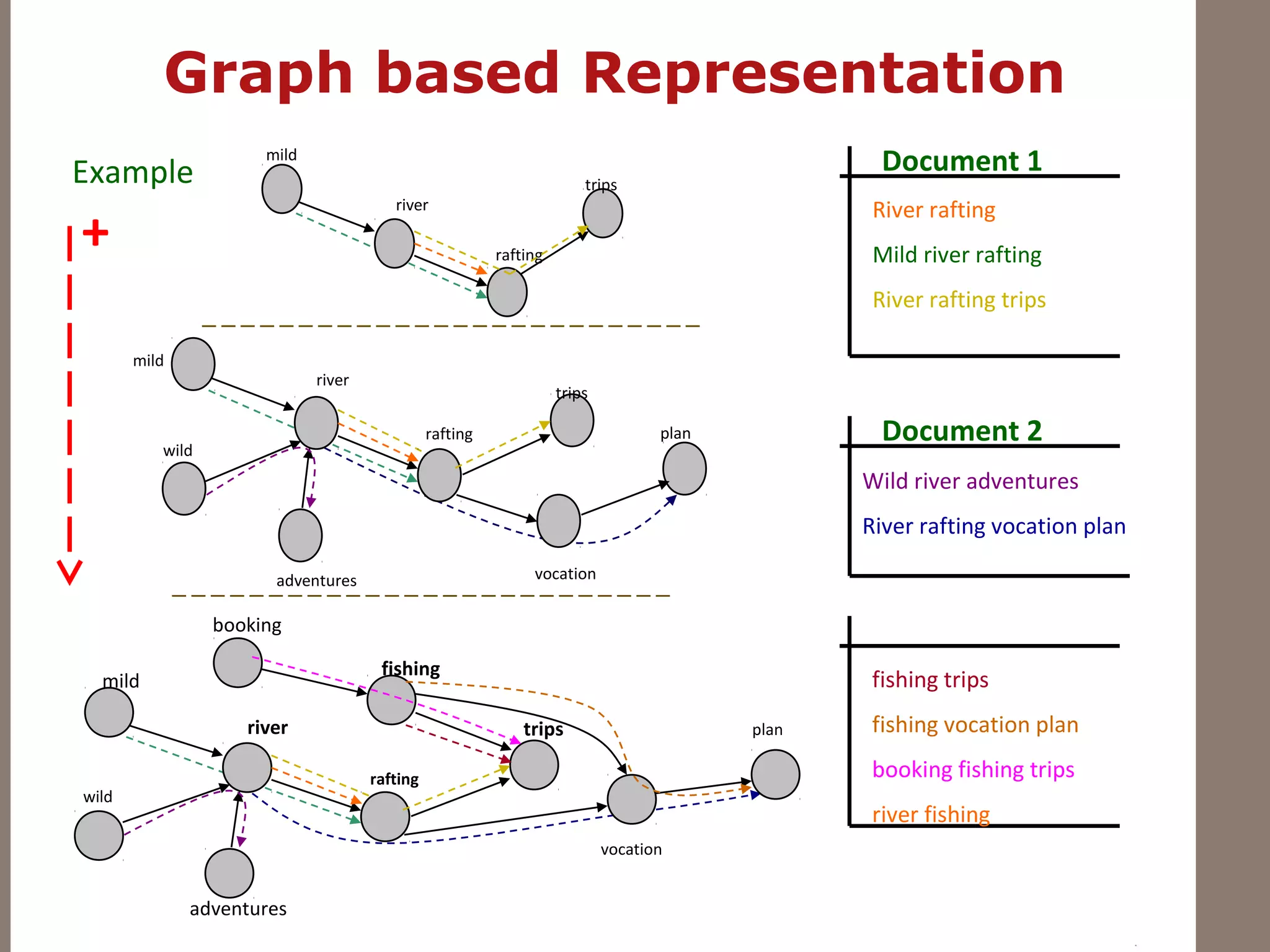

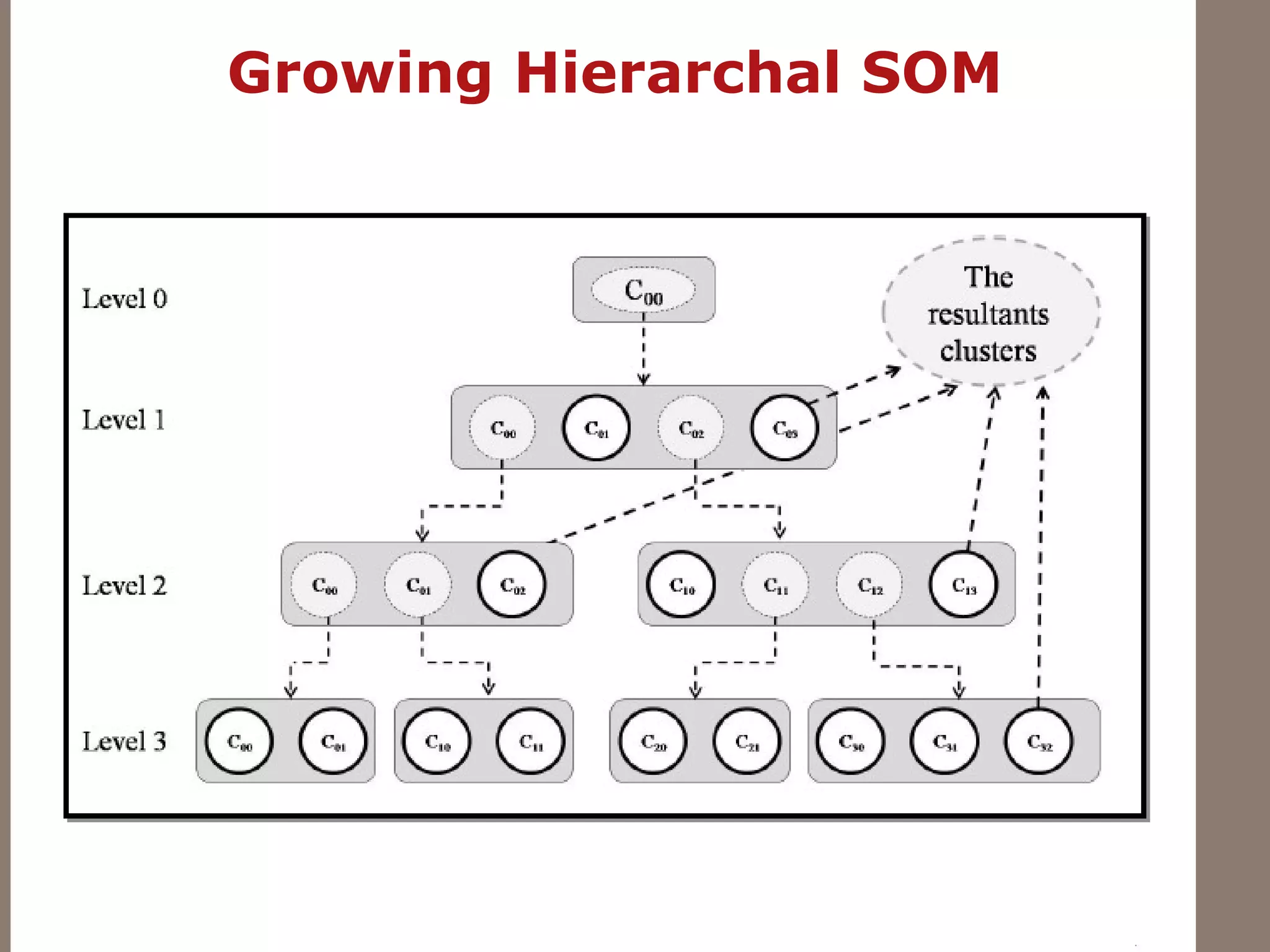

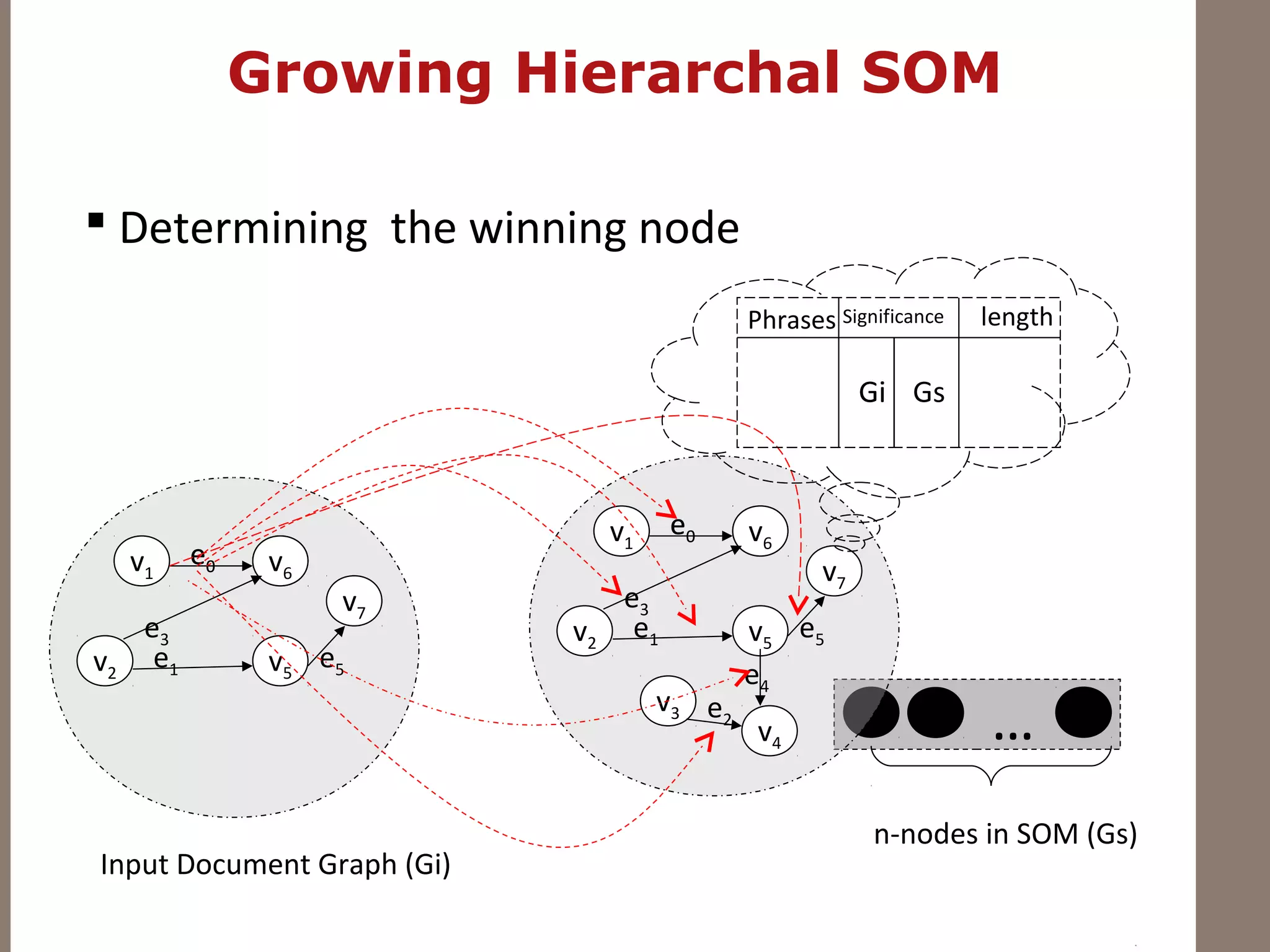

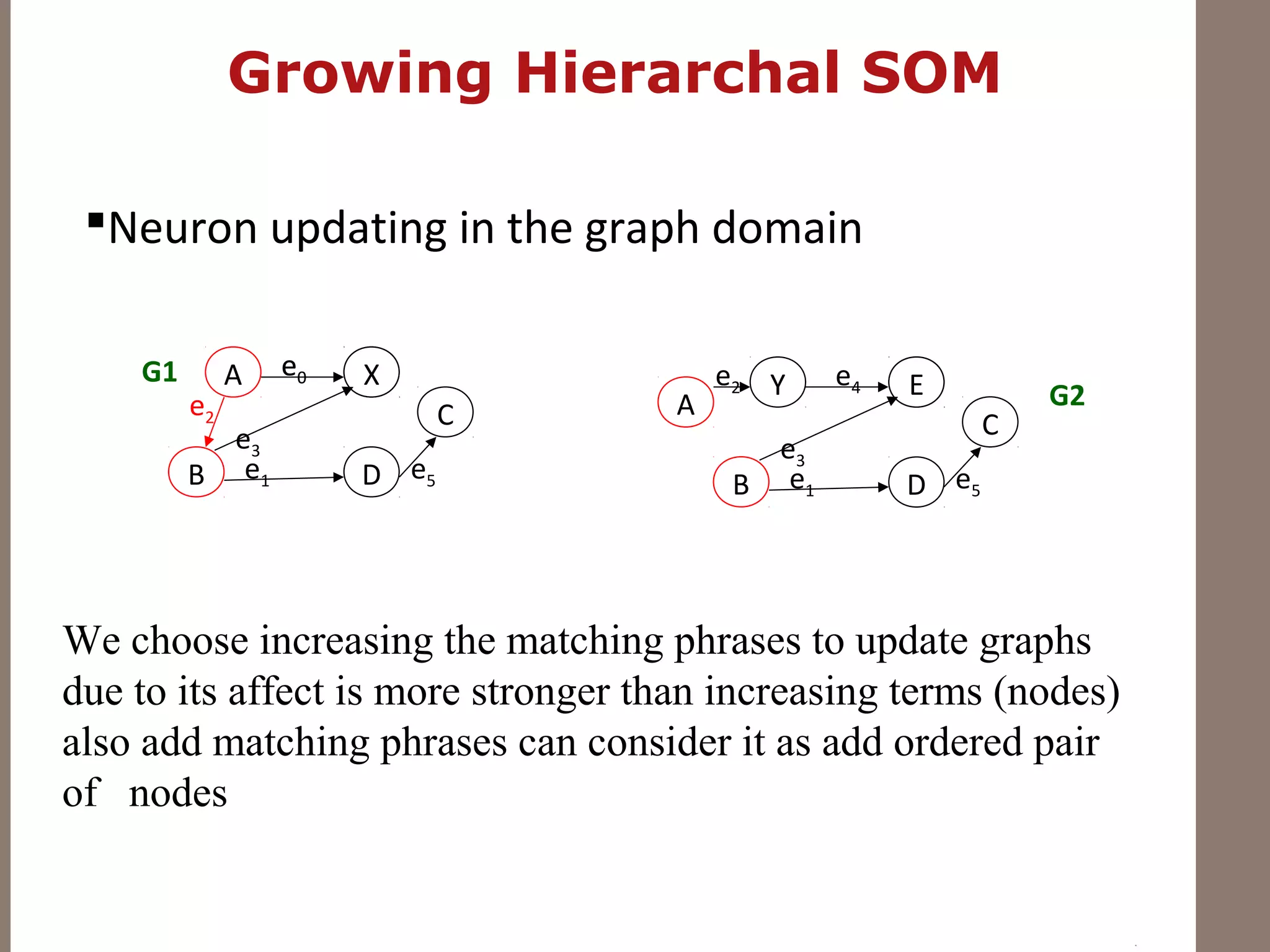

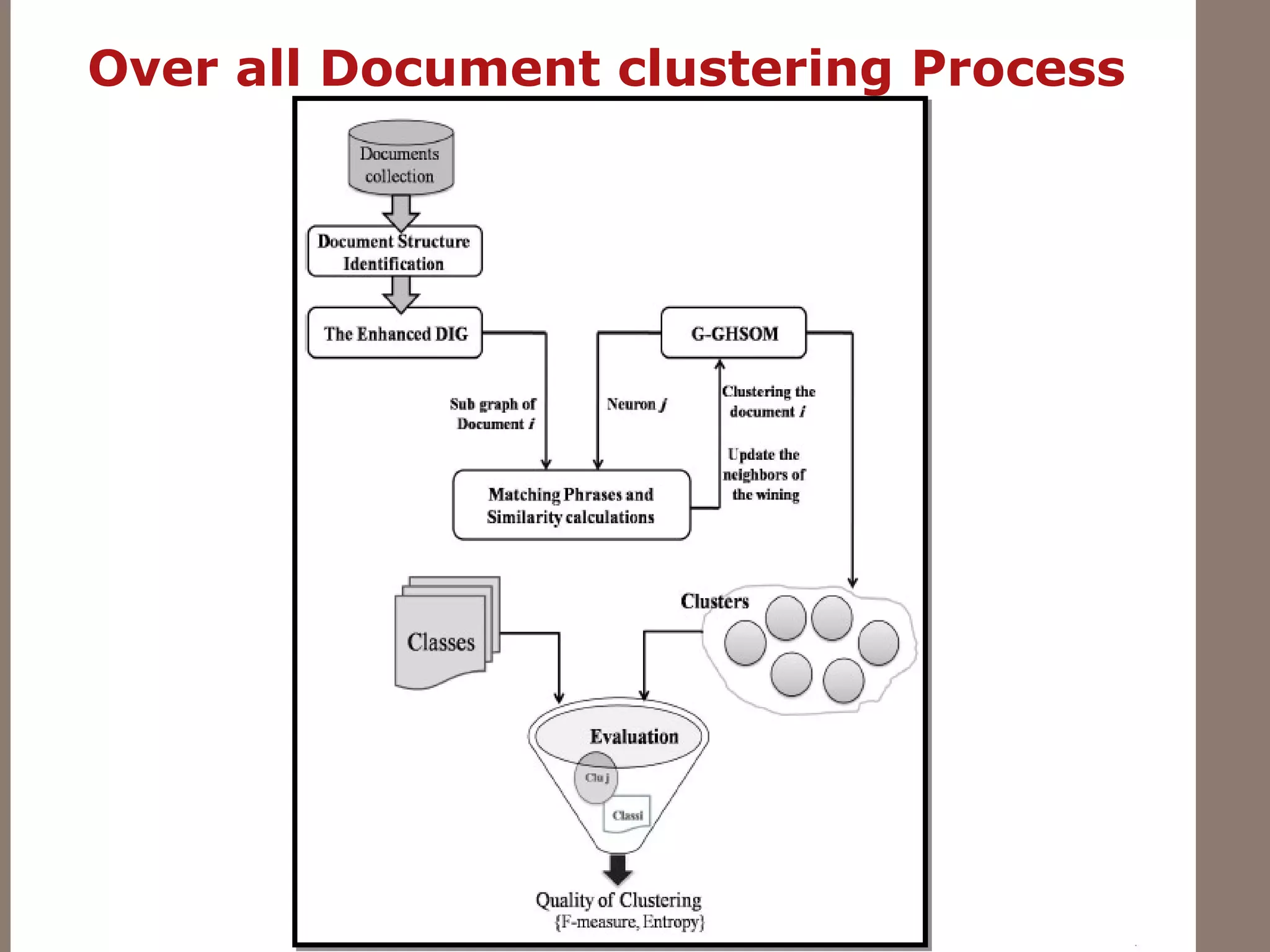

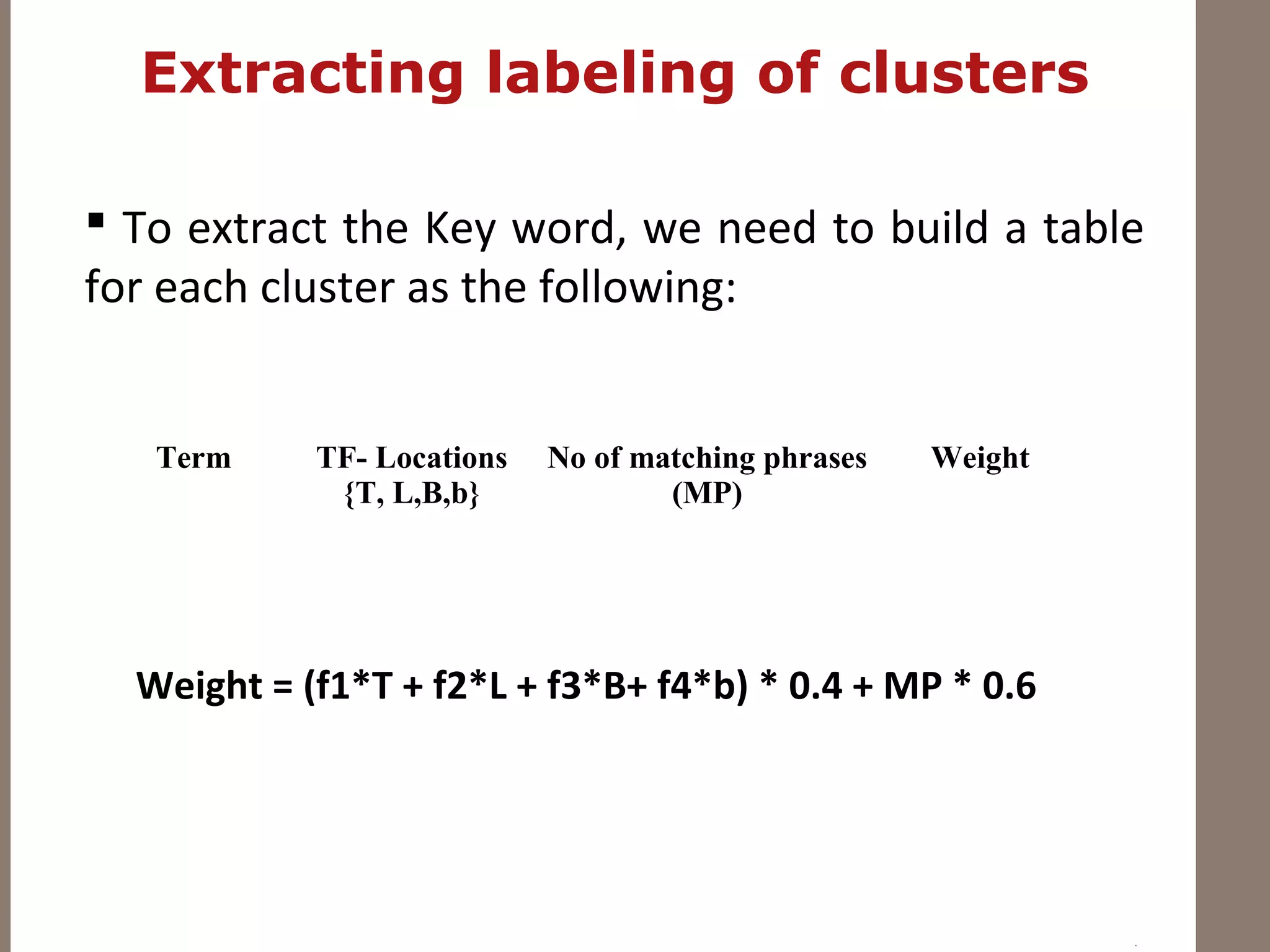

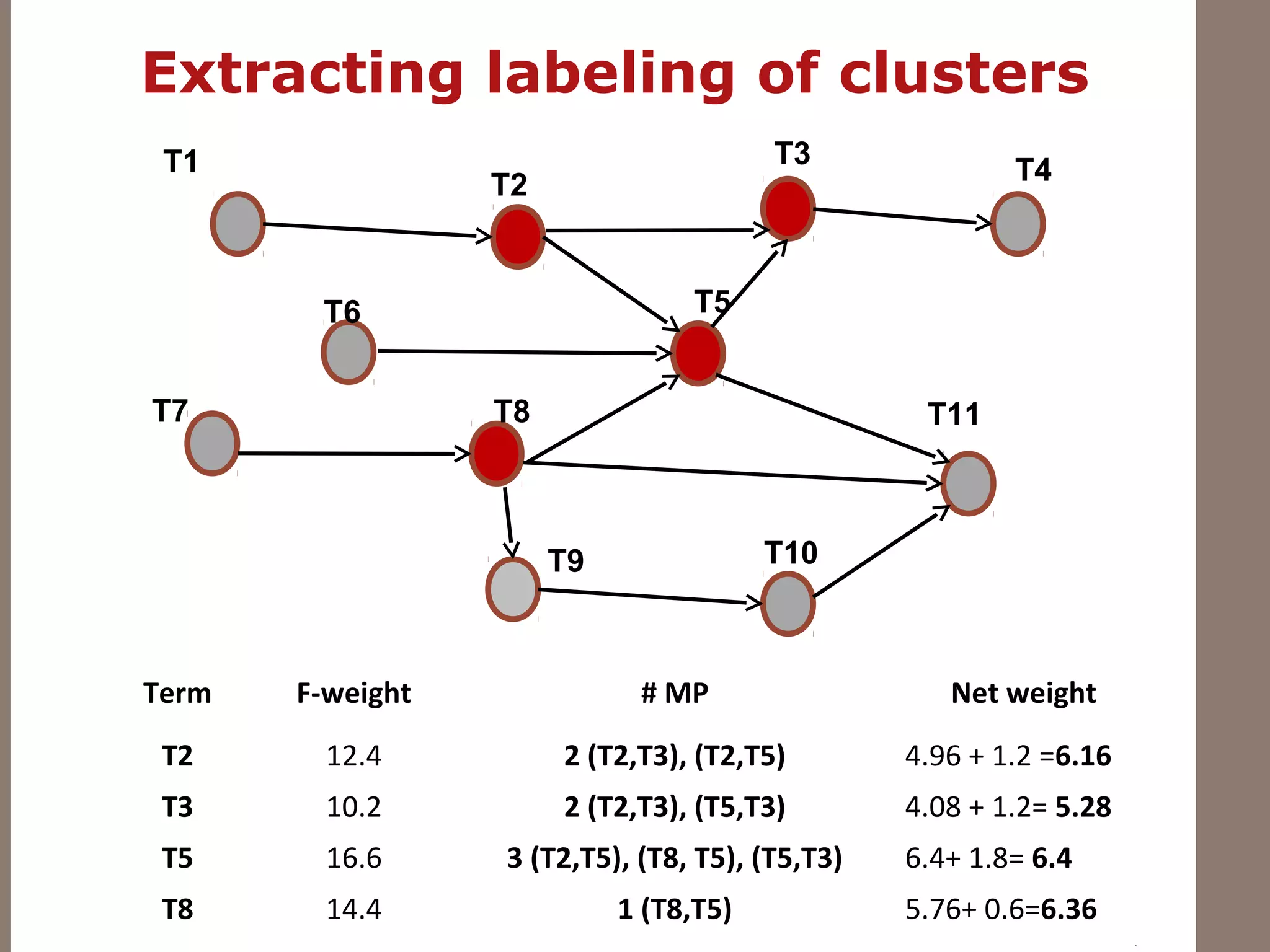

This document proposes a graph-based method for labeling clusters produced by a Growing Hierarchical Self-Organizing Map (GHSOM). Each document is represented as a graph so that relationships between phrases are captured. A GHSOM then clusters the documents, with its nodes representing document graphs. Keywords are extracted from each cluster based on term frequency, term location, and the number of phrases that match between document graphs. The method aims to keep cluster labels descriptive as document collections grow.
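To make the labeling idea concrete, here is a minimal Python sketch of how keywords might be scored for a single cluster. It is not the document's implementation: the graph construction (edges between adjacent words), the location weight, the stopword list, and the names `document_graph` and `label_cluster` are all assumptions chosen for illustration. The GHSOM clustering step is omitted, and the cluster is taken as given.

```python
import re
from collections import Counter

# Assumed stopword list, for illustration only.
STOPWORDS = frozenset({"the", "a", "of", "and", "for", "to", "in", "is", "are"})


def tokenize(text):
    """Lowercase the text and split it into alphabetic tokens."""
    return re.findall(r"[a-z]+", text.lower())


def document_graph(text):
    """Represent a document as a set of directed edges (word, next_word).

    Assumption: a two-word phrase is modeled as an edge between adjacent words.
    """
    tokens = tokenize(text)
    return set(zip(tokens, tokens[1:]))


def label_cluster(docs, top_k=3):
    """Score terms in one cluster and return the top_k as candidate labels."""
    graphs = [document_graph(d) for d in docs]

    # Number of document graphs in which each edge (phrase) occurs.
    edge_doc_count = Counter(edge for g in graphs for edge in g)
    shared_edges = {e: n for e, n in edge_doc_count.items() if n >= 2}

    scores = Counter()
    for doc in docs:
        for pos, tok in enumerate(tokenize(doc)):
            if tok in STOPWORDS:
                continue
            # Term frequency (1 per occurrence) plus an assumed location
            # bonus that favors terms near the start of the document.
            scores[tok] += 1.0 + 1.0 / (1 + pos)

    # Matching phrases: reward terms on edges shared by several graphs.
    for (a, b), n in shared_edges.items():
        for tok in (a, b):
            if tok not in STOPWORDS:
                scores[tok] += n

    return [term for term, _ in scores.most_common(top_k)]


docs = [
    "Self organizing maps cluster documents",
    "Self organizing maps label clusters",
    "Growing maps cluster text",
]
labels = label_cluster(docs)
print(labels)
```

The three signals are simply summed here. A real system would likely weight them differently and work with multi-word phrases rather than single terms.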