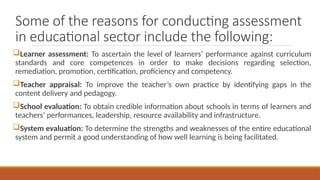

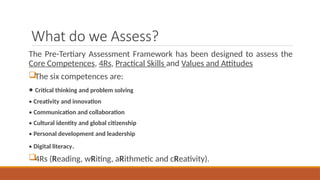

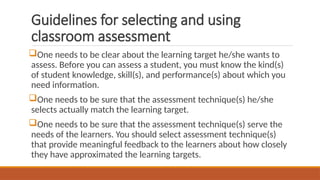

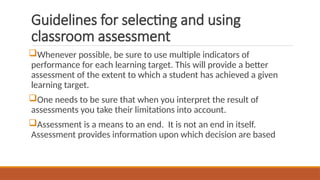

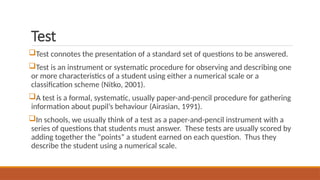

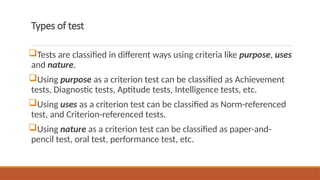

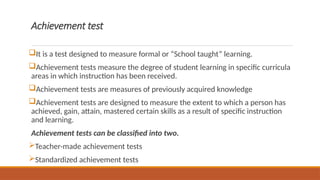

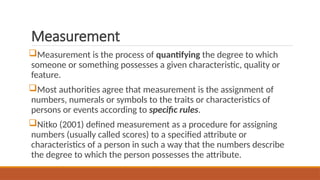

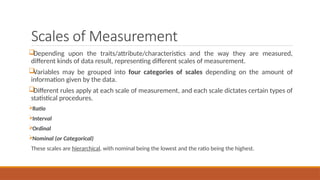

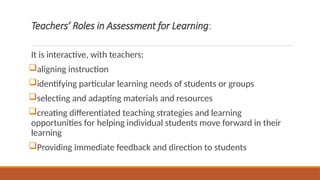

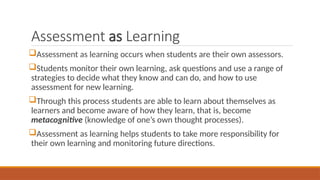

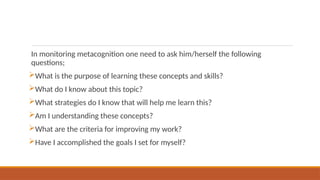

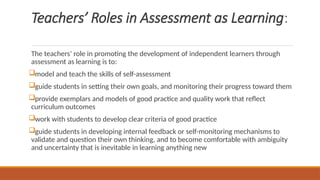

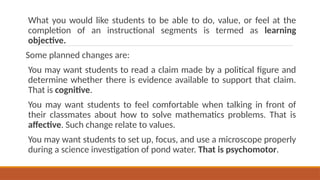

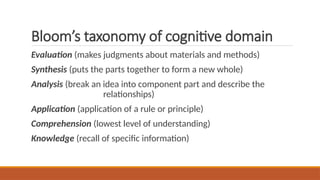

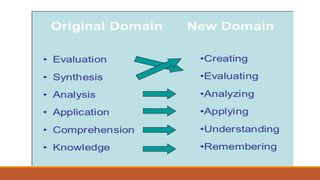

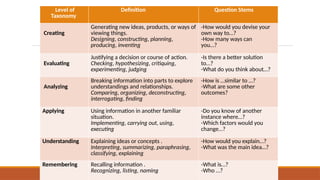

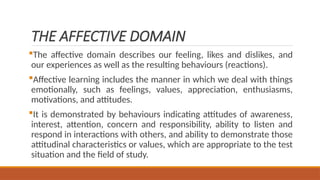

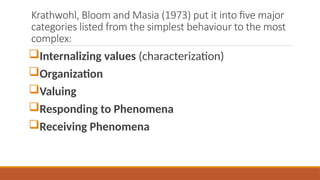

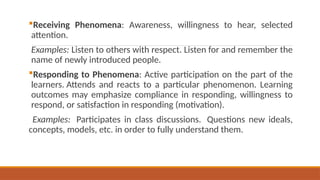

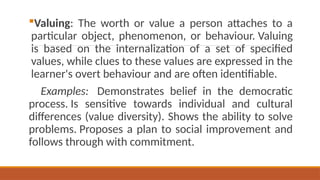

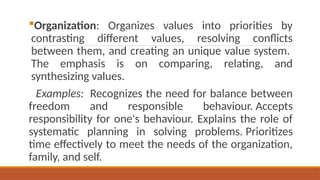

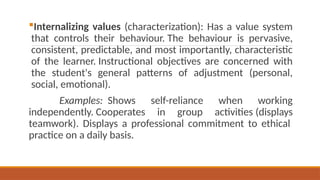

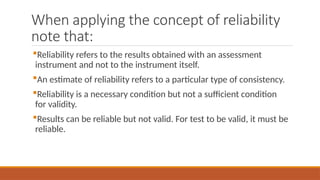

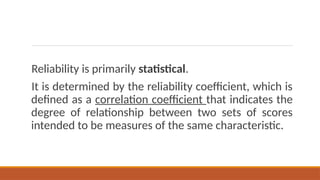

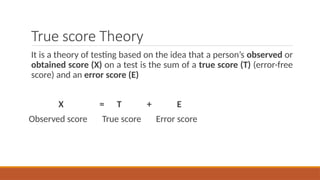

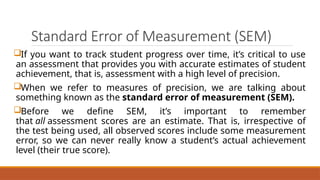

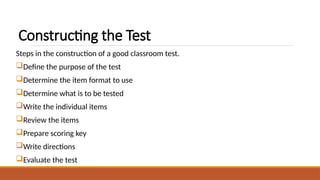

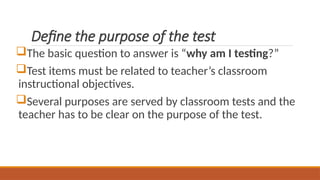

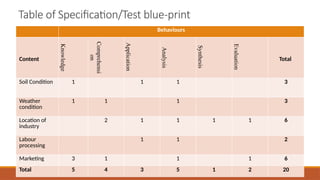

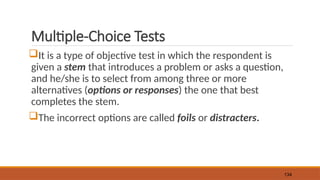

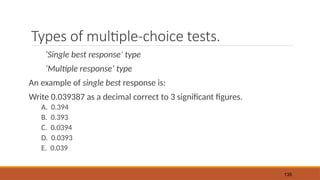

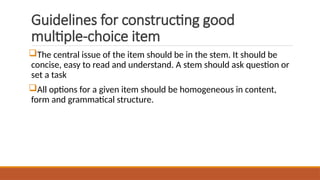

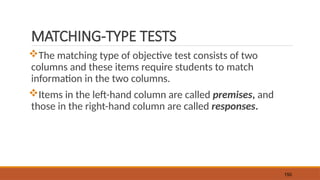

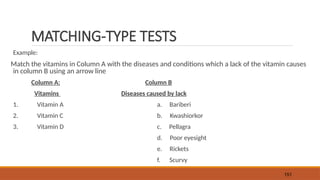

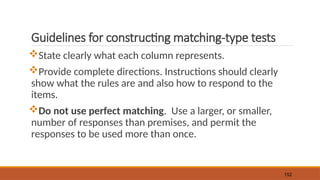

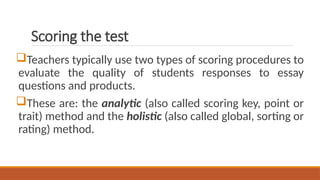

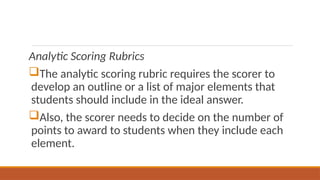

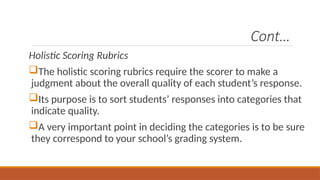

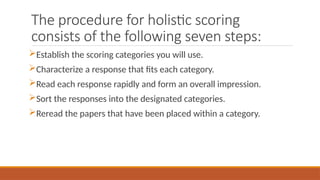

The document outlines key concepts in educational measurement, evaluation, and statistics. It treats assessment as a broad process of gathering information about students, curricula, and educational policies. It distinguishes among the types of assessment and their purposes and describes the teacher's role in carrying out effective assessment. It stresses two practices in particular: aligning assessments with learning objectives and providing meaningful feedback. It also covers the scales of measurement, forms of evaluation, and taxonomies of educational objectives, with the aim of helping educators understand and apply these ideas in practice.