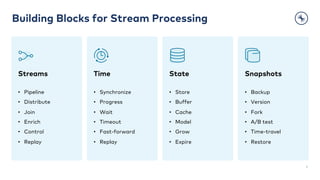

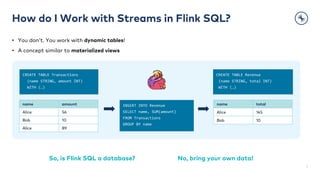

The document presents an overview of Apache Flink's SQL engine, highlighting its capabilities for stream processing using dynamic tables instead of traditional databases. It explains key concepts such as timestamp management, changelog modes, and SQL execution planning, demonstrating how SQL statements translate into physical execution plans and stream transformations. Various examples illustrate workflows, including joins and watermarks, showcasing how users can implement these features in their applications.

![Stream-Table Duality - Example

8

An applied changelog becomes a real (materialized) table.

name amount

Alice 56

Bob 10

Alice 89

name total

Alice 56

Bob 10

changelog

+I[Alice, 89] +I[Bob, 10] +I[Alice, 56] +U[Alice, 145] -U[Alice, 56] +I[Bob, 10] +I[Alice, 56]

145

materialization

CREATE TABLE Revenue

(name STRING, total INT)

WITH (…)

INSERT INTO Revenue

SELECT name, SUM(amount)

FROM Transactions

GROUP BY name

CREATE TABLE Transactions

(name STRING, amount INT)

WITH (…)](https://image.slidesharecdn.com/bs30-20240319-ksl24-confluent-walthertimo-240402154534-c363d555/85/Flink-s-SQL-Engine-Let-s-Open-the-Engine-Room-8-320.jpg)

![Stream-Table Duality - Example

9

An applied changelog becomes a real (materialized) table.

name amount

Alice 56

Bob 10

Alice 89

name total

Alice 56

Bob 10

+I[Alice, 89] +I[Bob, 10] +I[Alice, 56] +U[Alice, 145] -U[Alice, 56] +I[Bob, 10] +I[Alice, 56]

145

materialization

CREATE TABLE Revenue

(PRIMARY KEY(name) …)

WITH (…)

INSERT INTO Revenue

SELECT name, SUM(amount)

FROM Transactions

GROUP BY name

CREATE TABLE Transactions

(name STRING, amount INT)

WITH (…)

Save ~50% of traffic if the downstream system supports upserting!](https://image.slidesharecdn.com/bs30-20240319-ksl24-confluent-walthertimo-240402154534-c363d555/85/Flink-s-SQL-Engine-Let-s-Open-the-Engine-Room-9-320.jpg)

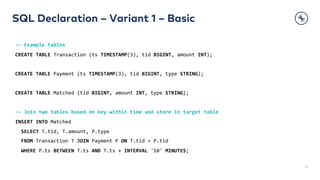

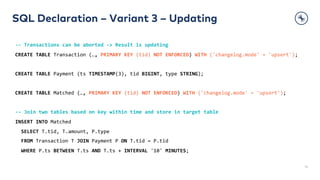

![Example 1 – No watermarks, no updates

24

INSERT INTO Matched

SELECT T.tid, T.amount, P.type

FROM Transaction T JOIN Payment P ON T.tid = P.tid

WHERE P.ts BETWEEN T.ts AND T.ts + INTERVAL '10' MINUTES;

Logical Tree

LogicalSink(table=[Matched], fields=[tid, amount, type])

+- LogicalProject(tid=[$1], amount=[$2], type=[$5])

+- LogicalFilter(condition=[AND(>=($3, $0), <=($3, +($0, 600000)))])

+- LogicalJoin(condition=[=($1, $4)], joinType=[inner])

:- LogicalTableScan(table=[Transaction])

+- LogicalTableScan(table=[Payment])

Physical Tree

Sink(table=[Matched], fields=[tid, amount, type], changelogMode=[NONE])

+- Calc(select=[tid, amount, type], changelogMode=[I])

+- Join(joinType=[InnerJoin], where=[AND(=(tid, tid0), >=(ts0, ts), <=(ts0, +(ts, 600000)))], …, changelogMode=[I])

:- Exchange(distribution=[hash[tid]], changelogMode=[I])

: +- TableSourceScan(table=[Transaction], fields=[ts, tid, amount], changelogMode=[I])

+- Exchange(distribution=[hash[tid]], changelogMode=[I])

+- TableSourceScan(table=[Payment], fields=[ts, tid, type], changelogMode=[I])](https://image.slidesharecdn.com/bs30-20240319-ksl24-confluent-walthertimo-240402154534-c363d555/85/Flink-s-SQL-Engine-Let-s-Open-the-Engine-Room-24-320.jpg)

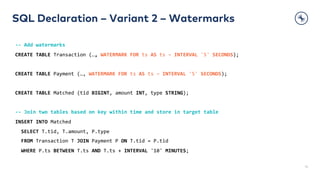

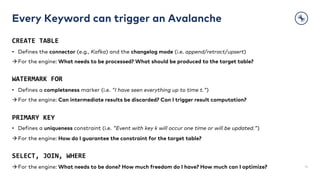

![Kafka Reader

Join Calc Kafka Writer

Kafka Reader

Kafka Reader

Join Calc Kafka Writer

Kafka Reader

Kafka Reader

Join Calc Kafka Writer

Kafka Reader

Example 1 – No watermarks, no updates

25

INSERT INTO Matched

SELECT T.tid, T.amount, P.type

FROM Transaction T JOIN Payment P ON T.tid = P.tid

WHERE P.ts BETWEEN T.ts AND T.ts + INTERVAL '10' MINUTES;

Kafka Reader

Join Calc Kafka Writer

offset

Kafka Reader

offset

left side

right side txn id

txn

+I[<T>]

+I[<P>]

+I[<T>, <P>] +I[tid, amount, type] Message<k, v>](https://image.slidesharecdn.com/bs30-20240319-ksl24-confluent-walthertimo-240402154534-c363d555/85/Flink-s-SQL-Engine-Let-s-Open-the-Engine-Room-25-320.jpg)

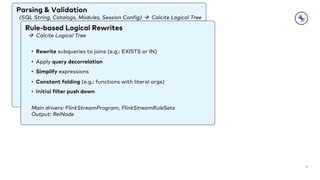

![Example 2 – With watermarks, no updates

26

Logical Tree

LogicalSink(table=[Matched], fields=[tid, amount, type])

+- LogicalProject(tid=[$1], amount=[$2], type=[$5])

+- LogicalFilter(condition=[AND(>=($3, $0), <=($3, +($0, 600000)))])

+- LogicalJoin(condition=[=($1, $4)], joinType=[inner])

:- LogicalWatermarkAssigner(rowtime=[ts], watermark=[-($0, 2000)])

: +- LogicalTableScan(table=[Transaction])

+- LogicalWatermarkAssigner(rowtime=[ts], watermark=[-($0, 2000)])

+- LogicalTableScan(table=[Payment])

Physical Tree

Sink(table=[Matched], fields=[tid, amount, type], changelogMode=[NONE])

+- Calc(select=[tid, amount, type], changelogMode=[I])

+- IntervalJoin(joinType=[InnerJoin], windowBounds=[leftLowerBound=…, leftTimeIndex=…, …], …, changelogMode=[I])

:- Exchange(distribution=[hash[tid]], changelogMode=[I])

: +- TableSourceScan(table=[Transaction, watermark=[-(ts, 2000), …]], fields=[ts, tid, amount], changelogMode=[I])

+- Exchange(distribution=[hash[tid]], changelogMode=[I])

+- TableSourceScan(table=[Payment, watermark=[-(ts, 2000), …]], fields=[ts, tid, type], changelogMode=[I])

INSERT INTO Matched

SELECT T.tid, T.amount, P.type

FROM Transaction T JOIN Payment P ON T.tid = P.tid

WHERE P.ts BETWEEN T.ts AND T.ts + INTERVAL '10' MINUTES;](https://image.slidesharecdn.com/bs30-20240319-ksl24-confluent-walthertimo-240402154534-c363d555/85/Flink-s-SQL-Engine-Let-s-Open-the-Engine-Room-26-320.jpg)

![Kafka Reader

Join Calc Kafka Writer

Kafka Reader

Kafka Reader

Join Calc Kafka Writer

Kafka Reader

Kafka Reader

Join Calc Kafka Writer

Kafka Reader

Example 2 – With watermarks, no updates

27

INSERT INTO Matched

SELECT T.tid, T.amount, P.type

FROM Transaction T JOIN Payment P ON T.tid = P.tid

WHERE P.ts BETWEEN T.ts AND T.ts + INTERVAL '10' MINUTES;

Kafka Reader

Interval Join Calc Kafka Writer

offset

Kafka Reader

offset

left side

right side

txn id

txn

+I[<T>]

+I[<P>]

+I[<T>, <P>] +I[tid, amount, type] Message<k, v>

W[12:00]

W[11:55]

W[11:55]

timers](https://image.slidesharecdn.com/bs30-20240319-ksl24-confluent-walthertimo-240402154534-c363d555/85/Flink-s-SQL-Engine-Let-s-Open-the-Engine-Room-27-320.jpg)

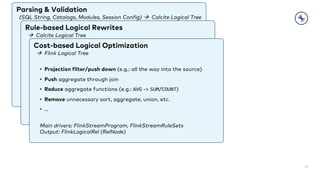

![Example 3 – With updates, no watermarks

28

INSERT INTO Matched

SELECT T.tid, T.amount, P.type

FROM Transaction T JOIN Payment P ON T.tid = P.tid

WHERE P.ts BETWEEN T.ts AND T.ts + INTERVAL '10' MINUTES;

Logical Tree

LogicalSink(table=[Matched], fields=[tid, amount, type])

+- LogicalProject(tid=[$1], amount=[$2], type=[$5])

+- LogicalFilter(condition=[AND(>=($3, $0), <=($3, +($0, 600000)))])

+- LogicalJoin(condition=[=($1, $4)], joinType=[inner])

:- LogicalTableScan(table=[Transaction])

+- LogicalTableScan(table=[Payment])

Physical Tree

Sink(table=[Matched], fields=[tid, amount, type], changelogMode=[NONE])

+- Calc(select=[tid, amount, type], changelogMode=[I,UA,D])

+- Join(joinType=[InnerJoin], where=[…], …, leftInputSpec=[JoinKeyContainsUniqueKey], changelogMode=[I,UA,D])

:- Exchange(distribution=[hash[tid]], changelogMode=[I,UA,D])

: +- ChangelogNormalize(key=[tid], changelogMode=[I,UA,D])

: +- Exchange(distribution=[hash[tid]], changelogMode=[I,UA,D])

: +- TableSourceScan(table=[Transaction], fields=[ts, tid, amount], changelogMode=[I,UA,D])

+- Exchange(distribution=[hash[tid]], changelogMode=[I])

+- TableSourceScan(table=[Payment], fields=[ts, tid, type], changelogMode=[I])](https://image.slidesharecdn.com/bs30-20240319-ksl24-confluent-walthertimo-240402154534-c363d555/85/Flink-s-SQL-Engine-Let-s-Open-the-Engine-Room-28-320.jpg)

![Kafka Reader

Join Calc Kafka Writer

Kafka Reader

Kafka Reader

Join Calc Kafka Writer

Kafka Reader

Kafka Reader

Join Calc Kafka Writer

Kafka Reader

Example 3 – With updates, no watermarks

29

INSERT INTO Matched

SELECT T.tid, T.amount, P.type

FROM Transaction T JOIN Payment P ON T.tid = P.tid

WHERE P.ts BETWEEN T.ts AND T.ts + INTERVAL '10' MINUTES;

Kafka Reader

Join Calc Kafka Writer

offset

Kafka Reader

offset

left side

right side txn id

txn

Join

Join

Join

Changelog

Normalize

last seen

+U[<T>]

+I[<P>]

+I[tid, amount, type] Message<k, v>

+I[<T>, <P>]

+I[<T>]

+U[<T>]

+I[<P>]

+U[tid, amount, type] Message<k, v>

+U[<T>,<P>]

+U[<T>]](https://image.slidesharecdn.com/bs30-20240319-ksl24-confluent-walthertimo-240402154534-c363d555/85/Flink-s-SQL-Engine-Let-s-Open-the-Engine-Room-29-320.jpg)