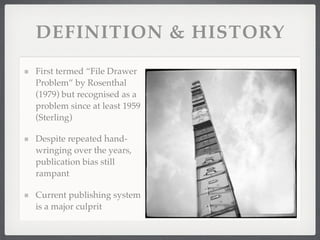

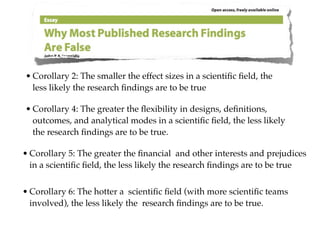

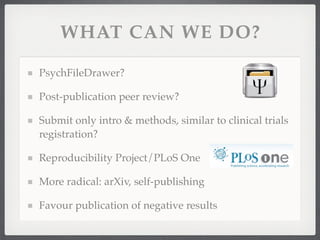

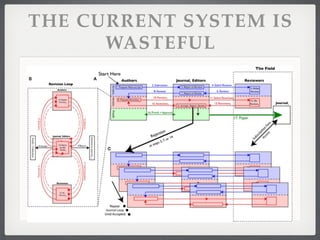

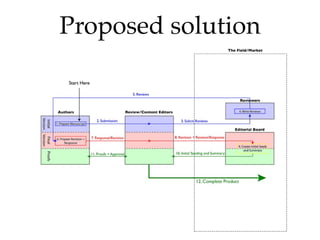

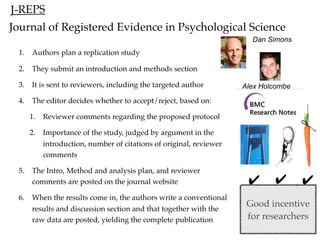

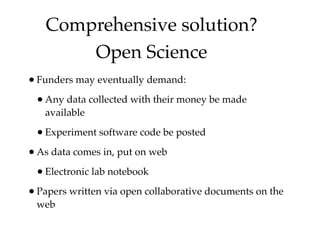

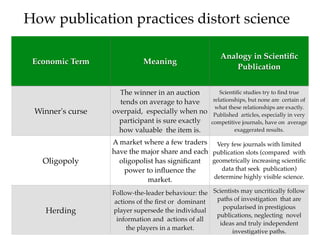

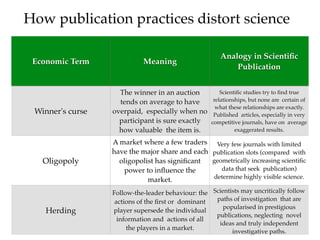

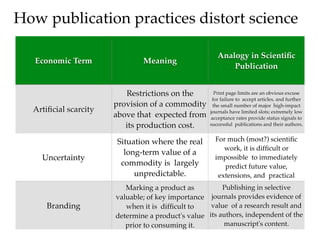

The document discusses how current scientific publishing practices can distort research findings and introduce biases. It draws parallels between economic concepts (the winner's curse, oligopoly, herding, artificial scarcity, uncertainty, and branding) and problems in scientific publishing: published results that are exaggerated because studies compete for a limited number of journal slots, the neglect of novel ideas, and the use of publication metrics as a status signal instead of an evaluation of the results themselves. Proposed solutions include registered replication studies and more open sharing of data, code, and draft publications.
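The winner's curse argument can be made concrete with a small simulation. This is a minimal sketch, not the authors' model. It assumes many studies estimate the same true effect with independent normal noise, and that a hypothetical journal with a fixed number of slots publishes only the largest estimates. The parameter names and values (`true_effect`, `noise_sd`, `n_studies`, `slots`) are illustrative assumptions.

```python
import random
import statistics


def simulate_winners_curse(true_effect=0.2, noise_sd=0.5, n_studies=1000,
                           slots=50, seed=0):
    """Return (mean of all estimates, mean of the 'published' estimates).

    Assumption: each study's estimate is the true effect plus independent
    Gaussian noise; the journal keeps only the `slots` largest estimates.
    """
    rng = random.Random(seed)  # fixed seed so the run is reproducible
    # Every study measures the same underlying effect, with sampling noise.
    estimates = [rng.gauss(true_effect, noise_sd) for _ in range(n_studies)]
    # Competition for limited slots: only the most striking results are published.
    published = sorted(estimates, reverse=True)[:slots]
    return statistics.mean(estimates), statistics.mean(published)


all_mean, pub_mean = simulate_winners_curse()
print(f"true effect:                 {0.2:.3f}")
print(f"mean of all estimates:       {all_mean:.3f}")
print(f"mean of published estimates: {pub_mean:.3f}")
```

Averaged over all studies, the estimates stay close to the true effect. The published subset, selected for being the largest, overstates it severalfold. Replication studies, which are not selected on their outcome, would tend to regress toward the true value.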