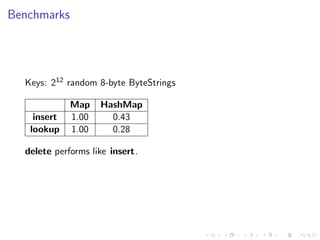

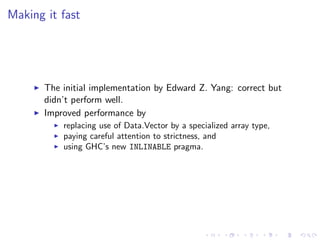

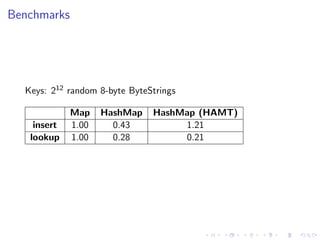

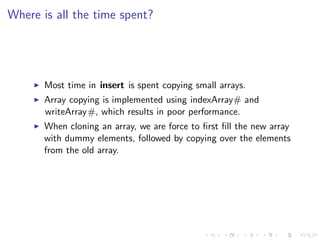

This document discusses using hashing to create faster persistent data structures. It summarizes an implementation of persistent hash maps in Haskell using Patricia trees and sparse arrays. Benchmark results show this approach is faster than traditional size balanced trees for string keys. The document also explores a Haskell implementation of Clojure's hash-array mapped tries, which provide even better performance than the hash map approach. Several ideas for further optimization are presented.

![First attempt at an implementation

−− D e f i n e d i n t h e c o n t a i n e r s p a c k a g e .

data IntMap a

= Nil

| Tip {−# UNPACK #−} ! Key a

| Bin {−# UNPACK #−} ! P r e f i x

{−# UNPACK #−} ! Mask

! ( IntMap a ) ! ( IntMap a )

type P r e f i x = I n t

type Mask = Int

type Key = Int

newtype HashMap k v = HashMap ( IntMap [ ( k , v ) ] )](https://image.slidesharecdn.com/galois-2011-110215175556-phpapp02/85/Faster-persistent-data-structures-through-hashing-8-320.jpg)

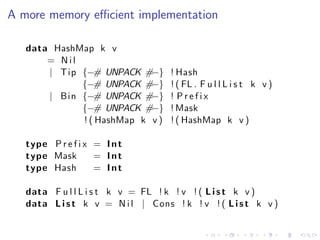

![Reducing the memory footprint

List k v uses 2 fewer words per key/value pair than [( k, v )] .

FullList can be unpacked into the Tip constructor as it’s a

product type, saving 2 more words.

Always unpack word sized types, like Int, unless you really

need them to be lazy.](https://image.slidesharecdn.com/galois-2011-110215175556-phpapp02/85/Faster-persistent-data-structures-through-hashing-10-320.jpg)