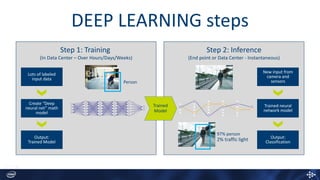

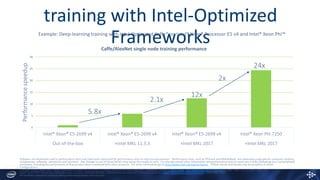

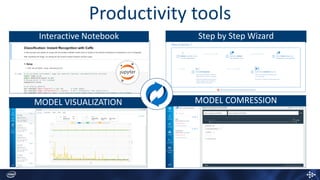

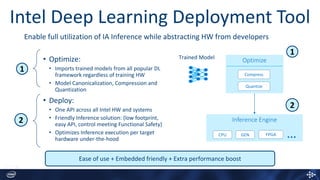

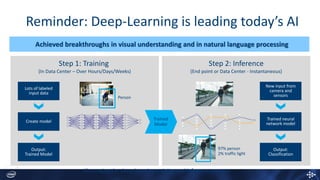

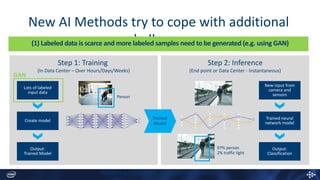

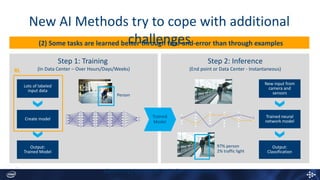

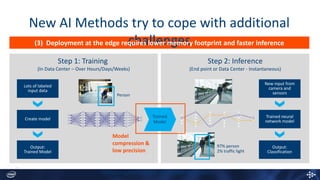

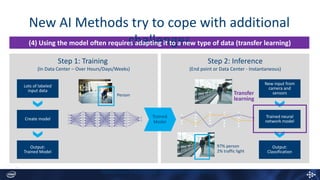

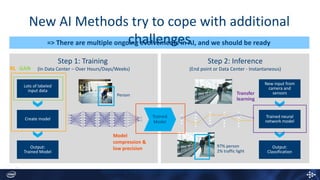

The document discusses Intel's Deep Learning SDK, which aims to democratize deep learning by making it easy to access and deploy. The SDK offers plug-and-train setup through an easy installer, maximizes performance through optimized frameworks, and includes productivity tools. It supports distributed multi-node training and accelerates deployment of trained models across Intel hardware and systems through optimization, compression, and quantization. It also addresses deep learning challenges such as limited labeled data, reinforcement learning tasks, and deployment at the edge with lower-memory models.
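
To make the quantization step concrete, here is a minimal sketch of symmetric 8-bit linear quantization, a common generic way to shrink a model's memory footprint for edge deployment. It is not the SDK's API or its actual method; the function names `quantize_int8` and `dequantize_int8` and the sample weights are hypothetical and chosen only for illustration.

```python
# Illustrative only: generic symmetric int8 quantization of a list of weights.
# This does NOT use or reproduce the Intel Deep Learning SDK's interface.

def quantize_int8(weights):
    """Map float weights to integers in [-127, 127] plus one shared scale."""
    max_abs = max((abs(w) for w in weights), default=0.0)
    # Avoid division by zero when all weights are zero (or the list is empty).
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    quantized = [max(-127, min(127, round(w / scale))) for w in weights]
    return quantized, scale


def dequantize_int8(quantized, scale):
    """Recover approximate float weights from the integers and the scale."""
    return [v * scale for v in quantized]


# Hypothetical sample weights, not taken from any real model.
weights = [0.50, -1.27, 0.03, 1.00]
q, scale = quantize_int8(weights)
restored = dequantize_int8(q, scale)

# Each restored weight differs from the original by at most half a step.
max_error = max(abs(w - r) for w, r in zip(weights, restored))
print(q, scale, max_error)
```

Storing each weight as one byte instead of a 32-bit float cuts memory roughly fourfold, at the cost of a rounding error bounded by half the scale per weight.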