Interpretable ML

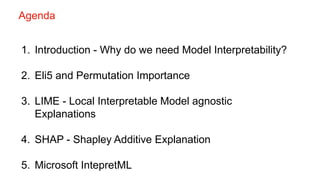

- 1. 1. Introduction - Why do we need Model Interpretability? 2. Eli5 and Permutation Importance 3. LIME - Local Interpretable Model agnostic Explanations 4. SHAP - Shapley Additive Explanation 5. Microsoft IntepretML Agenda

- 2. Why interpretability is important Data Science algortihms are used to make lot of cirtical decisions in various industries • Credit Rating • Disease diagnonsis • Banks (Loan processing) • Recommendations (Products , movies) • Crime control

- 3. Why interpretability is important ExplainDecisions Improve Models Strengthen Trust & Transparency Satisfy Regulatory Requirements

- 4. Fairness & Transperability in ML Model Example : Predicting Employees performance in big company Input Data : Past perfromance reviews of the employees for the last 10 yrs Caveat : What if company promotes more Men than Women ? Output : Model will learn the bias and predict favourable result for Men Models are Opinions Embedded in Mathematics — Cathy O’Neil

- 6. Some Models are easy to Interpret X1 X2 IF 5X1 + 2X2 > 0 OTHERWISE Savings Income X1 X2 X1 > 0.5 X2 > 0.5 Decision TreesLinear / Logistic Regression

- 7. Some models are harder to interpret Ensemble models (random forest, boosting, etc…) 1. Hard to understand the role of each feature 2. Usually comes with feature importance 3. Doesn’t tell us if feature affects decision positively or negatively Deep Neural Networks 1. No correlation between the input layer & output 2. Black-box

- 8. Should we use only simple model • Use simple models like Linear or Logessitic regressions , so that you can explain the results • Use complex models & use interpredicbility techinques to explain the res

- 9. Types of Interpretations ● Local: Explain how and why a specific prediction was made ○ Why did our model predict certain outcome for given a given customer ● Global: Explain how our model works overall ○ What features are important for our model and how do they impact the prediction ? ○ Feature Importance provides amplitude , not direction of impact

- 10. ELI5 & Permutation Importance • Can be used to interpret sklearn-like models • Works for both Regression & Classification models • Create nice visualisations for white-box models o Can be used for local and global interpretation • Implements Permutation Importance for black-box models (boosting) o Only global interpretation

- 11. ELI5 – White-box Models Explain model globally (feature importance): import eli5 eli5.show_weights(model)

- 12. ELI5 – White-box Models Explain model Locally import eli5 eli5.show_prediction(model, observation)

- 13. ELI5 – Permutation Importance • Model Agnostic • Provides global interpretation • For each feature: o Shuffle values in the provided dataset o Generate predictions using the model on the modified dataset o Compute the decrease in accuracy vs before shuffling

- 14. ELI5 – Permutation Importance perm = PermutationImportance(model) perm.fit(X, y) eli5.show_weights(perm)

- 15. LIME - Local Interpretable Model-Agnostic Explanations Local: Explains why a single data point was classified as a specific class Model-agnostic: Treats the model as a black-box. Doesn’t need to know how it makes predictions Paper “Why should I trust you?” published in August 2016. "Why Should I Trust You?": Explaining the Predictions of Any Classifier Marco Tulio Ribeiro, Sameer Singh, Carlos Guestrin

- 16. Being Model Agnostic No assumptions about the internal structure… X1 >0.5 f Explain any existing, or future, model Data Decision F(x )

- 17. Being Local & Model-Agnostic Source: https://www.youtube.com/watch?v=LAm4QmVaf0E&t=3658s “Global” explanation is too complicated

- 18. Being Local & Model-Agnostic Source: https://www.youtube.com/watch?v=LAm4QmVaf0E&t=3658s “Global” explanation is too complicated

- 19. Being Local & Model-Agnostic Source: https://www.youtube.com/watch?v=LAm4QmVaf0E&t=3658s “Global” explanation is too complicated Explanation is an interpretable model, that is locally accurate

- 20. LIME - How does it work? "Why Should I Trust You?": Explaining the Predictions of Any Classifier Marco Tulio Ribeiro, Sameer Singh, Carlos Guestrin

- 21. How LIME Works 1. Permute data -Create new dataset around observation by sampling from distribution learnt on training data (In the Perturbed dataset for Numerical features are picked based on the distribution & categorical values based on the occurrence) 2. Calculate distance between permutations and original observations 3. Use model to predict probability on new points, that’s our new y 4. Pick m features best describing the complex model outcome from the permuted data 5. Fit a linear model on data in m dimensions weighted by similarity

- 22. ● Central plot shows importance of the top features for this prediction ○ Value corresponds to the weight of the linear model ○ Direction corresponds to whether it pushes in one way or the other ● Numerical features are discretized into bins ○ Easier to interpret: weight == contribution / sign of weight == direction How LIME Works

- 23. "Why Should I Trust You?": Explaining the Predictions of Any Classifier Marco Tulio Ribeiro, Sameer Singh, Carlos Guestrin LIME - Canbe used on images

- 24. Lime supports many types of data: ● Tabular Explainer ● Recurrent Tabular Explainer ● Image Explainer ● Text Explainer LIME Available Explainers

- 25. Shapley Additive Explanations(SHAP) ● Anexplanation model is a simpler model that is a good approximation of our complex model. ● “Additive feature attribution methods”: the local explanation is a linear combination of the features. Dataset Complex Model Prediction Explainer Explanation

- 26. ShapleyAdditive Explanations(SHAP) ● For a given observation, we compute a SHAP value per feature, such that: sum(SHAP_values_for_obs) = prediction_for_obs - model_expected_value ● Model’s expected value is the average prediction made by our model

- 27. How much has each feature contributed to the prediction compared to the average prediction House price Prediction 400,000 € Average house price prediction for all the apartments 420,000 € Delta in Price -20,000 €

- 28. How much has each feature contributed to the prediction compared to the average prediction Park Contributed + 10k Cats Contributed -50k Secure Neighbourhood Contributed + 30k Paint Contributed -10k

- 29. Shapley Additive Explanations(SHAP) For the model agnostic explainer, SHAP leverages Shapley values from Game Theory. To get the importance of feature X{i}: ● Get all subsets of features S that do not contain X{i} ● Compute the effect on our predictions of adding X{i} to all those subsets ● Aggregate all contributions to compute marginal contribution of the feature

- 30. Shapley Additive Explanations (SHAP) ● TreeExplainer - For tree based models . Works with scikit- learn, xgboost, lightgbm, catboost ● DeepExplainer - For Deep Learning models ● KernelExplainer - Model agnostic explainer

- 31. SHAP - Local Interpretation ● Base value is the expected value of your model ● Output value is what your model predicted for this observation ● In red are the positive SHAP values, the features that contribute positively to the outcome ● In blue the negative SHAP values, features that contribute negatively to the outcome

- 32. SHAP - Global Interpretation Although SHAP values are local, by plotting all of them at once we can learn a lot about our model globally

- 33. SHAP-TreeExplainerAPI 1. Create a new explainer, with our model as argument explainer = TreeExplainer(my_tree_model) 2. Calculate shap_values from our model using some observations shap_values = explainer.shap_values(observations) 3. Use SHAP visualisation functions with our shap_values shap.force_plot(base_value, shap_values[0]) # local explanation shap.summary_plot(shap_values) # Global features importance

- 34. Microsoft IntepretML Glassbox - Models Blackbox Explanations Interpredibility Approaches https://github.com/interpretml/interpret

- 35. Glassbox – Models Models designed to be interpretable. Lossesless explainablity . Linear Regression Decision Models Rule Lists Explainable Boosting Machines (EBM) ……..

- 36. Explainable Boosting Machines (EBM) 1. EBM preserves interpredibulty of a linear model 2. Matches Accuracy of powerfull blackbox models – Xgboost , Random Forest 3. Light in deployment 4. Slow in fitting on data from interpret.glassbox import * from interpret import show ebm =ExplainableBoostingClassifier() ebm.fit(X_train, y_train) ebm_global = ebm.explain_global() show(ebm_global) ebm_local = ebm.explain_local(X_test[:5], y_test[:5], show(ebm_local)

- 37. Blackbox – Explanations Model Perturb Inputs Analyse Explanations SHAP LIME Partial Dependence Senstive Analysis

- 38. Questions. ?