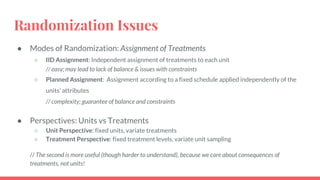

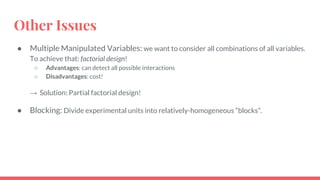

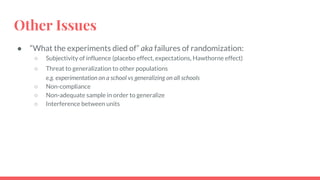

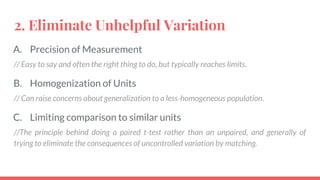

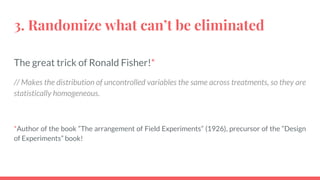

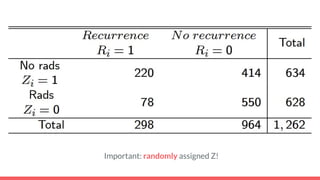

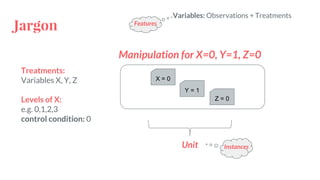

The document discusses experimental causal inference and key concepts in experimental design. It defines causal inference as answering causal questions from data, and experimental causal inference as doing so with experiments rather than observational data. The basic ideas of experimental design are outlined as maximizing useful variation, eliminating unhelpful variation, and randomizing whatever cannot be eliminated. Randomization is described as the key to making treatment groups statistically equivalent. Open issues it raises include the type of randomization, the choice of treatment levels, and further challenges such as multiple variables, blocking, and the limitations of randomization.
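As a rough illustration of why randomization makes treatment groups statistically equivalent, here is a minimal Python sketch assuming NumPy. It is not taken from the slides: the helpers `complete_randomization` and `difference_in_means`, the covariate `age`, and the true effect of 2.0 are all hypothetical. It treats exactly half of the units at random, checks that a pre-treatment covariate is balanced across groups, and estimates the simulated effect by a difference in means.

```python
import numpy as np

def complete_randomization(n_units, n_treated, rng):
    """Hypothetical helper: treat exactly n_treated units chosen uniformly at random."""
    assignment = np.zeros(n_units, dtype=int)
    treated = rng.choice(n_units, size=n_treated, replace=False)
    assignment[treated] = 1
    return assignment

def difference_in_means(values, assignment):
    """Mean among treated units minus mean among control units."""
    return values[assignment == 1].mean() - values[assignment == 0].mean()

rng = np.random.default_rng(0)
n = 10_000

# Hypothetical pre-treatment covariate that also affects the outcome.
age = rng.normal(40.0, 10.0, size=n)

x = complete_randomization(n, n // 2, rng)

# Balance check: the assignment never looks at age, so any gap is pure chance.
age_gap = difference_in_means(age, x)

# Simulated outcome with an assumed true treatment effect of 2.0.
y = 0.1 * age + 2.0 * x + rng.normal(size=n)
effect_estimate = difference_in_means(y, x)

print(f"age gap: {age_gap:.3f}, estimated effect: {effect_estimate:.3f}")
```

Because the assignment is independent of `age` (and of anything else about the units), the remaining imbalance is random and shrinks as `n` grows; this is the sense in which randomized groups are equivalent in expectation.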

![Randomization & Linear Models](https://image.slidesharecdn.com/experimentalcausalinference-161115204924/85/Experimental-Causal-Inference-18-320.jpg)

In all of the cases below, linear models (e.g., linear regression) can suffice to estimate the expected causal effects, either entirely or under certain conditions.

- Randomize one treatment
  - Binary values: the coefficient on $X$ is $E[Y \mid X=1] - E[Y \mid X=0]$.
  - Discrete values: the coefficients on $X$ are $E[Y \mid X=x] - E[Y \mid X=0]$ for every level $x$.
- Randomize multiple treatments: $E[Y \mid \mathrm{do}(X=x, Z=z)] = \mu + f_X(x) + f_Z(z) + f_{XZ}(x, z)$ (only if the levels of $X$ and $Z$ are discrete).
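The three cases on the slide can be checked numerically. This is a minimal sketch assuming NumPy and simulated data with made-up effect sizes; the helper `ols` is hypothetical and simply wraps `numpy.linalg.lstsq`. For the multi-treatment case it assumes binary $X$ and $Z$ with treatment (corner-point) coding, so that $f_X(0) = f_Z(0) = 0$ and $f_{XZ}$ is nonzero only at $(1, 1)$.

```python
import numpy as np

def ols(design, y):
    """Hypothetical helper: ordinary least squares via numpy.linalg.lstsq."""
    coef, *_ = np.linalg.lstsq(design, y, rcond=None)
    return coef

rng = np.random.default_rng(1)
n = 3000

# Case 1: one randomized binary treatment X in {0, 1}.
x = rng.integers(0, 2, size=n)
y = 1.0 + 0.5 * x + rng.normal(size=n)
beta = ols(np.column_stack([np.ones(n), x]), y)
diff_means = y[x == 1].mean() - y[x == 0].mean()
# The slope equals E[Y|X=1] - E[Y|X=0] in the sample (up to rounding).
print("binary:", beta[1], diff_means)

# Case 2: one randomized discrete treatment with levels {0, 1, 2},
# dummy-coded against the baseline level 0.
levels = rng.integers(0, 3, size=n)
y2 = np.array([0.0, 0.3, 1.2])[levels] + rng.normal(size=n)
dummies = [(levels == k).astype(float) for k in (1, 2)]
beta2 = ols(np.column_stack([np.ones(n)] + dummies), y2)
for k in (1, 2):
    # Each dummy coefficient equals E[Y|X=k] - E[Y|X=0] in the sample.
    print("discrete:", k, beta2[k], y2[levels == k].mean() - y2[levels == 0].mean())

# Case 3: two randomized binary treatments X and Z with an interaction term,
# i.e. mu + f_X(x) + f_Z(z) + f_XZ(x, z) under treatment coding.
xb = rng.integers(0, 2, size=n)
zb = rng.integers(0, 2, size=n)
y3 = 1.0 + 0.5 * xb - 0.2 * zb + 0.4 * xb * zb + rng.normal(size=n)
mu, f_x, f_z, f_xz = ols(np.column_stack([np.ones(n), xb, zb, xb * zb]), y3)

# The model is saturated (4 parameters, 4 cells), so it reproduces every cell mean.
cell_means = {
    (a, b): y3[(xb == a) & (zb == b)].mean() for a in (0, 1) for b in (0, 1)
}
for (a, b), m in cell_means.items():
    print("cell", (a, b), mu + f_x * a + f_z * b + f_xz * a * b, m)
```

With discrete levels, the dummy-plus-interaction model has one parameter per cell, which is why linear regression recovers each randomized cell mean exactly; with continuous treatment levels, a linear form for $f_X$ and $f_Z$ would be an additional modeling assumption.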