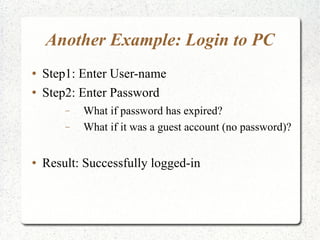

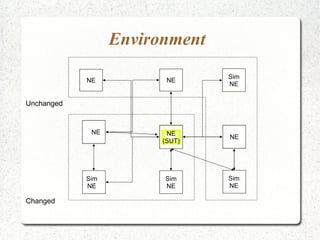

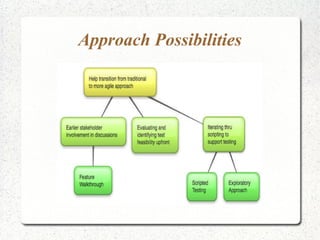

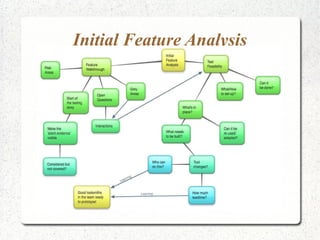

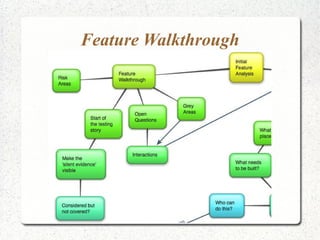

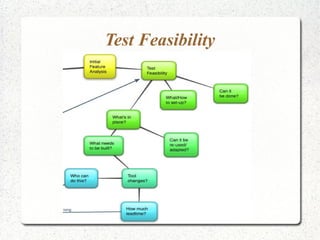

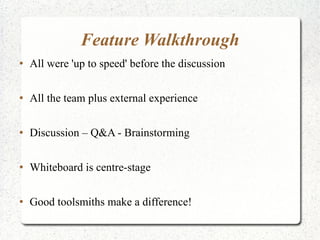

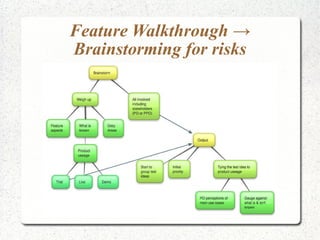

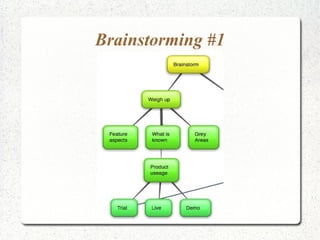

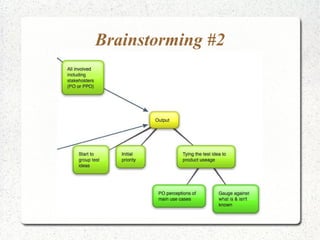

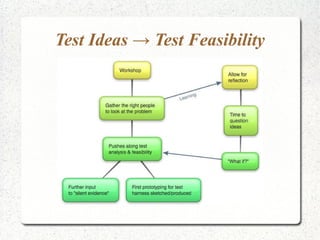

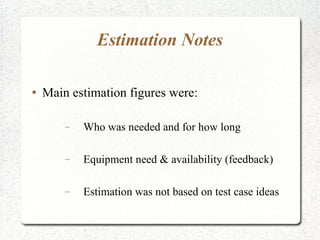

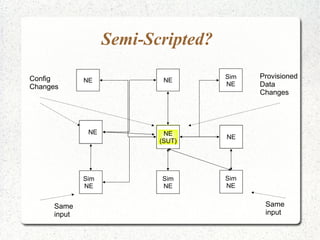

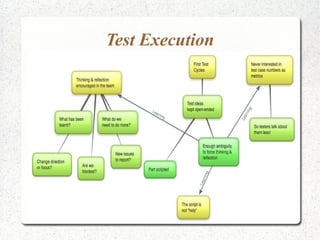

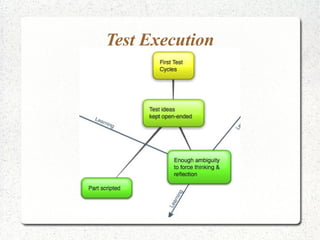

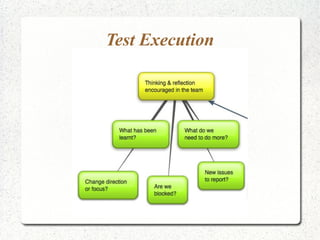

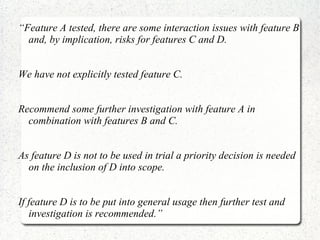

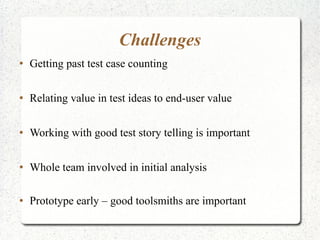

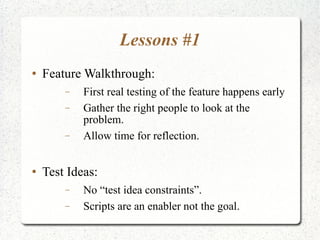

The document discusses experiences with combining semi-scripted and exploratory testing approaches. It describes a case in which short timelines and complex environments had typically pushed teams toward traditional scripted testing. A semi-scripted approach was used instead: feature walkthroughs, brainstorming of test ideas, and execution with some predefined setup but freedom for exploratory testing. As a result, the teams came to value investigative testing over scripted test cases and numbers, found more issues, and provided better information to stakeholders.