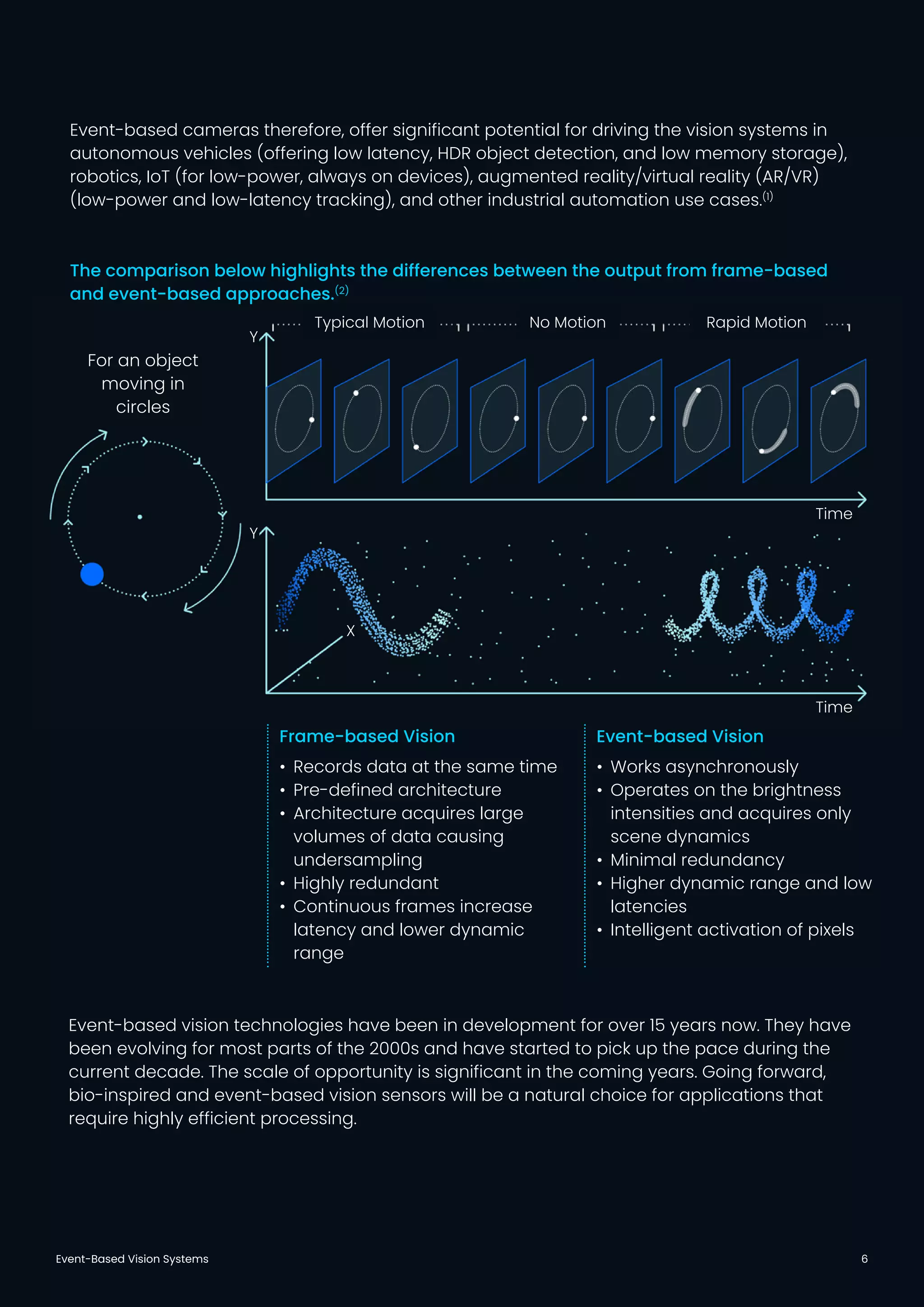

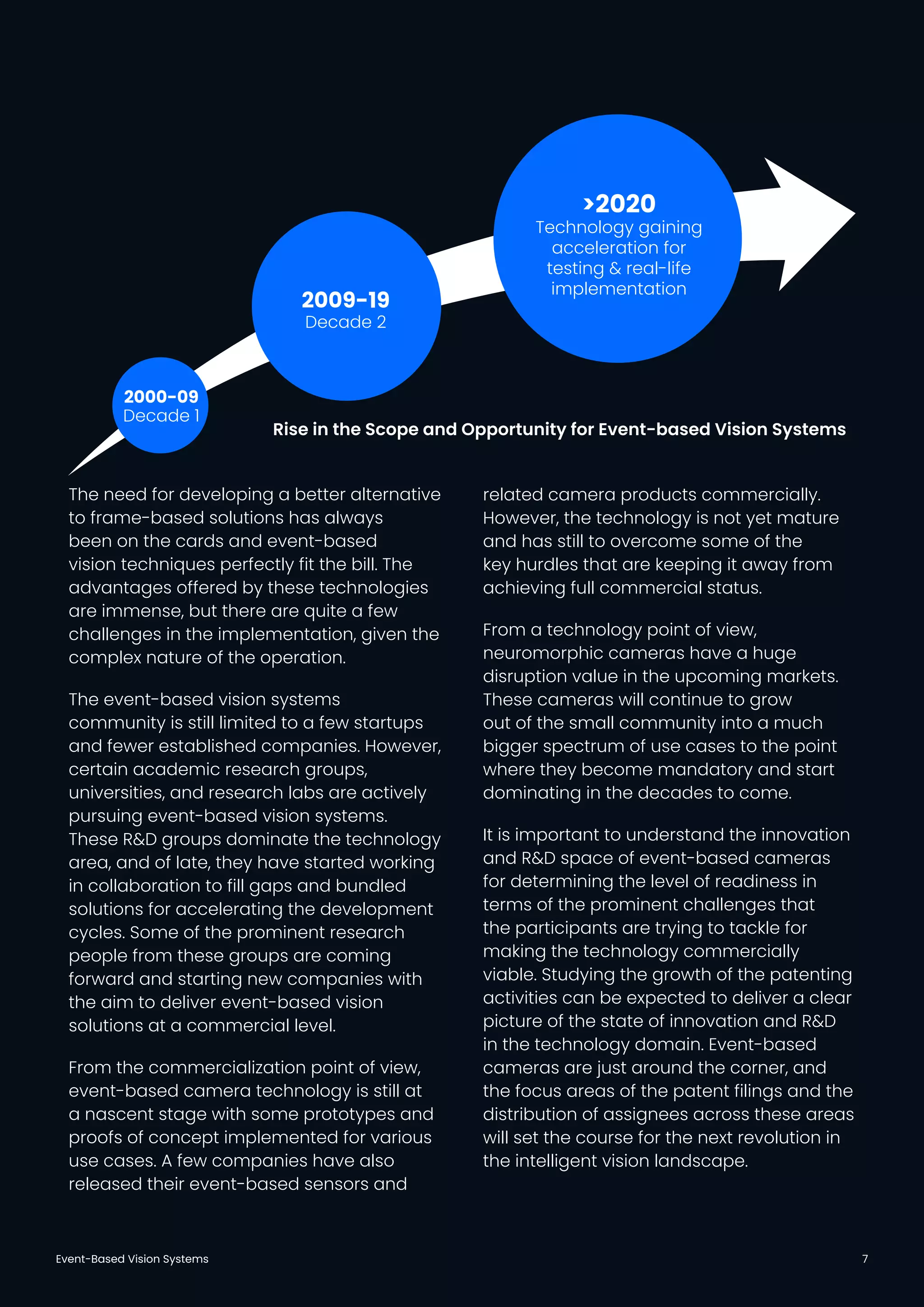

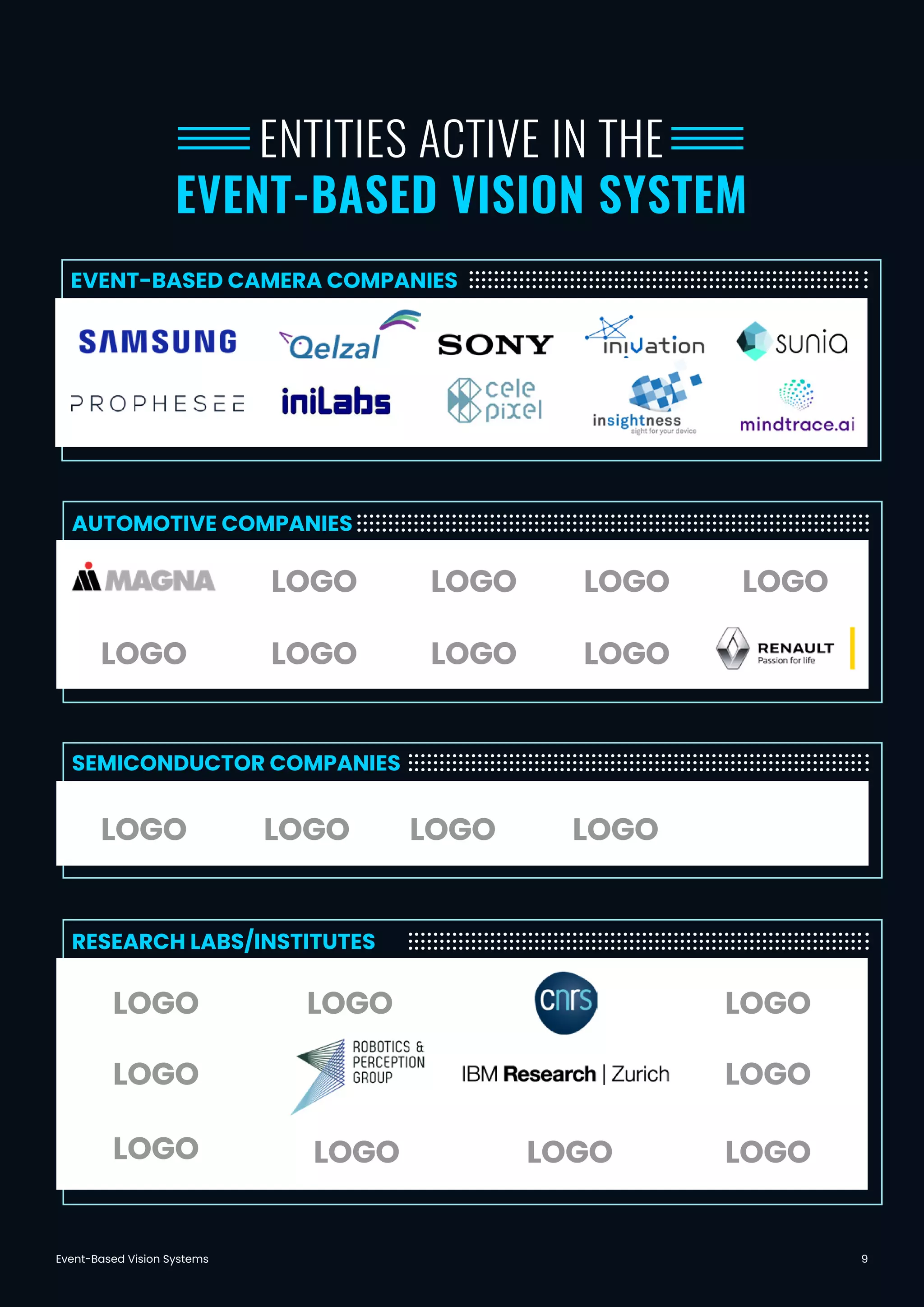

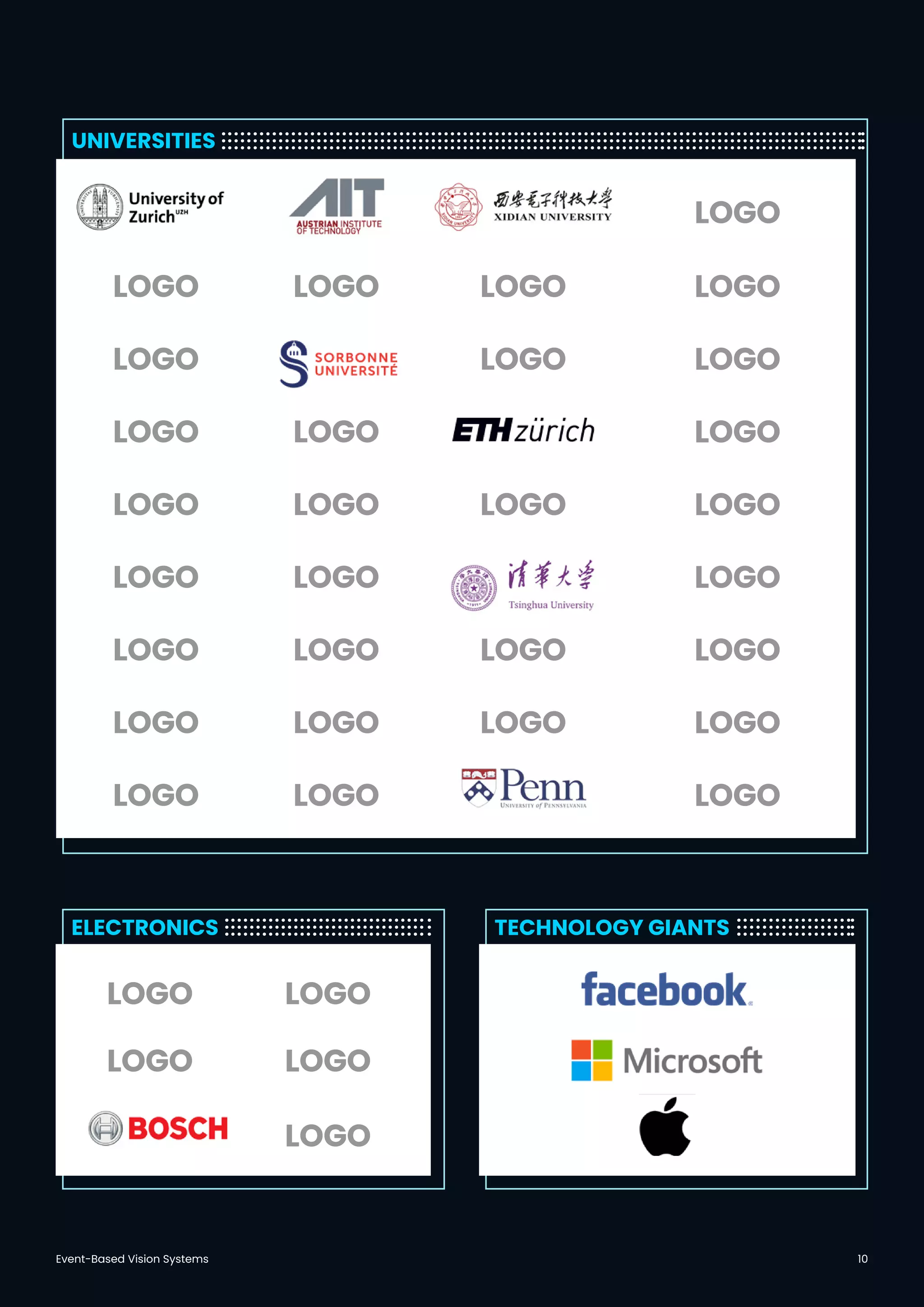

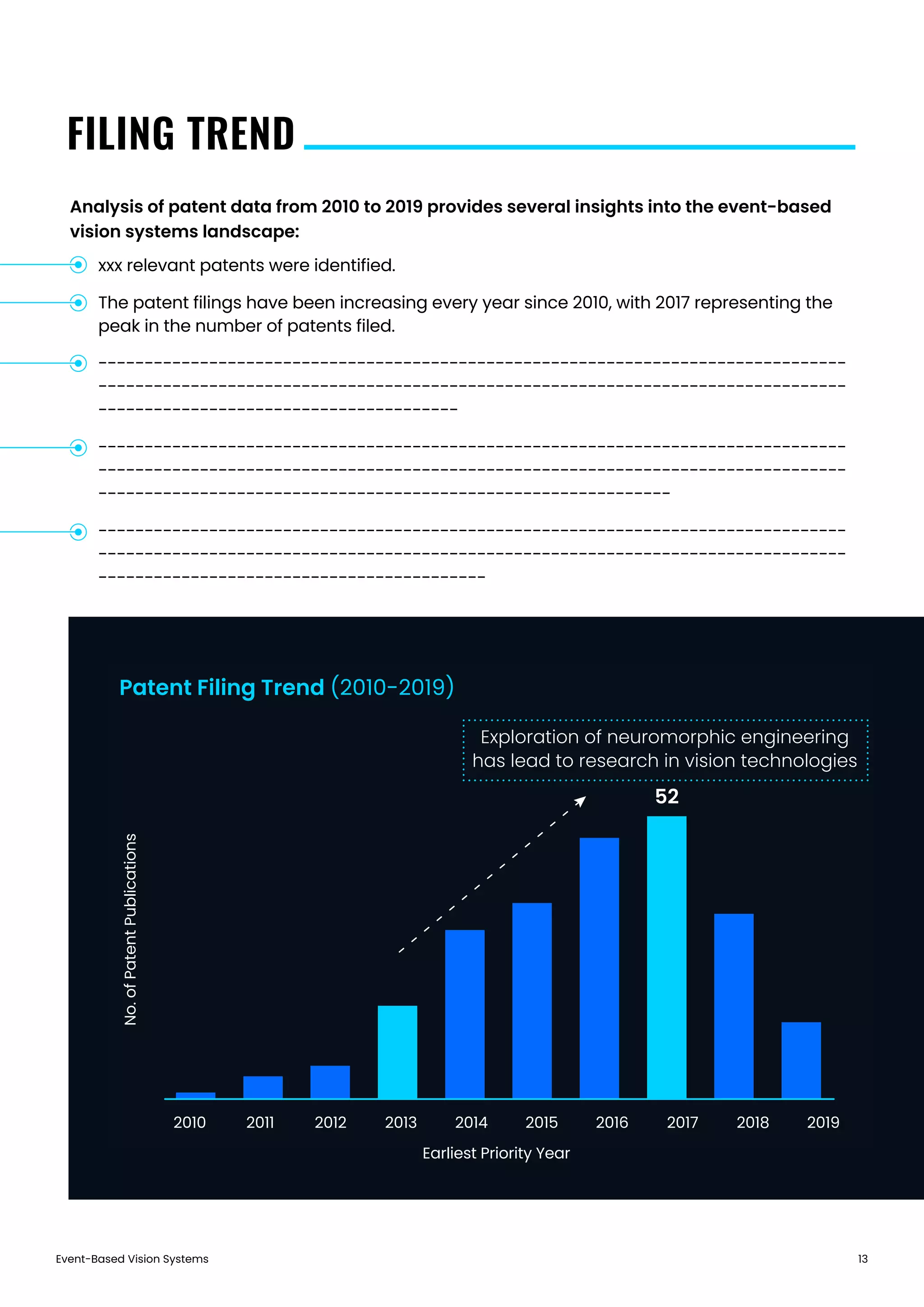

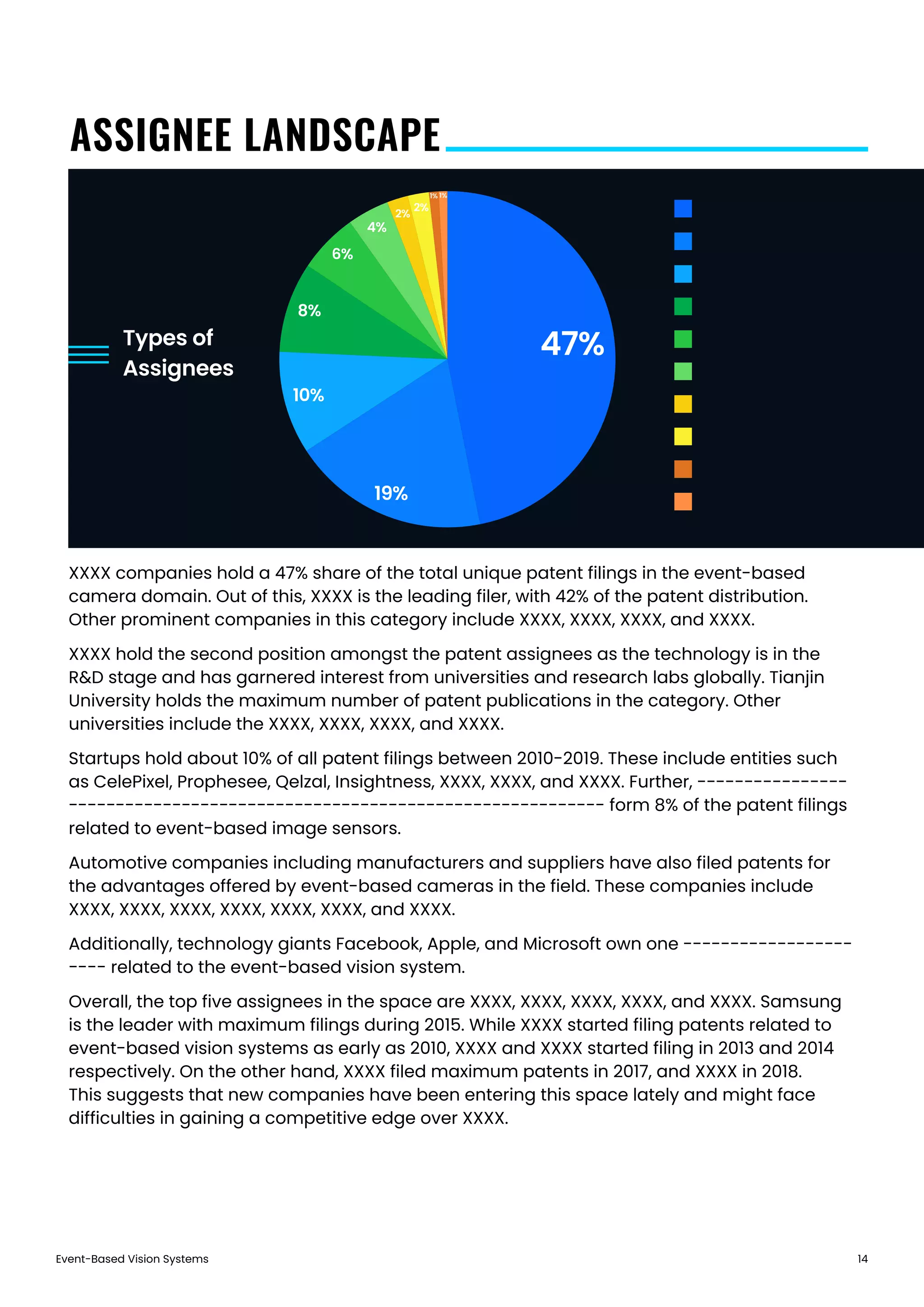

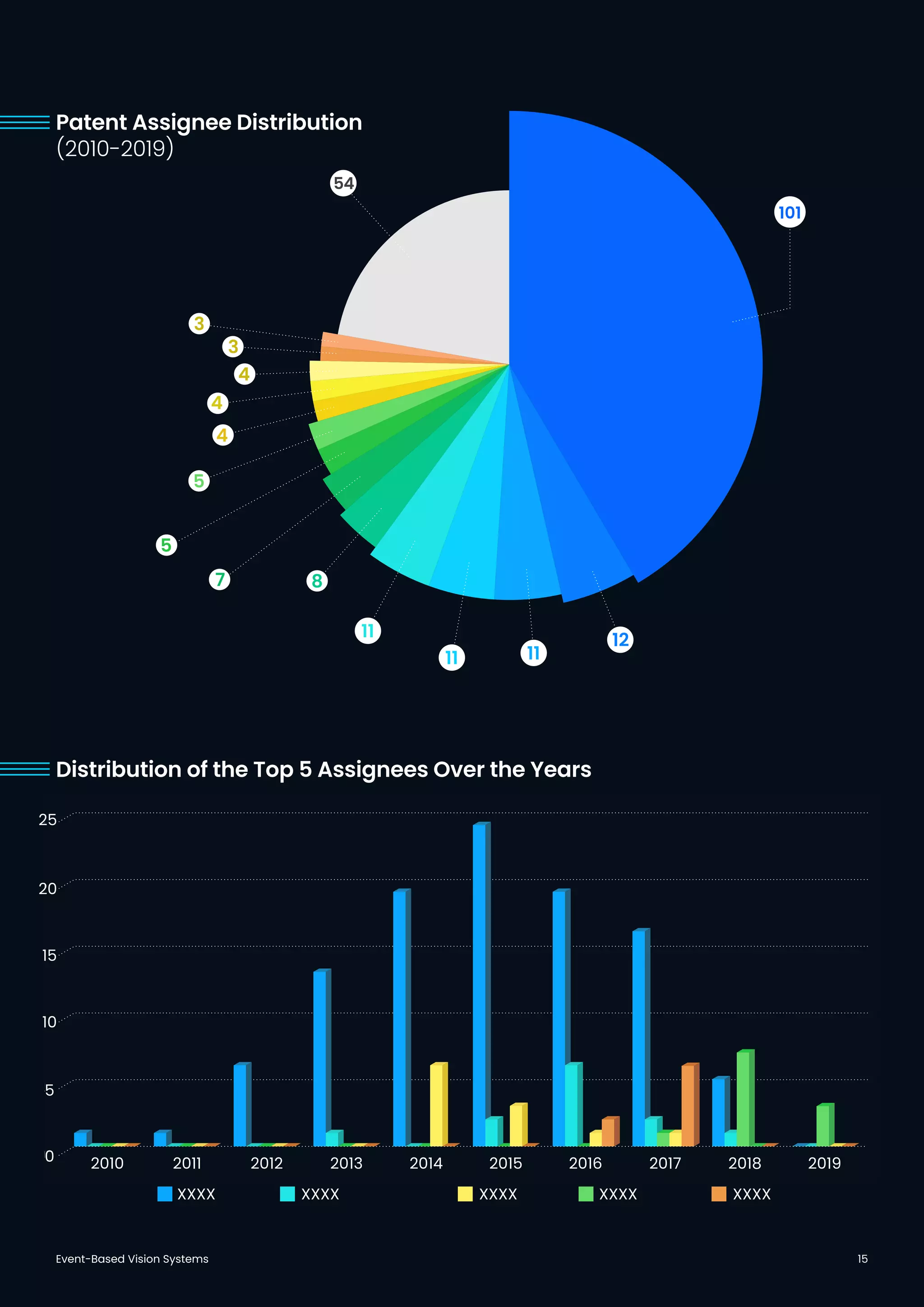

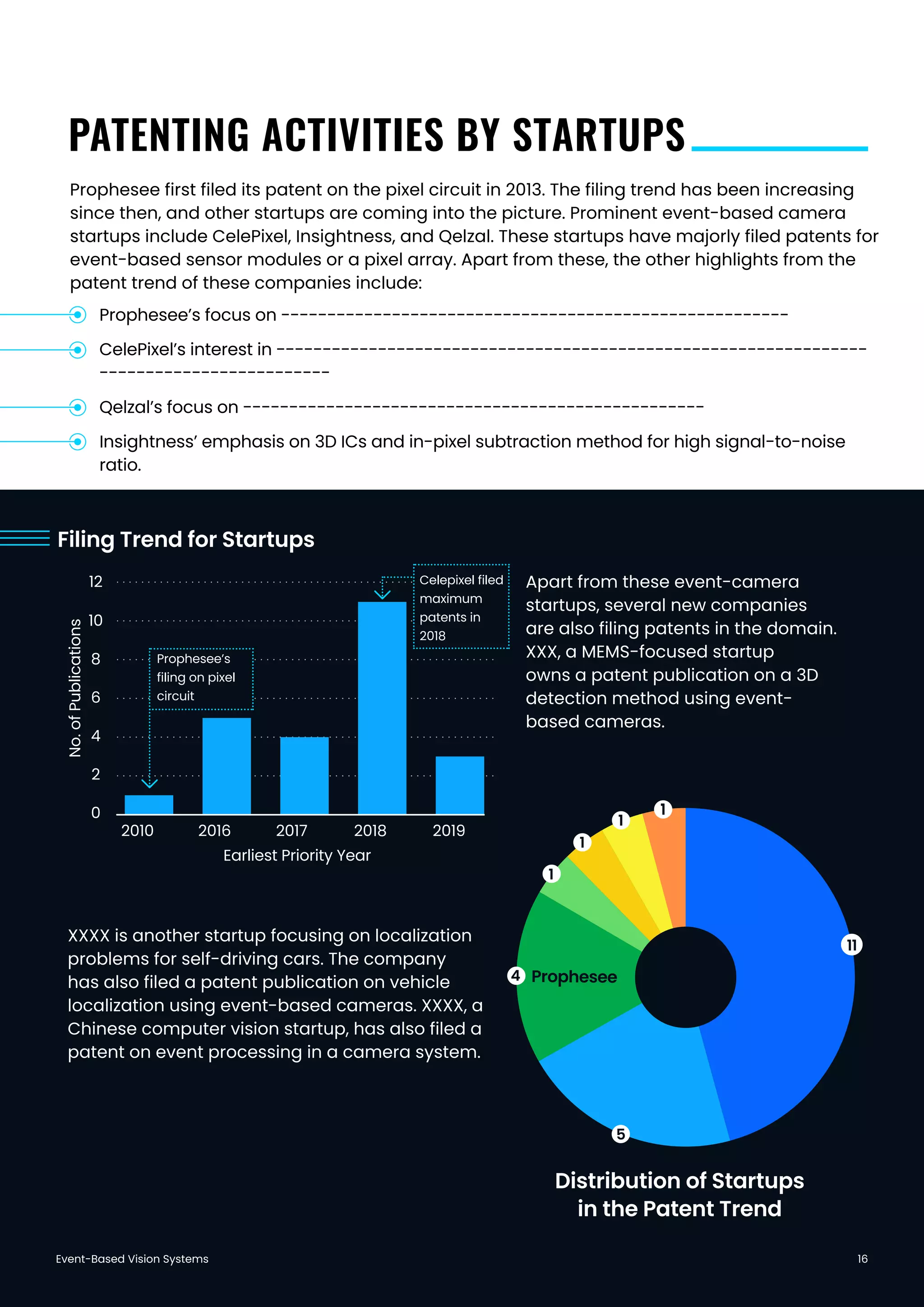

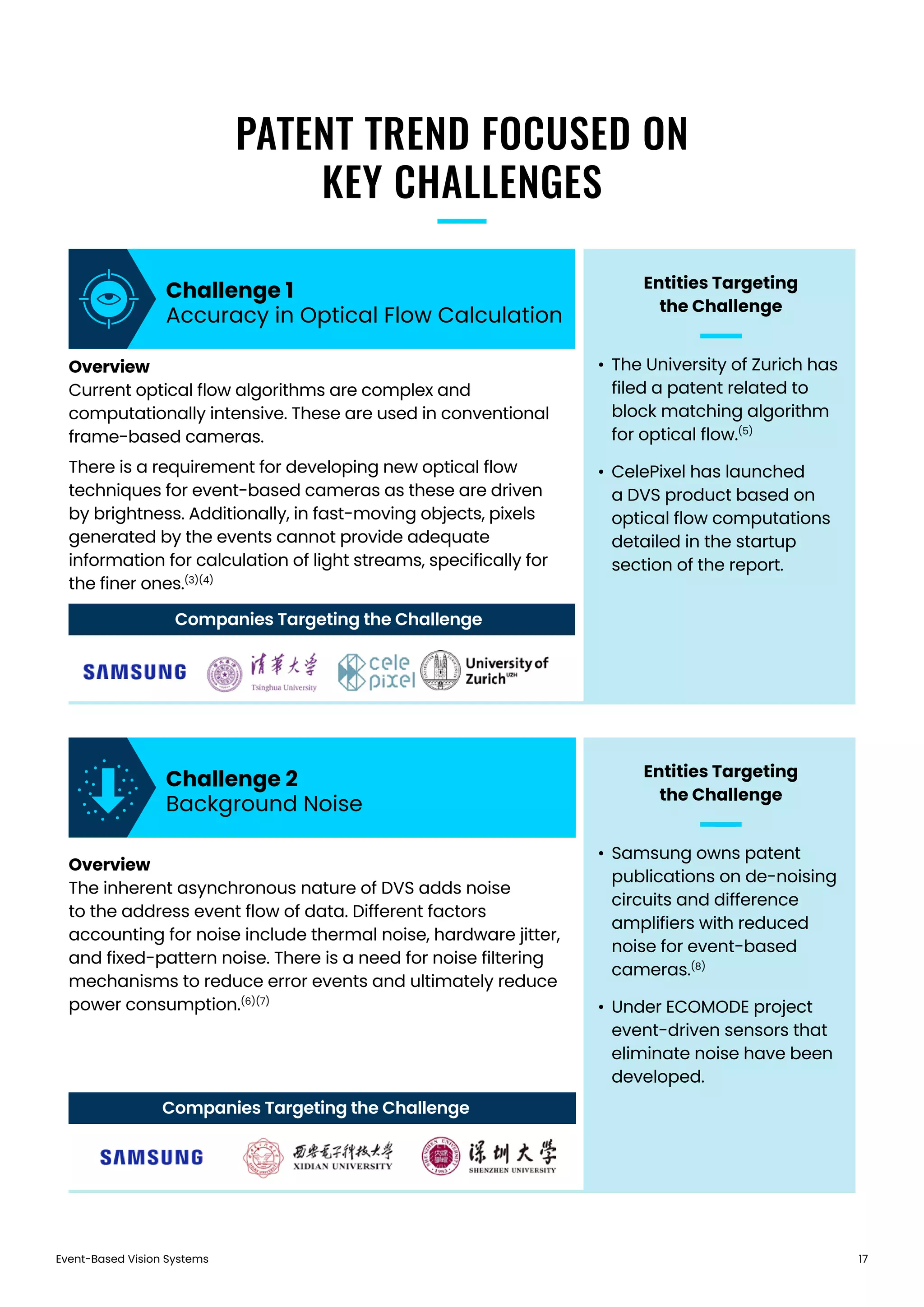

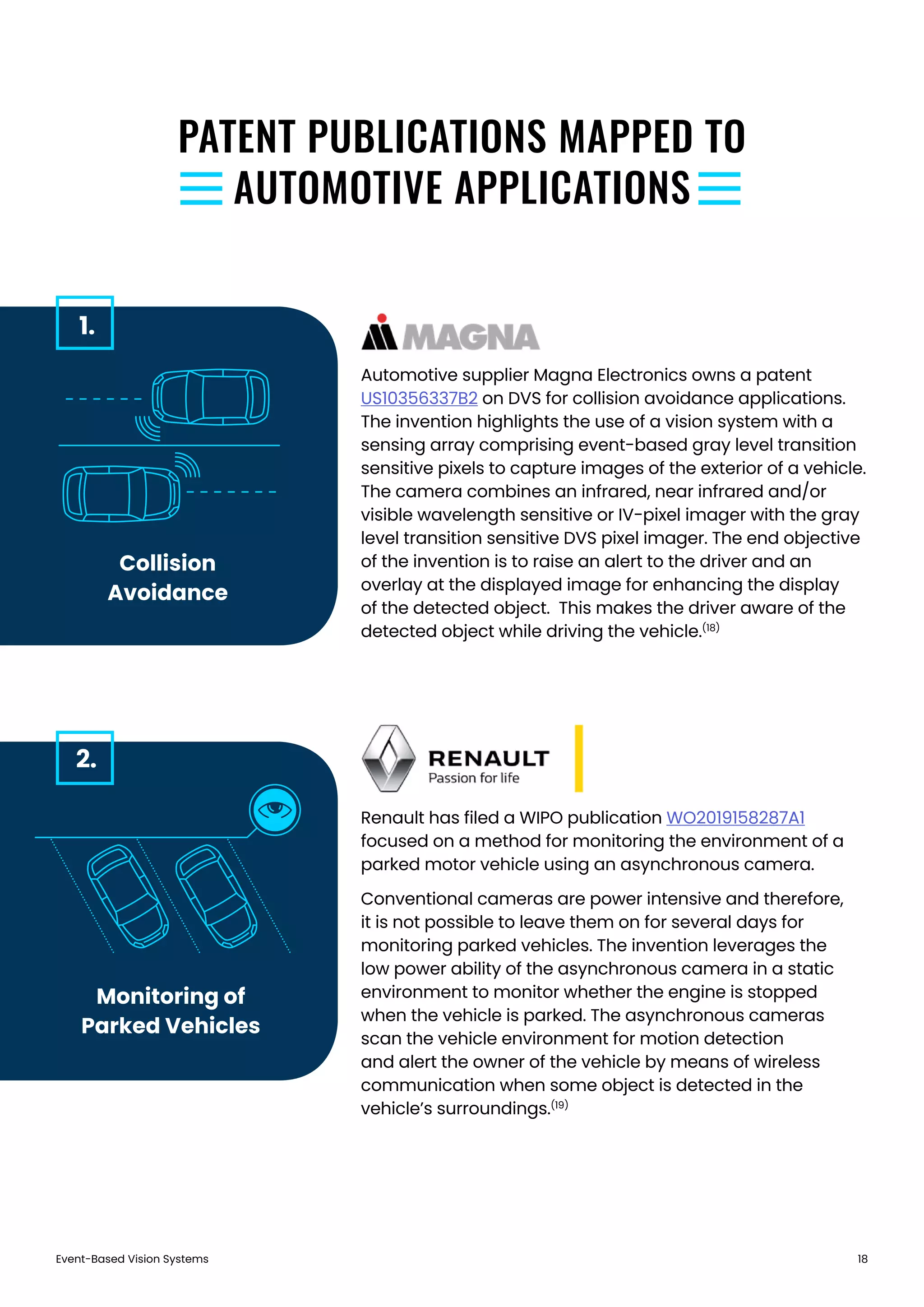

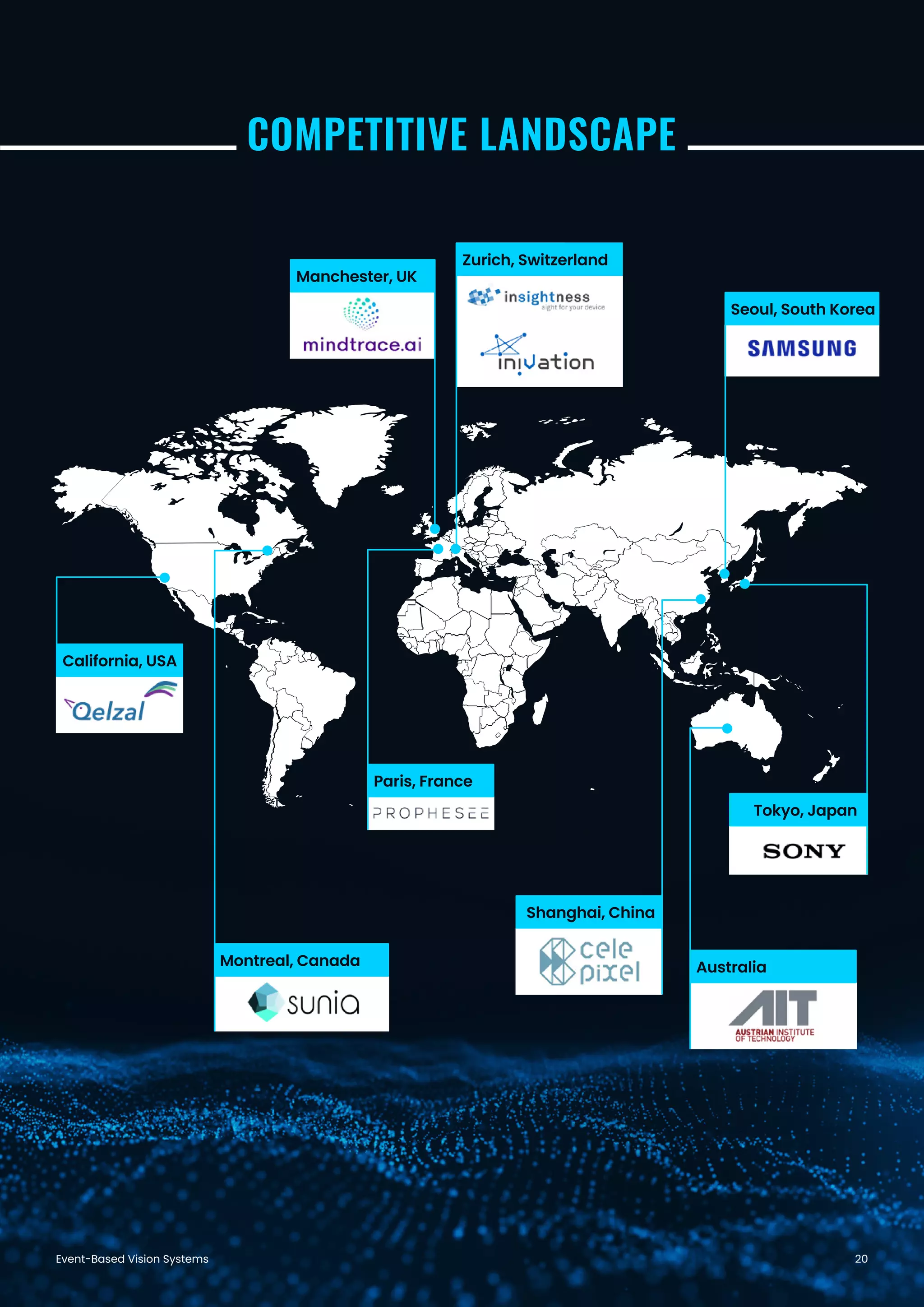

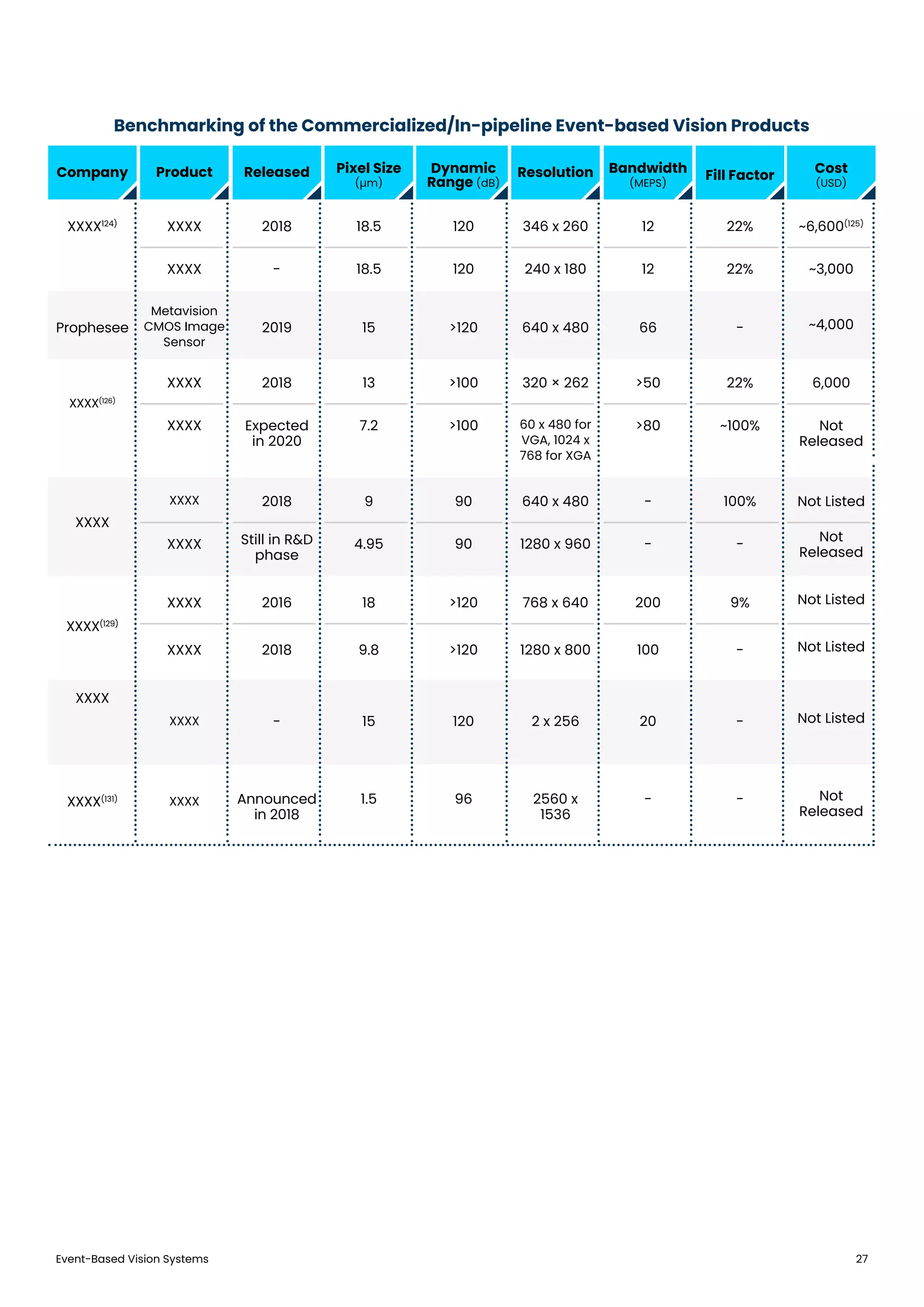

The document analyzes event-based vision systems and highlights their advantages over traditional frame-based imaging, including reduced latency and lower power consumption. It covers patent trends, startup activity, and key development challenges, and emphasizes potential applications in autonomous vehicles, robotics, and industrial automation. The study concludes that event-based camera technology is still in its infancy, but that significant opportunities are emerging in the field.