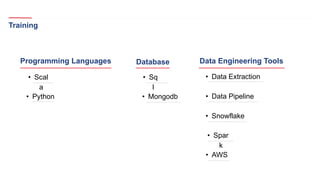

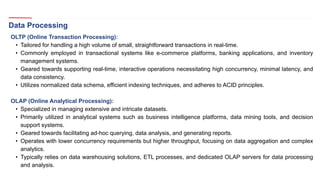

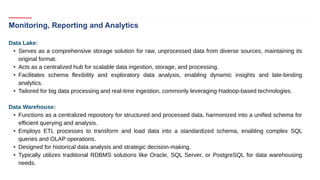

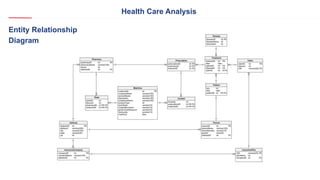

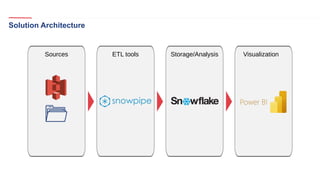

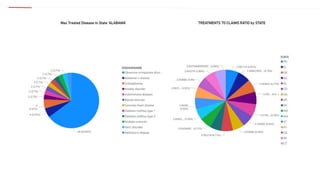

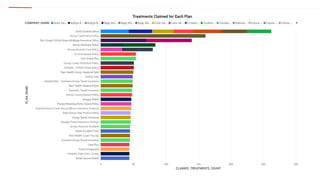

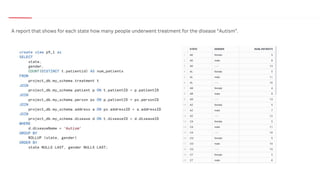

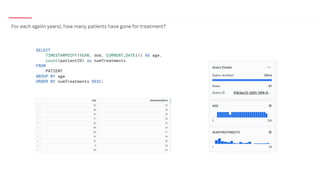

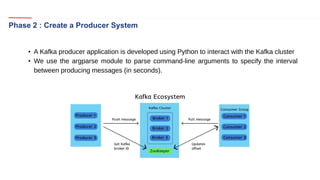

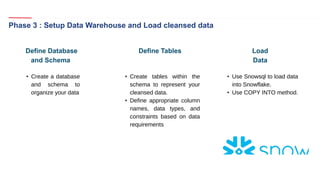

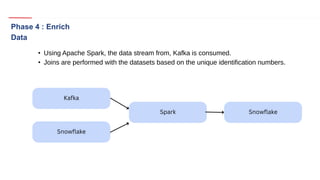

The document outlines a healthcare and telecom revenue analysis project centered on data engineering practices, including extract, transform, load (ETL) processes built with tools such as Python, Snowflake, and Apache Spark. It details the workflow for analyzing customer behavior and deriving financial insights, using technologies for real-time data processing and analytics. It also highlights a comprehensive approach to data management, emphasizing monitoring and reporting capabilities that support decision-making across industries.
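
To make the ETL flow mentioned above concrete, here is a minimal sketch in plain Python. It is not the project's actual pipeline: the sample data, the column names (`customer_id`, `segment`, `amount`), the `revenue` table, and all function names are hypothetical. An in-memory SQLite database stands in for a warehouse such as Snowflake so that the example runs without external services.

```python
import csv
import io
import sqlite3

# Hypothetical sample data standing in for an extracted billing export.
RAW_CSV = """customer_id,segment,amount
c1,telecom,120.50
c2,healthcare,300.00
c1,telecom,79.50
c3,healthcare,
"""


def extract(text):
    """Extract: parse CSV text into a list of dict rows."""
    return list(csv.DictReader(io.StringIO(text)))


def transform(rows):
    """Transform: drop rows with a missing amount and cast amounts to float."""
    cleaned = []
    for row in rows:
        if not row["amount"]:
            continue  # skip incomplete records
        cleaned.append((row["customer_id"], row["segment"], float(row["amount"])))
    return cleaned


def load(conn, records):
    """Load: write cleaned records into a table (SQLite as a stand-in warehouse)."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS revenue "
        "(customer_id TEXT, segment TEXT, amount REAL)"
    )
    conn.executemany("INSERT INTO revenue VALUES (?, ?, ?)", records)
    conn.commit()


def revenue_by_segment(conn):
    """Report: total revenue per segment, as a dict."""
    cur = conn.execute(
        "SELECT segment, SUM(amount) FROM revenue GROUP BY segment ORDER BY segment"
    )
    return dict(cur.fetchall())


conn = sqlite3.connect(":memory:")
load(conn, transform(extract(RAW_CSV)))
totals = revenue_by_segment(conn)
print(totals)
```

Keeping the extract, transform, and load steps in separate functions means each stage can be tested on its own and replaced later, for example by swapping the SQLite connection for a real warehouse client or the transform step for a Spark job.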