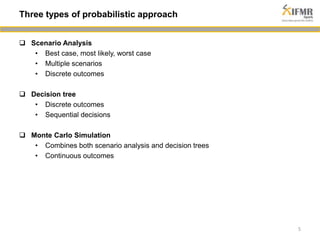

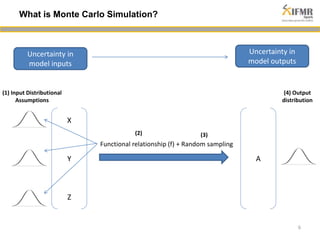

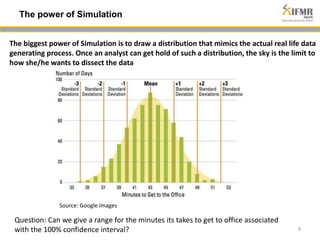

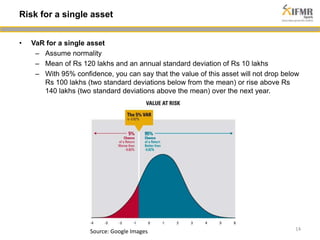

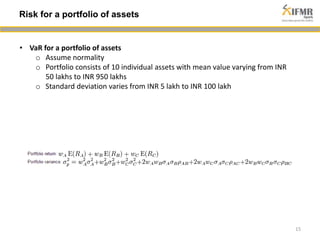

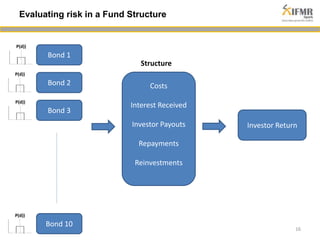

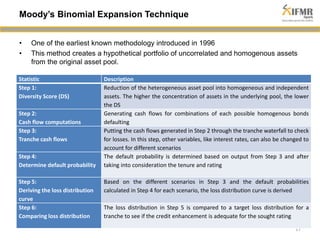

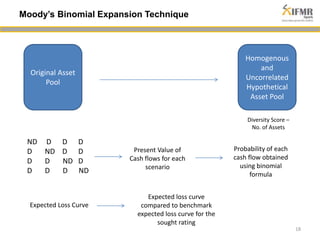

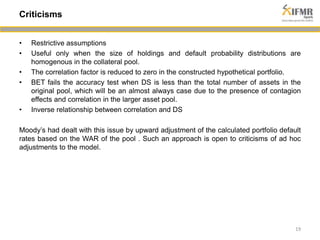

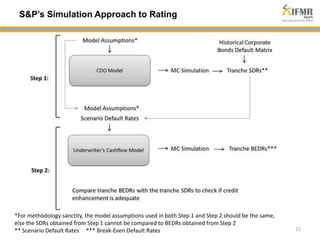

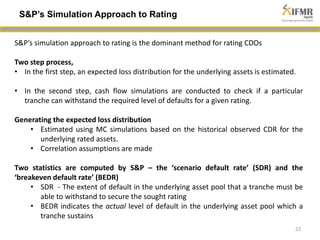

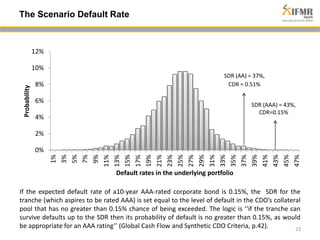

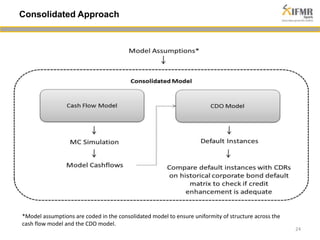

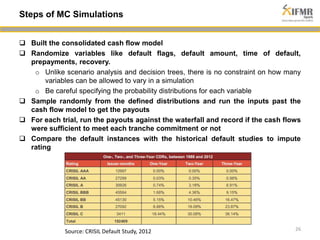

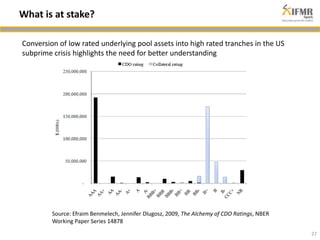

The document discusses methodologies for estimating default risk in fund structures, primarily Monte Carlo (MC) simulation, scenario analysis, and decision trees. It highlights the advantages of simulation for handling uncertainty and assessing risk, and the specific techniques used to evaluate asset portfolios and collateralized debt obligations (CDOs). It also addresses the limitations and criticisms of these methods, and emphasizes that simulation results depend on accurate data and assumptions.

![Slide 4: Approach to Uncertainty](https://image.slidesharecdn.com/estimatingdefaultriskinfundstructures-141030031050-conversion-gate02/85/Estimating-default-risk-in-fund-structures-4-320.jpg)

Approach to Uncertainty

Non-probabilistic

• Point estimate: one value taken as the "best guess" for the population parameter

• E.g. the sample mean is a point estimate of the population mean

Probabilistic

• Interval estimate: a range of values that is likely to contain the population parameter

• E.g. the population mean lies within [a, b] with 95% confidence (i.e. a confidence interval)

Sample → statistic; population → parameter.
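The contrast between a point estimate and an interval estimate can be illustrated with a minimal Python sketch. The function names, the sample values, and the choice of a normal-approximation interval are assumptions made here for illustration; they do not come from the slides. For a small sample, a Student's t interval would usually be the more appropriate choice.

```python
import math
import statistics
from statistics import NormalDist


def point_estimate(sample):
    """Sample mean: a single 'best guess' for the population mean."""
    return statistics.mean(sample)


def interval_estimate(sample, confidence=0.95):
    """Normal-approximation confidence interval [a, b] for the population mean.

    Assumption: the sampling distribution of the mean is treated as
    approximately normal, so a z quantile is used rather than a t quantile.
    """
    n = len(sample)
    mean = statistics.mean(sample)
    # Standard error of the mean, using the sample standard deviation
    std_err = statistics.stdev(sample) / math.sqrt(n)
    # Two-sided quantile, e.g. the 97.5th percentile for 95% confidence
    z = NormalDist().inv_cdf(0.5 + confidence / 2)
    return mean - z * std_err, mean + z * std_err


# Hypothetical observations, made up purely for illustration
sample = [4.1, 3.8, 5.2, 4.7, 4.4, 3.9, 5.0, 4.6]

estimate = point_estimate(sample)
a, b = interval_estimate(sample)
print(f"point estimate: {estimate:.3f}")
print(f"95% interval:   [{a:.3f}, {b:.3f}]")
```

The point estimate is a statistic computed from the sample, while the interval expresses how uncertain that statistic is as a guess for the population parameter. Raising `confidence` widens the interval, and a larger sample narrows it.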