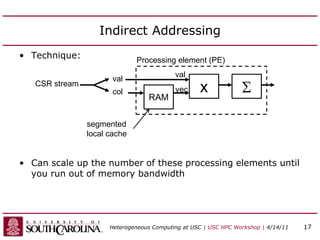

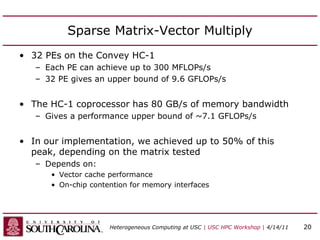

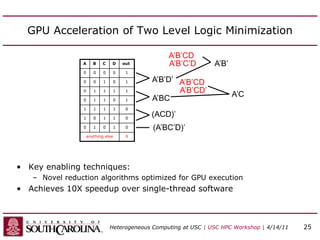

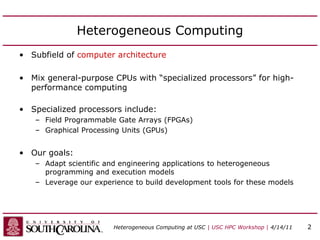

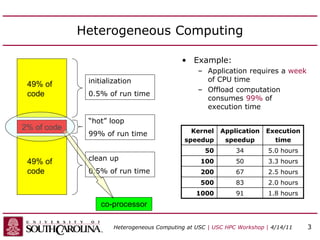

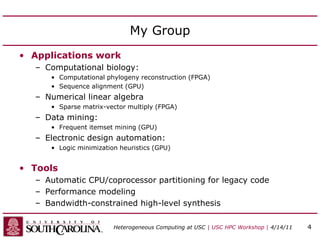

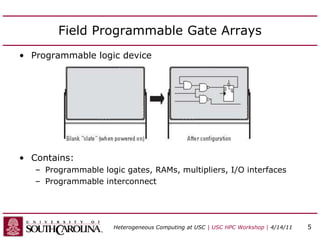

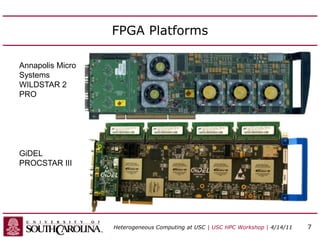

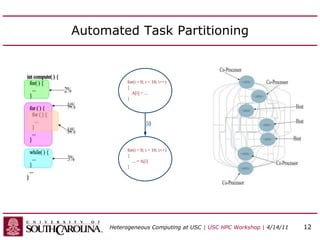

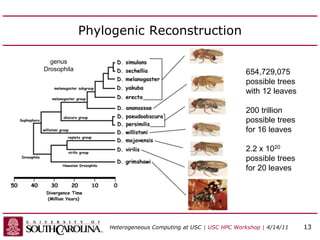

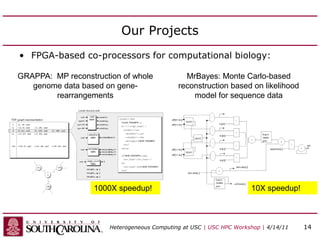

This document discusses heterogeneous computing research at the University of South Carolina. Heterogeneous computing combines general-purpose CPUs with specialized processors such as FPGAs and GPUs. The research group's goals are to adapt applications to heterogeneous computing models and to build development tools. Examples of applications accelerated with FPGAs and GPUs include computational biology algorithms, sparse matrix arithmetic, sequence alignment, and logic minimization.

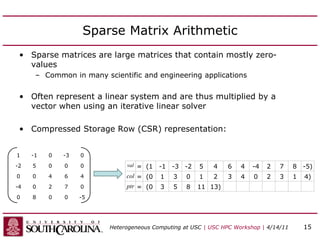

![Sparse Matrix-Vector Multiply](https://image.slidesharecdn.com/epscortalk2-220920024221-d368c578/85/epscor_talk_2-pptx-16-320.jpg)

Sparse Matrix-Vector Multiply

• Code for Ax = b

– A is the matrix, stored in `val`, `col`, `ptr`

    row = 0
    for i = 0 to number_of_nonzero_elements do
        if i = ptr[row+1] then row = row+1, b[row] = 0.0
        b[row] = b[row] + val[i] * x[col[i]]
    end

Slide callouts: the update of `b[row]` is a recurrence (reduction), and the read of `x[col[i]]` uses non-affine (indirect) indexing.

Heterogeneous Computing at USC | USC HPC Workshop | 4/14/11
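A minimal Python sketch of the slide's loop follows, assuming the usual compressed sparse row (CSR) layout: `val[i]` and `col[i]` hold the value and column of the i-th nonzero in row-major order, and `ptr` has one entry per row plus a final entry equal to the number of nonzeros. The function names `spmv_csr_flat` and `spmv_csr_rows` and the 4×4 example matrix are hypothetical, chosen for illustration. The flat version keeps the slide's single loop over the nonzeros, but it zeroes all of `b` up front and advances `row` with a `while` instead of an `if`, so the first row and rows with no nonzeros are handled. The row-wise version is the more common nested-loop formulation, included for comparison.

```python
def spmv_csr_flat(val, col, ptr, x):
    """b = A x with one flat loop over the nonzeros, as on the slide.

    Assumes CSR storage: val[i] and col[i] are the value and column of the
    i-th nonzero in row-major order, and ptr[r] is the index of the first
    nonzero of row r, with ptr[-1] == len(val).
    """
    n_rows = len(ptr) - 1
    b = [0.0] * n_rows              # zero every row up front, including row 0
    row = 0
    for i in range(len(val)):       # i = 0 .. nnz-1
        # Advance to the row that owns nonzero i; 'while' rather than 'if'
        # so that rows containing no nonzeros are skipped correctly.
        while i >= ptr[row + 1]:
            row += 1
        # Recurrence (reduction) on b[row]; indirect (non-affine) read of x.
        b[row] += val[i] * x[col[i]]
    return b


def spmv_csr_rows(val, col, ptr, x):
    """Conventional row-by-row CSR multiply, for comparison."""
    b = []
    for r in range(len(ptr) - 1):
        acc = 0.0
        for i in range(ptr[r], ptr[r + 1]):   # nonzeros of row r
            acc += val[i] * x[col[i]]
        b.append(acc)
    return b


# Hypothetical 4x4 example; row 1 has no nonzeros.
#     [[10, 0, 0, 2],
#      [ 0, 0, 0, 0],
#      [ 3, 9, 0, 0],
#      [ 0, 7, 8, 7]]
val = [10, 2, 3, 9, 7, 8, 7]
col = [0, 3, 0, 1, 1, 2, 3]
ptr = [0, 2, 2, 4, 7]
x = [1, 2, 3, 4]

print(spmv_csr_flat(val, col, ptr, x))  # [18.0, 0.0, 21.0, 66.0]
print(spmv_csr_rows(val, col, ptr, x))  # [18.0, 0.0, 21.0, 66.0]
```

In the flat version, consecutive iterations that fall in the same row update the same `b[row]`, which is the loop-carried recurrence the slide flags, and the address of `x[col[i]]` depends on data loaded at run time rather than being an affine function of `i`.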