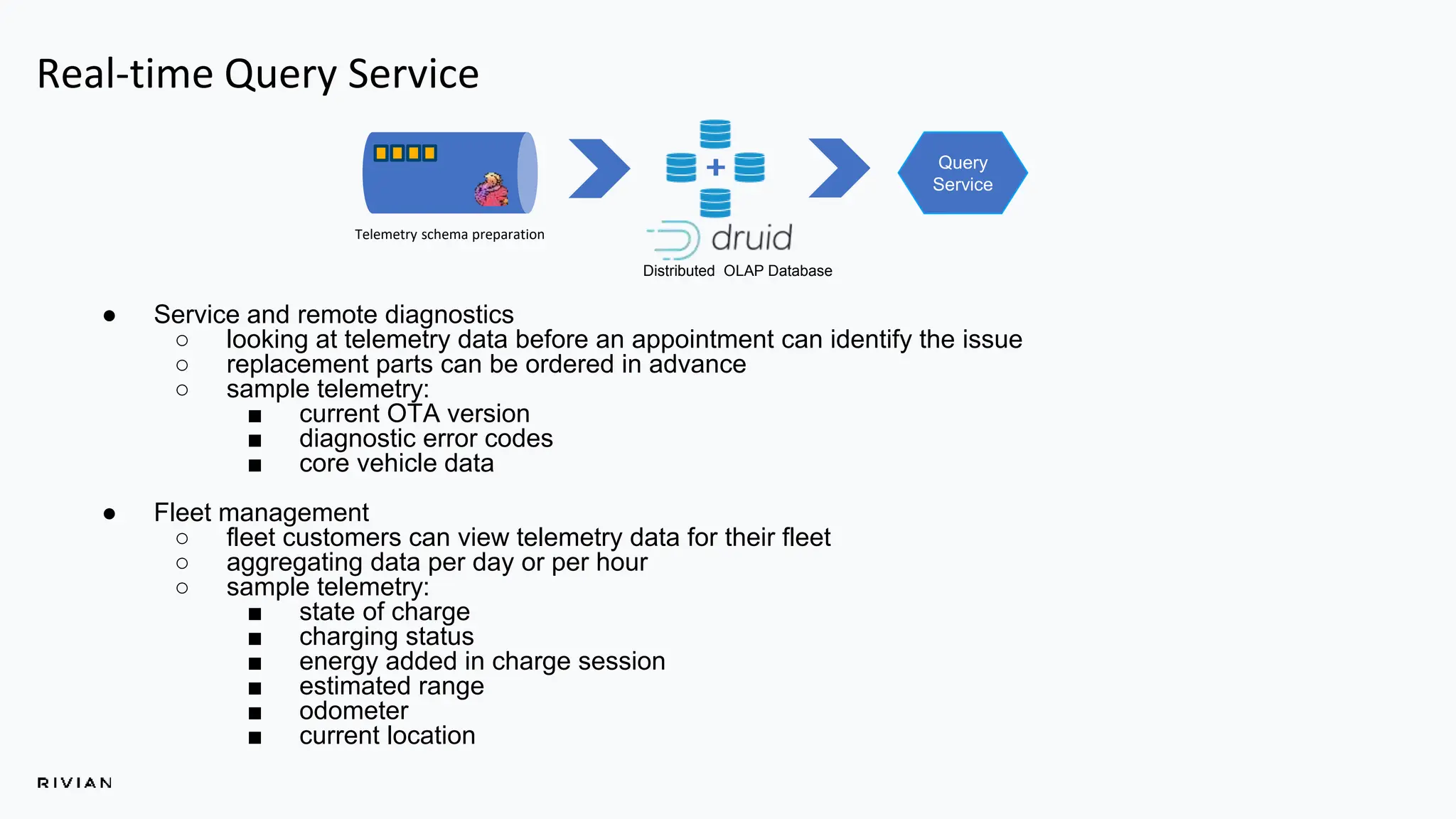

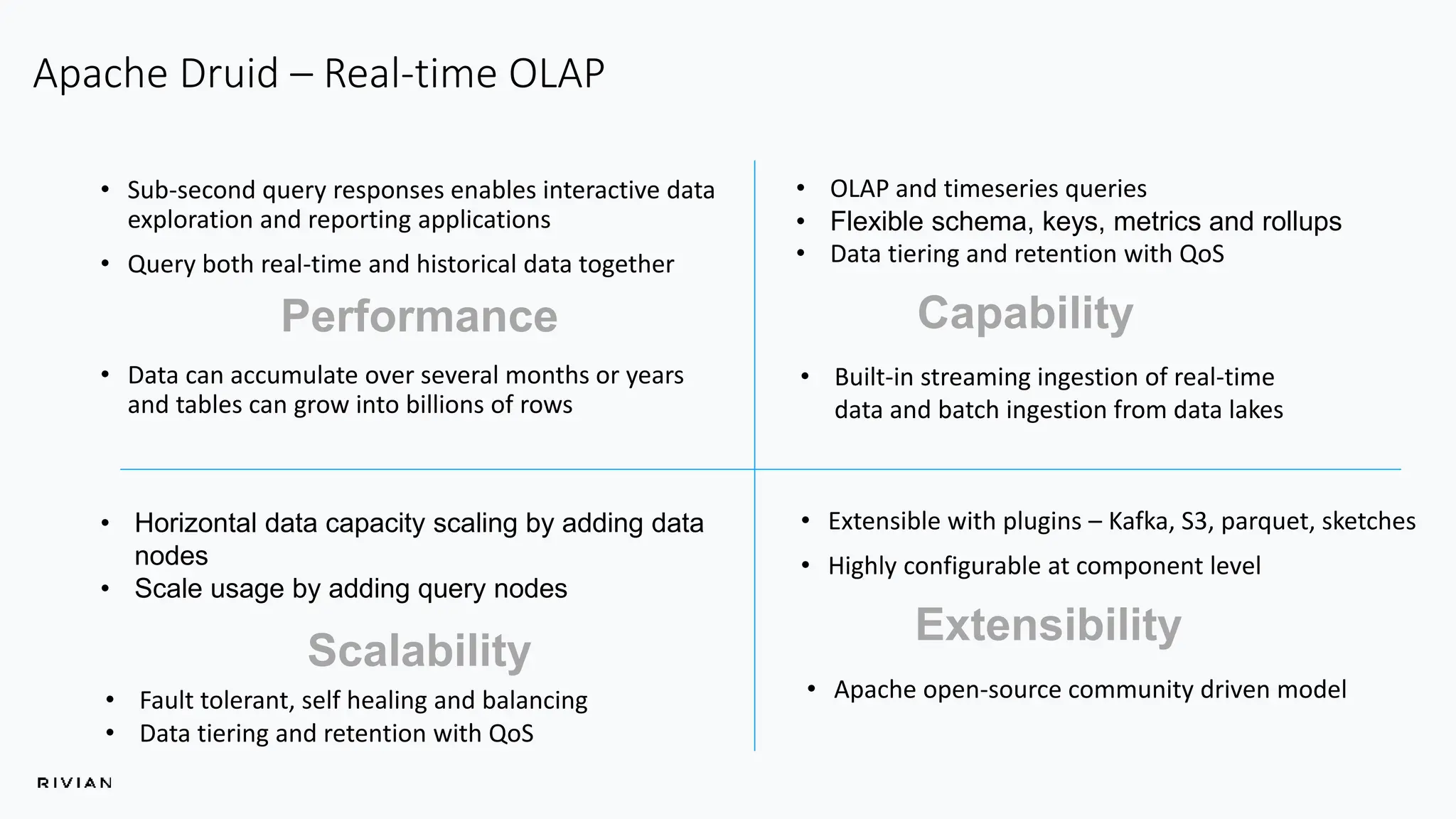

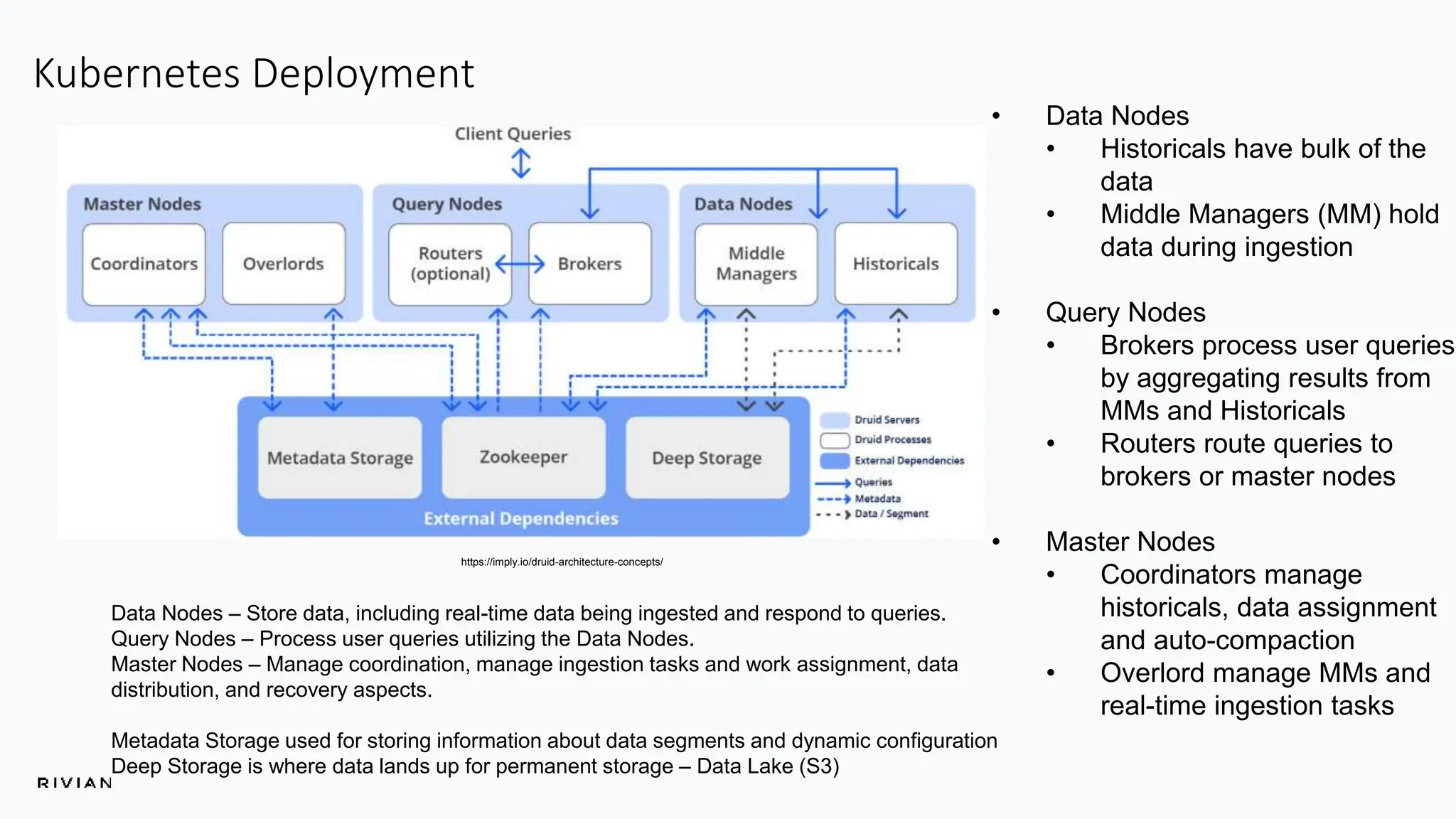

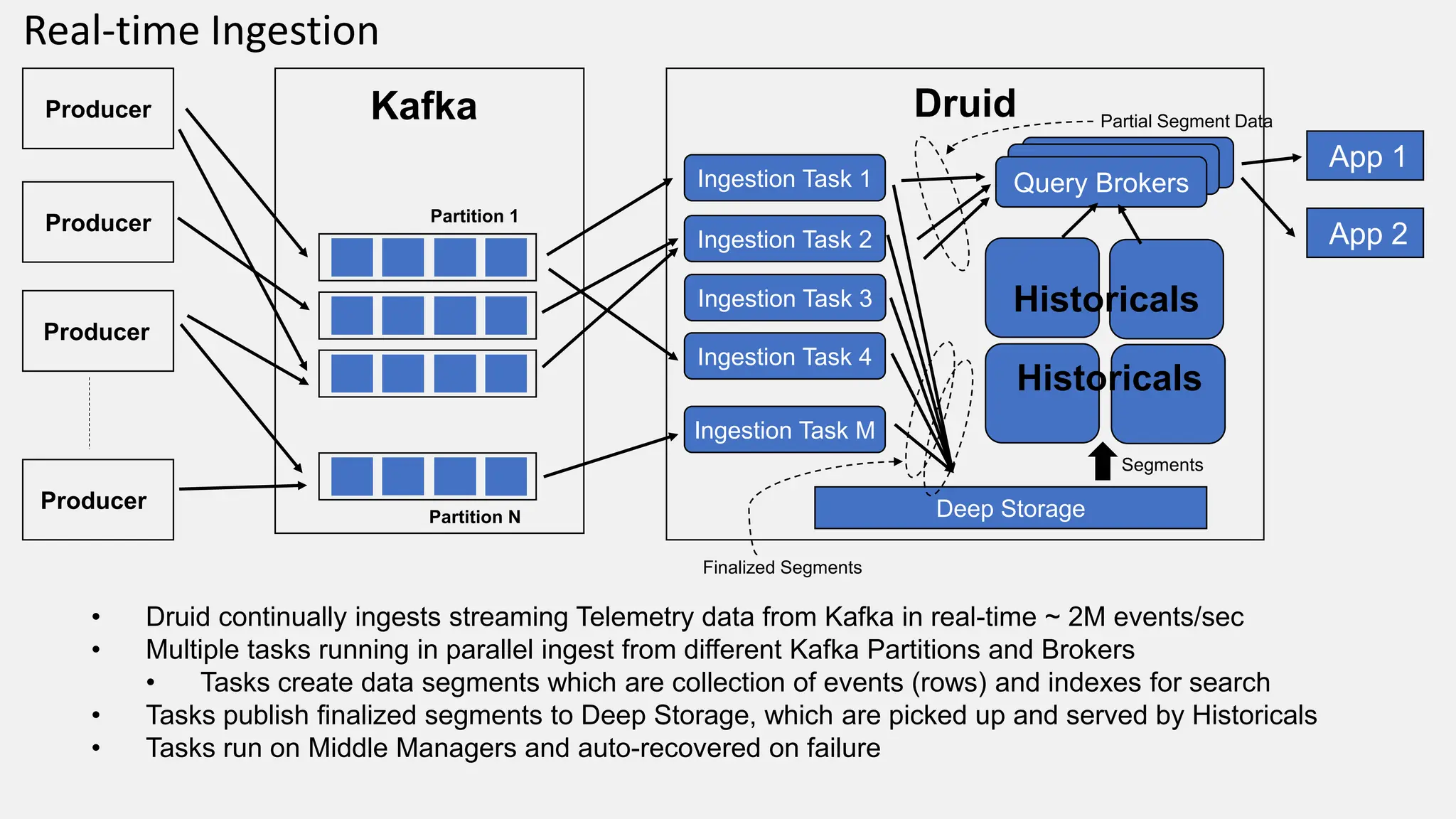

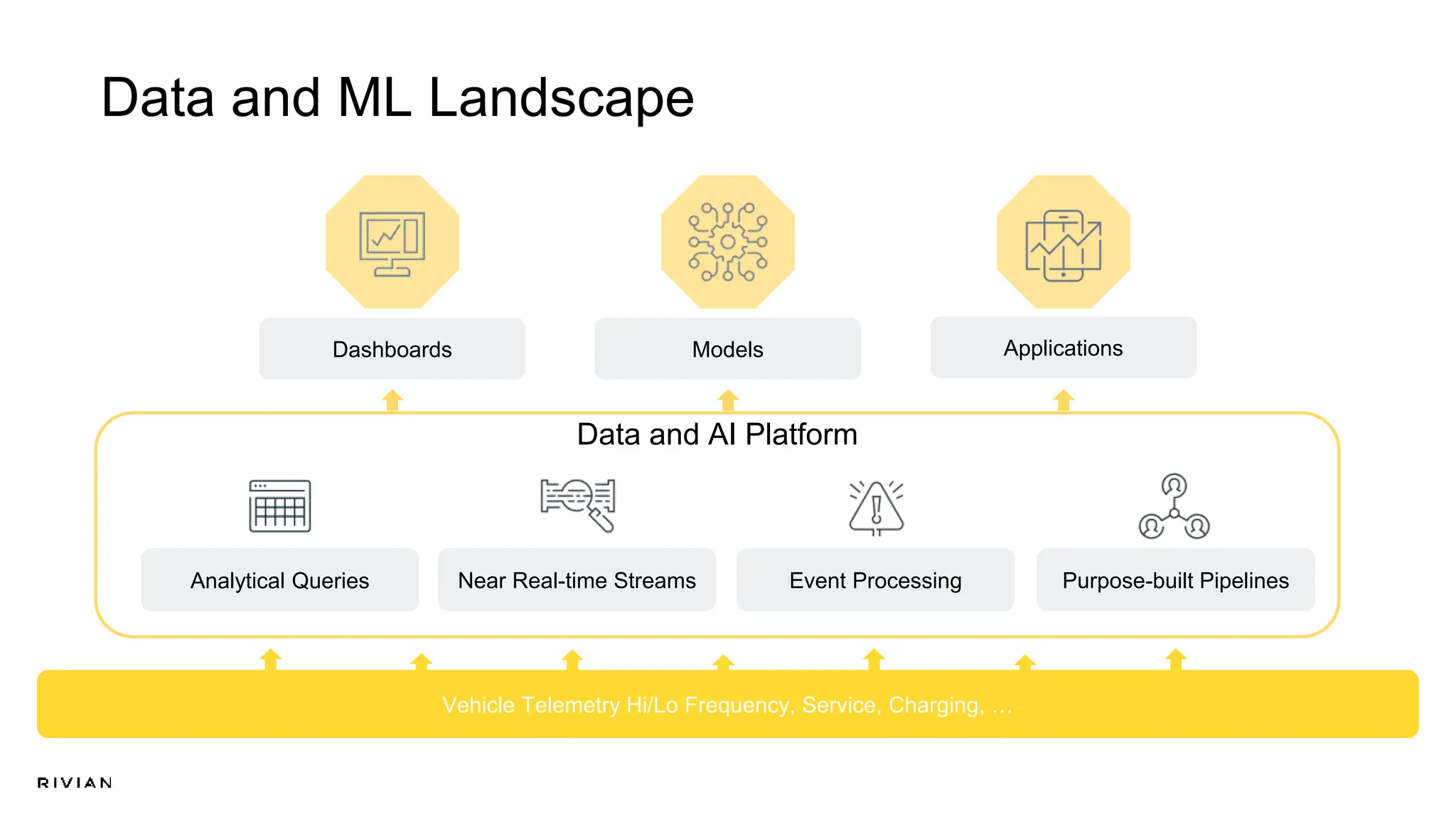

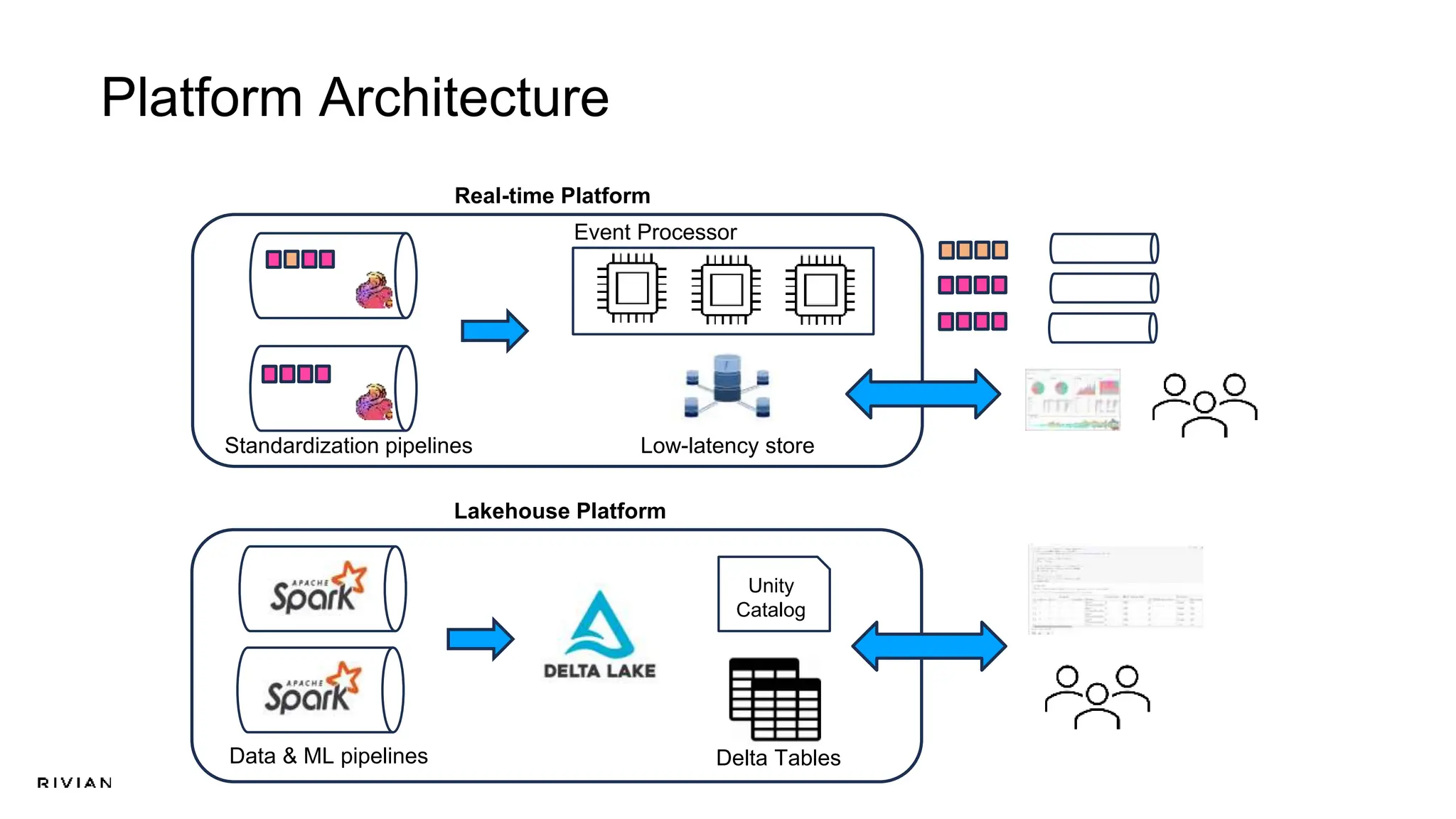

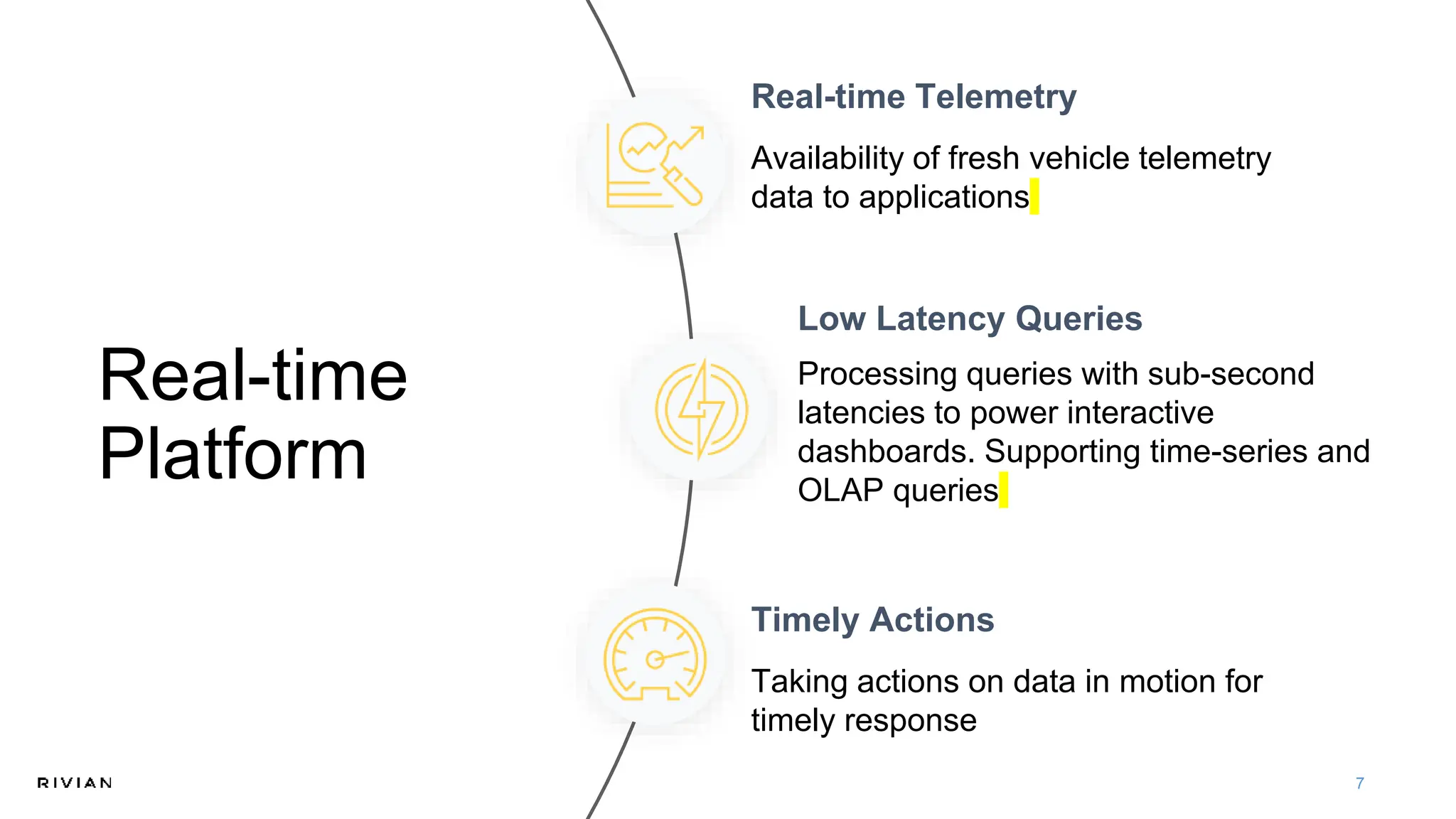

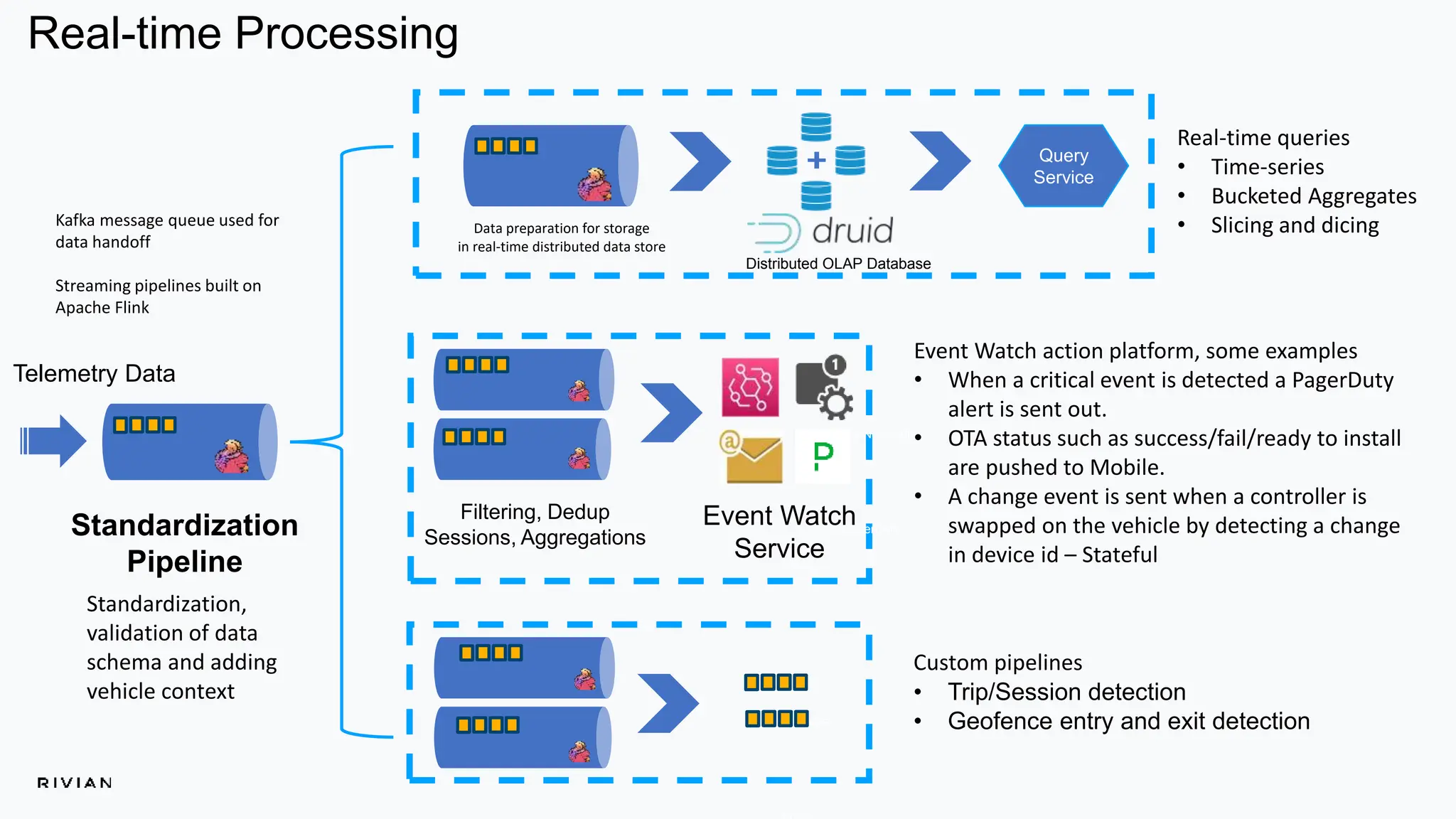

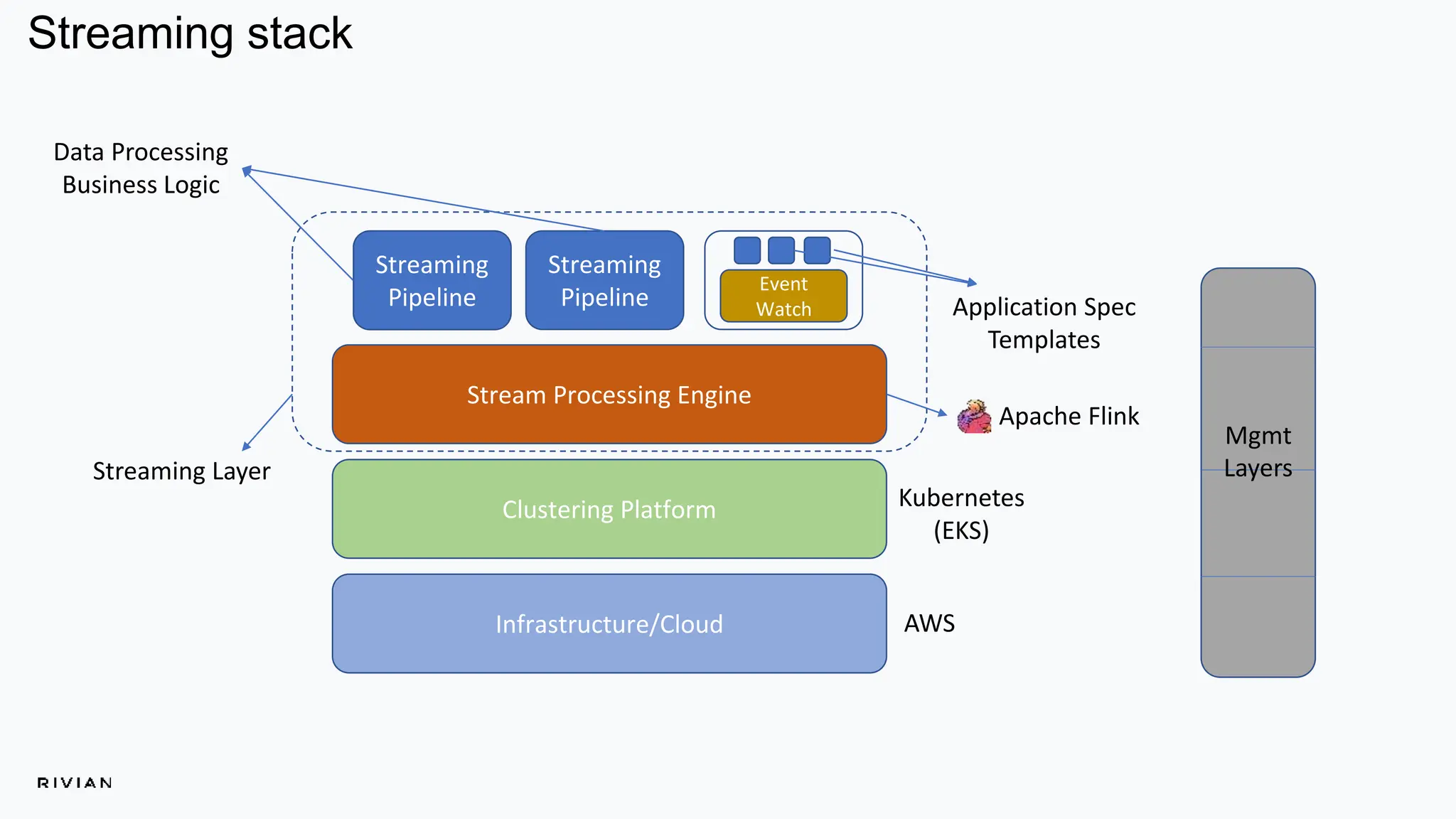

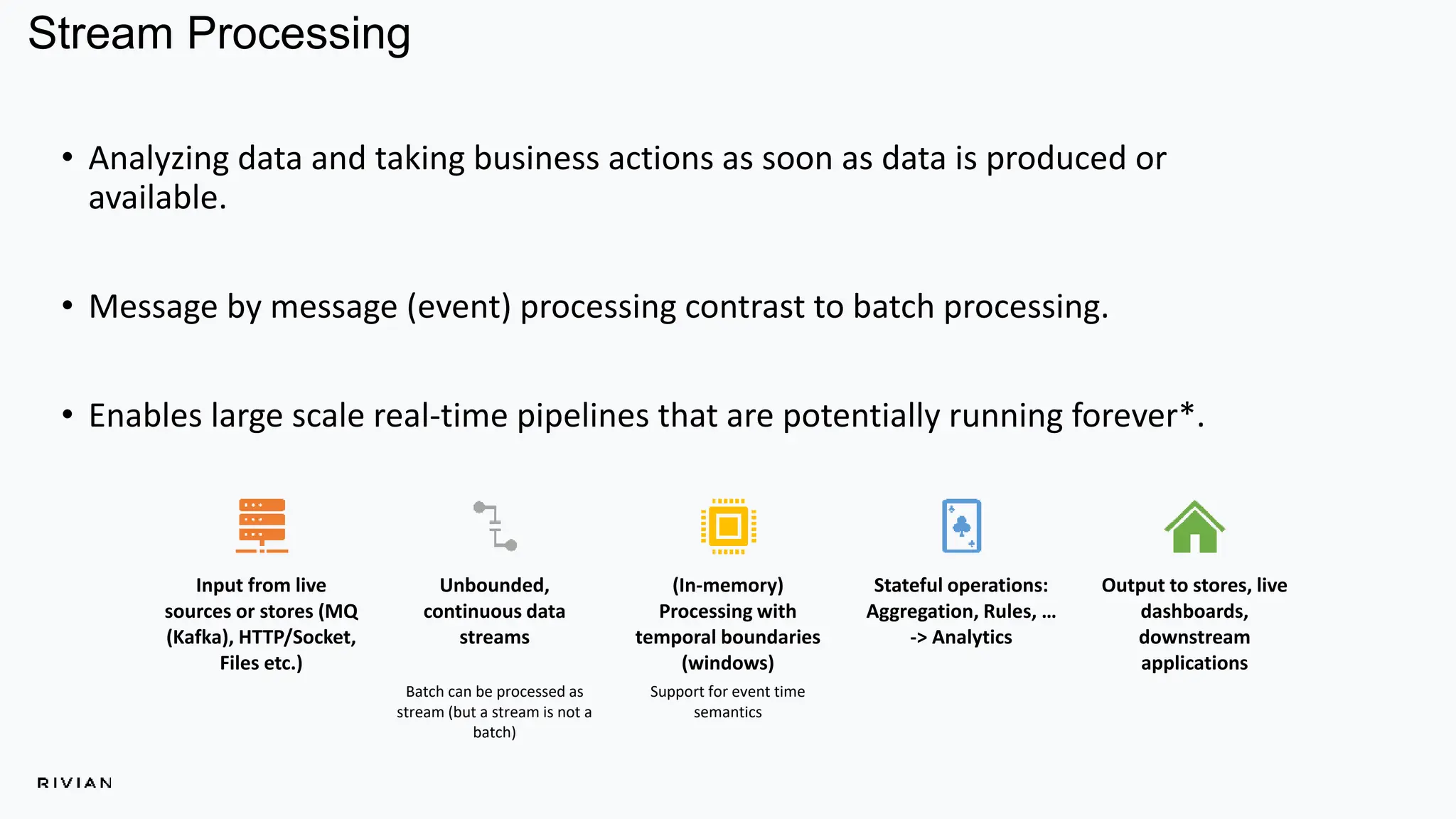

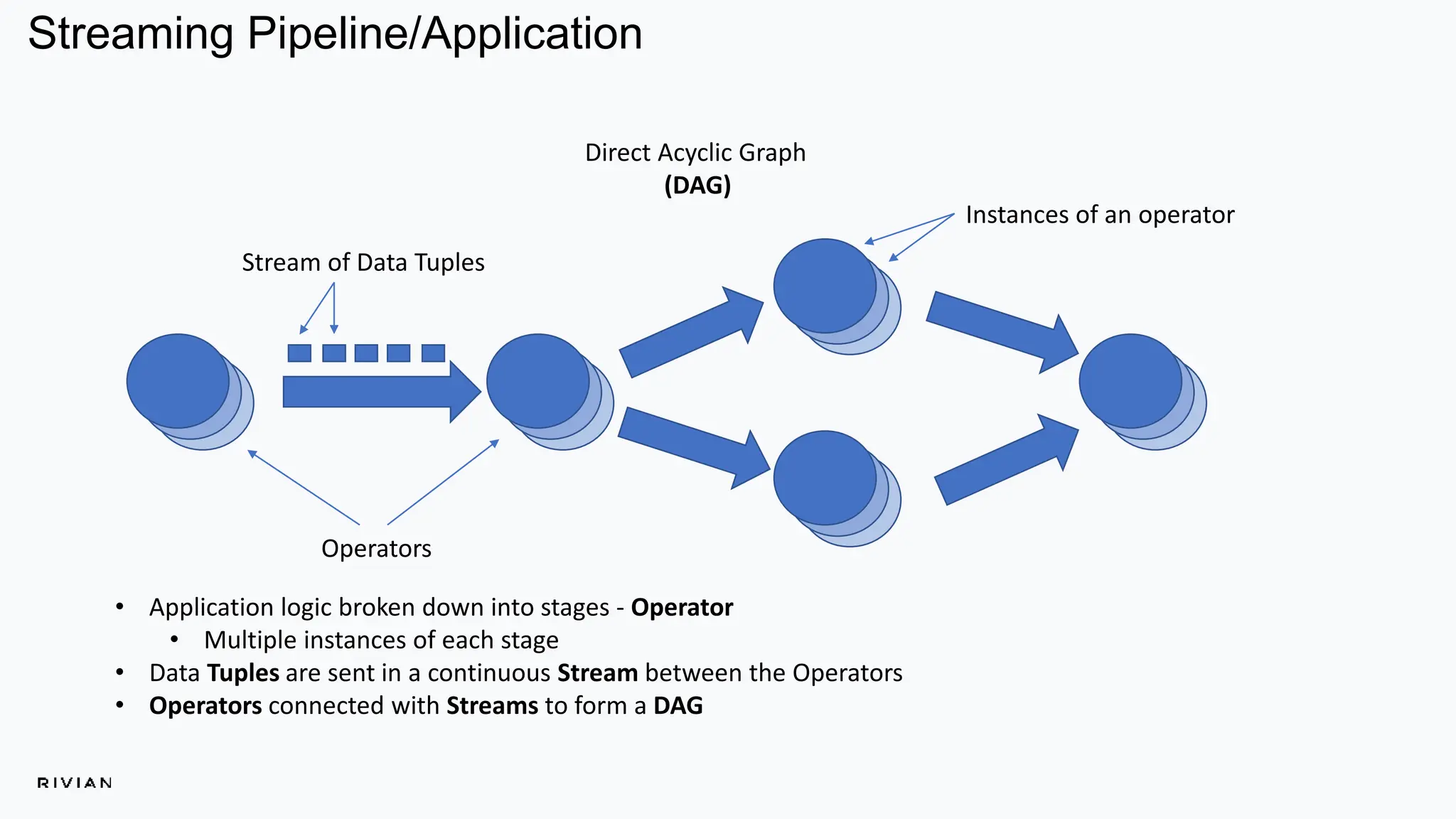

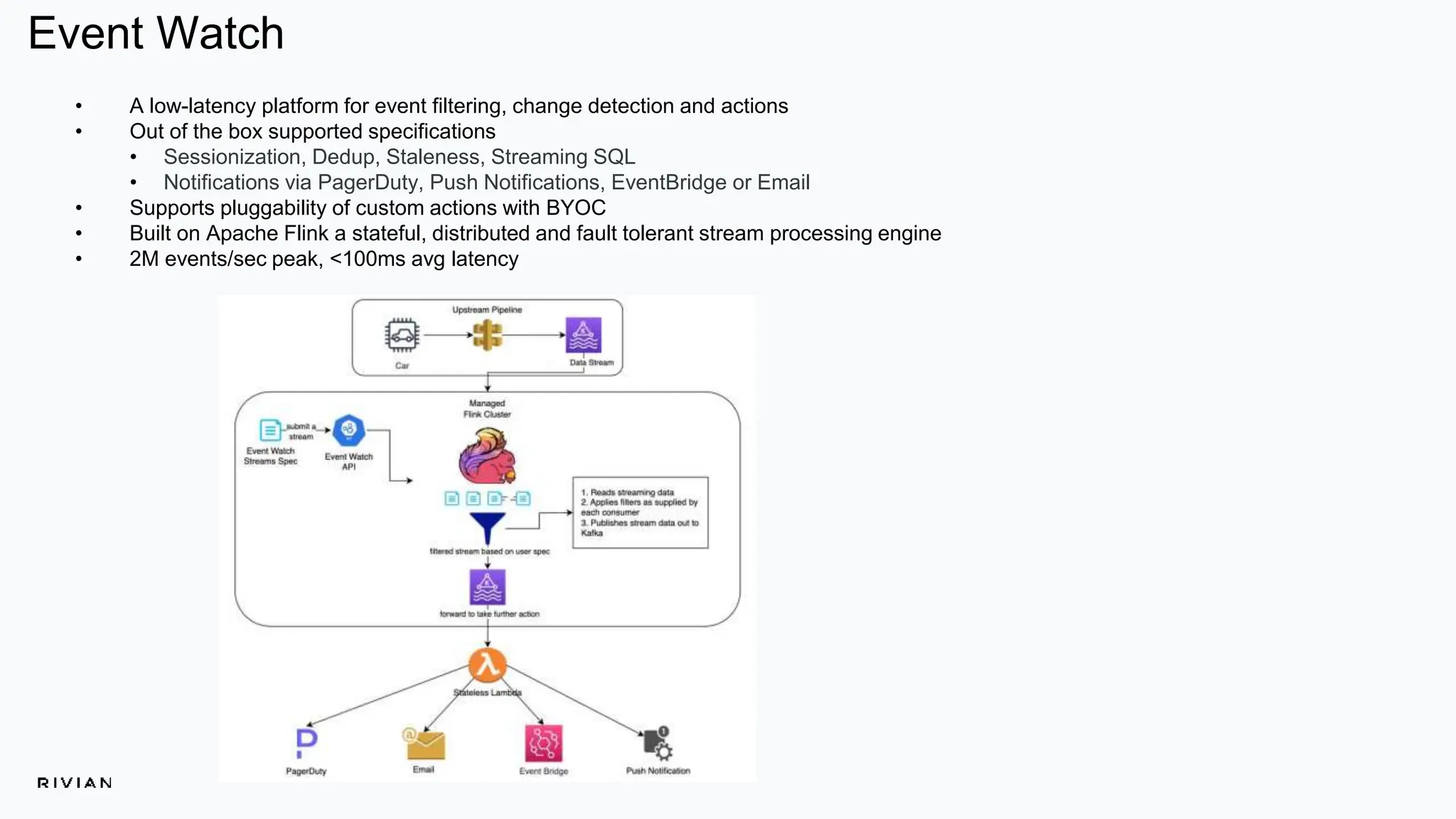

The document outlines Rivian's real-time analytics platform, designed for processing vehicle telemetry data with low latency and high efficiency. It highlights the use of streaming technologies, including Apache Flink and Kafka, to facilitate event detection, data processing, and proactive alerts. The platform supports real-time queries, data visualization, and fleet management through a robust architecture that integrates both historical and real-time data for comprehensive analytics.

![Specifications

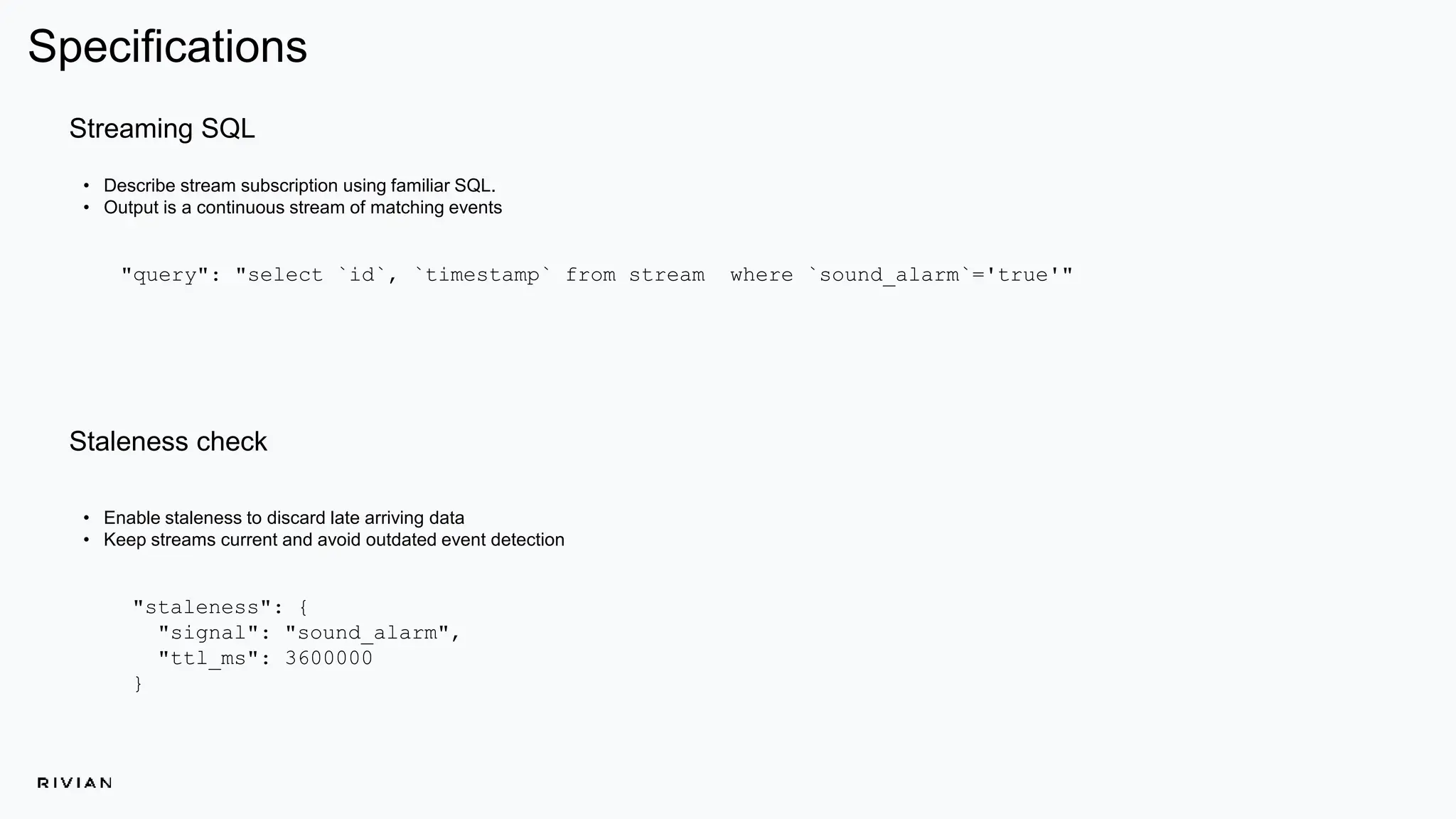

• Virtual session on event detection

• Query other signals in context of the session

• Supports TTL on the dependency signal

Deduplication

• Identify and discard duplicates

• Useful to avoid triggering duplicate notifications

• Provide TTL for deduplication at ms granularity

Sessions

"dedup": true,

"dedup_ttl_ms": 86400000

"query" : "select `id`, `range_threshold`, `pet_mode_status`from stream

where `range_threshold` = 'VEHICLE_RANGE_CRITICALLY_LOW'",

…

"dependency": {

"type": "thermal",

"subtype": "hvac_settings",

"signal": "pet_mode_status",

"values": ["On"]

},](https://image.slidesharecdn.com/pramodimmaneni-realtimeanalytics-231129101509-4494cb76/75/DSC-Europe-23-Pramod-Immaneni-Real-time-analytics-at-IoT-scale-13-2048.jpg)
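The session dependency and deduplication options on this slide can be illustrated with a minimal in-memory sketch. It is not the platform's implementation, which the deck describes as built on Apache Flink and Kafka. The `Rule` and `Detector` names, the `dependency_ttl_ms` field, and the choice to keep one state entry per vehicle are all assumptions made for illustration. Only `dedup`, `dedup_ttl_ms`, the signal names, and the `"On"` value come from the slide.

```python
from dataclasses import dataclass
from typing import Any, Dict, List, Tuple


@dataclass
class Rule:
    """Hypothetical in-memory form of the slide's config fragment."""
    threshold_signal: str
    threshold_value: str
    dependency_signal: str
    dependency_values: List[str]
    dependency_ttl_ms: int          # assumed field: the slide only says a TTL is supported
    dedup: bool = True              # "dedup": true
    dedup_ttl_ms: int = 86_400_000  # "dedup_ttl_ms": 86400000 (24 hours)


class Detector:
    """Tracks the latest dependency value and the last alert time per vehicle."""

    def __init__(self, rule: Rule) -> None:
        self.rule = rule
        self._last_dependency: Dict[str, Tuple[str, int]] = {}
        self._last_alert: Dict[str, int] = {}

    def on_dependency(self, vehicle_id: str, value: str, ts_ms: int) -> None:
        # Remember the most recent dependency reading and when it arrived.
        self._last_dependency[vehicle_id] = (value, ts_ms)

    def on_event(self, vehicle_id: str, event: Dict[str, Any], ts_ms: int) -> bool:
        """Return True if this event should trigger a notification."""
        rule = self.rule
        if event.get(rule.threshold_signal) != rule.threshold_value:
            return False

        # Session check: the dependency must be known, fresh, and an accepted value.
        dependency = self._last_dependency.get(vehicle_id)
        if dependency is None:
            return False
        value, seen_ms = dependency
        if ts_ms - seen_ms > rule.dependency_ttl_ms:
            return False
        if value not in rule.dependency_values:
            return False

        # Deduplication: suppress repeats that fall inside the TTL window.
        if rule.dedup:
            last_ms = self._last_alert.get(vehicle_id)
            if last_ms is not None and ts_ms - last_ms < rule.dedup_ttl_ms:
                return False

        self._last_alert[vehicle_id] = ts_ms
        return True


rule = Rule(
    threshold_signal="range_threshold",
    threshold_value="VEHICLE_RANGE_CRITICALLY_LOW",
    dependency_signal="pet_mode_status",
    dependency_values=["On"],
    dependency_ttl_ms=60_000,  # illustrative value, not from the slide
)
detector = Detector(rule)
low_range = {"id": "v1", "range_threshold": "VEHICLE_RANGE_CRITICALLY_LOW"}
detector.on_dependency("v1", "On", ts_ms=1_000)
first = detector.on_event("v1", low_range, ts_ms=2_000)
repeat = detector.on_event("v1", low_range, ts_ms=3_000)
```

In this sketch a suppressed duplicate does not extend the deduplication window, so the window is measured from the last notification that was actually sent. Whether the real platform refreshes the TTL on every duplicate is not stated on the slide.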