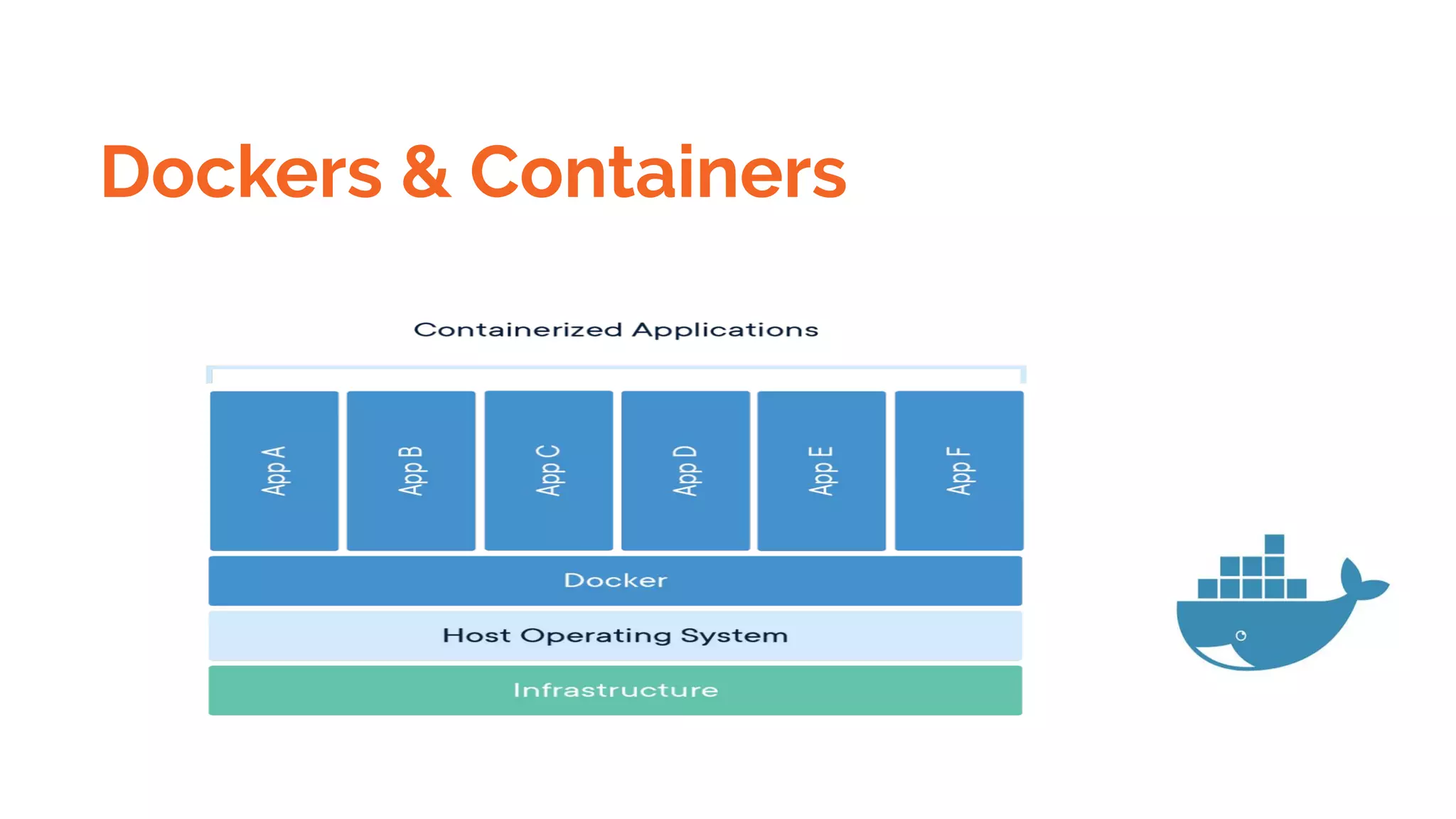

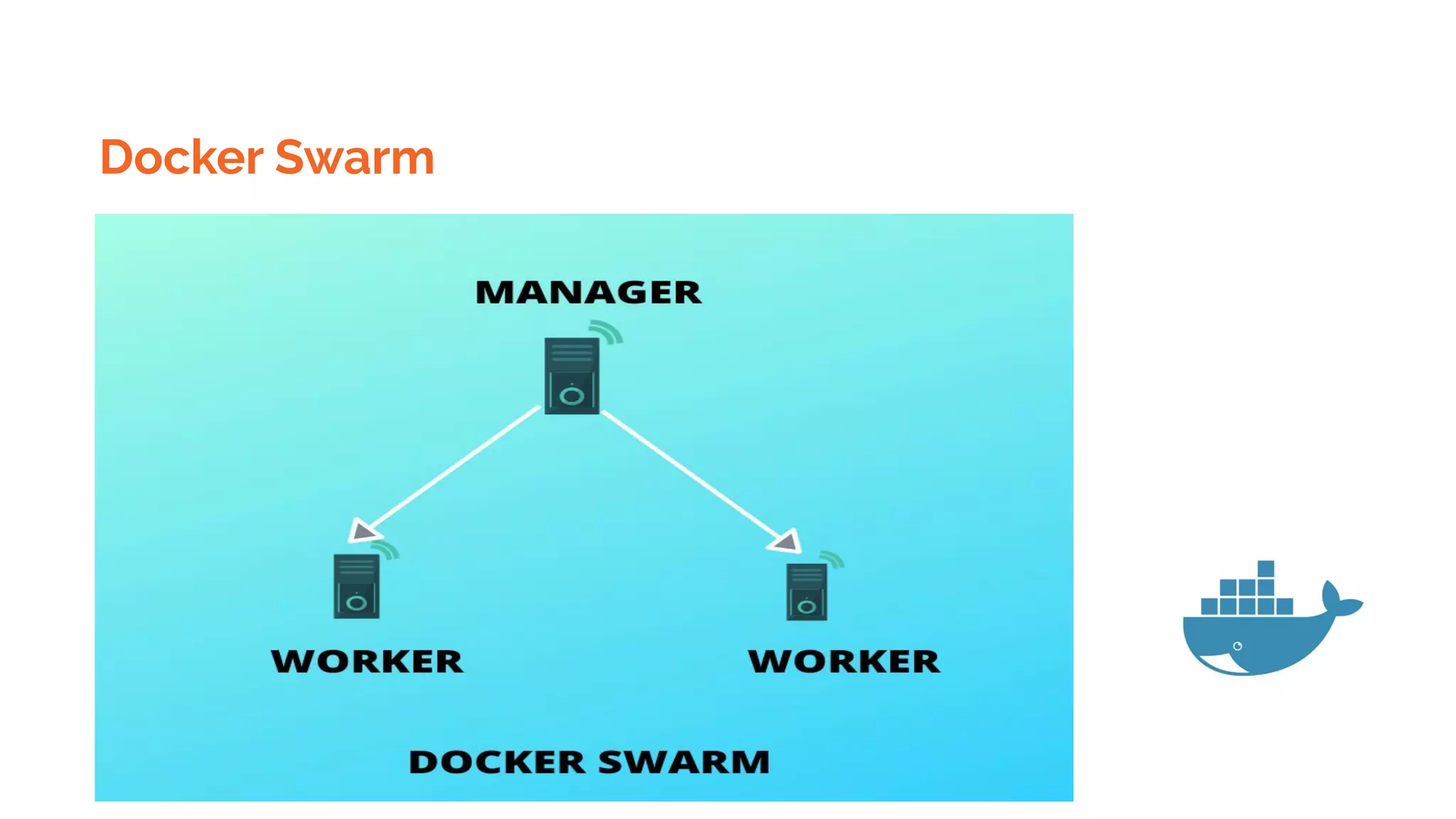

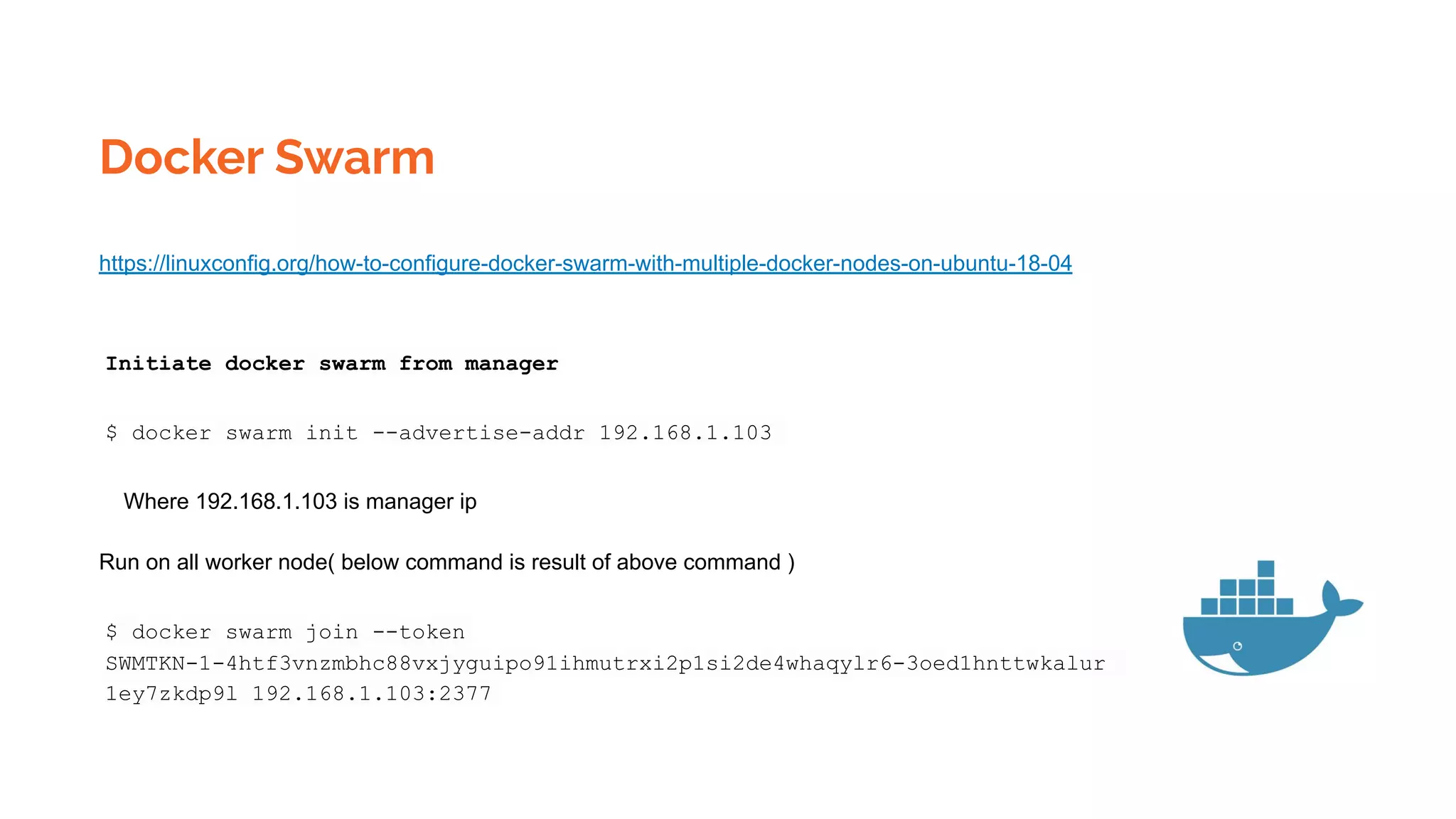

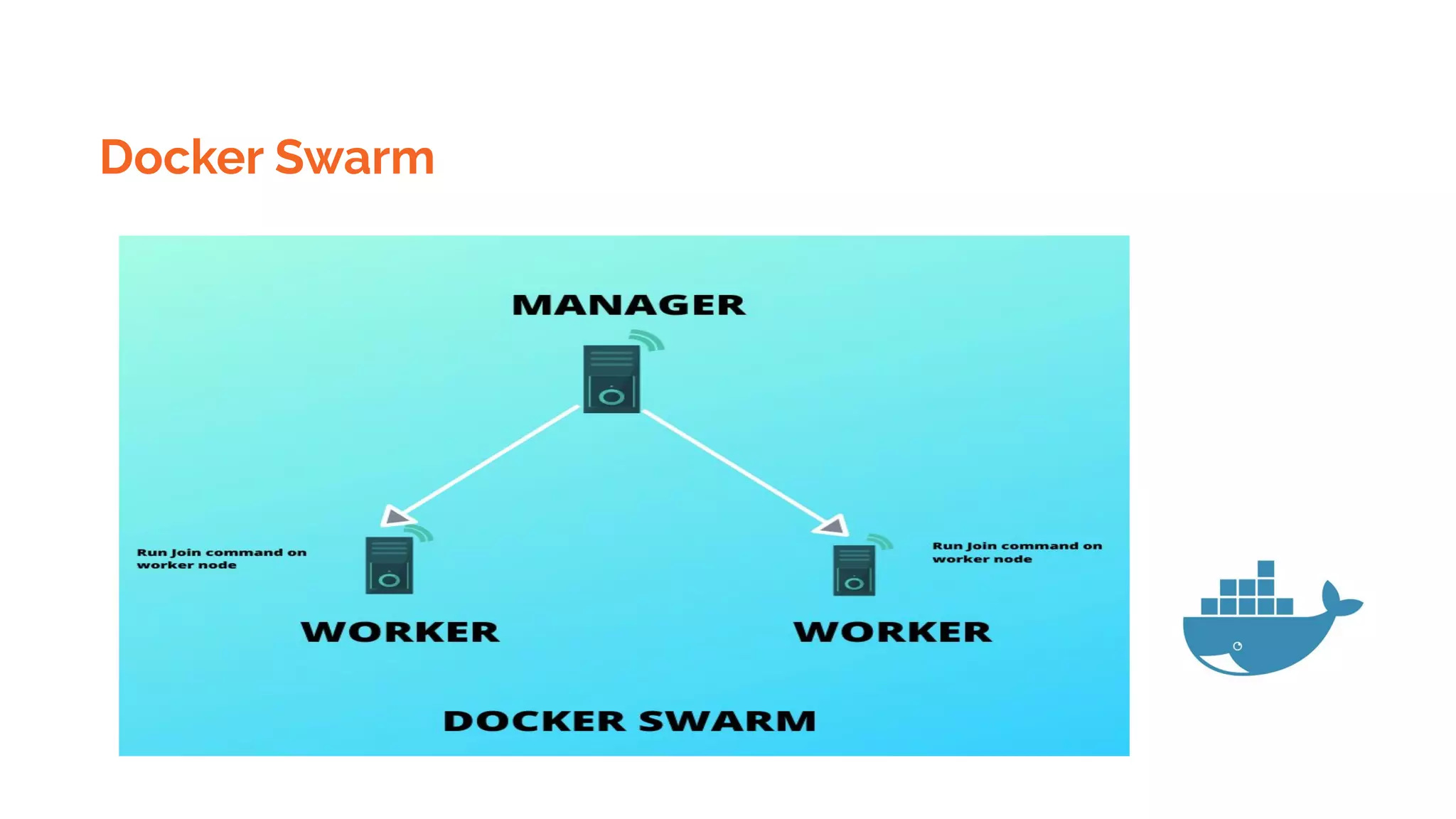

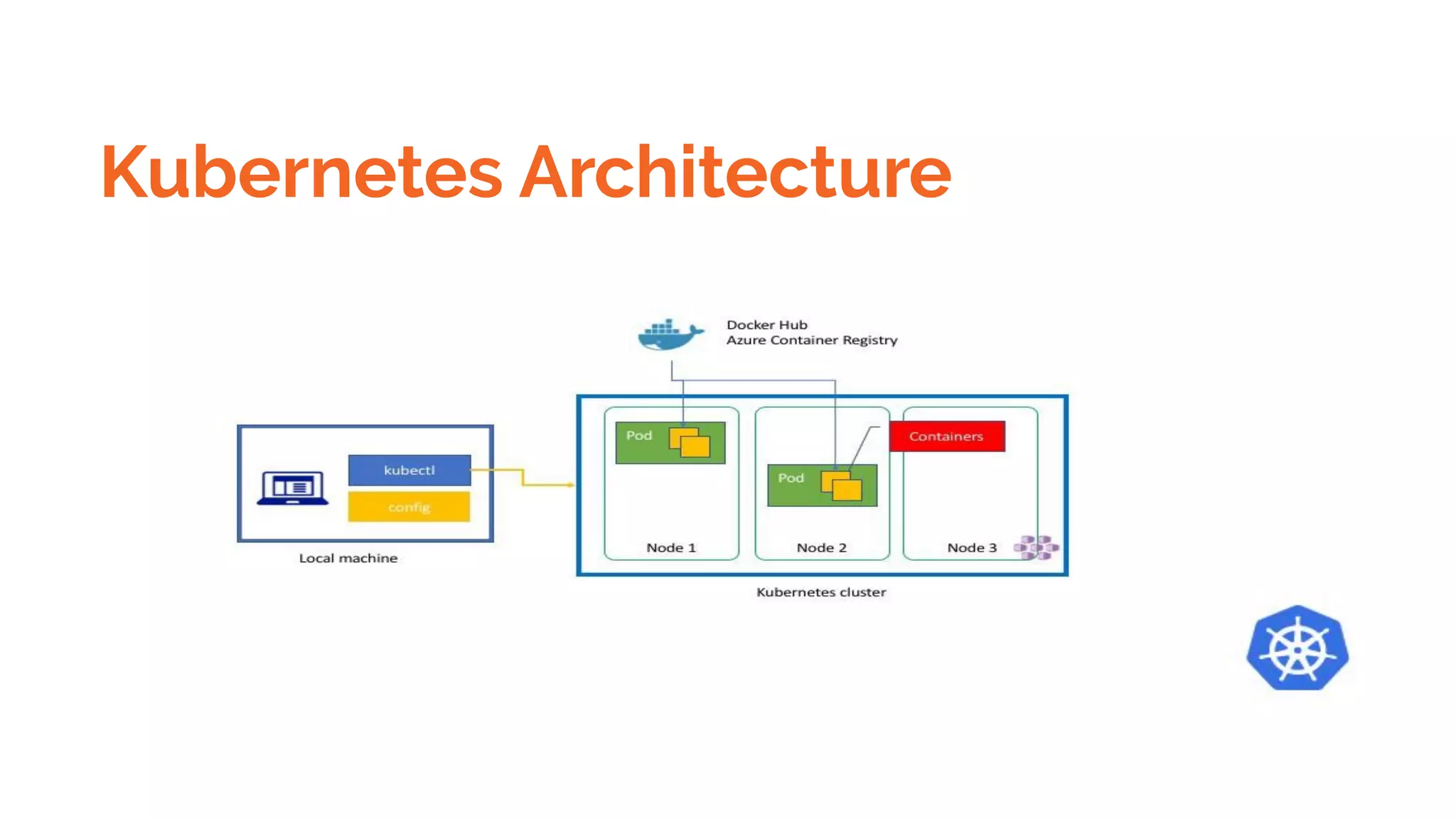

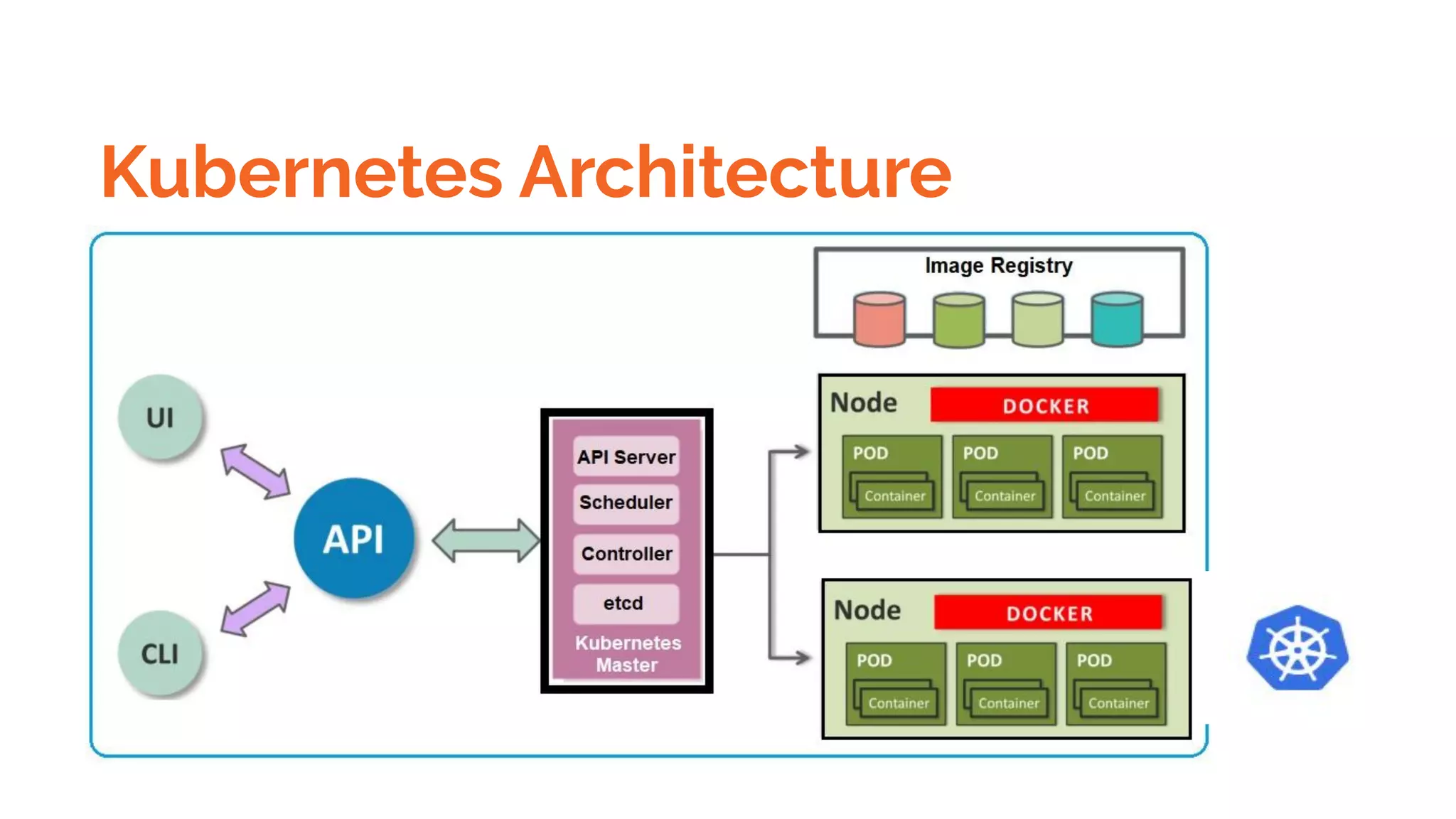

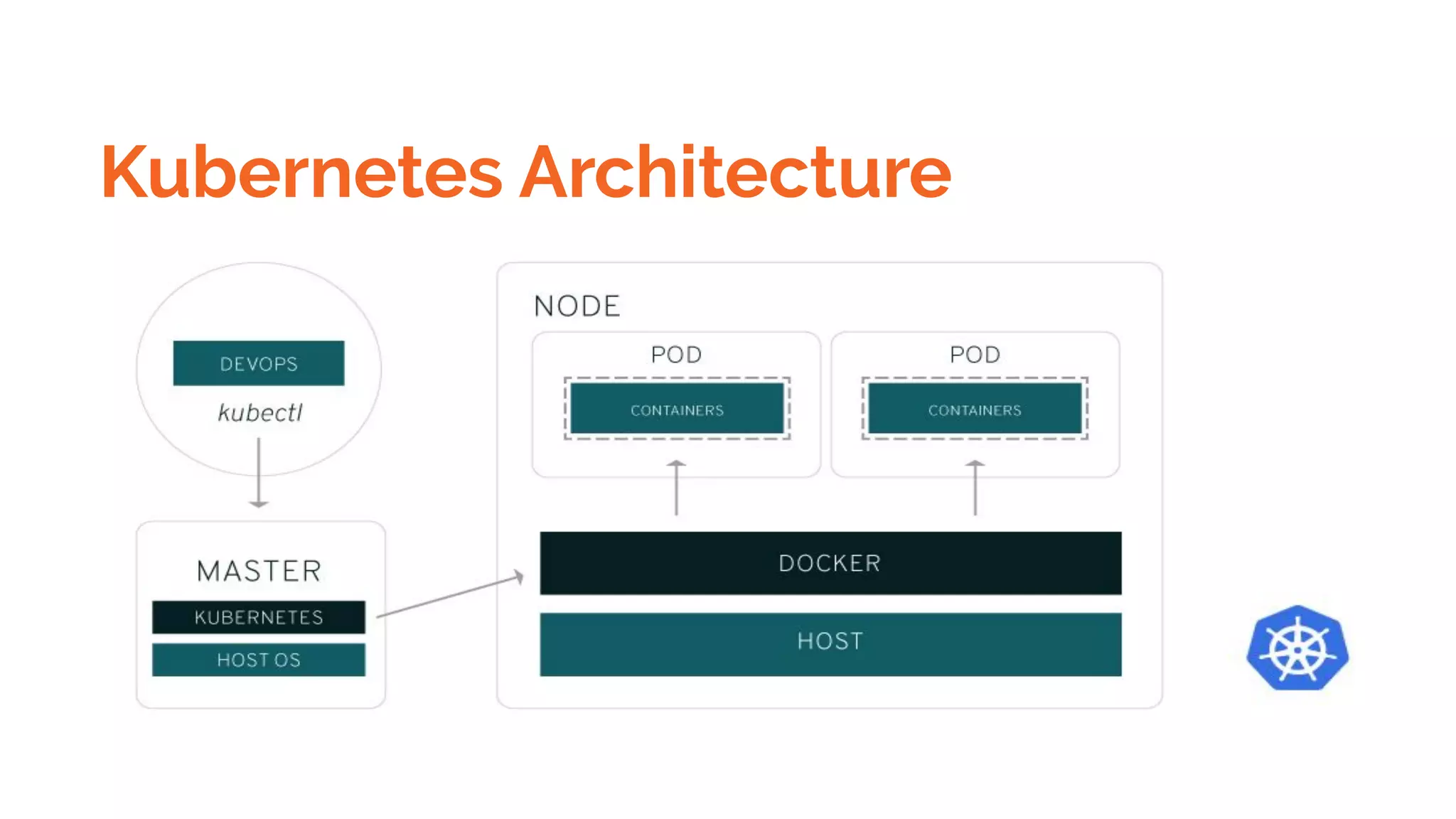

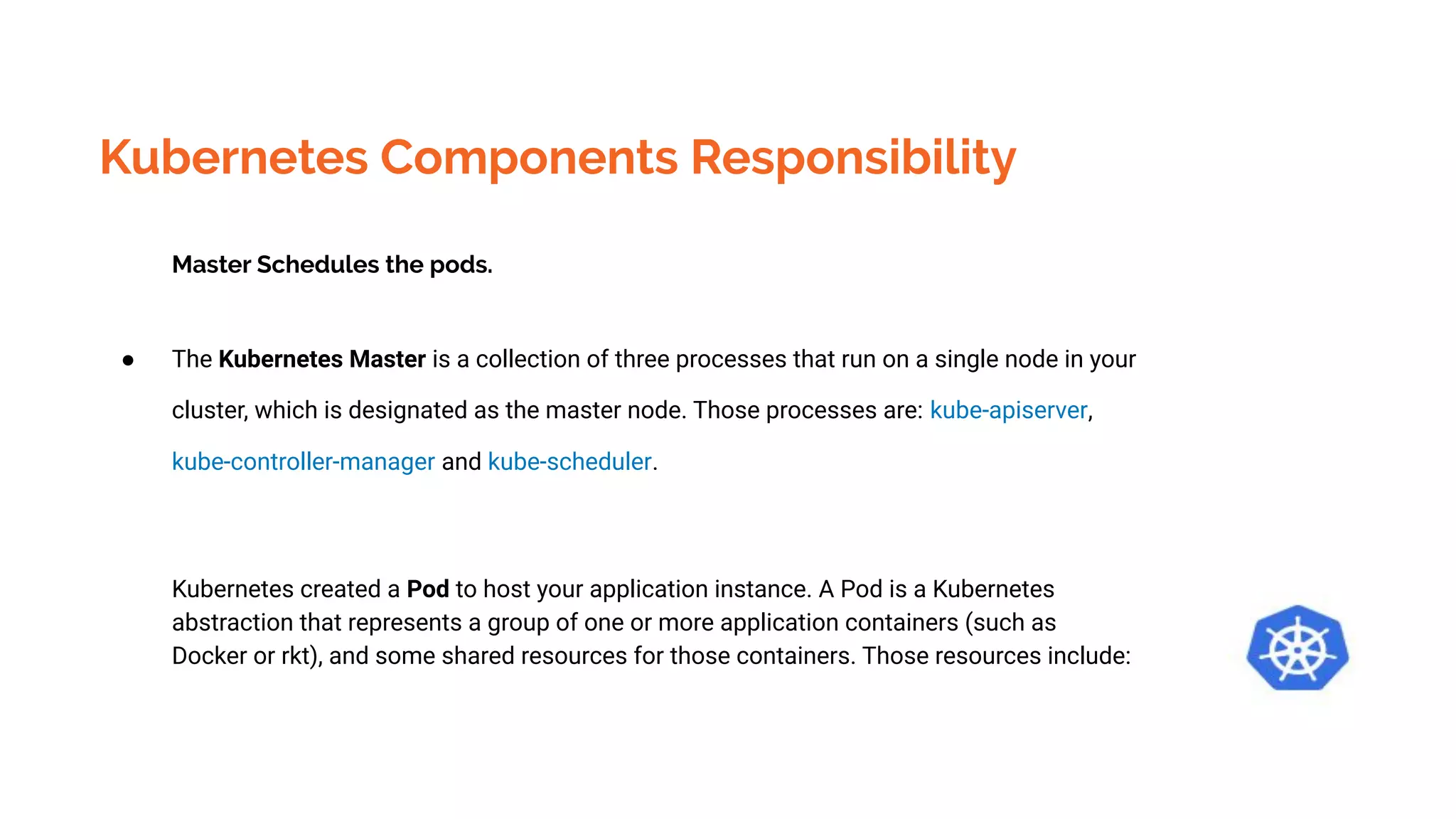

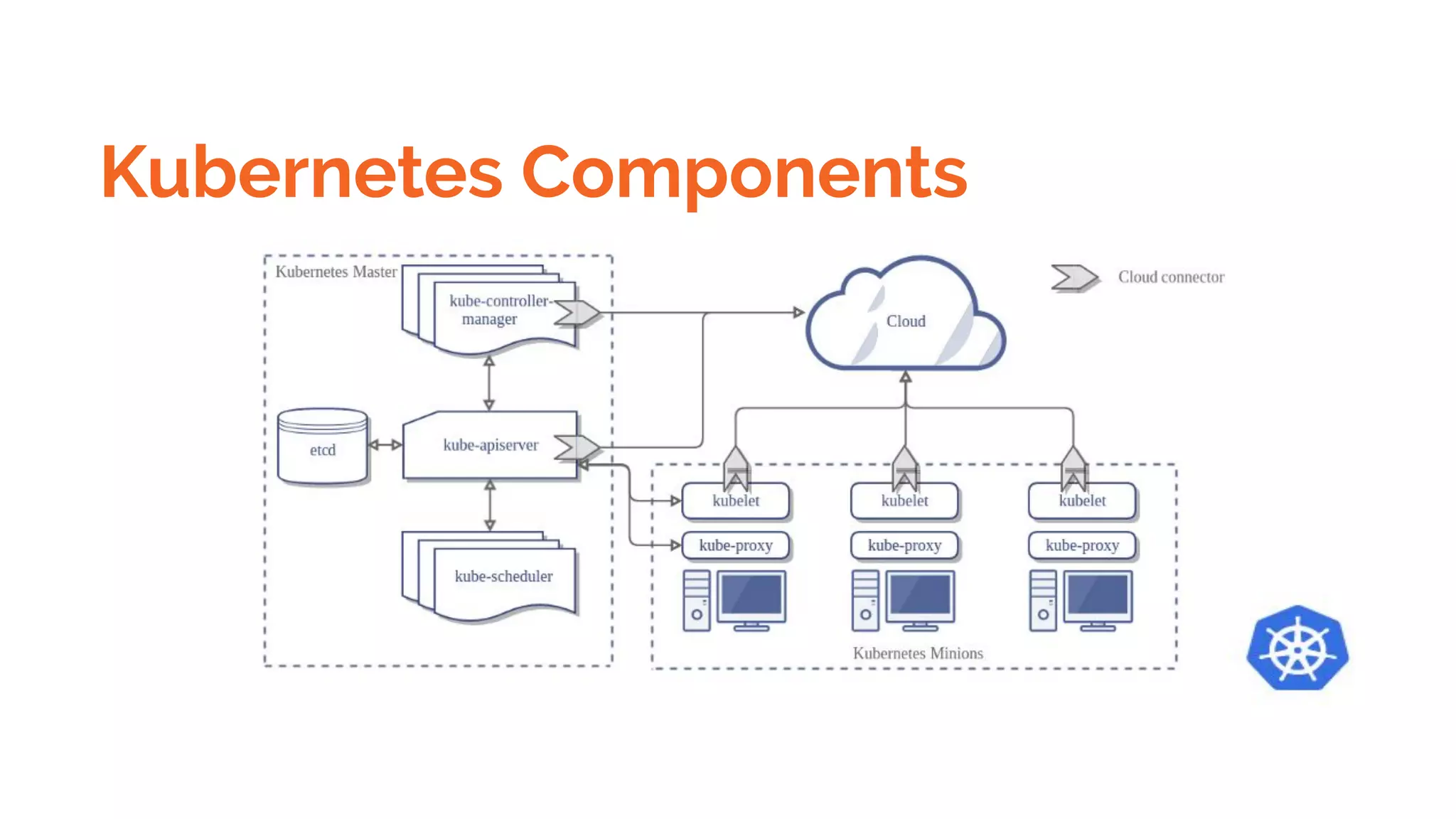

Docker is a platform for building, distributing, and running containerized applications. It bundles an application with its dependencies and runs it in an isolated container; containers on the same host share that host's operating system kernel.

Kubernetes is an open-source system for automating the deployment, scaling, and management of containerized applications. It groups the containers that make up an application into logical units for easy management and discovery.

Docker Swarm is Docker's native clustering tool. It orchestrates and schedules containers across a cluster of machines, so that containers run on multiple Docker hosts as a single cluster.

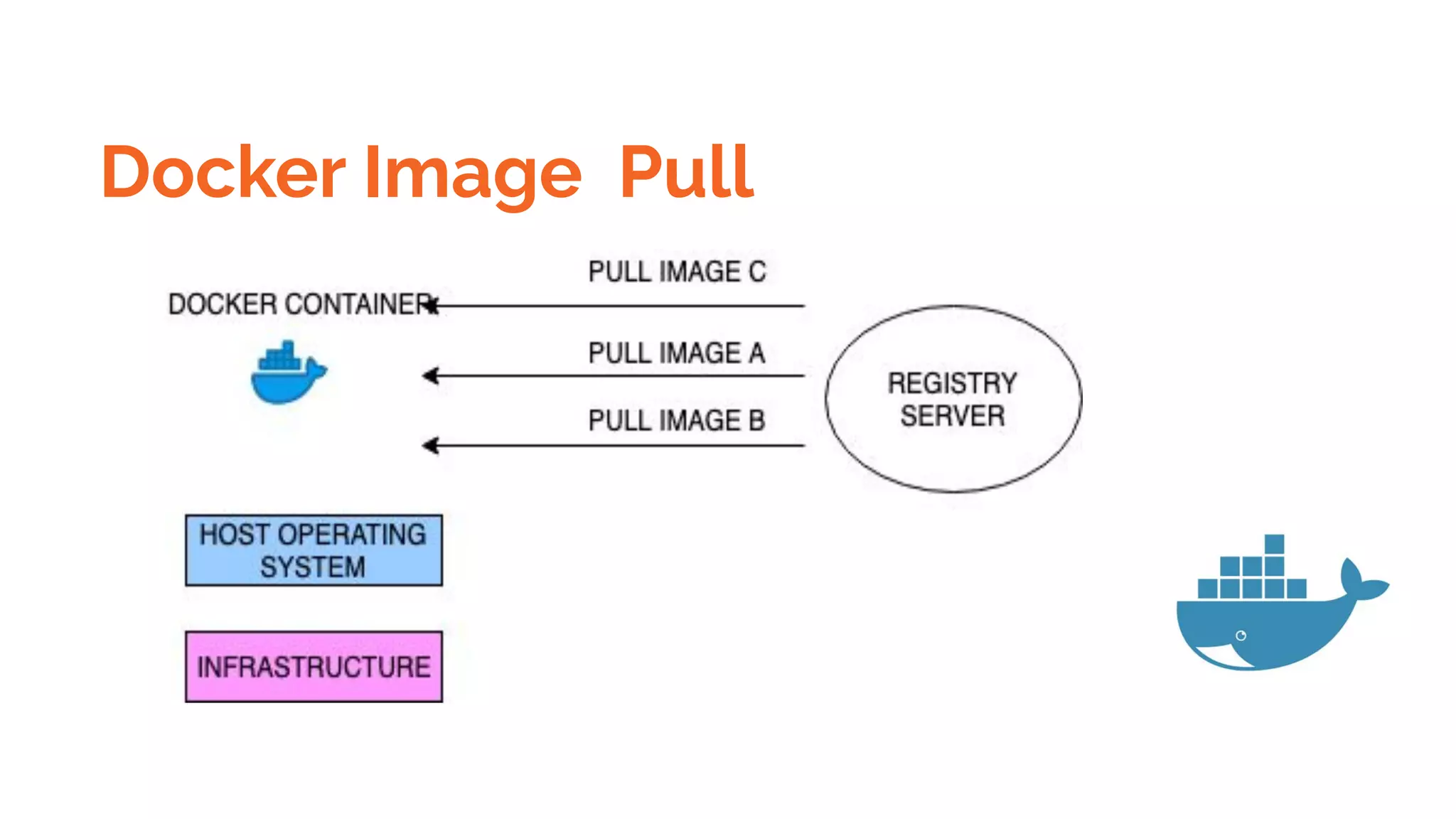

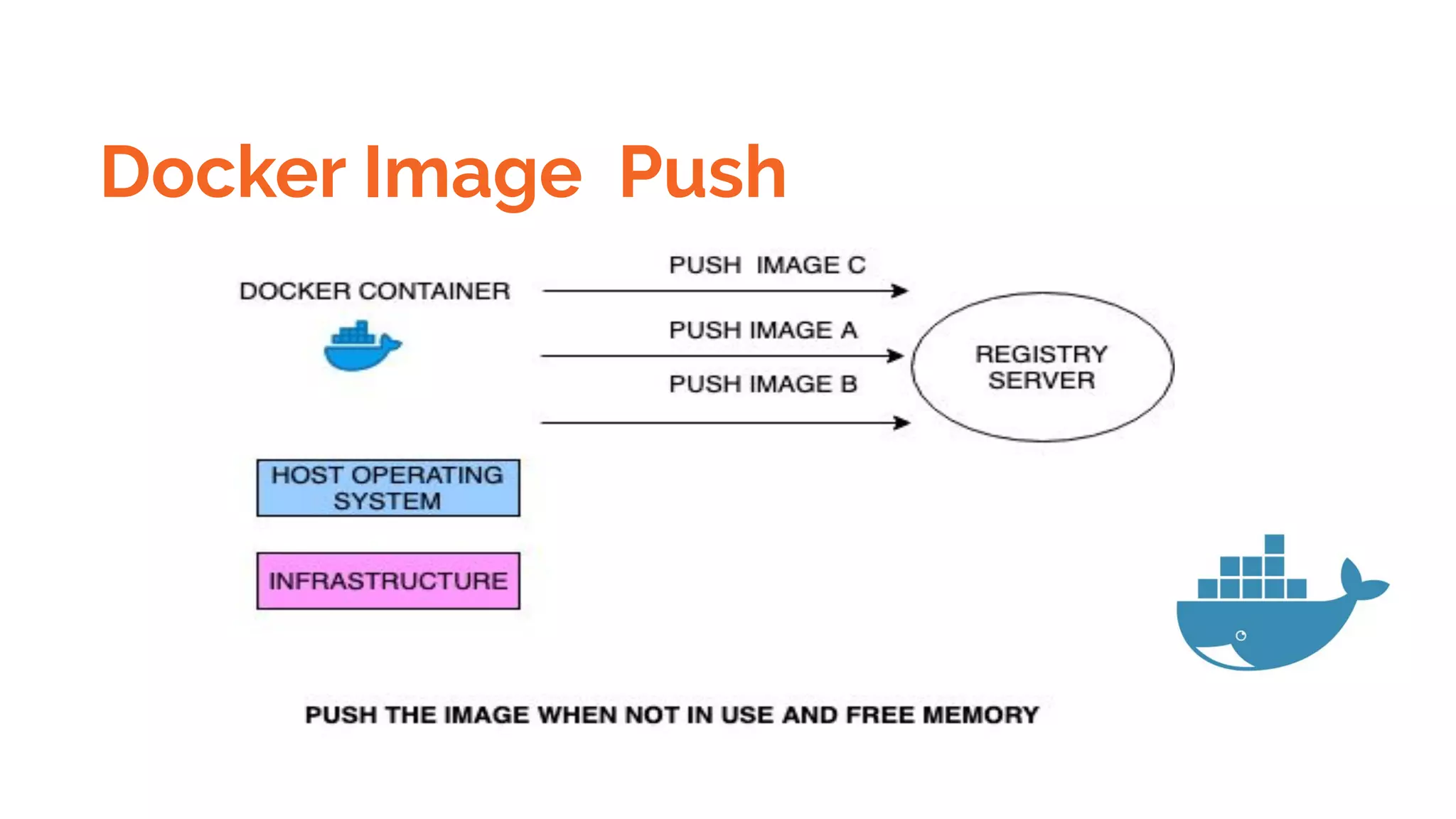

![Docker Registry and push pull images

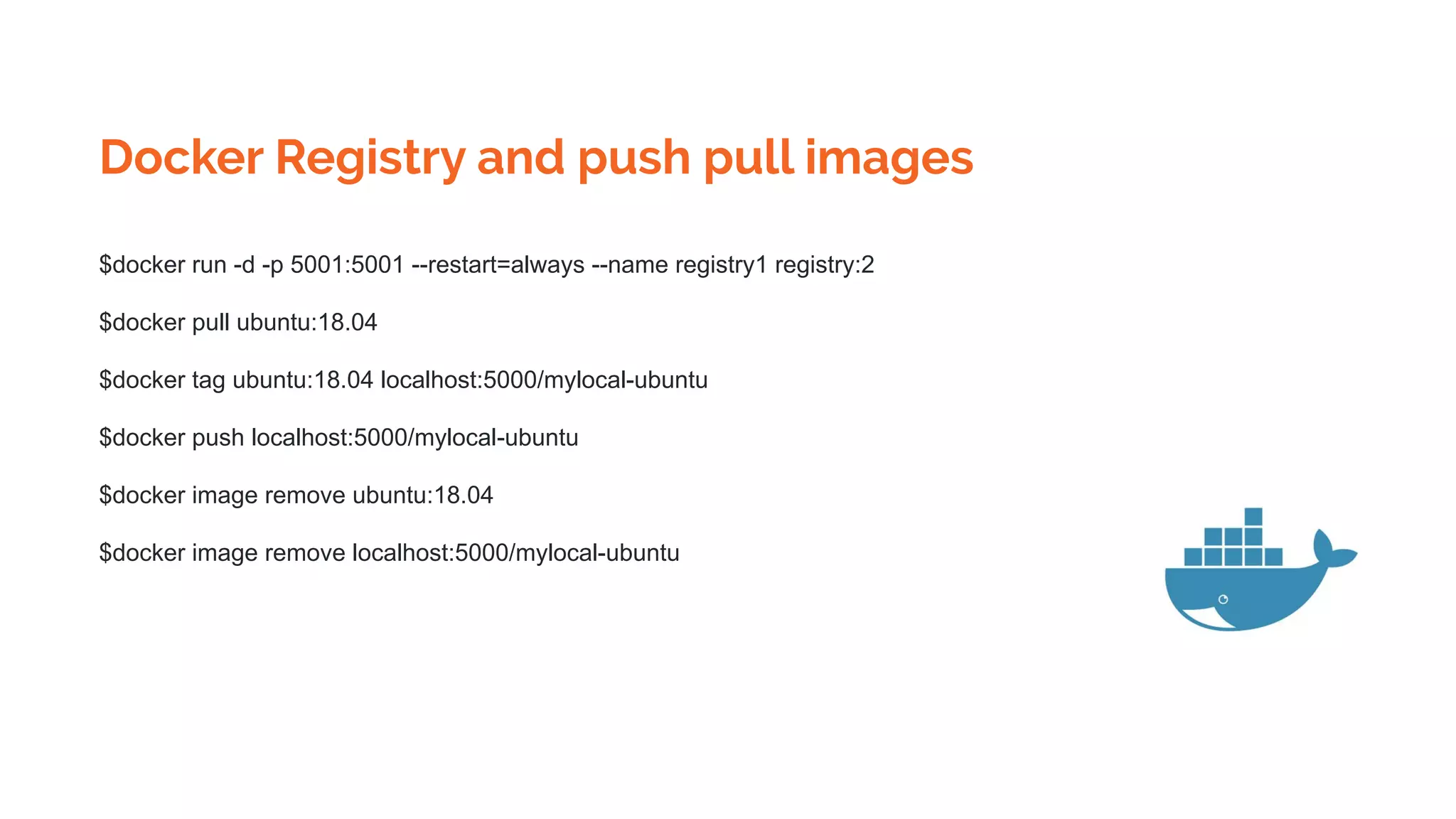

$docker tag SOURCE_IMAGE[:TAG] TARGET_IMAGE[:TAG]

Tag an image for a private repository

To push an image to a private registry and not the central Docker registry you must tag it with the registry

hostname and port (if needed).

$ docker tag 0e5574283393 myregistryhost:5000/fedora/httpd:version1.0](https://image.slidesharecdn.com/dockerskubernetesdetailed1-200107090538/75/Dockers-kubernetes-detailed-Beginners-to-Geek-22-2048.jpg)
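The tagging rule above (registry hostname and optional port, then repository, then tag) can be sketched as a small shell helper. This is only an illustration: `private_ref` and the `DOCKER_DEMO` switch are hypothetical names introduced here, the `latest` default mirrors what Docker assumes when no tag is given, and the registry host and image ID are the example values from the slide. The `docker` commands run only when explicitly enabled, since they need a reachable registry.

```shell
#!/bin/sh
# Build a registry-qualified image reference: HOST[:PORT]/REPOSITORY:TAG
# (private_ref is a hypothetical helper, not part of the Docker CLI)
private_ref() {
    registry="$1"        # e.g. myregistryhost:5000
    repository="$2"      # e.g. fedora/httpd
    tag="${3:-latest}"   # fall back to "latest" when no tag is passed
    printf '%s/%s:%s\n' "$registry" "$repository" "$tag"
}

TARGET="$(private_ref myregistryhost:5000 fedora/httpd version1.0)"
echo "$TARGET"

# Assumption: only contact Docker when DOCKER_DEMO=1 and the CLI is installed.
if [ "${DOCKER_DEMO:-0}" = "1" ] && command -v docker >/dev/null 2>&1; then
    docker tag 0e5574283393 "$TARGET"   # give the local image its registry name
    docker push "$TARGET"               # upload to the private registry
    docker pull "$TARGET"               # fetch it back, e.g. from another host
fi
```

Because the registry host is part of the image name, `docker push` and `docker pull` need no separate option to select the registry; the tag alone routes the image.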

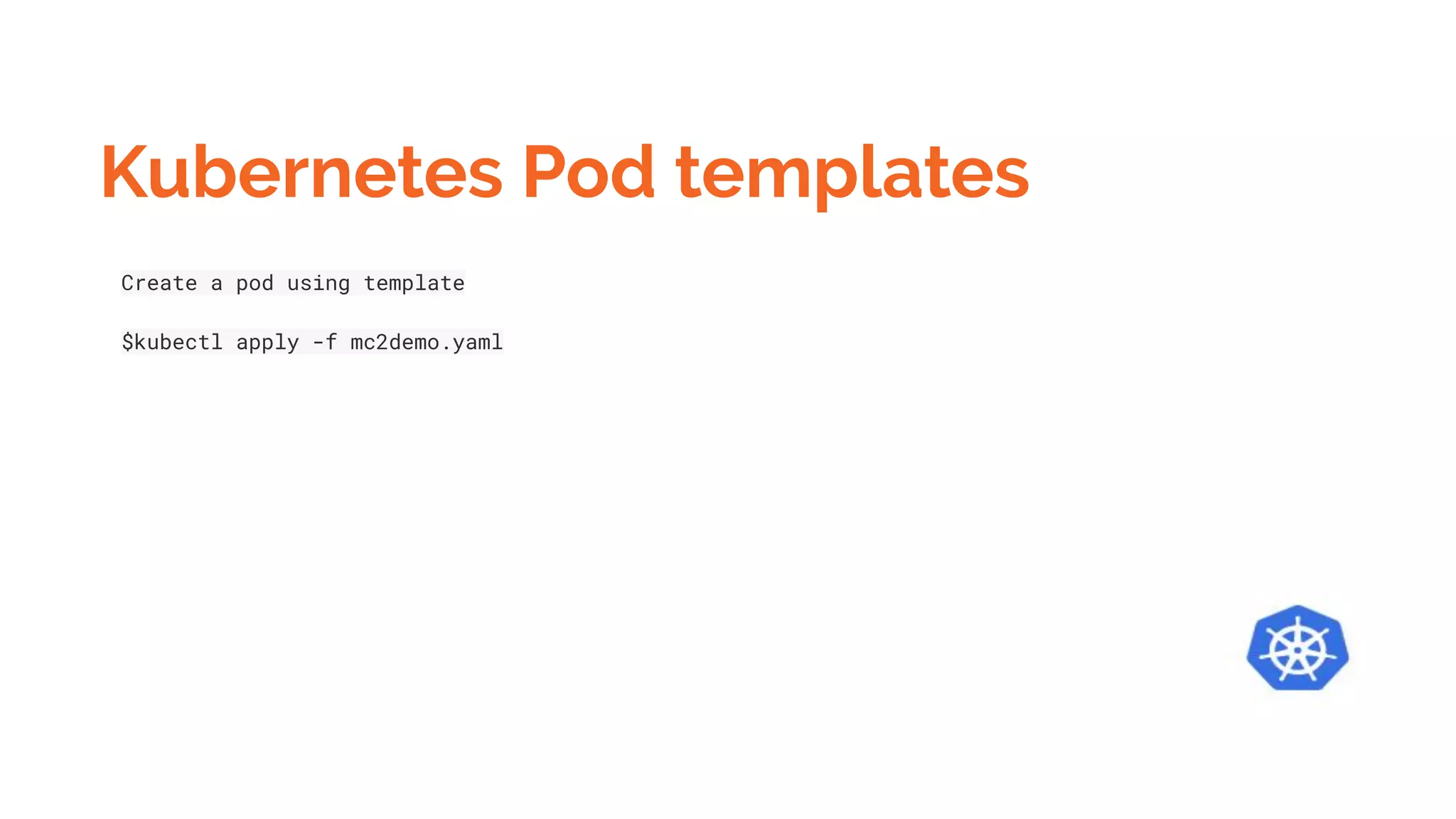

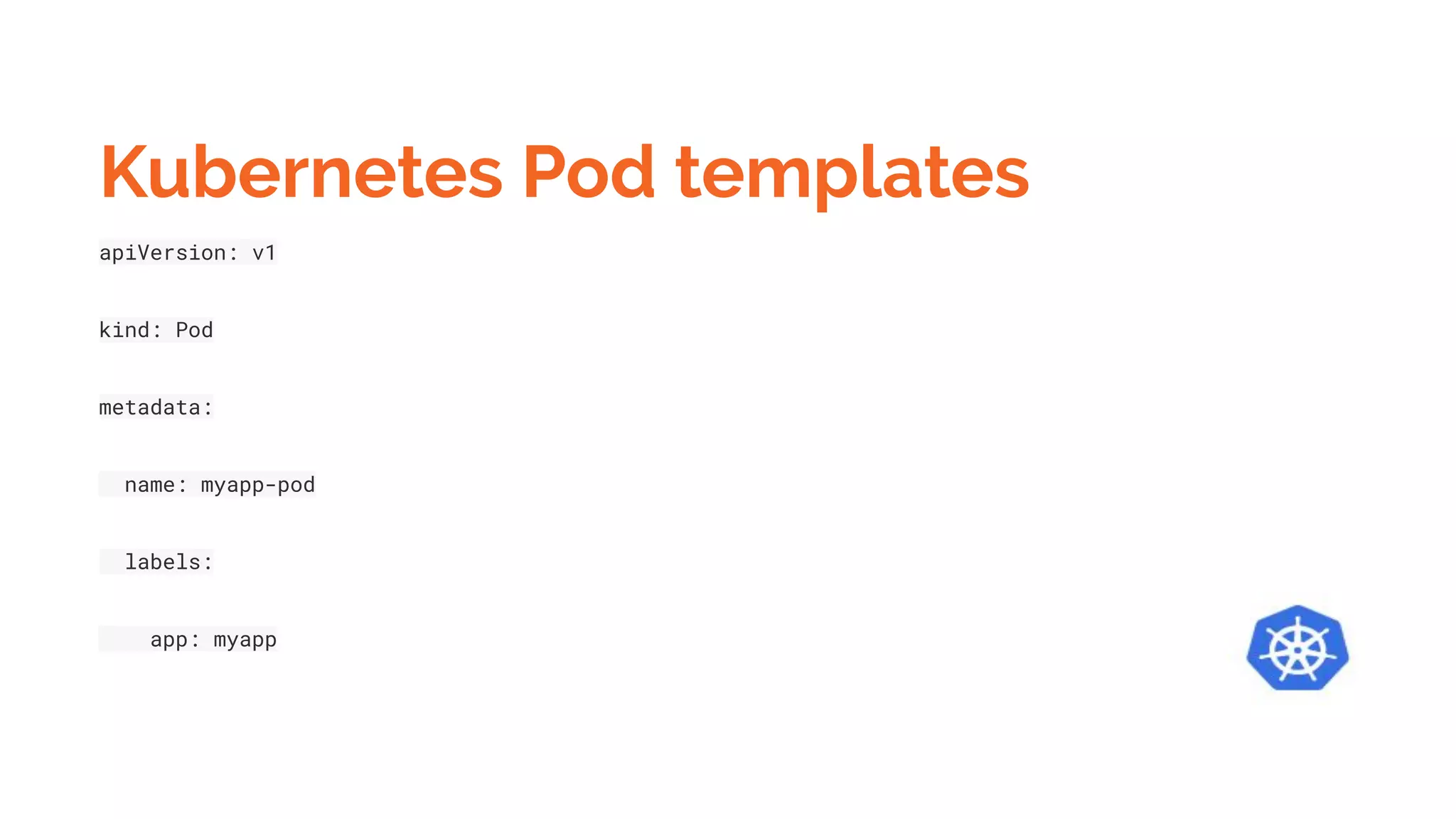

![Kubernetes Pod templates](https://image.slidesharecdn.com/dockerskubernetesdetailed1-200107090538/75/Dockers-kubernetes-detailed-Beginners-to-Geek-98-2048.jpg)

    spec:
      containers:
      - name: myapp-container
        image: busybox
        command: ['sh', '-c', 'echo Hello Kubernetes! && sleep 3600']
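The slide shows only the `spec` portion of a Pod template. As a rough sketch, the fragment could be wrapped into a complete Pod manifest and applied as follows. The `apiVersion`, `kind`, and `metadata` fields are the standard Pod envelope, but the pod name `myapp-pod`, the `app: myapp` label, the file name, and the `KUBECTL_DEMO` switch are assumptions made for this example and do not come from the slide.

```shell
#!/bin/sh
# Write a full Pod manifest around the spec shown on the slide.
POD_FILE="${POD_FILE:-myapp-pod.yaml}"   # assumed file name

cat > "$POD_FILE" <<'EOF'
apiVersion: v1
kind: Pod
metadata:
  name: myapp-pod          # hypothetical pod name
  labels:
    app: myapp             # hypothetical label
spec:
  containers:
  - name: myapp-container
    image: busybox
    command: ['sh', '-c', 'echo Hello Kubernetes! && sleep 3600']
EOF

# Assumption: only contact a cluster when KUBECTL_DEMO=1 and kubectl is installed.
if [ "${KUBECTL_DEMO:-0}" = "1" ] && command -v kubectl >/dev/null 2>&1; then
    kubectl apply -f "$POD_FILE"
    kubectl wait --for=condition=Ready pod/myapp-pod --timeout=60s
    kubectl logs myapp-pod   # shows the container's echo output
fi
```

The `sleep 3600` keeps the container alive for an hour after the `echo`, which leaves time to inspect the running Pod before it exits.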