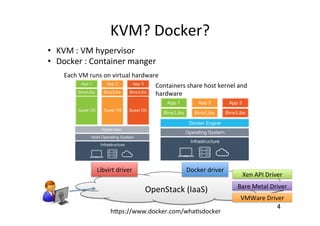

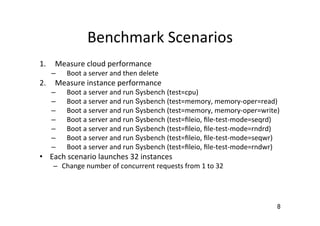

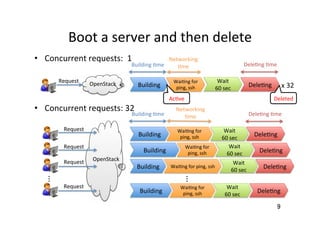

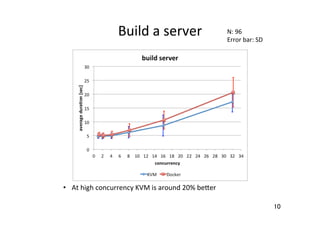

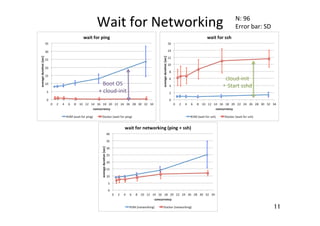

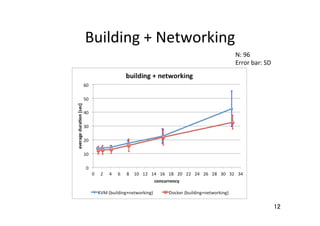

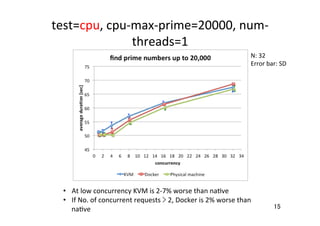

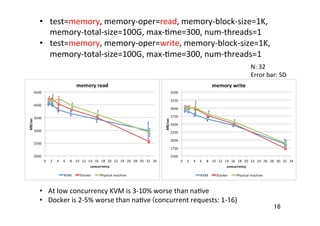

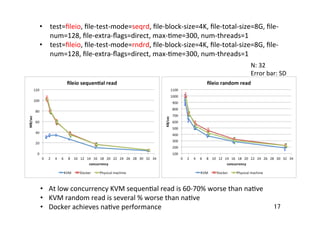

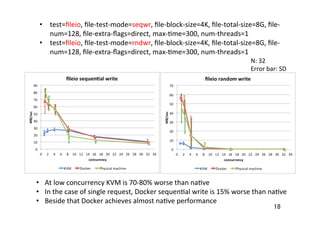

This document compares the performance of KVM and Docker instances in OpenStack. For boot and delete operations, it finds that KVM is around 20% faster than Docker at high concurrency levels. In CPU and memory tests at low concurrency, KVM is 2-10% slower than bare metal, while Docker is 2-5% slower than bare metal. In sequential and random read/write file I/O tests, KVM performs similarly to or slightly slower than bare metal, and Docker is slightly slower than KVM. Performance decreases for both KVM and Docker as concurrency increases.
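As a rough illustration of how a figure such as "N% slower than bare metal" can be derived, the sketch below computes relative slowdown from a baseline timing. The function name `percent_slower` and all timing values are hypothetical placeholders chosen for the arithmetic; they are not measurements from this comparison.

```python
def percent_slower(baseline_seconds: float, measured_seconds: float) -> float:
    """Return how much slower a measured run is than the baseline, in percent.

    A positive result means the measured run took longer than the baseline;
    a negative result means it was faster.
    """
    if baseline_seconds <= 0:
        raise ValueError("baseline_seconds must be positive")
    return (measured_seconds - baseline_seconds) * 100.0 / baseline_seconds


# Hypothetical timings in seconds (illustrative only, not benchmark results).
bare_metal_seconds = 100.0
kvm_seconds = 105.0
docker_seconds = 103.0

kvm_overhead = percent_slower(bare_metal_seconds, kvm_seconds)
docker_overhead = percent_slower(bare_metal_seconds, docker_seconds)

print(f"KVM: {kvm_overhead:.1f}% slower than bare metal")
print(f"Docker: {docker_overhead:.1f}% slower than bare metal")
```

The same calculation applies to any pair of timings, for example comparing boot times of one platform against another at a given concurrency level.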